热门标签

热门文章

- 1VS code配置Markdown以及预览

- 2AI大模型学习:引领智能时代的新篇章

- 3Centos7-Tshark安装_tshark下载

- 4SAP BASIS ADM100 中文版 Unit 8(2)_sap假脱机请求什么意思

- 5全面:vue.config.js 的完整配置_vue.config.js配置开发环境

- 6实战 | 教你用Python画各种版本的圣诞树_制作圣诞树python

- 7spring boot中常用的安全框架 Security框架 利用Security框架实现用户登录验证token和用户授权(接口权限控制)_springboot的安全框架

- 8【Unity】删除所有子物体保留父物体的2种方式_unity预制体中子对象销毁父对象是否存在

- 9App备案安卓公钥及证书MD5获取指南_app备案公钥

- 10COCO人体关键点检测数据制作_coco关键点检测17点标注模型

当前位置: article > 正文

基于文本内容的垃圾短信识别实战

作者:羊村懒王 | 2024-04-07 08:27:48

赞

踩

垃圾短信识别

1、实战的背景与目标

背景:

垃圾短信形式日益多变,相关报告可以在下面网站查看

360互联网安全中心(http://zt.360.cn/report/)

目标:

基于短信文本内容,建立识别模型,准确地识别出垃圾短信,以解决垃圾短信过滤问题

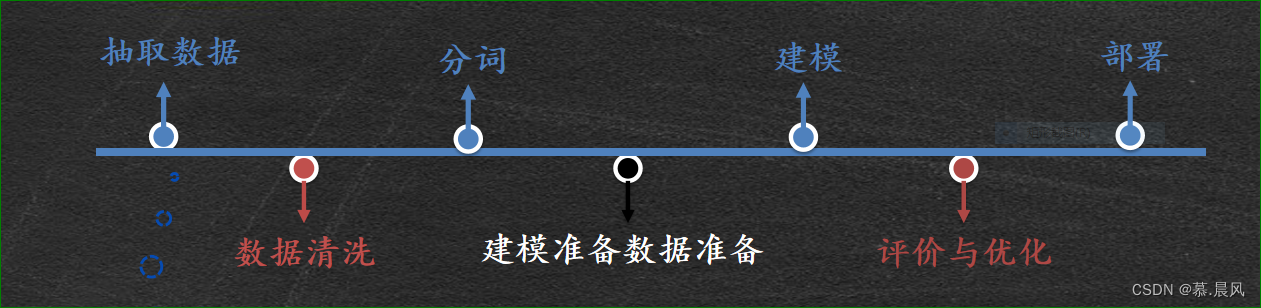

2、总体流程

3、代码实现

1、数据探索

导入数据

- #数据导入,设置第一行不为头标签并设置第一列数据为索引

-

- message = pd.read_csv("./data/message80W1.csv",encoding="UTF-8",header=None,index_col=0)

-

-

更换列名

- message.columns =["label","message"]

-

查看数据形状

- message.shape

-

-

- # (800000, 2)

整体查看数据

- message.info()

-

- """

- <class 'pandas.core.frame.DataFrame'>

- Int64Index: 800000 entries, 1 to 800000

- Data columns (total 2 columns):

- # Column Non-Null Count Dtype

- --- ------ -------------- -----

- 0 label 800000 non-null int64

- 1 message 800000 non-null object

- dtypes: int64(1), object(1)

- memory usage: 18.3+ MB

- """

查看数据是否有重复值(若有目前不清除,等抽样后,再处理样本数据)

- message.duplicated().sum()

-

-

- #13424

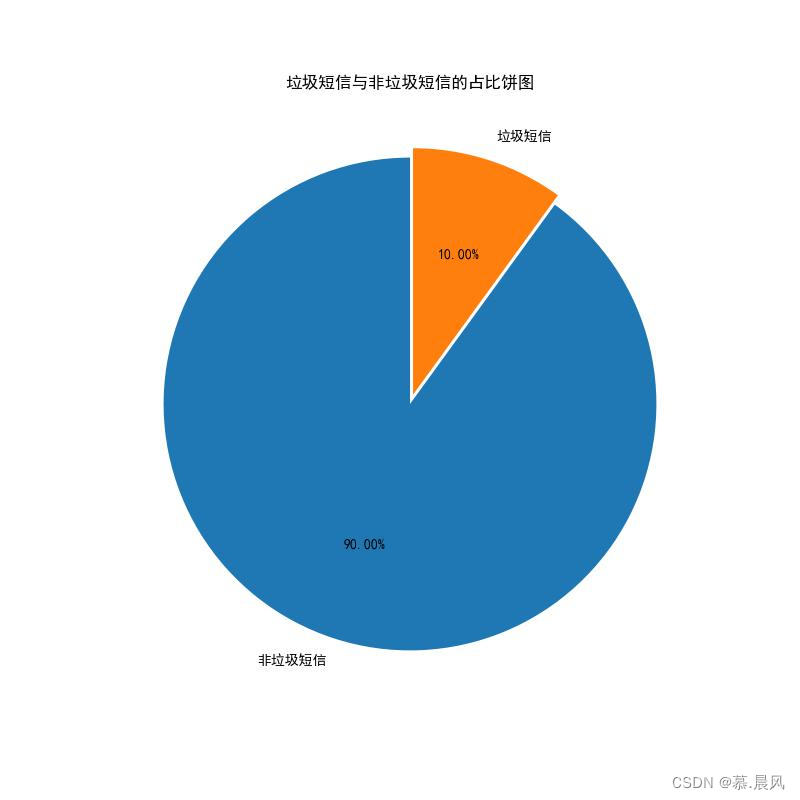

查看垃圾短信与非垃圾短信的占比

- data_group = message.groupby("label").count().reset_index()

-

-

- import matplotlib.pyplot as plt

-

- fig = plt.figure(figsize=(8,8))

-

- plt.rcParams["font.sans-serif"] = ["SimHei"] # 设置中文字体为黑体

- plt.rcParams["axes.unicode_minus"] = False # 设置显示负号

-

- plt.title("垃圾短信与非垃圾短信的占比饼图",fontsize=12)

-

- plt.pie(data_group["message"],labels=["非垃圾短信","垃圾短信"],autopct="%0.2f%%",startangle=90,explode=[0,0.04])

-

- plt.savefig("垃圾短信与非垃圾短信的占比饼图.jpg")

-

- plt.show()

2、进行数据抽样

先抽取抽样操作 ,垃圾短信,非垃圾短信 各1000, 占比为 1:1

- n = 1000

-

- a = message[message["label"] == 0].sample(n) #随机抽样

-

- b = message[message["label"] == 1].sample(n)

-

- data_new = pd.concat([a,b],axis=0) #数据按行合并

-

3、进行数据预处理

数据清洗 去重 去除x序列 (这里的 x序列指 数据中已经用 XXX 隐藏的内容,例如手机号、名字,薪资等敏感数据)

- #去重

-

- data_dup = data_new["message"].drop_duplicates()

-

-

- #去除x序列

- import re

-

- data_qumin = data_dup.apply(lambda x:re.sub("x","",x))

进行 jieba分词操作

- import jieba

-

-

- jieba.load_userdict("./data/newdic1.txt") #向 jieba 模块中添加自定义词语

-

- data_cut = data_qumin.apply(lambda x:jieba.lcut(x)) #进行分词操作

-

-

- #设置停用词

- stopWords = pd.read_csv('./data/stopword.txt', encoding='GB18030', sep='hahaha', header=None) #设置分隔符为 不存在的内容,实现分割

-

- stopWords = ['≮', '≯', '≠', '≮', ' ', '会', '月', '日', '–'] + list(stopWords.iloc[:, 0])

-

-

- #去除停用词

- data_after_stop = data_cut.apply(lambda x:[i for i in x if i not in stopWords])

-

- #提取标签

- labels = data_new.loc[data_after_stop.index,"label"]

-

-

-

- #使用空格分割词语

- adata = data_after_stop.apply(lambda x:" ".join(x))

将上述操作封装成函数

-

- def data_process(file='./data/message80W1.csv'):

- data = pd.read_csv(file, header=None, index_col=0)

- data.columns = ['label', 'message']

-

- n = 10000

-

- a = data[data['label'] == 0].sample(n)

- b = data[data['label'] == 1].sample(n)

- data_new = pd.concat([a, b], axis=0)

-

- data_dup = data_new['message'].drop_duplicates()

- data_qumin = data_dup.apply(lambda x: re.sub('x', '', x))

-

- jieba.load_userdict('./data/newdic1.txt')

- data_cut = data_qumin.apply(lambda x: jieba.lcut(x))

-

- stopWords = pd.read_csv('./data/stopword.txt', encoding='GB18030', sep='hahaha', header=None)

- stopWords = ['≮', '≯', '≠', '≮', ' ', '会', '月', '日', '–'] + list(stopWords.iloc[:, 0])

- data_after_stop = data_cut.apply(lambda x: [i for i in x if i not in stopWords])

- labels = data_new.loc[data_after_stop.index, 'label']

- adata = data_after_stop.apply(lambda x: ' '.join(x))

-

- return adata, data_after_stop, labels

-

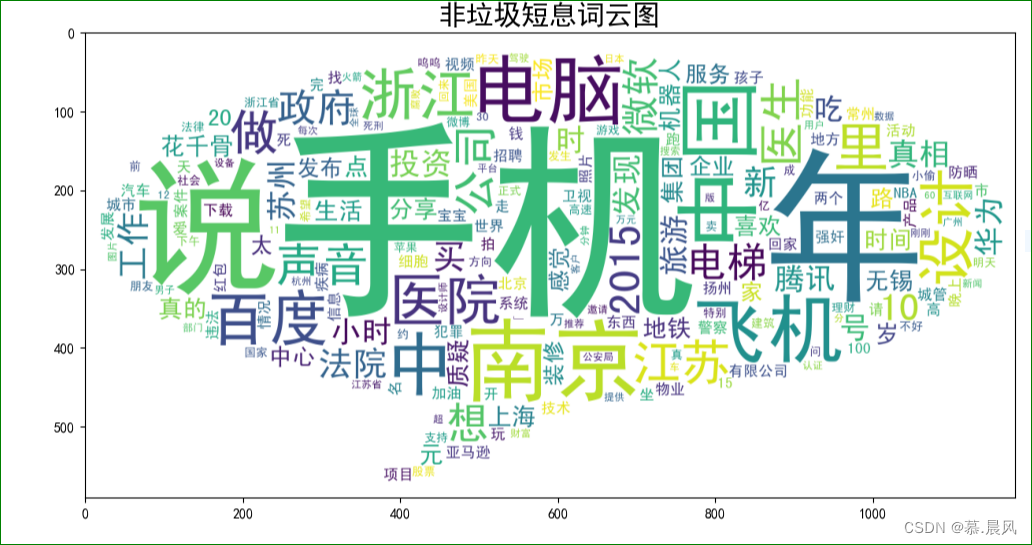

4、进行词云图绘制

调用函数,设置数据

-

- adata,data_after_stop,labels = data_process()

非垃圾短信的词频统计

- word_fre = {}

- for i in data_after_stop[labels == 0]:

- for j in i:

- if j not in word_fre.keys():

- word_fre[j] = 1

- else:

- word_fre[j] += 1

绘图

-

- from wordcloud import WordCloud

- import matplotlib.pyplot as plt

-

- fig = plt.figure(figsize=(12,8))

-

- plt.title("非垃圾短息词云图",fontsize=20)

-

- mask = plt.imread('./data/duihuakuan.jpg')

-

- wc = WordCloud(mask=mask, background_color='white', font_path=r'D:\2023暑假\基于文本内容的垃圾短信分类\基于文本内容的垃圾短信识别-数据&代码\data\simhei.ttf')

-

- wc.fit_words(word_fre)

-

-

-

- plt.imshow(wc)

垃圾短信词云图绘制(方法类似)

- word_fre = {}

- for i in data_after_stop[labels == 1]:

- for j in i:

- if j not in word_fre.keys():

- word_fre[j] = 1

- else:

- word_fre[j] += 1

-

-

- from wordcloud import WordCloud

- import matplotlib.pyplot as plt

-

- fig = plt.figure(figsize=(12,8))

-

- plt.title("垃圾短息词云图",fontsize=20)

-

- mask = plt.imread('./data/duihuakuan.jpg')

-

- wc = WordCloud(mask=mask, background_color='white', font_path=r'D:\2023暑假\基于文本内容的垃圾短信分类\基于文本内容的垃圾短信识别-数据&代码\data\simhei.ttf')

-

- wc.fit_words(word_fre)

-

- plt.imshow(wc)

5、模型的构建

采用 TF-IDF权重策略

权重策略文档中的高频词应具有表征此文档较高的权重,除非该词也是高文档频率词

TF:Term frequency即关键词词频,是指一篇文档中关键词出现的频率

N:单词在某文档中的频次 , M:该文档的单词数

IDF:Inverse document frequency指逆向文本频率,是用于衡量关键词权重的指数

D:总文档数 , Dw:出现了该单词的文档数

调用 Sklearn库中相关模块解释

- sklearn.feature_extraction.text #文本特征提取模块

-

- CosuntVectorizer #转化词频向量函数

-

- fit_transform() #转化词频向量方法

-

- get_feature_names() #获取单词集合方法

-

- toarray() #获取数值矩阵方法

-

- TfidfTransformer #转化tf-idf权重向量函数

-

- fit_transform(counts) #转成tf-idf权重向量方法

-

-

-

-

- transformer = TfidfTransformer() #转化tf-idf权重向量函数

-

- vectorizer = CountVectorizer() #转化词频向量函数

-

- word_vec = vectorizer.fit_transform(corpus) #转成词向量

-

- words = vectorizer.get_feature_names() #单词集合

-

- word_cout = word_vec.toarray() #转成ndarray

-

- tfidf = transformer.fit_transform(word_cout) #转成tf-idf权重向量

-

- tfidf_ma= tfidf.toarray() #转成ndarray

-

采用朴素贝叶斯算法

多项式朴素贝叶斯——用于文本分类 构造方法:

- sklearn.naive_bayes.MultinomialNB(alpha=1.0 #平滑参数,

- fit_prior=True #学习类的先验概率 ,

- class_prior=None) #类的先验概率

模型代码实现

- from sklearn.feature_extraction.text import CountVectorizer,TfidfTransformer

- from sklearn.model_selection import train_test_split

- from sklearn.naive_bayes import GaussianNB

-

-

- data_train,data_test,labels_train,labels_test = train_test_split(adata,labels,test_size=0.2)

-

- countVectorizer = CountVectorizer()

-

-

- data_train = countVectorizer.fit_transform(data_train)

-

- X_train = TfidfTransformer().fit_transform(data_train.toarray()).toarray()

-

-

- data_test = CountVectorizer(vocabulary=countVectorizer.vocabulary_).fit_transform(data_test)

-

- X_test = TfidfTransformer().fit_transform(data_test.toarray()).toarray()

-

-

- model = GaussianNB()

-

-

- model.fit(X_train, labels_train) #训练

-

-

- model.score(X_test,labels_test) #测试

-

-

-

- # 0.9055374592833876

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/羊村懒王/article/detail/377480

推荐阅读

相关标签