
First Impressions of the Chunjun Data Synchronization Tool

Author: 木道寻08 | 2024-07-07 12:23:58


chunjun

chunjun (纯钧): see the official 纯钧 documentation.

chunjun supports four run modes: local, standalone, yarn session, and yarn per-job.

| Run mode / required environment | Flink environment | Hadoop environment |
|---|---|---|
| local | × | × |
| standalone | √ | × |
| yarn session | √ | √ |
| yarn per-job | √ | √ |
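The table can also be read the other way round: given what is installed on a machine, which run modes are available? The sketch below only transcribes the table into a lookup. The names `MODE_REQUIREMENTS` and `usable_modes` are made up for illustration and are not part of chunjun.

```python
from typing import List

# Environment requirements per run mode, transcribed from the table above.
# This mapping is illustrative only; chunjun does not ship such a structure.
MODE_REQUIREMENTS = {
    "local": {"flink": False, "hadoop": False},
    "standalone": {"flink": True, "hadoop": False},
    "yarn session": {"flink": True, "hadoop": True},
    "yarn per-job": {"flink": True, "hadoop": True},
}


def usable_modes(has_flink: bool, has_hadoop: bool) -> List[str]:
    """Return the run modes whose requirements the environment satisfies."""
    modes = []
    for mode, needs in MODE_REQUIREMENTS.items():
        if needs["flink"] and not has_flink:
            continue
        if needs["hadoop"] and not has_hadoop:
            continue
        modes.append(mode)
    return modes


if __name__ == "__main__":
    # A machine with Flink installed but no Hadoop cluster.
    print(usable_modes(has_flink=True, has_hadoop=False))
```

With neither Flink nor Hadoop available, only local mode remains, which is the mode used in the rest of this post.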

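1. Download

Precompiled plugin packages are published on the project's releases page and can be downloaded directly: https://github.com/DTStack/chunjun/releases

The file used here is chunjun-dist-1.12-SNAPSHOT.tar.gz.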

2.解压

先创建 chunjun 目录

再解压 chunjun-dist-1.12-SNAPSHOT.tar.gz 到 chunjun 这个目录当中

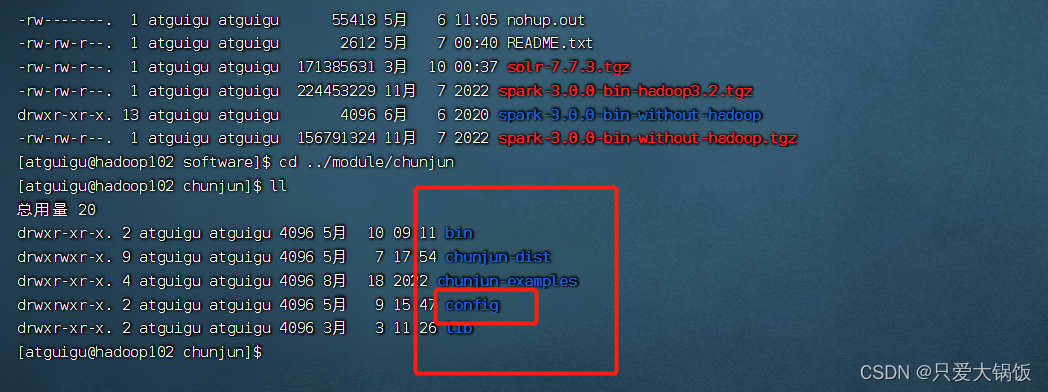

tar -zxvf chunjun-dist-1.12-SNAPSHOT.tar.gz -C ../module/chunjun查看目录结构:config 是自己创建的,取什么名称都行,里面放置 脚本文件

3. Example

mysql -> hdfs (local)

Following the examples in the chunjun (纯钧) documentation, write a job script that synchronizes MySQL data to HDFS:

    vim config/mysql_hdfs_polling.json

Script:

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "column": [
                  { "name": "group_id", "type": "varchar" },
                  { "name": "company_id", "type": "varchar" },
                  { "name": "group_name", "type": "varchar" }
                ],
                "username": "root",
                "password": "000000",
                "queryTimeOut": 2000,
                "connection": [
                  {
                    "jdbcUrl": [
                      "jdbc:mysql://192.168.233.130:3306/gmall?characterEncoding=UTF-8&autoReconnect=true&failOverReadOnly=false"
                    ],
                    "table": [
                      "cus_group_info"
                    ]
                  }
                ],
                "polling": false,
                "pollingInterval": 3000
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "fileType": "text",
                "path": "hdfs://192.168.233.130:8020/user/hive/warehouse/stg.db/cus_group_info",
                "defaultFS": "hdfs://192.168.233.130:8020",
                "fileName": "cus_group_info",
                "fieldDelimiter": ",",
                "encoding": "utf-8",
                "writeMode": "overwrite",
                "column": [
                  { "name": "group_id", "type": "VARCHAR" },
                  { "name": "company_id", "type": "VARCHAR" },
                  { "name": "group_name", "type": "VARCHAR" }
                ]
              }
            }
          }
        ],
        "setting": {
          "speed": {
            "readerChannel": 1,
            "writerChannel": 1
          }
        }
      }
    }
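In this job the reader and the writer list the same three columns in the same order. A hand-edited script can easily drift out of step, so a small pre-flight check may be useful before launching. The sketch below is a hypothetical helper, not something chunjun provides: `column_names` and `check_job` are names invented here, and the check assumes you want the reader and writer columns to line up by name and order, as they do in the script above.

```python
import json
from typing import List


def column_names(plugin: dict) -> List[str]:
    """Return the column names declared under a reader or writer block."""
    return [column["name"] for column in plugin["parameter"]["column"]]


def check_job(job_config: dict) -> List[str]:
    """Return a list of problems found; an empty list means the check passed."""
    problems = []
    for index, content in enumerate(job_config["job"]["content"]):
        reader_columns = column_names(content["reader"])
        writer_columns = column_names(content["writer"])
        if reader_columns != writer_columns:
            problems.append(
                f"content[{index}]: reader columns {reader_columns} "
                f"do not match writer columns {writer_columns}"
            )
    return problems


if __name__ == "__main__":
    # A trimmed-down job with the same shape as the script above.
    sample = json.loads("""
    {"job": {"content": [{
        "reader": {"parameter": {"column": [{"name": "group_id"}, {"name": "group_name"}]}},
        "writer": {"parameter": {"column": [{"name": "group_id"}, {"name": "group_name"}]}}
    }]}}
    """)
    print(check_job(sample))
```

To check a real script, load it with `json.load` and pass the resulting dictionary to `check_job`. Parsing the file this way also catches plain JSON syntax errors before the job is submitted.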

启动:

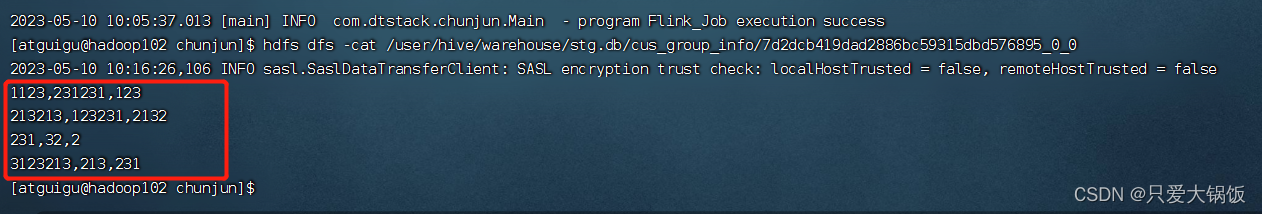

sh bin/chunjun-local.sh -job config/mysql_hdfs_polling.json 运行日志:
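If the job is started from a scheduler or another program rather than by hand, the launch step can be wrapped in a few lines. This is a minimal sketch that only reproduces the command line used in this post; `build_local_command` and `run_local_job` are hypothetical helper names, and `chunjun_home` is assumed to be the directory the archive was extracted into.

```python
import json
import subprocess
from pathlib import Path
from typing import List


def build_local_command(job_file: str) -> List[str]:
    """Build the local-mode launch command, relative to the chunjun directory."""
    return ["sh", "bin/chunjun-local.sh", "-job", job_file]


def run_local_job(job_file: str, chunjun_home: str = ".") -> int:
    """Validate the job file as JSON, then launch it and return the exit code."""
    # Fail early on malformed JSON instead of waiting for the job to start.
    with open(Path(chunjun_home) / job_file, encoding="utf-8") as handle:
        json.load(handle)
    # Run from chunjun_home so the relative script and job paths resolve.
    completed = subprocess.run(build_local_command(job_file), cwd=chunjun_home)
    return completed.returncode


if __name__ == "__main__":
    print(build_local_command("config/mysql_hdfs_polling.json"))
```

Calling `run_local_job("config/mysql_hdfs_polling.json", chunjun_home="...")` with the extraction directory would then start the same job as the shell command. The exit code is returned as-is; how chunjun reports failures through it is not covered here.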

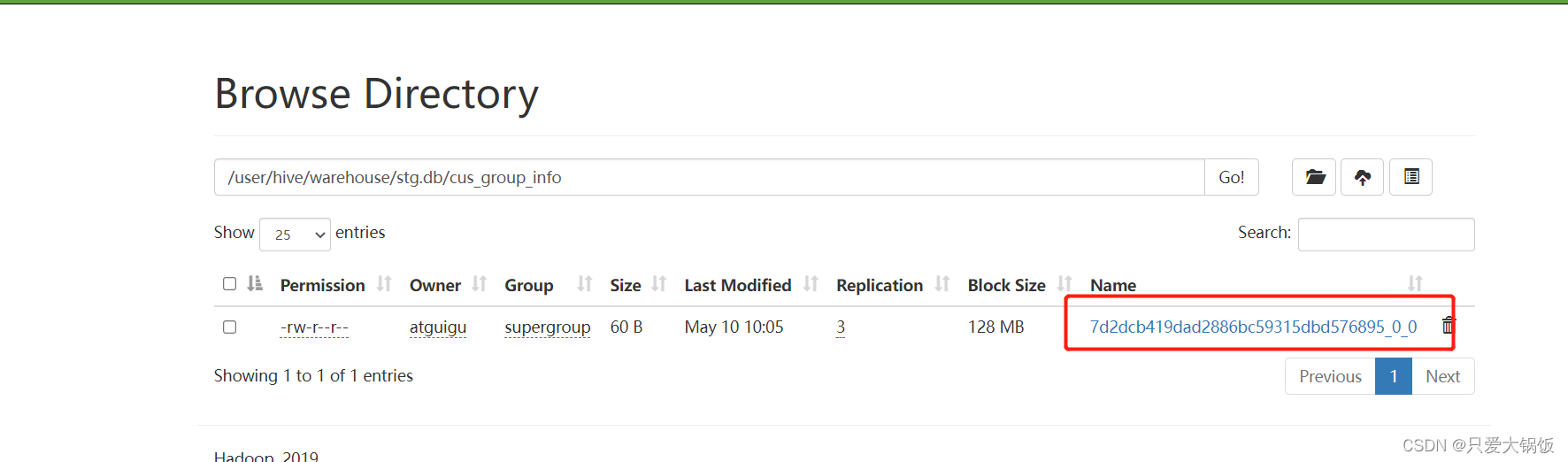

HDFS上的文件:

数据同步成功!
