ELK Deployment: Installation and Deployment Manual


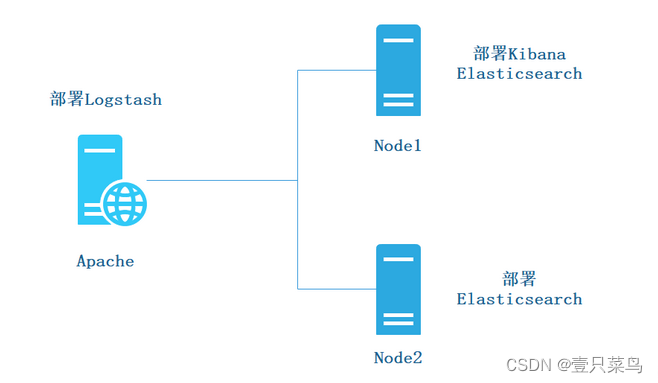

1. Overall deployment plan

1.1 Server plan

| Server | Hostname | IP address | Main software |
|---|---|---|---|
| node1 | node-251 | 192.168.71.251 | Elasticsearch, Kibana |
| node2 | node-252 | 192.168.71.252 | Elasticsearch |
| apache | node-253 | 192.168.71.253 | Logstash, Apache |

1.2 Disable the firewall and synchronize time

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

ntpdate ntp.aliyun.com
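Note that `setenforce 0` only switches SELinux to permissive mode until the next reboot. A minimal sketch of making the change persistent follows, assuming the standard `/etc/selinux/config` file and GNU `sed`; the function name `set_selinux_permissive` is illustrative and not part of the original steps.

```shell
# Rewrite the SELINUX= line of an SELinux config file to "permissive".
# The file path is an argument so the change can be tried on a copy first.
# SELINUXTYPE= lines are left alone because the pattern requires "SELINUX=".
set_selinux_permissive() {
    cfg="$1"
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$cfg"
}

# Usage (as root): set_selinux_permissive /etc/selinux/config
```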

2. Elasticsearch cluster deployment

Perform these steps on both node1 and node2.

2.1 Environment preparation

- Configure hostname resolution

[root@node-252 ~]# cat /etc/hosts

192.168.71.251 node-251

192.168.71.252 node-252
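The entries above have to exist on every node that refers to the others by name. One way to add them without creating duplicates is sketched below; the helper name `ensure_host_entry` is an assumption made for illustration.

```shell
# Append "IP NAME" to a hosts file unless a line for NAME already exists.
ensure_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage (as root, on each node):
# ensure_host_entry /etc/hosts 192.168.71.251 node-251
# ensure_host_entry /etc/hosts 192.168.71.252 node-252
```

Running it twice leaves the file unchanged, so it is safe to repeat on a node that was already prepared.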

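- Check the Java environment

If Java is not installed (the Elasticsearch 8.x RPM bundles its own JDK, so a system Java is optional for Elasticsearch itself):

yum -y install java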

[root@node-251 ~]# java -version

openjdk version "1.8.0_362"

OpenJDK Runtime Environment (build 1.8.0_362-b08)

OpenJDK 64-Bit Server VM (build 25.362-b08, mixed mode)

2.2 Deploy Elasticsearch

- Upload elasticsearch-8.7.0-x86_64.rpm to /opt

    [root@node-251 opt]# cd /opt/
    [root@node-251 opt]# rpm -ivh elasticsearch-8.7.0-x86_64.rpm

- Register and enable the system service

    [root@node-251 opt]# systemctl daemon-reload
    [root@node-251 opt]# systemctl enable elasticsearch.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

- Edit the main Elasticsearch configuration file

    cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak
    vim /etc/elasticsearch/elasticsearch.yml

    # line 17: uncomment and set the cluster name
    cluster.name: yrq-elk-cluster
    # line 23: uncomment and set the node name (node-251 on node1, node-252 on node2)
    node.name: node-251
    # line 33: uncomment and set the data path
    path.data: /data/elk_data
    # line 37: uncomment and set the log path
    path.logs: /var/log/elasticsearch/
    # line 43: uncomment and set to false so that memory is not locked at startup
    bootstrap.memory_lock: false
    # line 55: uncomment and set the listen address; 0.0.0.0 means all addresses
    network.host: 0.0.0.0
    # line 59: uncomment; 9200 is the default HTTP port of Elasticsearch
    http.port: 9200
    # line 68: uncomment and list the nodes to discover (node1 and node2).
    # Elasticsearch 8.x uses discovery.seed_hosts; the older
    # discovery.zen.ping.unicast.hosts setting is no longer accepted.
    discovery.seed_hosts: ["node-251", "node-252"]

Newer Elasticsearch versions also require changes to the security-related settings:

    [root@node-251 opt]# grep -v "^#" /etc/elasticsearch/elasticsearch.yml
    cluster.name: yrq-elk-cluster
    node.name: node-251
    path.data: /data/elk_data
    path.logs: /var/log/elasticsearch
    bootstrap.memory_lock: false
    network.host: 0.0.0.0
    http.port: 9200
    discovery.seed_hosts: ["node-251", "node-252"]
    xpack.security.enabled: false
    xpack.security.enrollment.enabled: false
    xpack.security.http.ssl:
      enabled: false
      keystore.path: certs/http.p12
    xpack.security.transport.ssl:
      enabled: false
      verification_mode: certificate
      keystore.path: certs/transport.p12
      truststore.path: certs/transport.p12
    cluster.initial_master_nodes: ["node-251"]
    http.host: 0.0.0.0

- Copy the configuration file from node-251 to node-252, then edit it on node-252

    scp /etc/elasticsearch/elasticsearch.yml node-252:/etc/elasticsearch/
    vim /etc/elasticsearch/elasticsearch.yml

    # line 23: set the node name for node2
    node.name: node-252
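The only per-node difference in the copied file is `node.name`, so the manual edit can also be scripted. A minimal sketch, assuming GNU `sed`; the function name `set_node_name` is illustrative.

```shell
# Set node.name in an elasticsearch.yml file, whether or not the line is
# currently commented out. Other settings are left untouched.
set_node_name() {
    yml="$1"; name="$2"
    sed -i -E "s/^#?[[:space:]]*node\.name:.*/node.name: ${name}/" "$yml"
}

# Usage on node-252:
# set_node_name /etc/elasticsearch/elasticsearch.yml node-252
```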

- Create the data directory and set its ownership

    mkdir -p /data/elk_data
    chown elasticsearch:elasticsearch /data/elk_data/

- Start Elasticsearch and confirm that it is listening on port 9200

    systemctl start elasticsearch.service
    netstat -antp | grep 9200
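Elasticsearch can take a while to open its port after `systemctl start` returns, so a `netstat` run immediately afterwards may show nothing. The sketch below polls the port instead; it assumes `bash` and the coreutils `timeout` command are available, and the name `wait_for_port` is illustrative.

```shell
# Poll a TCP port until it accepts connections or the limit (in seconds,
# counted as one attempt per second) is reached.
# Returns 0 once the port is open, 1 if it never opened.
wait_for_port() {
    host="$1"; port="$2"; limit="${3:-60}"
    waited=0
    while [ "$waited" -lt "$limit" ]; do
        # bash's /dev/tcp pseudo-device attempts a TCP connection
        if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}

# Usage: wait_for_port node-251 9200 120 && echo "Elasticsearch is listening"
```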

- View the node information

    [root@node-252 elasticsearch]# curl node-251:9200
    {
      "name" : "node-251",
      "cluster_name" : "yrq-elk-cluster",
      "cluster_uuid" : "Ho9YR64NRJGsdCZtou4dUw",
      "version" : {
        "number" : "8.7.0",
        "build_flavor" : "default",
        "build_type" : "rpm",
        "build_hash" : "09520b59b6bc1057340b55750186466ea715e30e",
        "build_date" : "2023-03-27T16:31:09.816451435Z",
        "build_snapshot" : false,
        "lucene_version" : "9.5.0",
        "minimum_wire_compatibility_version" : "7.17.0",
        "minimum_index_compatibility_version" : "7.0.0"
      },
      "tagline" : "You Know, for Search"
    }
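The root endpoint only shows that one node answers. To see whether both nodes have joined the same cluster, the `_cluster/health` API can be queried as well. The helper below is a rough sketch that pulls single fields out of that response with `sed` to avoid extra dependencies; the name `health_field` is an assumption, and a real JSON parser would be more robust.

```shell
# Extract one top-level field (for example status or number_of_nodes) from
# the JSON printed by GET _cluster/health, read from standard input.
health_field() {
    field="$1"
    tr -d '\n' | sed -n "s/.*\"${field}\"[[:space:]]*:[[:space:]]*\"\{0,1\}\([^\",}]*\).*/\1/p"
}

# Usage:
# curl -s http://node-251:9200/_cluster/health | health_field status
# curl -s http://node-251:9200/_cluster/health | health_field number_of_nodes
```

For the two-node plan in this document, a healthy cluster would be expected to report `number_of_nodes` as 2.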

- Optionally install the Elasticsearch-head plugin

This plugin mainly improves how Elasticsearch data is visualized in the browser. Because the data is viewed in Kibana later on, it is not installed here, but the installation method is described for reference.

Since Elasticsearch 5.0, Elasticsearch-head has to be installed as a standalone service using npm (the Node.js package manager). It depends on node and phantomjs, which must be installed first.

- node: a JavaScript runtime built on the Chrome V8 engine.

- phantomjs: a WebKit-based JavaScript API that can be thought of as an invisible browser; it can do anything a WebKit-based browser can do.

The commands and output below appear to come from an older Elasticsearch release in a different environment (node1 at 192.168.59.115). Mapping types such as test were removed in Elasticsearch 8.x, where the equivalent document path is index-demo/_doc/1.

    # (1) Build and install node
    # Upload node-v8.2.1.tar.gz to /opt
    yum install gcc gcc-c++ make -y
    cd /opt
    tar zxf node-v8.2.1.tar.gz
    cd node-v8.2.1/
    ./configure
    make -j2 && make install

    # (2) Install phantomjs
    # Upload phantomjs-2.1.1-linux-x86_64.tar.bz2 to /opt
    cd /opt
    tar jxf phantomjs-2.1.1-linux-x86_64.tar.bz2 -C /usr/local/src/
    cd /usr/local/src/phantomjs-2.1.1-linux-x86_64/bin
    cp phantomjs /usr/local/bin

    # (3) Install the Elasticsearch-head visualization tool
    # Upload elasticsearch-head.tar.gz to /opt
    cd /opt
    tar zxf elasticsearch-head.tar.gz -C /usr/local/src/
    cd /usr/local/src/elasticsearch-head/
    npm install

    # (4) Edit the main Elasticsearch configuration file
    vim /etc/elasticsearch/elasticsearch.yml
    ......
    # Append the following at the end of the file
    http.cors.enabled: true         # enable cross-origin access (default: false)
    http.cors.allow-origin: "*"     # allow cross-origin requests from any origin

    systemctl restart elasticsearch

    # (5) Start the elasticsearch-head service
    # It must be started from the unpacked elasticsearch-head directory,
    # because the process reads gruntfile.js from there; otherwise startup may fail.
    cd /usr/local/src/elasticsearch-head/
    npm run start &

    > elasticsearch-head@0.0.0 start /usr/local/src/elasticsearch-head
    > grunt server

    Running "connect:server" (connect) task
    Waiting forever...
    Started connect web server on http://localhost:9100

    # elasticsearch-head listens on port 9100
    netstat -natp | grep 9100

    # (6) View Elasticsearch information through Elasticsearch-head
    # Open http://192.168.59.115:9100/ in a browser and connect to the cluster.
    # A cluster health value of green means the cluster is healthy.
    # If access fails, replace localhost with the IP address.

    # (7) Insert a document
    # On the node1 host (192.168.59.115): the index is index-demo and the type is test;
    # the response shows that the document was created
    [root@node1 ~]# curl -X PUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'
    {
      "_index" : "index-demo",
      "_type" : "test",
      "_id" : "1",
      "_version" : 1,
      "result" : "created",
      "_shards" : {
        "total" : 2,
        "successful" : 2,
        "failed" : 0
      },
      "created" : true
    }


3. Logstash deployment

Perform these steps on the Apache node.

3.1 Install Logstash, httpd, and Java

- Install and start httpd

yum -y install httpd

systemctl start httpd

- Install the Java environment

yum -y install java

java -version

- Download Logstash

https://www.elastic.co/cn/downloads/logstash

- Install Logstash

[root@node-253 opt]# cp /tmp/logstash-8.7.0-x86_64.rpm .

[root@node-253 opt]# rpm -ivh logstash-8.7.0-x86_64.rpm

warning: logstash-8.7.0-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:8.7.0-1 ################################# [100%]

[root@node-253 opt]# rpm -qc logstash-8.7.0-x86_64.rpm

package logstash-8.7.0-x86_64.rpm is not installed

[root@node-253 opt]# rpm -qc logstash

/etc/default/logstash

/etc/logstash/jvm.options

/etc/logstash/log4j2.properties

/etc/logstash/logstash-sample.conf

/etc/logstash/logstash.yml

/etc/logstash/pipelines.yml

/etc/logstash/startup.options

/lib/systemd/system/logstash.service

systemctl start logstash.service

systemctl enable logstash.service

cd /usr/share/logstash/

ls

ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

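3.2 Test that Logstash can connect to Elasticsearch

Testing with the logstash command

Command-line options:

- -f: specifies a Logstash configuration file; Logstash is configured from that file.

- -e: followed by a string that is used as the Logstash configuration (if the string is empty, stdin is used as the input and stdout as the output by default).

- -t: tests whether the configuration file is valid, then exits.

logstash -f <configuration file> runs the pipeline defined in that file, for example one that connects to Elasticsearch.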
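The `-t` and `-f` options can be combined to validate configuration files before the service is restarted. The sketch below loops over a directory of pipeline files; the function name `check_configs` and the `LOGSTASH_BIN` override are assumptions made for illustration, and the default binary path is the one used by the RPM install in this document.

```shell
# Run "logstash -t" against every *.conf file in a directory and report the
# first file that fails validation. LOGSTASH_BIN can point at another binary.
check_configs() {
    dir="$1"
    bin="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"
    for conf in "$dir"/*.conf; do
        [ -e "$conf" ] || continue
        if ! "$bin" -t -f "$conf" >/dev/null 2>&1; then
            echo "invalid: $conf"
            return 1
        fi
    done
    echo "all configuration files are valid"
}

# Usage: check_configs /etc/logstash/conf.d
```

Each validation starts a JVM, so checking several files this way can take a while.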

(1) Use standard input as the input and standard output as the output. Run this on the Apache server (192.168.71.253):

logstash -e 'input { stdin{} } output { stdout{} }'

16:45:21.422 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

16:45:21.645 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com ## typed manually

2021-12-16T08:46:14.041Z apache www.baidu.com

www.sina.com ## typed manually

2021-12-16T08:46:23.548Z apache www.sina.com

(2) Use the rubydebug codec for detailed output (a codec is an encoder/decoder):

logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug } }'

16:51:13.127 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

16:51:13.174 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

16:51:13.205 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com ## typed manually

{

"@timestamp" => 2021-12-16T08:52:22.528Z,

"@version" => "1",

"host" => "apache",

"message" => "www.baidu.com"

}

(3) Use Logstash to write the input into Elasticsearch:

logstash -e 'input { stdin{} } output { elasticsearch { hosts=>["192.168.71.251:9200"] } }'

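3.3 Define a Logstash configuration file

A Logstash configuration file consists of three sections: input, output, and filter (optional; use it as needed).

- Make the log file readable

chmod o+r /var/log/messages # allow Logstash to read the log

- Edit the Logstash configuration so that it collects the system log /var/log/messages and sends it to Elasticsearch.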

    vim /etc/logstash/conf.d/system.conf

    input {
        file {
            path => "/var/log/messages"        # location of the log to collect
            type => "system"                   # custom log type label
            start_position => "beginning"      # read from the beginning of the file
        }
    }
    output {
        elasticsearch {                        # send to Elasticsearch
            hosts => ["192.168.71.252:9200"]   # Elasticsearch address and port
            index => "system-%{+YYYY.MM.dd}"   # index name pattern in Elasticsearch
        }
    }

- Restart the service

systemctl restart logstash
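The `%{+YYYY.MM.dd}` part of the index name is filled in from each event's `@timestamp`, which Logstash keeps in UTC, so the index can roll over at a different moment than the local calendar day. The sketch below predicts the index name for a given time; it assumes GNU `date`, and the function name `index_for_date` is illustrative.

```shell
# Print the index name Logstash would use for a given prefix and timestamp,
# mirroring the pattern "<prefix>-%{+YYYY.MM.dd}" evaluated in UTC.
# Without a second argument the current time is used.
index_for_date() {
    prefix="$1"; when="${2:-now}"
    printf '%s-%s\n' "$prefix" "$(date -u -d "$when" +%Y.%m.%d)"
}

# Usage: check that today's system index exists on node2
# curl -s "http://192.168.71.252:9200/_cat/indices/$(index_for_date system)?v"
```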

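4. Kibana deployment

Perform these steps on node1.

A side note: the 192.168.71.251 server was originally a virtual machine on the same physical host as 252 and 253, but running Elasticsearch and Kibana at the same time exhausted the CPU and memory. Adding memory and CPUs did not help, because the host itself was too limited and stayed overloaded. I therefore built the virtual machine on another host; with 4 CPUs and 4 GB of memory it runs acceptably without much lag.

- Download Kibana

https://www.elastic.co/cn/downloads/kibana

- Install Kibana

Upload kibana-8.7.0-x86_64.rpm to /opt. The Kibana version should match the Elasticsearch version (8.7.0 here).

    cd /opt
    rpm -ivh kibana-8.7.0-x86_64.rpm

- Edit the main Kibana configuration file

    vim /etc/kibana/kibana.yml

    # The line numbers below are approximate and vary between Kibana versions.
    # line 2: uncomment; 5601 is the default Kibana port
    server.port: 5601
    # line 7: uncomment and set the listen address; 0.0.0.0 means all addresses
    server.host: "0.0.0.0"
    # line 21: uncomment and set the Elasticsearch address and port to connect to
    # (Kibana 7 and later use elasticsearch.hosts instead of elasticsearch.url)
    elasticsearch.hosts: ["http://192.168.71.251:9200"]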

- Start the Kibana service

systemctl start kibana.service

systemctl enable kibana.service

netstat -natp | grep 5601

- Verify Kibana

Open http://192.168.71.251:5601 in a browser.

- Add the Apache server logs (access and error) to Elasticsearch and display them in Kibana

    [root@node-253 conf.d]# cat /etc/logstash/conf.d/apache_log.conf
    input {
        file {
            path => "/var/log/httpd/access_log"
            type => "access"
            start_position => "beginning"
        }
        file {
            path => "/var/log/httpd/error_log"
            type => "error"
            start_position => "beginning"
        }
    }
    output {
        if [type] == "access" {
            elasticsearch {
                hosts => ["192.168.71.251:9200"]
                index => "apache_access-%{+YYYY.MM.dd}"
            }
        }
        if [type] == "error" {
            elasticsearch {
                hosts => ["192.168.71.251:9200"]
                index => "apache_error-%{+YYYY.MM.dd}"
            }
        }
    }

cd /etc/logstash/conf.d/

/usr/share/logstash/bin/logstash -f apache_log.conf
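Once the pipeline is running and Apache has served a few requests, the two daily indices should appear in Elasticsearch. The sketch below filters a list of index names down to the ones this configuration creates; the function name `apache_indices` is an assumption, and the input is expected to be one index name per line, as printed by `_cat/indices?h=index`.

```shell
# Keep only the apache_access-* and apache_error-* daily index names from a
# list of index names (one per line) read from standard input.
apache_indices() {
    sed 's/[[:space:]]*$//' \
        | grep -E '^apache_(access|error)-[0-9]{4}\.[0-9]{2}\.[0-9]{2}$' \
        | sort
}

# Usage:
# curl -s 'http://192.168.71.251:9200/_cat/indices?h=index' | apache_indices
```

An empty result usually means no log lines have been shipped yet, for example because the Apache logs are empty or not readable by the Logstash process.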

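- Open http://192.168.71.251:5601 in a browser.

The Kibana toolbar on the left is divided into the following areas:

- Discover: view and search the raw data

- Visualize: create charts, tables, maps, and so on

- Dashboard: combine multiple charts into a single dashboard

- Timelion: analyze time-series data and display it as two-dimensional graphs

- Dev Tools: run DSL queries, profile query performance, and so on

- Management: mainly used to create index patterns (called data views in Kibana 8.x). An Elasticsearch index can be searched in Discover and charted in Visualize and Dashboard only after an index pattern has been created for it.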