- 13D-DIC数字图像相关法测量流程介绍-数字图像采集_视觉软件中触发周期是什么意思

- 2【windows】亲测-win11系统跳过联网和微软账户登录,实现本地账户登录_win11跳过联网激活

- 3【数据结构和算法初阶(C语言)】时间复杂度(衡量算法快慢的高端玩家,搭配例题详细剖析)_衡量一个算法好坏一般以最坏的时间复杂度为标准

- 4如何在群晖NAS搭建bitwarden密码管理软件并实现无公网IP远程访问_群晖怎么安装bitwarden

- 5基于Hadoop的区块链海量数据存储的设计与实现_区块链 hdfs

- 6iOS(一):Swift纯代码模式iOS开发入门教程_swift 开发ios入门教程

- 7学懂C语言系列(三):C语言基本语法

- 8kafka架构深入

- 9Langchain-chatchat: Langchain核心组件及应用_langchain chatchat

- 10【爬虫】1.4 POST 方法向网站发送数据_网页爬虫 post数据

Python爬虫之基于 selenium 实现文献信息获取_python使用selenium爬取ieee和arxiv文献摘要_爬虫爬取ieee文献数据

赞

踩

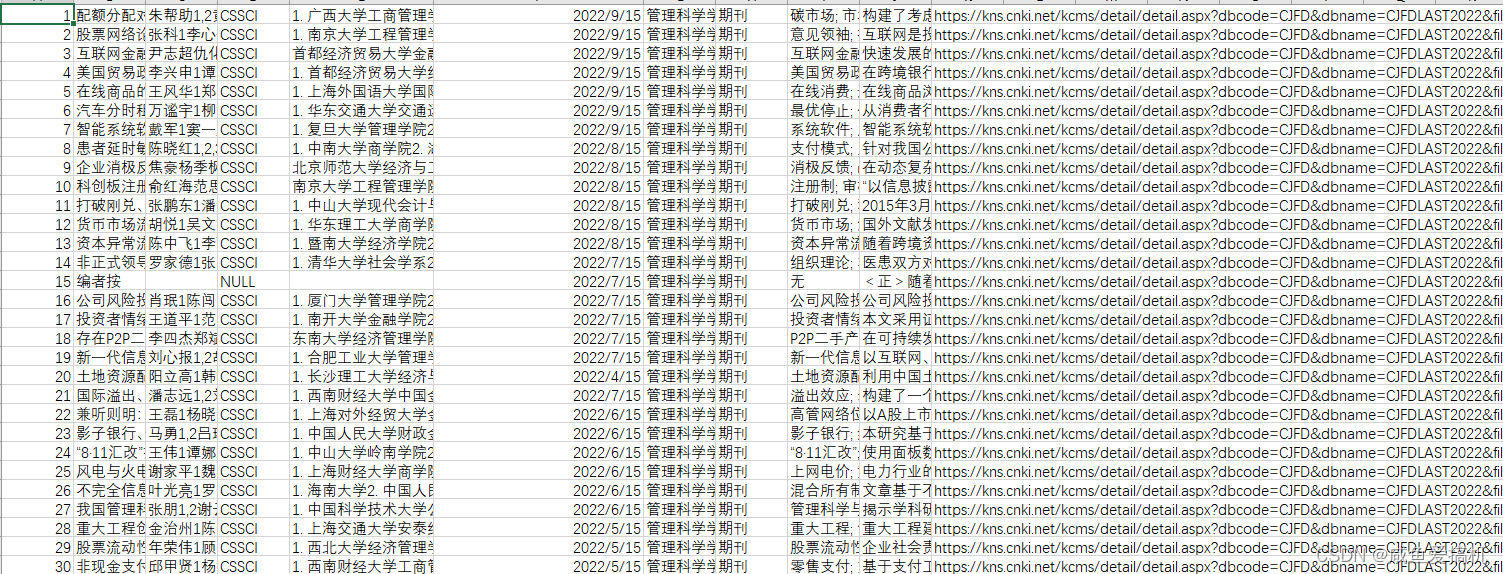

每个条目包含题目、作者、来源等信息

通过对当前页面分析,发现每条文献条目的 xpath 是有规律的

#题名

/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[1]/td[2]

#作者

/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[1]/td[3]

- 1

- 2

- 3

- 4

- 5

- 6

tr[1] 表示本页第一条条目,而 td[2] 中的2-6 分别代表作者、来源、发表时间和数据库

我们在当前页面是无法获取到文献的摘要、关键字等信息,需要进一步点击进入相关文献条目

进入到相关文献页面之后,根据 class name来获取摘要、关键字、是否为CSSCI 这些元素

完成以上知网页面的分析后,我们就可以根据需求开始写代码了!

代码实现

导入所需包

import time

from selenium import webdriver

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.by import By

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

- 1

- 2

- 3

- 4

- 5

- 6

- 7

创建浏览器对象

这里我用的是 Edge 浏览器

# get直接返回,不再等待界面加载完成

desired_capabilities = DesiredCapabilities.EDGE

desired_capabilities["pageLoadStrategy"] = "none"

# 设置 Edge 驱动器的环境

options = webdriver.EdgeOptions()

# 设置 Edge 不加载图片,提高速度

options.add_experimental_option("prefs", {"profile.managed\_default\_content\_settings.images": 2})

# 创建一个 Edge 驱动器

driver = webdriver.Edge(options=options)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

传入 url 参数然后模拟对浏览器进行人为操作

适当的加入 time.sleep() 方法,等待页面加载完成

不然页面还没完全加载就执行下一步操作的话会报错

# 打开页面 driver.get("https://kns.cnki.net/kns8/AdvSearch") time.sleep(2) # 传入关键字 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, '''//\*[@id="gradetxt"]/dd[3]/div[2]/input'''))).send_keys(theme) time.sleep(2) # 点击搜索 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[2]/div/div[2]/div/div[1]/div[1]/div[2]/div[2]/input"))).click() time.sleep(3) # 点击切换中文文献 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[3]/div[1]/div/div/div/a[1]"))).click() time.sleep(3)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

获取总文献数和页数

res_unm = WebDriverWait(driver, 100).until(EC.presence_of_element_located(

(By.XPATH, "/html/body/div[3]/div[2]/div[2]/div[2]/form/div/div[1]/div[1]/span[1]/em"))).text

# 去除千分位的逗号

res_unm = int(res_unm.replace(",", ''))

page_unm = int(res_unm / 20) + 1

print(f"共找到 {res\_unm} 条结果, {page\_unm} 页。")

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

对结果页进行解析

def crawl(driver, papers_need, theme): # 赋值序号, 控制爬取的文章数量 count = 1 # 当爬取数量小于需求时,循环网页页码 while count <= papers_need: # 等待加载完全,休眠3S time.sleep(3) title_list = WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CLASS_NAME, "fz14"))) # 循环网页一页中的条目 for i in range(len(title_list)): try: if count % 20 != 0: term = count % 20 # 本页的第几个条目 else: term = 20 title_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[2]" author_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[3]" source_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[4]" date_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[5]" database_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[6]" title = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, title_xpath))).text authors = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, author_xpath))).text source = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, source_xpath))).text date = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, date_xpath))).text database = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.XPATH, database_xpath))).text # 点击条目 title_list[i].click() # 获取driver的句柄 n = driver.window_handles # driver切换至最新生产的页面 driver.switch_to.window(n[-1]) time.sleep(3) # 开始获取页面信息 title = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h1"))).text authors = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h3[1]"))).text institute = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h3[2]"))).text abstract = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.CLASS_NAME, "abstract-text"))).text try: keywords = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.CLASS_NAME, "keywords"))).text[:-1] cssci = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[1]/div[1]/a[2]"))).text except: keywords = '无' cssci = 'NULL' url = driver.current_url # 写入文件 res = f"{count}\t{title}\t{authors}\t{cssci}\t{institute}\t{date}\t{source}\t{database}\t{keywords}\t{abstract}\t{url}".replace( "\n", "") + "\n" print(res) with open(f'{theme}.tsv', 'a', encoding='gbk') as f: f.write(res) except: print(f" 第{count} 条爬取失败\n") # 跳过本条,接着下一个 continue finally: # 如果有多个窗口,关闭第二个窗口, 切换回主页 n2 = driver.window_handles if len(n2) > 1: driver.close() driver.switch_to.window(n2[0]) # 计数,判断篇数是否超出限制 count += 1 if count == papers_need: break else: # 切换到下一页 WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, "//a[@id='PageNext']"))).click()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

结果展示:

结果是一个以制表符分隔的表格文件(用 excel 打开),其中包含了论文的基本信息,包括:题目、作者、是否 CSSCI、来源、摘要等

完整代码如下:

import time from selenium import webdriver from selenium.webdriver.support.ui import WebDriverWait from selenium.webdriver.support import expected_conditions as EC from selenium.webdriver.common.by import By from selenium.webdriver.common.desired_capabilities import DesiredCapabilities from urllib.parse import urljoin def open\_page(driver, theme): # 打开页面 driver.get("https://kns.cnki.net/kns8/AdvSearch") time.sleep(2) # 传入关键字 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, '''//\*[@id="gradetxt"]/dd[3]/div[2]/input'''))).send_keys(theme) time.sleep(2) # 点击搜索 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[2]/div/div[2]/div/div[1]/div[1]/div[2]/div[2]/input"))).click() time.sleep(3) # 点击切换中文文献 WebDriverWait(driver, 100).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[3]/div[1]/div/div/div/a[1]"))).click() time.sleep(3) # 跳转到第三页 # WebDriverWait(driver, 100).until( # EC.presence\_of\_element\_located((By.XPATH, "/html/body/div[3]/div[2]/div[2]/div[2]/form/div/div[2]/a[2]"))).click() # time.sleep(3) # 获取总文献数和页数 res_unm = WebDriverWait(driver, 100).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[3]/div[2]/div[2]/div[2]/form/div/div[1]/div[1]/span[1]/em"))).text # 去除千分位里的逗号 res_unm = int(res_unm.replace(",", '')) page_unm = int(res_unm / 20) + 1 print(f"共找到 {res\_unm} 条结果, {page\_unm} 页。") return res_unm def crawl(driver, papers_need, theme): # 赋值序号, 控制爬取的文章数量 count = 1 # 当爬取数量小于需求时,循环网页页码 while count <= papers_need: # 等待加载完全,休眠3S time.sleep(3) title_list = WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CLASS_NAME, "fz14"))) # 循环网页一页中的条目 for i in range(len(title_list)): try: if count % 20 != 0: term = count % 20 # 本页的第几个条目 else: term = 20 title_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[2]" author_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[3]" source_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[4]" date_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[5]" database_xpath = f"/html/body/div[3]/div[2]/div[2]/div[2]/form/div/table/tbody/tr[{term}]/td[6]" title = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, title_xpath))).text authors = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, author_xpath))).text source = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, source_xpath))).text date = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, date_xpath))).text database = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.XPATH, database_xpath))).text # 点击条目 title_list[i].click() # 获取driver的句柄 n = driver.window_handles # driver切换至最新生产的页面 driver.switch_to.window(n[-1]) time.sleep(3) # 开始获取页面信息 title = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h1"))).text authors = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h3[1]"))).text institute = WebDriverWait(driver, 10).until(EC.presence_of_element_located( (By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[3]/div/h3[2]"))).text abstract = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.CLASS_NAME, "abstract-text"))).text try: keywords = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.CLASS_NAME, "keywords"))).text[:-1] cssci = WebDriverWait(driver, 10).until( EC.presence_of_element_located((By.XPATH, "/html/body/div[2]/div[1]/div[3]/div/div/div[1]/div[1]/a[2]"))).text except: keywords = '无' cssci = 'NULL' url = driver.current_url # 获取下载链接 # link = WebDriverWait( driver, 10 ).until( EC.presence\_of\_all\_elements\_located((By.CLASS\_NAME ,"btn-dlcaj") ) )[0].get\_attribute('href') # link = urljoin(driver.current\_url, link) # 写入文件 res = f"{count}\t{title}\t{authors}\t{cssci}\t{institute}\t{date}\t{source}\t{database}\t{keywords}\t{abstract}\t{url}".replace( "\n", "") + "\n" print(res) # with open(f'CNKI\_{theme}.tsv', 'a', encoding='gbk') as f: # f.write(res) count += 1 if count > papers_need: break except: print(f" 第{count} 条爬取失败\n") # 跳过本条,接着下一个 continue finally: # 如果有多个窗口,关闭第二个窗口, 切换回主页 n2 = driver.window_handles if len(n2) > 1: driver.close() driver.switch_to.window(n2[0]) # 计数,判断需求是否足够 # count += 1 if count > papers_need: break else: # 切换到下一页 WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.XPATH, "//a[@id='PageNext']"))).click() def webserver(theme): # get直接返回,不再等待界面加载完成 desired_capabilities = DesiredCapabilities.EDGE desired_capabilities["pageLoadStrategy"] = "none" # 设置微软驱动器的环境 options = webdriver.EdgeOptions() # 设置chrome不加载图片,提高速度 options.add_experimental_option("prefs", {"profile.managed\_default\_content\_settings.images": 2}) # 创建一个微软驱动器 driver = webdriver.Edge(options=options) # 设置所需篇数 papers_need = 41 res_unm = int(open_page(driver, theme)) # 判断所需是否大于总篇数 papers_need = papers_need if (papers_need <= res_unm) else res_unm return driver, papers_need, theme if __name__ == "\_\_main\_\_": # 输入需要搜索的内容 # theme = input("请输入你要搜索的期刊名称:") print('1') theme = "中国人口·资源与环境" driver, papers_need, theme = webserver(theme) print("所需篇数: ", papers_need) crawl(driver, papers_need, theme) # 关闭浏览器 driver.close()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

踩过的坑

网页加载太慢导致元素查找出错

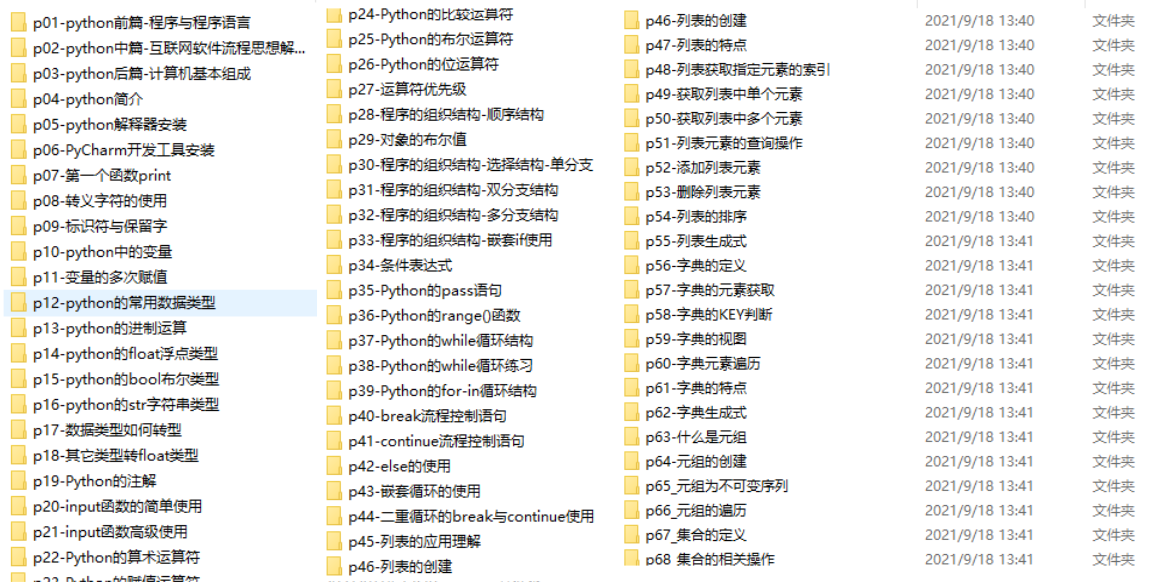

现在能在网上找到很多很多的学习资源,有免费的也有收费的,当我拿到1套比较全的学习资源之前,我并没着急去看第1节,我而是去审视这套资源是否值得学习,有时候也会去问一些学长的意见,如果可以之后,我会对这套学习资源做1个学习计划,我的学习计划主要包括规划图和学习进度表。

分享给大家这份我薅到的免费视频资料,质量还不错,大家可以跟着学习

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!