
Flink Learning Journey (Part 2): Building a Flink Demo Project and Submitting It to a Cluster

Author: weixin_40725706 | 2024-06-24 06:40:12



1. Create a Maven project

In IntelliJ IDEA, create a Maven project named MyFlinkFirst.

2. Configure pom.xml

Add the following properties and dependencies to pom.xml:

```xml
<properties>
    <flink.version>1.13.0</flink.version>
    <java.version>1.8</java.version>
    <scala.binary.version>2.12</scala.binary.version>
    <slf4j.version>1.7.30</slf4j.version>
</properties>

<dependencies>
    <!-- Flink dependencies -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>

    <!-- Logging dependencies -->
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>${slf4j.version}</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>${slf4j.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-to-slf4j</artifactId>
        <version>2.14.0</version>
    </dependency>
</dependencies>
```

3. Configure logging

In the src/main/resources directory, add a file named log4j.properties with the following content:

```properties
log4j.rootLogger=error, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%-4r [%t] %-5p %c %x - %m%n
```

4. Write the code

Write the StreamWordCount class, which counts words:

```java
package com.qiyu;

import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

import java.util.Arrays;

/**
 * @author MR.Liu
 * @version 1.0
 * @date 2023-10-18 14:45
 */
public class StreamWordCount {
    public static void main(String[] args) throws Exception {
        // 1. Create the streaming execution environment
        StreamExecutionEnvironment env =
                StreamExecutionEnvironment.getExecutionEnvironment();
        // 2. Read a text stream from a socket (the host running `nc -lk 7777`)
        DataStreamSource<String> lineDSS = env.socketTextStream("192.168.220.130", 7777);
        // 3. Split each line into words and map every word to (word, 1)
        SingleOutputStreamOperator<Tuple2<String, Long>> wordAndOne = lineDSS
                .flatMap((String line, Collector<String> words) -> {
                    Arrays.stream(line.split(" ")).forEach(words::collect);
                })
                // Lambdas lose generic type information to erasure, so declare the output types explicitly
                .returns(Types.STRING)
                .map(word -> Tuple2.of(word, 1L))
                .returns(Types.TUPLE(Types.STRING, Types.LONG));
        // 4. Group by word
        KeyedStream<Tuple2<String, Long>, String> wordAndOneKS = wordAndOne
                .keyBy(t -> t.f0);
        // 5. Sum the counts (tuple field 1)
        SingleOutputStreamOperator<Tuple2<String, Long>> result = wordAndOneKS
                .sum(1);
        // 6. Print the results
        result.print();
        // 7. Execute the job
        env.execute();
    }
}
```

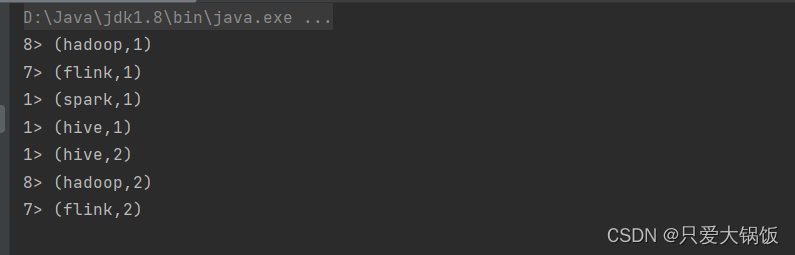
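To make the semantics of `keyBy(...).sum(1)` concrete, here is a minimal plain-Java sketch (no Flink dependency; the class and method names are hypothetical) that replays the same split, map, group and sum steps over a fixed list of lines. As in the streaming job, an updated `(word,count)` pair is emitted for every incoming word rather than one final total, which is why the console shows the same word repeatedly with growing counts.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical plain-Java model of StreamWordCount: flatMap -> map -> keyBy -> sum.
final class WordCountSimulation {

    // Returns one "(word,count)" string per incoming word, mirroring the
    // rolling aggregate that sum(1) emits on a keyed stream.
    static List<String> runningCounts(List<String> lines) {
        Map<String, Long> state = new HashMap<>();   // per-key running totals, like keyed state
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {    // same split as the Flink job
                // add the "1" from Tuple2.of(word, 1L) to this word's total
                long count = state.merge(word, 1L, Long::sum);
                emitted.add("(" + word + "," + count + ")");
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        runningCounts(Arrays.asList("hello flink", "hello world"))
                .forEach(System.out::println);
    }
}
```

Running `main` prints `(hello,1)`, `(flink,1)`, `(hello,2)` and `(world,1)`, one line per word.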

5. Test

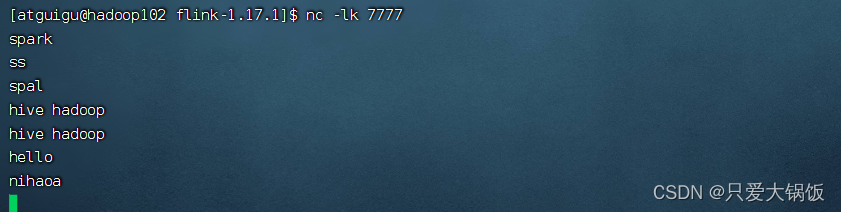

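On the hadoop102 server (the machine whose address is passed to `socketTextStream`), run:

`nc -lk 7777`

Then run the StreamWordCount class in IDEA.

Type any words you like into the nc session, separated by spaces.

The IDEA console prints the results:

The code works as expected.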

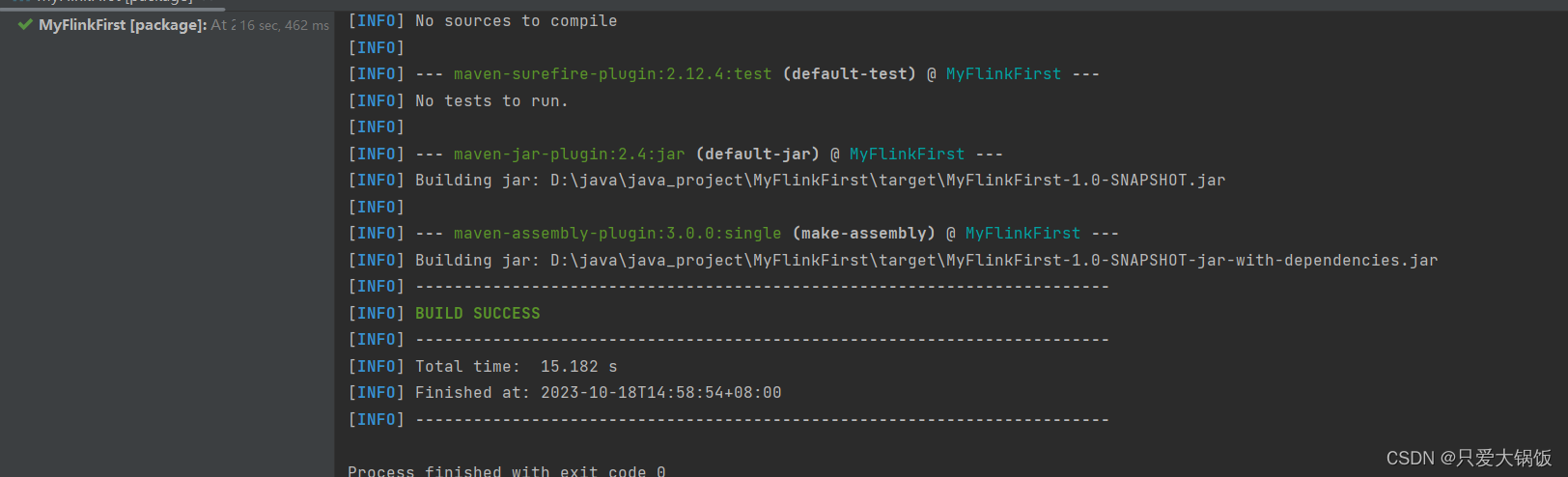
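Why `nc -lk 7777` has to be started first: `socketTextStream` makes the Flink source a TCP *client* that connects to the given host and port and turns each newline-terminated line into one record. With the two-argument overload used here, the source is not configured to retry, so nothing listening on the port means the job fails. The plain-Java sketch below (class name hypothetical, no Flink involved) mimics only that client side; `nc` plays the server.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the client side of socketTextStream(host, port).
final class LineSocketClient {

    // Connects to host:port and collects up to maxLines newline-delimited records.
    static List<String> readLines(String host, int port, int maxLines) throws IOException {
        List<String> records = new ArrayList<>();
        // Throws ConnectException if no server (e.g. nc) is listening yet.
        try (Socket socket = new Socket(host, port);
             BufferedReader reader = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while (records.size() < maxLines && (line = reader.readLine()) != null) {
                records.add(line);   // one record per line, like the Flink source
            }
        }
        return records;
    }

    public static void main(String[] args) throws IOException {
        // Assumes `nc -lk 7777` is already running on hadoop102 and the name resolves.
        for (String record : readLines("hadoop102", 7777, 10)) {
            System.out.println(record);
        }
    }
}
```

Because `nc -lk` keeps listening after a client disconnects, the job can be restarted without restarting `nc`.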

6. Package the program and submit it to the cluster

Add the packaging plugin to pom.xml:

```xml
<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>3.0.0</version>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```

Run Maven's package goal. When the console shows BUILD SUCCESS, the jar has been built.

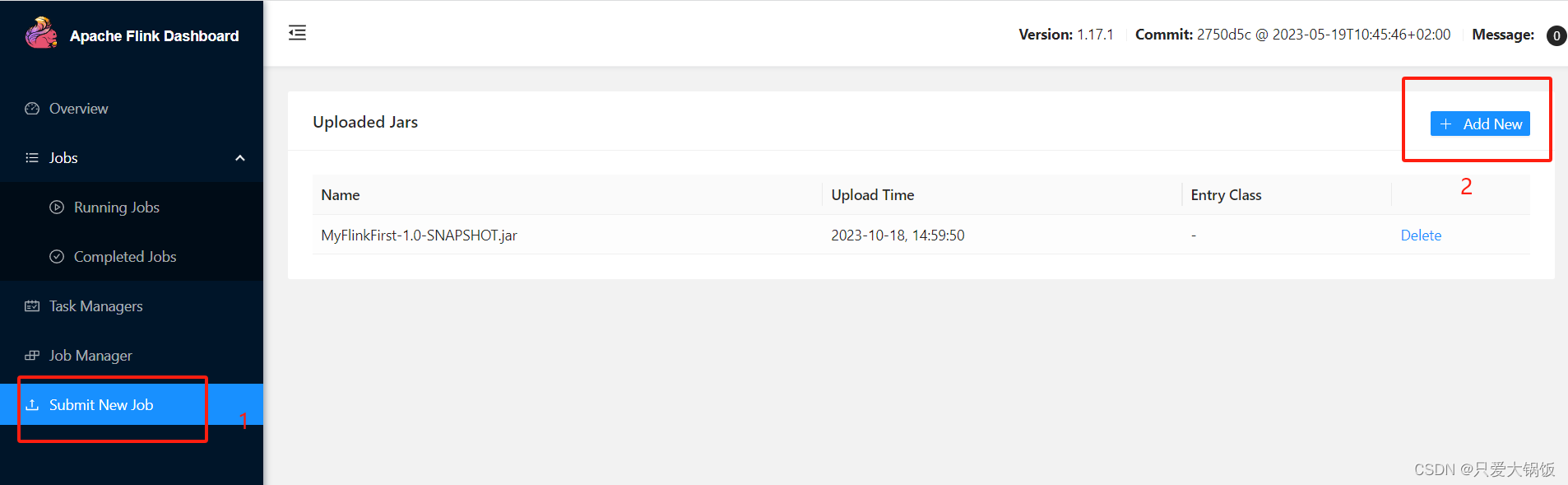
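Before uploading, it can help to confirm that the jar really contains the entry class you will type into the web UI. The plain-Java sketch below (the class name `JarEntryCheck` and the relative path are hypothetical) uses the JDK's `java.util.jar.JarFile` to look up the entry `com/qiyu/StreamWordCount.class`.

```java
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper: checks that a jar contains the class named in the "Entry Class" field.
final class JarEntryCheck {

    static boolean containsClass(String jarPath, String className) throws IOException {
        // com.qiyu.StreamWordCount -> com/qiyu/StreamWordCount.class
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getJarEntry(entryName) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Path assumed relative to the project root after the package goal has run.
        String jarPath = "target/MyFlinkFirst-1.0-SNAPSHOT.jar";
        System.out.println(containsClass(jarPath, "com.qiyu.StreamWordCount"));
    }
}
```

If this prints `false`, the entry class name entered in the web UI will not be found either.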

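The target directory now contains MyFlinkFirst-1.0-SNAPSHOT.jar and, from the assembly plugin, MyFlinkFirst-1.0-SNAPSHOT-jar-with-dependencies.jar. The cluster already provides the Flink libraries this job uses, so select MyFlinkFirst-1.0-SNAPSHOT.jar and upload it through the web UI.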

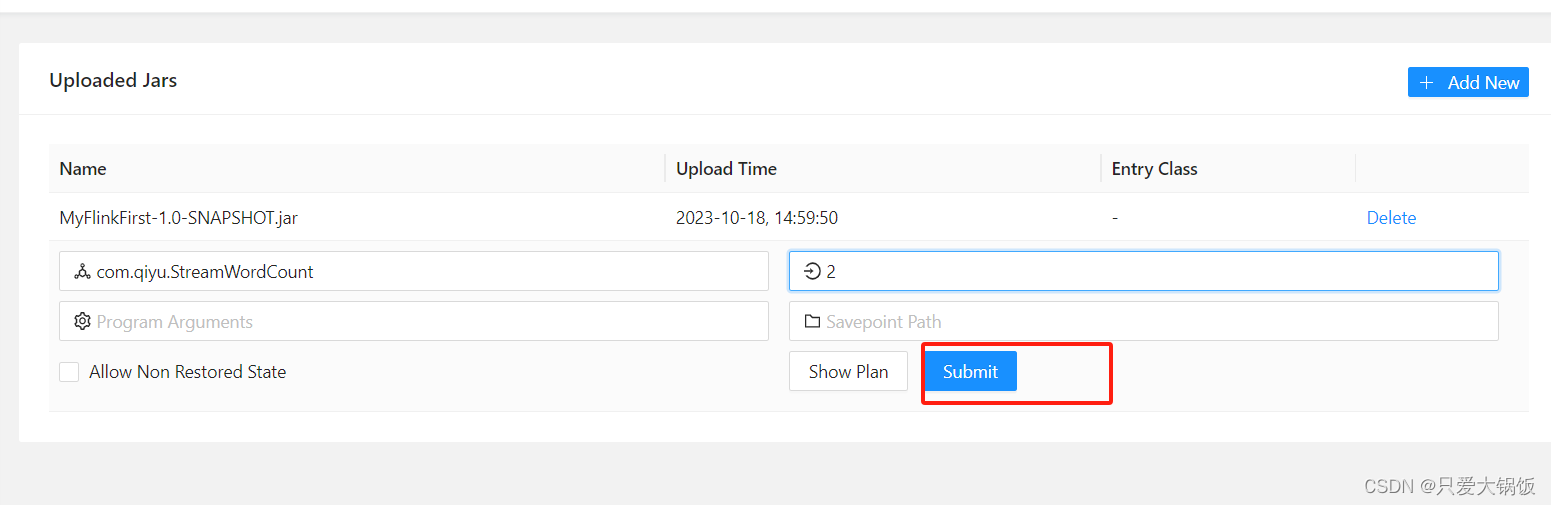

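After the upload, click the jar's name and fill in the main settings: the fully qualified name of the entry class (com.qiyu.StreamWordCount) and the job's parallelism. Then click Submit.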

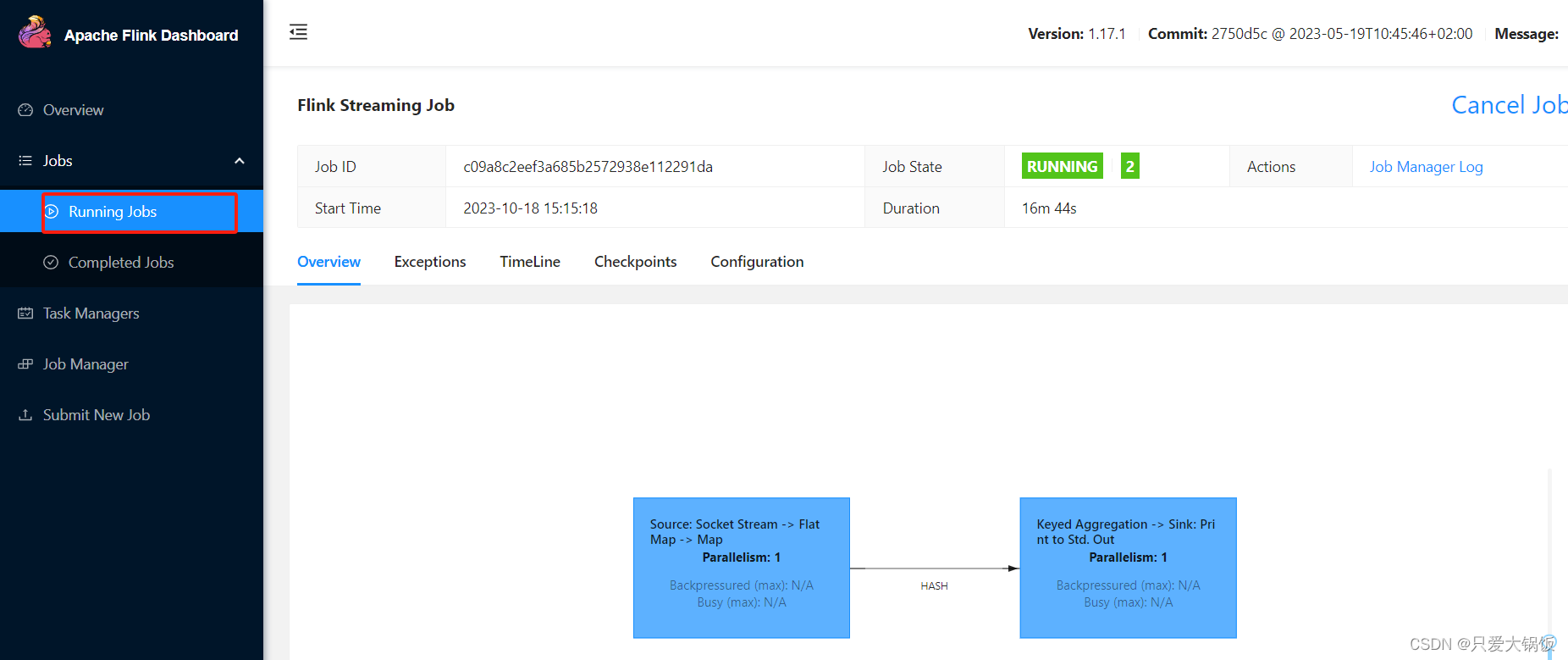
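StreamWordCount hard-codes the source address `192.168.220.130:7777`, so pointing it at another host means rebuilding the jar. The web UI's submit form also has a Program Arguments field. A minimal sketch, assuming arguments such as `--host hadoop102 --port 7777` are entered there, is to read them in `main` and fall back to the current values. The parser below is a hypothetical stdlib-only helper. Inside a real Flink job, Flink's own `ParameterTool.fromArgs(args)` covers the same need.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stdlib-only parser for "--key value" program arguments.
final class JobArgs {

    static Map<String, String> parse(String[] args) {
        if (args.length % 2 != 0) {
            throw new IllegalArgumentException("Arguments must come in --key value pairs");
        }
        Map<String, String> values = new HashMap<>();
        for (int i = 0; i < args.length; i += 2) {
            if (!args[i].startsWith("--")) {
                throw new IllegalArgumentException("Expected --key but got: " + args[i]);
            }
            values.put(args[i].substring(2), args[i + 1]);
        }
        return values;
    }

    public static void main(String[] args) {
        Map<String, String> values = parse(args);
        // Defaults reproduce the address hard-coded in StreamWordCount.
        String host = values.getOrDefault("host", "192.168.220.130");
        int port = Integer.parseInt(values.getOrDefault("port", "7777"));
        // In the Flink job these values would be passed to env.socketTextStream(host, port).
        System.out.println("Would read from " + host + ":" + port);
    }
}
```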

Open the list of running jobs.

Click the job.

Click "Task Managers", open Stdout, and type words into the nc session on hadoop102.

The results appear in Stdout.
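When the parallelism is greater than 1, `print()` prefixes each line with the number of the subtask that produced it (subtask index + 1), for example `2> (hello,3)`. `keyBy` sends every record with the same word to the same subtask, so each word's count grows in exactly one place. The plain-Java sketch below illustrates that idea. It is a simplification with hypothetical names: it picks a subtask with `Math.floorMod(word.hashCode(), parallelism)`, whereas Flink hashes each key into a key group (bounded by the maximum parallelism) and maps key groups to subtasks. The subtask numbers it prints will therefore not match what the Task Managers' Stdout shows.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of keyBy + sum + print() across parallel subtasks.
// NOTE: real Flink routes keys through murmur-hashed key groups, not hashCode() % parallelism.
final class KeyedPartitionSketch {

    static List<String> simulate(List<String> words, int parallelism) {
        // One independent count map per subtask, like per-subtask keyed state.
        List<Map<String, Long>> subtaskState = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            subtaskState.add(new HashMap<>());
        }
        List<String> printed = new ArrayList<>();
        for (String word : words) {
            int subtask = Math.floorMod(word.hashCode(), parallelism);  // same word -> same subtask
            long count = subtaskState.get(subtask).merge(word, 1L, Long::sum);
            // print() adds "<subtask index + 1>> " only when parallelism > 1
            String prefix = parallelism > 1 ? (subtask + 1) + "> " : "";
            printed.add(prefix + "(" + word + "," + count + ")");
        }
        return printed;
    }

    public static void main(String[] args) {
        simulate(Arrays.asList("hello", "flink", "hello"), 2).forEach(System.out::println);
    }
}
```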

