- 1技术精英求职必备:U3D(Unity 3D)工程师简历指南_u3d简历怎么写注意要点

- 2python实现批量json文件转txt文件_json转txt

- 3GaussDB拿下的安全认证CC EAL4+究竟有多难?_cc认证4级标准

- 4java计算机毕业设计综合测评系统源码+数据库+系统+lw文档+mybatis+运行部署_综测加分系统源码

- 5AI复试面试可能问到的问题总结II_考研复试人工智能常见问题

- 6<Python>将txt文本转为SRT格式字幕_txt转srt

- 7Arcpy基础方法之Tools&Toolboxes_ta import concatenatedateandtimefields as concaten

- 8MATLAB图像大小规范_怎么改变imtool的长和宽

- 9python电影评论的情感分析流浪地球_使用python爬取流浪地球影评并制作词云,看看别人都说了些说什么...

- 10怎么清理mac电脑垃圾文件 怎么清理mac电脑垃圾软件 清洁mac系统 cleanmymac软件卸载 mac软件卸载 卸载mac应用程序

数据分析(三)线性回归模型实现

赞

踩

1. 惩罚线性回归模型概述

线性回归在实际应用时需要对普通最小二乘法进行一些修改。普通最小二乘法只在训练数据上最小化错误,难以顾及所有数据。

惩罚线性回归方法是一族用于克服最小二乘法( OLS)过拟合问题的方法。岭回归是惩罚线性回归的一个特例。岭回归通过对回归系数的平方和进行惩罚来避免过拟合。其他惩罚回归算法使用不同形式的惩罚项。

下面几个特点使得惩罚线性回归方法非常有效:

--模型训练足够快速。

--变量的重要性信息。

--部署时的预测足够快速。

--在各种问题上性能可靠,尤其对样本并不明显多于属性的属性矩阵,或者非常稀疏的矩阵。希望模型为稀疏解(即只使用部分属性进行预测的吝啬模型)。

--问题可能适合使用线性模型来解决。

公式 4-6 可以用如下语言描述:向量 beta 是以及常量 beta 零星是使期望预测的均方

错误最小的值,期望预测的均方错误是指在所有数据行(i=1,...,n)上计算 yi 与预测生成

yi 之间的错误平方的平均。

岭惩罚项对于惩罚回归来说并不是唯一有用的惩罚项。任何关于向量长度的指标都可以。使用不同的长度指标可以改变解的重要性。岭回归应用欧式几何的指标(即 β 的平方和)。另外一个有用的算法称作套索(Lasso)回归,该回归源于出租车的几何路径被称作曼哈顿距离或者 L1 正则化(即 β 的绝对值的和)。ElasticNet 惩罚项包含套索惩罚项以及岭惩罚项。

2. 求解惩罚线性回归问题

有大量通用的数值优化算法可以求解公式 4-6、公式 4-8 以及公式 4-11 对应的优化问题,但是惩罚线性回归问题的重要性促使研究人员开发专用算法,从而能够非常快地生成解。本文将对这些算法进行介绍并且运行相关代码,重点介绍2种算法:最小角度回归 LARS 以及 Glmnet。

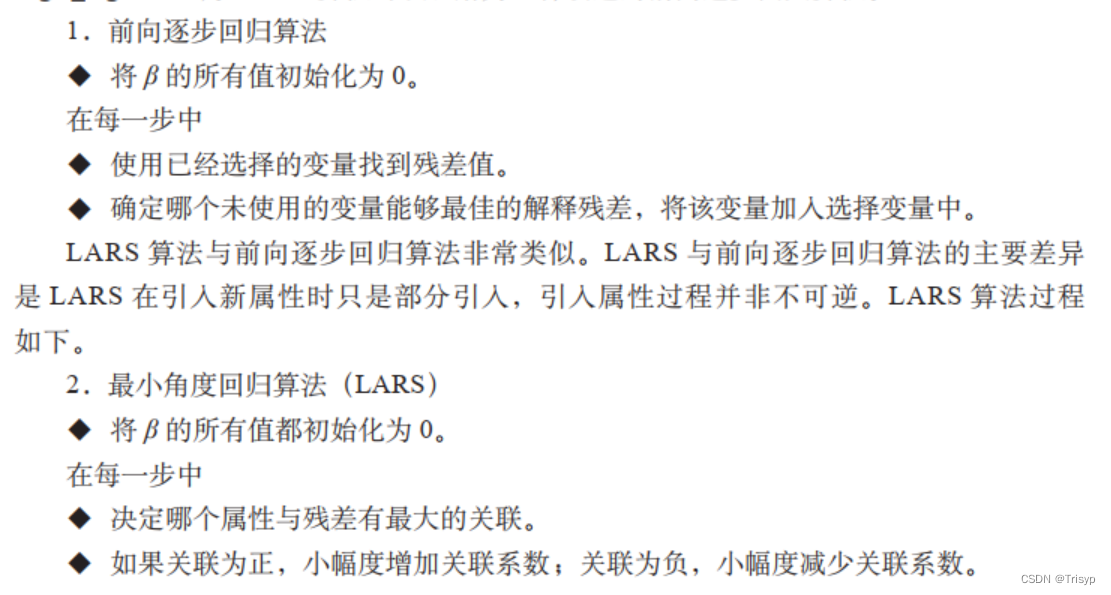

LARS 算法可以理解为一种改进的前向逐步回归算法。

之所以介绍 LARS 算法是因为该算法非常接近于套索以及前向逐步回归, LARS 算法很容易理解并且实现起来相对紧凑。通过研究 LARS 的代码,你会理解针对更一般的 ElasticNet 回归求解的具体过程,并且会了解惩罚回归求解的细节。

3. 完整代码(code)

- from math import sqrt

- import pandas as pd

- import matplotlib.pyplot as plt

- from tqdm import tqdm

-

-

- def x_normalized(xList, xMeans, xSD):

- nrows = len(xList)

- ncols = len(xList[0])

- xNormalized = []

- for i in range(nrows):

- rowNormalized = [(xList[i][j] - xMeans[j]) / xSD[j] for j in range(ncols)]

- xNormalized.append(rowNormalized)

-

-

- def data_normalized(wine):

- nrows, ncols = wine.shape

- wineNormalized = wine

- for i in range(ncols):

- mean = summary.iloc[1, i]

- sd = summary.iloc[2, i]

- wineNormalized.iloc[:, i:(i + 1)] = (wineNormalized.iloc[:, i:(i + 1)] - mean) / sd

- return wineNormalized

-

-

- def calculate_betaMat(nSteps, stepSize, wineNormalized):

- nrows, ncols = wineNormalized.shape

- # initialize a vector of coefficients beta(系数初始化)

- beta = [0.0] * (ncols - 1)

- # initialize matrix of betas at each step(系数矩阵初始化)

- betaMat = []

- betaMat.append(list(beta))

- # initialize residuals list(误差初始化)

- residuals = [0.0] * nrows

- for i in tqdm(range(nSteps)):

- # calculate residuals(计算误差)

- for j in range(nrows):

- residuals[j] = wineNormalized.iloc[j, (ncols - 1)]

- for k in range(ncols - 1):

- residuals[j] += - wineNormalized.iloc[j, k] * beta[k]

-

- # calculate correlation between attribute columns from normalized wine and residual(变量与误差相关系数)

- corr = [0.0] * (ncols - 1)

- for j in range(ncols - 1):

- for k in range(nrows):

- corr[j] += wineNormalized.iloc[k, j] * residuals[k] / nrows

-

- iStar = 0

- corrStar = corr[0]

- for j in range(1, (ncols - 1)):

- if abs(corrStar) < abs(corr[j]): # 相关性大的放前面

- iStar = j

- corrStar = corr[j]

- beta[iStar] += stepSize * corrStar / abs(corrStar) # 系数

- betaMat.append(list(beta))

- return betaMat

-

-

- def plot_betaMat1(betaMat):

- ncols = len(betaMat[0])

- for i in range(ncols):

- # plot range of beta values for each attribute

- coefCurve = betaMat[0:nSteps][i]

- plt.plot(coefCurve)

-

- plt.xlabel("Attribute Index")

- plt.ylabel(("Attribute Values"))

- plt.show()

-

-

- def plot_betaMat2(nSteps, betaMat):

- ncols = len(betaMat[0])

- for i in range(ncols):

- # plot range of beta values for each attribute

- coefCurve = [betaMat[k][i] for k in range(nSteps)]

- xaxis = range(nSteps)

- plt.plot(xaxis, coefCurve)

-

- plt.xlabel("Steps Taken")

- plt.ylabel(("Coefficient Values"))

- plt.show()

-

-

- def S(z, gamma):

- if gamma >= abs(z):

- return 0.0

- return (z / abs(z)) * (abs(z) - gamma)

-

-

- if __name__ == '__main__':

- target_url = "http://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv"

- wine = pd.read_csv(target_url, header=0, sep=";")

-

- # normalize the wine data

- summary = wine.describe()

- print(summary)

-

- # 数据标准化

- wineNormalized = data_normalized(wine)

- # number of steps to take(训练步数)

- nSteps = 100

- stepSize = 0.1

- betaMat = calculate_betaMat(nSteps, stepSize, wineNormalized)

- plot_betaMat1(betaMat)

- # ----------------------------larsWine---------------------------------------------------

- # read data into iterable

- names = wine.columns

- xList = []

- labels = []

- firstLine = True

- for i in range(len(wine)):

- row = wine.iloc[i]

- # put labels in separate array

- labels.append(float(row[-1]))

- # convert row to floats

- floatRow = row[:-1]

- xList.append(floatRow)

- # Normalize columns in x and labels

- nrows = len(xList)

- ncols = len(xList[0])

- # calculate means and variances(计算均值和方差)

- xMeans = []

- xSD = []

- for i in range(ncols):

- col = [xList[j][i] for j in range(nrows)]

- mean = sum(col) / nrows

- xMeans.append(mean)

- colDiff = [(xList[j][i] - mean) for j in range(nrows)]

- sumSq = sum([colDiff[i] * colDiff[i] for i in range(nrows)])

- stdDev = sqrt(sumSq / nrows)

- xSD.append(stdDev)

-

- # use calculate mean and standard deviation to normalize xList(X标准化)

- xNormalized = x_normalized(xList, xMeans, xSD)

- # Normalize labels: 将属性及标签进行归一化

- meanLabel = sum(labels) / nrows

- sdLabel = sqrt(sum([(labels[i] - meanLabel) * (labels[i] - meanLabel) for i in range(nrows)]) / nrows)

- labelNormalized = [(labels[i] - meanLabel) / sdLabel for i in range(nrows)]

-

- # initialize a vector of coefficients beta

- beta = [0.0] * ncols

- # initialize matrix of betas at each step

- betaMat = []

- betaMat.append(list(beta))

- # number of steps to take

- nSteps = 350

- stepSize = 0.004

- nzList = []

- for i in range(nSteps):

- # calculate residuals

- residuals = [0.0] * nrows

- for j in range(nrows):

- labelsHat = sum([xNormalized[j][k] * beta[k] for k in range(ncols)])

- residuals[j] = labelNormalized[j] - labelsHat # 计算残差

-

- # calculate correlation between attribute columns from normalized wine and residual

- corr = [0.0] * ncols

- for j in range(ncols):

- corr[j] = sum([xNormalized[k][j] * residuals[k] for k in range(nrows)]) / nrows # 每个属性和残差的关联

-

- iStar = 0

- corrStar = corr[0]

- for j in range(1, (ncols)): # 逐个判断哪个属性对降低残差贡献最大

- if abs(corrStar) < abs(corr[j]): # 好的(最大关联)特征会排到列表前面,应该保留,不太好的特征会排到最后

- iStar = j

- corrStar = corr[j]

- beta[iStar] += stepSize * corrStar / abs(corrStar) # 固定增加beta变量值,关联为正增量为正;关联为负,增量为负

- betaMat.append(list(beta)) # 求解得到参数结果

-

- nzBeta = [index for index in range(ncols) if beta[index] != 0.0]

- for q in nzBeta:

- if q not in nzList: # 对于每一迭代步,记录非零系数对应索引

- nzList.append(q)

- nameList = [names[nzList[i]] for i in range(len(nzList))]

- print(nameList)

- plot_betaMat2(nSteps, betaMat) # 绘制系数曲线

-

- # -------------------------------larsWine 10折交叉------------------------------------------------

- # Build cross-validation loop to determine best coefficient values.

- # number of cross validation folds

- nxval = 10

- # number of steps and step size

- nSteps = 350

- stepSize = 0.004

- # initialize list for storing errors.

- errors = [] # 记录每一步迭代的错误

- for i in range(nSteps):

- b = []

- errors.append(b)

-

- for ixval in range(nxval): # 10折交叉验证

- # Define test and training index sets

- idxTrain = [a for a in range(nrows) if a % nxval != ixval * nxval]

- idxTest = [a for a in range(nrows) if a % nxval == ixval * nxval]

- # Define test and training attribute and label sets

- xTrain = [xNormalized[r] for r in idxTrain] # 训练集

- labelTrain = [labelNormalized[r] for r in idxTrain]

- xTest = [xNormalized[r] for r in idxTest] # 测试集

- labelTest = [labelNormalized[r] for r in idxTest]

-

- # Train LARS regression on Training Data

- nrowsTrain = len(idxTrain)

- nrowsTest = len(idxTest)

-

- # initialize a vector of coefficients beta

- beta = [0.0] * ncols

-

- # initialize matrix of betas at each step

- betaMat = []

- betaMat.append(list(beta))

- for iStep in range(nSteps):

- # calculate residuals

- residuals = [0.0] * nrows

- for j in range(nrowsTrain):

- labelsHat = sum([xTrain[j][k] * beta[k] for k in range(ncols)])

- residuals[j] = labelTrain[j] - labelsHat

- # calculate correlation between attribute columns from normalized wine and residual

- corr = [0.0] * ncols

- for j in range(ncols):

- corr[j] = sum([xTrain[k][j] * residuals[k] for k in range(nrowsTrain)]) / nrowsTrain

-

- iStar = 0

- corrStar = corr[0]

- for j in range(1, (ncols)):

- if abs(corrStar) < abs(corr[j]):

- iStar = j

- corrStar = corr[j]

- beta[iStar] += stepSize * corrStar / abs(corrStar)

- betaMat.append(list(beta))

-

- # Use beta just calculated to predict and accumulate out of sample error - not being used in the calc of beta

- for j in range(nrowsTest):

- labelsHat = sum([xTest[j][k] * beta[k] for k in range(ncols)])

- err = labelTest[j] - labelsHat

- errors[iStep].append(err)

- cvCurve = []

- for errVect in errors:

- mse = sum([x * x for x in errVect]) / len(errVect)

- cvCurve.append(mse)

- minMse = min(cvCurve)

- minPt = [i for i in range(len(cvCurve)) if cvCurve[i] == minMse][0]

- print("Minimum Mean Square Error", minMse)

- print("Index of Minimum Mean Square Error", minPt)

-

- xaxis = range(len(cvCurve))

- plt.plot(xaxis, cvCurve)

- plt.xlabel("Steps Taken")

- plt.ylabel(("Mean Square Error"))

- plt.show()

-

- # -------------------------------glmnet larsWine2------------------------------------------------

- # select value for alpha parameter

- alpha = 1.0

- # make a pass through the data to determine value of lambda that

- # just suppresses all coefficients.

- # start with betas all equal to zero.

- xy = [0.0] * ncols

- for i in range(nrows):

- for j in range(ncols):

- xy[j] += xNormalized[i][j] * labelNormalized[i]

-

- maxXY = 0.0

- for i in range(ncols):

- val = abs(xy[i]) / nrows

- if val > maxXY:

- maxXY = val

-

- # calculate starting value for lambda

- lam = maxXY / alpha

-

- # this value of lambda corresponds to beta = list of 0's

- # initialize a vector of coefficients beta

- beta = [0.0] * ncols

-

- # initialize matrix of betas at each step

- betaMat = []

- betaMat.append(list(beta))

-

- # begin iteration

- nSteps = 100

- lamMult = 0.93 # 100 steps gives reduction by factor of 1000 in

- # lambda (recommended by authors)

- nzList = []

- for iStep in range(nSteps):

- # make lambda smaller so that some coefficient becomes non-zero

- lam = lam * lamMult

-

- deltaBeta = 100.0

- eps = 0.01

- iterStep = 0

- betaInner = list(beta)

- while deltaBeta > eps:

- iterStep += 1

- if iterStep > 100:

- break

- # cycle through attributes and update one-at-a-time

- # record starting value for comparison

- betaStart = list(betaInner)

- for iCol in range(ncols):

- xyj = 0.0

- for i in range(nrows):

- # calculate residual with current value of beta

- labelHat = sum([xNormalized[i][k] * betaInner[k] for k in range(ncols)])

- residual = labelNormalized[i] - labelHat

-

- xyj += xNormalized[i][iCol] * residual

-

- uncBeta = xyj / nrows + betaInner[iCol]

- betaInner[iCol] = S(uncBeta, lam * alpha) / (1 + lam * (1 - alpha))

-

- sumDiff = sum([abs(betaInner[n] - betaStart[n]) for n in range(ncols)])

- sumBeta = sum([abs(betaInner[n]) for n in range(ncols)])

- deltaBeta = sumDiff / sumBeta

- print(iStep, iterStep)

- beta = betaInner

- # add newly determined beta to list

- betaMat.append(beta)

- # keep track of the order in which the betas become non-zero

- nzBeta = [index for index in range(ncols) if beta[index] != 0.0]

- for q in nzBeta:

- if q not in nzList:

- nzList.append(q)

- # print out the ordered list of betas

- nameList = [names[nzList[i]] for i in range(len(nzList))]

- print(nameList)

- nPts = len(betaMat)

- plot_betaMat2(nPts, betaMat) # 绘制系数曲线