
Atguigu Big Data Technology Hadoop Tutorial, Notes 02 [Hadoop: Getting Started]


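Contents

P007 [007_Atguigu_Hadoop_Intro_Course Introduction] 07:29

P008 [008_Atguigu_Hadoop_Intro_What Is Hadoop] 03:00

P009 [009_Atguigu_Hadoop_Intro_History of Hadoop] 05:52

P010 [010_Atguigu_Hadoop_Intro_The Three Major Hadoop Distributions] 05:59

P011 [011_Atguigu_Hadoop_Intro_Advantages of Hadoop] 03:52

P012 [012_Atguigu_Hadoop_Intro_Differences Between Hadoop 1.x, 2.x and 3.x] 03:00

P013 [013_Atguigu_Hadoop_Intro_HDFS Overview] 06:26

P014 [014_Atguigu_Hadoop_Intro_YARN Overview] 06:35

P015 [015_Atguigu_Hadoop_Intro_MapReduce Overview] 01:55

P016 [016_Atguigu_Hadoop_Intro_Relationship Between HDFS, YARN and MR] 03:22

P017 [017_Atguigu_Hadoop_Intro_Big Data Technology Ecosystem] 09:17

P018 [018_Atguigu_Hadoop_Intro_Installing VMware] 04:41

P019 [019_Atguigu_Hadoop_Intro_CentOS 7.5 Hardware and Software Setup] 15:56

P020 [020_Atguigu_Hadoop_Intro_IP and Hostname Configuration] 10:50

P021 [021_Atguigu_Hadoop_Intro_Xshell Remote Access Tool] 09:05

P022 [022_Atguigu_Hadoop_Intro_Finishing the Template VM] 12:25

P023 [023_Atguigu_Hadoop_Intro_Cloning Three VMs] 15:01

P024 [024_Atguigu_Hadoop_Intro_Installing the JDK] 07:02

P025 [025_Atguigu_Hadoop_Intro_Installing Hadoop] 07:20

P026 [026_Atguigu_Hadoop_Intro_Local Run Mode] 11:56

P027 [027_Atguigu_Hadoop_Intro_scp & rsync Commands] 15:01

P028 [028_Atguigu_Hadoop_Intro_xsync Distribution Script] 18:14

P029 [029_Atguigu_Hadoop_Intro_Passwordless SSH Login] 11:25

P030 [030_Atguigu_Hadoop_Intro_Cluster Configuration] 13:24

P031 [031_Atguigu_Hadoop_Intro_Starting the Whole Cluster and Testing] 16:52

P032 [032_Atguigu_Hadoop_Intro_Handling a Cluster Crash] 08:10

P033 [033_Atguigu_Hadoop_Intro_History Server Configuration] 05:26

P034 [034_Atguigu_Hadoop_Intro_Log Aggregation Configuration] 05:42

P035 [035_Atguigu_Hadoop_Intro_Two Common Scripts] 09:18

P036 [036_Atguigu_Hadoop_Intro_Two Interview Questions] 04:15

P037 [037_Atguigu_Hadoop_Intro_Cluster Time Synchronization] 11:27

P038 [038_Atguigu_Hadoop_Intro_Summary of Common Problems] 10:57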

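02_Atguigu Big Data Technology: Hadoop (Getting Started) V3.3

P007 [007_Atguigu_Hadoop_Intro_Course Introduction] 07:29

P008 [008_Atguigu_Hadoop_Intro_What Is Hadoop] 03:00

P009 [009_Atguigu_Hadoop_Intro_History of Hadoop] 05:52

P010 [010_Atguigu_Hadoop_Intro_The Three Major Hadoop Distributions] 05:59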

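The three major Hadoop distributions are Apache, Cloudera and Hortonworks.

1) Apache Hadoop

Official site: http://hadoop.apache.org

Downloads: https://hadoop.apache.org/releases.html

2) Cloudera Hadoop

Official site: https://www.cloudera.com/downloads/cdh

Downloads: https://docs.cloudera.com/documentation/enterprise/6/release-notes/topics/rg_cdh_6_download.html

(1) Founded in 2008, Cloudera was the first company to commercialize Hadoop. It offers partners commercial Hadoop solutions, mainly support, consulting services and training.

(2) In 2009 Doug Cutting, the creator of Hadoop, joined Cloudera. Cloudera's main products are CDH, Cloudera Manager and Cloudera Support.

(3) CDH is Cloudera's Hadoop distribution. It is fully open source and improves on Apache Hadoop in compatibility, security and stability. Cloudera's list price is US$10,000 per node per year.

(4) Cloudera Manager is a platform for distributing, managing and monitoring cluster software. It can deploy a Hadoop cluster within a few hours and monitor the cluster's nodes and services in real time.

3) Hortonworks Hadoop

Official site: https://hortonworks.com/products/data-center/hdp/

Downloads: https://hortonworks.com/downloads/#data-platform

(1) Hortonworks, founded in 2011, was a joint venture between Yahoo and the Silicon Valley venture capital firm Benchmark Capital.

(2) From the start the company took on roughly 25 to 30 Yahoo engineers who specialized in Hadoop. These engineers had been helping Yahoo develop Hadoop since 2005 and had contributed 80% of Hadoop's code.

(3) Hortonworks' flagship product was the Hortonworks Data Platform (HDP), which is likewise 100% open source. Besides the usual projects, HDP includes Ambari, an open-source installation and management system.

(4) Hortonworks has since been taken over by Cloudera (the merger was announced in 2018).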

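P011 [011_Atguigu_Hadoop_Intro_Advantages of Hadoop] 03:52

Advantages of Hadoop (the "four highs"):

- High reliability: Hadoop keeps multiple replicas of the data, so the failure of one storage element or node does not cause data loss.

- High scalability: tasks and data are distributed across the cluster, and nodes can easily be added.

- High efficiency: under the MapReduce model, Hadoop processes tasks in parallel.

- High fault tolerance: failed tasks are automatically reassigned.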

P012 [012_Atguigu_Hadoop_Intro_Differences Between Hadoop 1.x, 2.x and 3.x] 03:00

P013 [013_Atguigu_Hadoop_Intro_HDFS Overview] 06:26

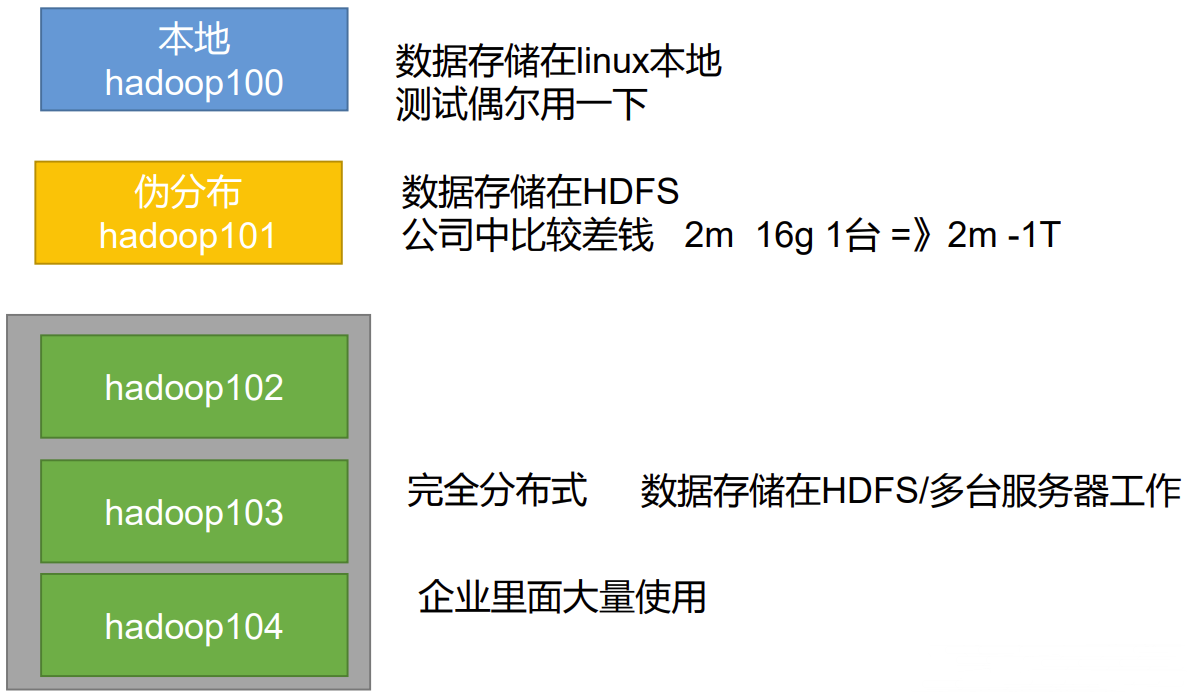

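HDFS (Hadoop Distributed File System) is a distributed file system.

- 1) NameNode (nn): stores file metadata. This covers file names, the directory structure and file attributes (creation time, replication factor, permissions), as well as each file's block list and the DataNodes that hold each block.

- 2) DataNode (dn): stores file block data, together with each block's checksum, on the local file system.

- 3) Secondary NameNode (2nn): periodically checkpoints (backs up) the NameNode's metadata.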
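Because the NameNode tracks every file as a list of blocks, it can help to see how many blocks a file turns into. The count is the file size divided by the block size, rounded up. The sketch below assumes the Hadoop 3.x default `dfs.blocksize` of 128 MiB (134217728 bytes), and the helper name `hdfs_block_count` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of HDFS blocks a file of a given size occupies.
# Assumes the default dfs.blocksize of 128 MiB unless a second argument is given.
hdfs_block_count() {
  local size_bytes=$1
  local block_bytes=${2:-134217728}   # 128 * 1024 * 1024
  if [ "$size_bytes" -eq 0 ]; then
    echo 0                            # an empty file has no blocks
    return
  fi
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (size_bytes + block_bytes - 1) / block_bytes ))
}

# A 300 MiB file -> 3 blocks (128 + 128 + 44 MiB)
hdfs_block_count $((300 * 1024 * 1024))
```

The last block is not padded. It only takes up as much disk space as the data it holds (44 MiB in the example).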

P014【014_尚硅谷_Hadoop_入门_YARN概述】06:35

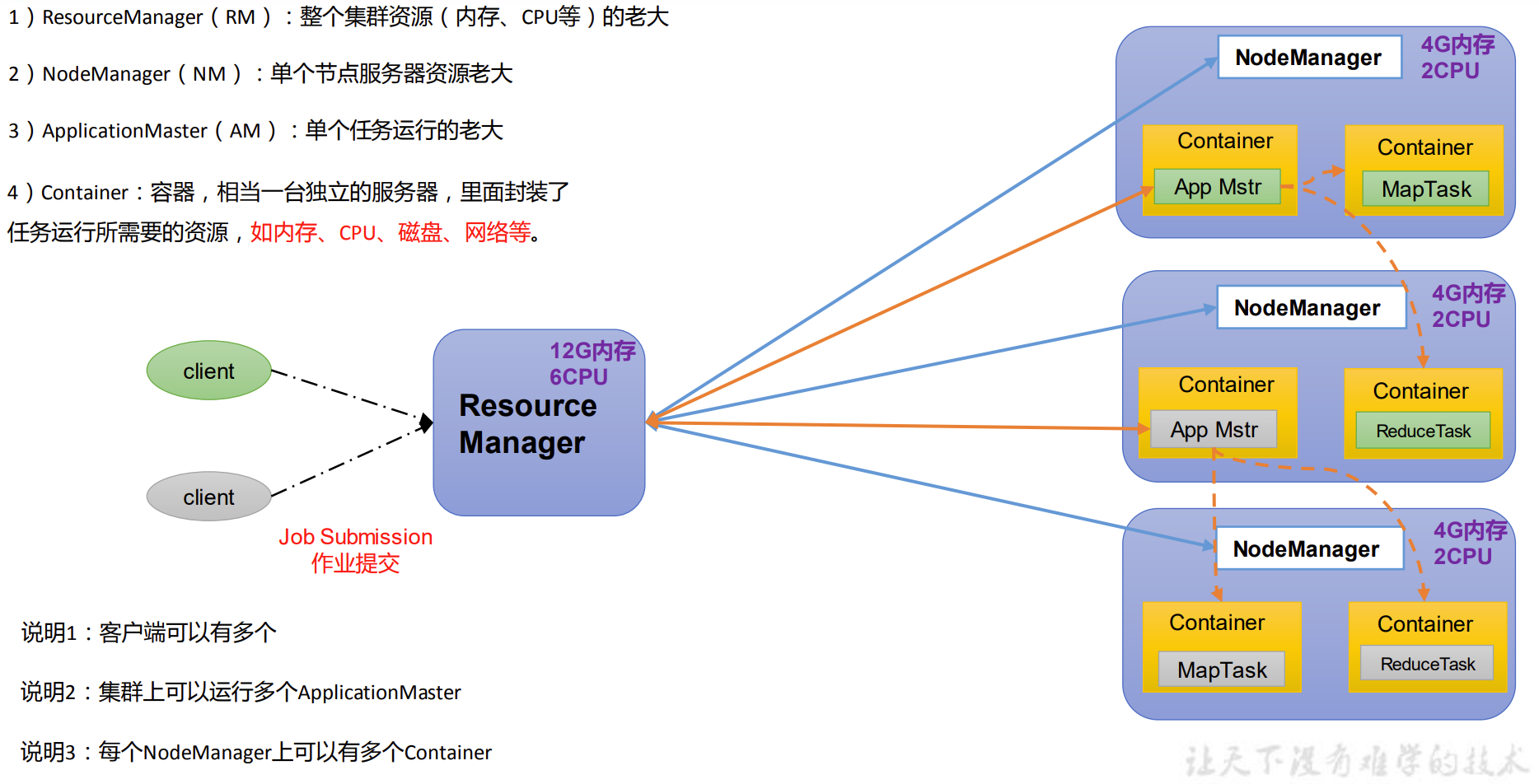

Yet Another Resource Negotiator 简称 YARN ,另一种资源协调者,是 Hadoop 的资源管理器。

P015【015_尚硅谷_Hadoop_入门_MapReduce概述】01:55

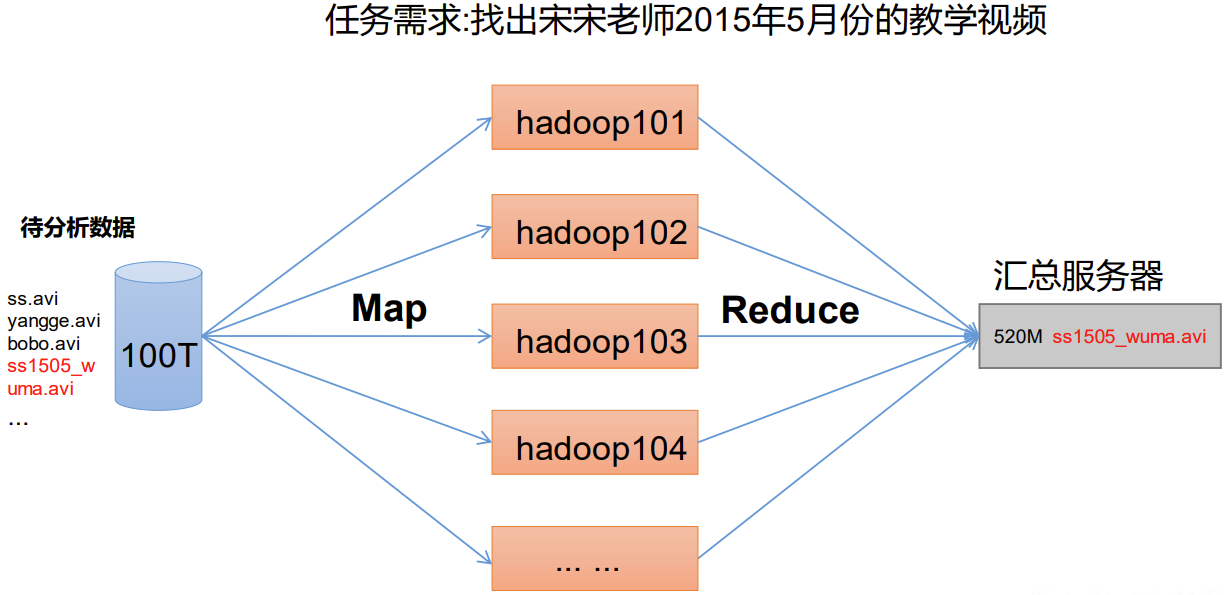

MapReduce 将计算过程分为两个阶段:Map 和 Reduce

- 1)Map 阶段并行处理输入数据

- 2)Reduce 阶段对 Map 结果进行汇总
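The two phases can be imitated on one machine with a shell pipeline. This is an analogy only, because Hadoop does not run jobs this way:

- `tr` plays Map by emitting one word per line.
- `sort` stands in for the shuffle that brings identical keys together.
- `uniq -c` plays Reduce by counting each group.

The function name `local_wordcount` is hypothetical. Its tab-separated `word<TAB>count` output mirrors the format that the bundled `wordcount` example writes to HDFS.

```shell
#!/usr/bin/env bash
# Hypothetical single-machine analogy of a MapReduce word count.
# Reads text on stdin and prints "word<TAB>count" lines sorted by word.
local_wordcount() {
  tr -s '[:space:]' '\n' |   # "Map": split the input into one word per line
    grep -v '^$' |           # drop the empty line that leading whitespace produces
    LC_ALL=C sort |          # "Shuffle": bring identical words together
    uniq -c |                # "Reduce": count each group of identical words
    awk '{print $2 "\t" $1}' # reorder to "word<TAB>count"
}

printf 'hello world\nhello hadoop\n' | local_wordcount
```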

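P016 [016_Atguigu_Hadoop_Intro_Relationship Between HDFS, YARN and MR] 03:22

- HDFS: distributed storage.
  - NameNode: keeps the metadata, that is, which DataNodes each block is stored on.
  - DataNode: stores the actual data blocks.
  - SecondaryNameNode: the NameNode's "secretary". It backs up NameNode metadata and can take over part of the work of recovering the NameNode.
- YARN: resource management for the whole cluster.
  - ResourceManager: manages the resources (CPU, memory) of the entire cluster.
  - NodeManager: manages the resources of a single node.
- MapReduce: the computation framework. It runs on resources allocated by YARN and reads and writes its data in HDFS.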

P017 [017_Atguigu_Hadoop_Intro_Big Data Technology Ecosystem] 09:17

Big data technology ecosystem

Recommendation system project framework

P018 [018_Atguigu_Hadoop_Intro_Installing VMware] 04:41

P019 [019_Atguigu_Hadoop_Intro_CentOS 7.5 Hardware and Software Setup] 15:56

P020 [020_Atguigu_Hadoop_Intro_IP and Hostname Configuration] 10:50

```shell
[root@hadoop100 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
[root@hadoop100 ~]# ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.88.133 netmask 255.255.255.0 broadcast 192.168.88.255
        inet6 fe80::363b:8659:c323:345d prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:0f:0a:6d txqueuelen 1000 (Ethernet)
        RX packets 684561 bytes 1003221355 (956.7 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 53538 bytes 3445292 (3.2 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 84 bytes 9492 (9.2 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 84 bytes 9492 (9.2 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
        ether 52:54:00:1c:3c:a9 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@hadoop100 ~]# systemctl restart network
[root@hadoop100 ~]# cat /etc/host
cat: /etc/host: 没有那个文件或目录
[root@hadoop100 ~]# cat /etc/hostname
hadoop100
[root@hadoop100 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
[root@hadoop100 ~]# vim /etc/hosts
[root@hadoop100 ~]# ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.88.100 netmask 255.255.255.0 broadcast 192.168.88.255
        inet6 fe80::363b:8659:c323:345d prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:0f:0a:6d txqueuelen 1000 (Ethernet)
        RX packets 684830 bytes 1003244575 (956.7 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 53597 bytes 3452600 (3.2 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 132 bytes 14436 (14.0 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 132 bytes 14436 (14.0 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
        ether 52:54:00:1c:3c:a9 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@hadoop100 ~]# ll
总用量 40
-rw-------. 1 root root 1973 3月 14 10:19 anaconda-ks.cfg
-rw-r--r--. 1 root root 2021 3月 14 10:26 initial-setup-ks.cfg
drwxr-xr-x. 2 root root 4096 3月 14 10:27 公共
drwxr-xr-x. 2 root root 4096 3月 14 10:27 模板
drwxr-xr-x. 2 root root 4096 3月 14 10:27 视频
drwxr-xr-x. 2 root root 4096 3月 14 10:27 图片
drwxr-xr-x. 2 root root 4096 3月 14 10:27 文档
drwxr-xr-x. 2 root root 4096 3月 14 10:27 下载
drwxr-xr-x. 2 root root 4096 3月 14 10:27 音乐
drwxr-xr-x. 2 root root 4096 3月 14 10:27 桌面
[root@hadoop100 ~]#
```

Contents of `/etc/sysconfig/network-scripts/ifcfg-ens33` after editing it with `vim`. `BOOTPROTO="static"` together with the `IPADDR`, `GATEWAY` and `DNS1` lines gives the VM a fixed address:

```shell
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="3241b48d-3234-4c23-8a03-b9b393a99a65"
DEVICE="ens33"
ONBOOT="yes"

IPADDR=192.168.88.100
GATEWAY=192.168.88.2
DNS1=192.168.88.2
```

Contents of `/etc/hosts` after editing it with `vim /etc/hosts`. Each hostname is mapped to its IP address:

```text
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.100 hadoop100
192.168.88.101 hadoop101
192.168.88.102 hadoop102
192.168.88.103 hadoop103
192.168.88.104 hadoop104
192.168.88.105 hadoop105
192.168.88.106 hadoop106
192.168.88.107 hadoop107
192.168.88.108 hadoop108

192.168.88.151 node1 node1.itcast.cn
192.168.88.152 node2 node2.itcast.cn
192.168.88.153 node3 node3.itcast.cn
```
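The entries for hadoop100 through hadoop108 follow a fixed pattern: the last octet of the IP equals the host number. They can therefore be generated instead of typed by hand. In this sketch the function name is hypothetical, and the 192.168.88.0/24 subnet is the one used in this tutorial. The output still has to be appended to `/etc/hosts` as root:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines "192.168.88.N hadoopN" for N in [first, last].
# Assumes the 192.168.88.0/24 subnet used in this tutorial.
gen_hadoop_hosts() {
  local first=$1 last=$2 n
  for n in $(seq "$first" "$last"); do
    printf '192.168.88.%d hadoop%d\n' "$n" "$n"
  done
}

gen_hadoop_hosts 100 108
# To install (as root): gen_hadoop_hosts 100 108 | sudo tee -a /etc/hosts
```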

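P021 [021_Atguigu_Hadoop_Intro_Xshell Remote Access Tool] 09:05

P022 [022_Atguigu_Hadoop_Intro_Finishing the Template VM] 12:25

Install the EPEL repository, then stop the firewall and disable it at boot:

```shell
yum install -y epel-release
systemctl stop firewalld
systemctl disable firewalld.service
```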

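P023 [023_Atguigu_Hadoop_Intro_Cloning Three VMs] 15:01

On each clone, change the IP address in `ifcfg-ens33` and the hostname in `/etc/hostname`, then reboot:

```shell
vim /etc/sysconfig/network-scripts/ifcfg-ens33
vim /etc/hostname
reboot
```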
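On each clone only `IPADDR` and the hostname change, so the IP edit can also be done non-interactively with `sed` (GNU `sed -i`, as shipped with CentOS). This is a sketch: the function name is hypothetical, and the demo runs against a throwaway copy rather than the live file. On a real clone you would point it at `/etc/sysconfig/network-scripts/ifcfg-ens33`, set the hostname (for example with `hostnamectl set-hostname hadoop102`), and reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the IPADDR line of an ifcfg file in place.
# Usage: set_ipaddr <ifcfg-file> <new-ip>
set_ipaddr() {
  local file=$1 ip=$2
  if grep -q '^IPADDR=' "$file"; then
    sed -i "s/^IPADDR=.*/IPADDR=$ip/" "$file"   # replace the existing line
  else
    echo "IPADDR=$ip" >> "$file"                # append one if it is missing
  fi
}

# Try it on a throwaway copy rather than the live config.
demo=$(mktemp)
printf 'BOOTPROTO="static"\nONBOOT="yes"\nIPADDR=192.168.88.100\nGATEWAY=192.168.88.2\n' > "$demo"
set_ipaddr "$demo" 192.168.88.102
grep '^IPADDR=' "$demo"   # IPADDR=192.168.88.102
```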

P024 [024_Atguigu_Hadoop_Intro_Installing the JDK] 07:02

Install the JDK on hadoop102, then copy it to hadoop103 and hadoop104.
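After the JDK is unpacked, it has to be put on the `PATH` of every node. The P028 transcript later distributes a file named `/etc/profile.d/my_env.sh`. The sketch below shows what that file could contain. It assumes the JDK was extracted under `/opt/module` (that directory appears in the transcript), but the directory name `jdk1.8.0_212` is an assumption, so substitute your own:

```shell
# /etc/profile.d/my_env.sh (sketch)
# JAVA_HOME is an assumed install location; adjust it to the real JDK directory.
export JAVA_HOME=/opt/module/jdk1.8.0_212
export PATH=$PATH:$JAVA_HOME/bin
```

After distributing the file (P028 does this with `sudo ./bin/xsync /etc/profile.d/my_env.sh`), run `source /etc/profile` and then `java -version` on each node to check the setup.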

P025 [025_Atguigu_Hadoop_Intro_Installing Hadoop] 07:20

Same procedure as in P024: install on hadoop102, then copy to hadoop103 and hadoop104.
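Hadoop is put on the `PATH` the same way as the JDK. You set `HADOOP_HOME` and add two directories: `bin` holds the client commands such as `hadoop` and `hdfs`, and `sbin` holds the daemon scripts such as `start-dfs.sh`. This sketch uses the install path that appears in the P026 transcript (`/export/server/hadoop-3.3.0`), so adjust it to your own location. Afterwards, `hadoop version` should print the installed version.

```shell
# Appended to /etc/profile.d/my_env.sh (sketch)
# HADOOP_HOME is taken from the P026 transcript; adjust it to where Hadoop was extracted.
export HADOOP_HOME=/export/server/hadoop-3.3.0
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
```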

P026【026_尚硅谷_Hadoop_入门_本地运行模式】11:56

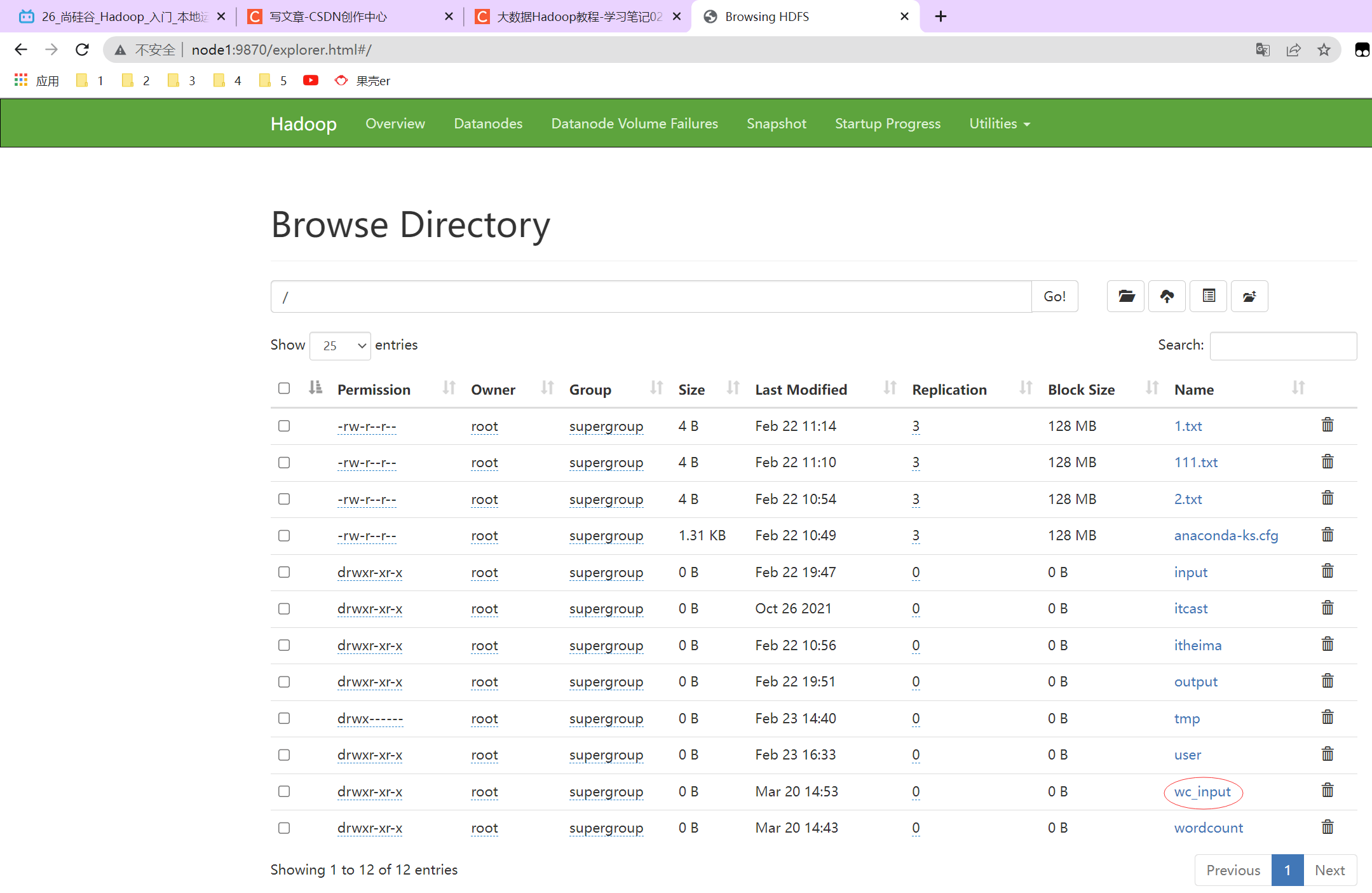

http://node1:9870/explorer.html#/

```shell
[root@node1 ~]# cd /export/server/hadoop-3.3.0/share/hadoop/mapreduce/
[root@node1 mapreduce]# hadoop jar hadoop-mapreduce-examples-3.3.0.jar wordcount /wordcount/input /wordcount/output
2023-03-20 14:43:07,516 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at node1/192.168.88.151:8032
2023-03-20 14:43:09,291 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1679293699463_0001
2023-03-20 14:43:11,916 INFO input.FileInputFormat: Total input files to process : 1
2023-03-20 14:43:12,313 INFO mapreduce.JobSubmitter: number of splits:1
2023-03-20 14:43:13,173 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1679293699463_0001
2023-03-20 14:43:13,173 INFO mapreduce.JobSubmitter: Executing with tokens: []
2023-03-20 14:43:14,684 INFO conf.Configuration: resource-types.xml not found
2023-03-20 14:43:14,684 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
2023-03-20 14:43:17,054 INFO impl.YarnClientImpl: Submitted application application_1679293699463_0001
2023-03-20 14:43:17,123 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1679293699463_0001/
2023-03-20 14:43:17,124 INFO mapreduce.Job: Running job: job_1679293699463_0001
2023-03-20 14:43:52,340 INFO mapreduce.Job: Job job_1679293699463_0001 running in uber mode : false
2023-03-20 14:43:52,360 INFO mapreduce.Job: map 0% reduce 0%
2023-03-20 14:44:08,011 INFO mapreduce.Job: map 100% reduce 0%
2023-03-20 14:44:16,986 INFO mapreduce.Job: map 100% reduce 100%
2023-03-20 14:44:18,020 INFO mapreduce.Job: Job job_1679293699463_0001 completed successfully
2023-03-20 14:44:18,579 INFO mapreduce.Job: Counters: 54
    File System Counters
        FILE: Number of bytes read=31
        FILE: Number of bytes written=529345
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=142
        HDFS: Number of bytes written=17
        HDFS: Number of read operations=8
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
        HDFS: Number of bytes read erasure-coded=0
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=11303
        Total time spent by all reduces in occupied slots (ms)=6220
        Total time spent by all map tasks (ms)=11303
        Total time spent by all reduce tasks (ms)=6220
        Total vcore-milliseconds taken by all map tasks=11303
        Total vcore-milliseconds taken by all reduce tasks=6220
        Total megabyte-milliseconds taken by all map tasks=11574272
        Total megabyte-milliseconds taken by all reduce tasks=6369280
    Map-Reduce Framework
        Map input records=2
        Map output records=5
        Map output bytes=53
        Map output materialized bytes=31
        Input split bytes=108
        Combine input records=5
        Combine output records=2
        Reduce input groups=2
        Reduce shuffle bytes=31
        Reduce input records=2
        Reduce output records=2
        Spilled Records=4
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=546
        CPU time spent (ms)=3680
        Physical memory (bytes) snapshot=499236864
        Virtual memory (bytes) snapshot=5568684032
        Total committed heap usage (bytes)=365953024
        Peak Map Physical memory (bytes)=301096960
        Peak Map Virtual memory (bytes)=2779201536
        Peak Reduce Physical memory (bytes)=198139904
        Peak Reduce Virtual memory (bytes)=2789482496
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=34
    File Output Format Counters
        Bytes Written=17
[root@node1 mapreduce]#

[root@node1 mapreduce]# hadoop jar hadoop-mapreduce-examples-3.3.0.jar wordcount /wc_input /wc_output
2023-03-20 15:01:48,007 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at node1/192.168.88.151:8032
2023-03-20 15:01:49,475 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1679293699463_0002
2023-03-20 15:01:50,522 INFO input.FileInputFormat: Total input files to process : 1
2023-03-20 15:01:51,010 INFO mapreduce.JobSubmitter: number of splits:1
2023-03-20 15:01:51,894 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1679293699463_0002
2023-03-20 15:01:51,894 INFO mapreduce.JobSubmitter: Executing with tokens: []
2023-03-20 15:01:52,684 INFO conf.Configuration: resource-types.xml not found
2023-03-20 15:01:52,687 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
2023-03-20 15:01:53,237 INFO impl.YarnClientImpl: Submitted application application_1679293699463_0002
2023-03-20 15:01:53,487 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1679293699463_0002/
2023-03-20 15:01:53,492 INFO mapreduce.Job: Running job: job_1679293699463_0002
2023-03-20 15:02:15,329 INFO mapreduce.Job: Job job_1679293699463_0002 running in uber mode : false
2023-03-20 15:02:15,342 INFO mapreduce.Job: map 0% reduce 0%
2023-03-20 15:02:26,652 INFO mapreduce.Job: map 100% reduce 0%
2023-03-20 15:02:40,297 INFO mapreduce.Job: map 100% reduce 100%
2023-03-20 15:02:41,350 INFO mapreduce.Job: Job job_1679293699463_0002 completed successfully
2023-03-20 15:02:41,557 INFO mapreduce.Job: Counters: 54
    File System Counters
        FILE: Number of bytes read=60
        FILE: Number of bytes written=529375
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=149
        HDFS: Number of bytes written=38
        HDFS: Number of read operations=8
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
        HDFS: Number of bytes read erasure-coded=0
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=8398
        Total time spent by all reduces in occupied slots (ms)=9720
        Total time spent by all map tasks (ms)=8398
        Total time spent by all reduce tasks (ms)=9720
        Total vcore-milliseconds taken by all map tasks=8398
        Total vcore-milliseconds taken by all reduce tasks=9720
        Total megabyte-milliseconds taken by all map tasks=8599552
        Total megabyte-milliseconds taken by all reduce tasks=9953280
    Map-Reduce Framework
        Map input records=4
        Map output records=6
        Map output bytes=69
        Map output materialized bytes=60
        Input split bytes=100
        Combine input records=6
        Combine output records=4
        Reduce input groups=4
        Reduce shuffle bytes=60
        Reduce input records=4
        Reduce output records=4
        Spilled Records=8
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=1000
        CPU time spent (ms)=3880
        Physical memory (bytes) snapshot=503771136
        Virtual memory (bytes) snapshot=5568987136
        Total committed heap usage (bytes)=428343296
        Peak Map Physical memory (bytes)=303013888
        Peak Map Virtual memory (bytes)=2782048256
        Peak Reduce Physical memory (bytes)=200757248
        Peak Reduce Virtual memory (bytes)=2786938880
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=49
    File Output Format Counters
        Bytes Written=38
[root@node1 mapreduce]# pwd
/export/server/hadoop-3.3.0/share/hadoop/mapreduce
[root@node1 mapreduce]#
```
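Both runs above follow the same pattern: upload a text file to an HDFS input directory, run the bundled `wordcount` example, and read the result. The job fails if the output directory already exists, which is why each run uses a fresh output path. The sketch below wraps that sequence. The function name and the `DRY_RUN` switch are hypothetical (with `DRY_RUN=1` the function only prints the commands), and the default jar location is the one from the transcript.

```shell
#!/usr/bin/env bash
# Hypothetical helper around the bundled MapReduce examples jar.
# Usage: run_wordcount <local-file> <hdfs-input-dir> <hdfs-output-dir>
# Set DRY_RUN=1 to print the commands instead of executing them.
run_wordcount() {
  local local_file=$1 in_dir=$2 out_dir=$3 run=""
  local jar="${HADOOP_HOME:-/export/server/hadoop-3.3.0}/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar"
  if [ -n "${DRY_RUN:-}" ]; then run="echo"; fi

  $run hdfs dfs -mkdir -p "$in_dir"                       # create the input directory
  $run hdfs dfs -put -f "$local_file" "$in_dir"           # upload, overwriting if present
  $run hadoop jar "$jar" wordcount "$in_dir" "$out_dir"   # out_dir must NOT exist yet
  $run hdfs dfs -cat "$out_dir/part-r-00000"              # print "word<TAB>count" lines
}
```

For example, `DRY_RUN=1 run_wordcount words.txt /wc_input /wc_output` prints the four commands without touching the cluster.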

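P027 [027_Atguigu_Hadoop_Intro_scp & rsync Commands] 15:01

Use scp for the first synchronization and rsync for every later one.

rsync is mainly used for backups and mirroring. It is fast, avoids copying content that is already identical, and supports symbolic links.

Difference between rsync and scp: rsync copies files faster than scp because it only updates the files that differ, whereas scp copies every file each time.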
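The difference can be seen locally. A second `rsync` of an unchanged directory transfers nothing, whereas `scp -r` would copy everything again. This sketch uses throwaway temporary directories, so no remote host is needed. Two flags matter here:

- `-a` preserves timestamps (among other attributes). That is what lets rsync's size-and-modification-time check recognize unchanged files on the next run.
- `-i` lists each item that changes.

```shell
#!/usr/bin/env bash
# Demonstrates rsync's incremental behaviour on two local directories.
src=$(mktemp -d)
dst=$(mktemp -d)
echo "hello" > "$src/a.txt"

# First run: a.txt is new, so it is transferred and listed.
first=$(rsync -ai "$src/" "$dst/")
# Second run: size and mtime already match, so nothing is transferred or listed.
second=$(rsync -ai "$src/" "$dst/")

echo "first run:";  echo "$first"
echo "second run:"; echo "${second:-<nothing transferred>}"
```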

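P028 [028_Atguigu_Hadoop_Intro_xsync Distribution Script] 18:14

Copy and synchronization commands:

- scp (secure copy): secure copying

- rsync: remote synchronization tool

- xsync: a custom cluster distribution script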

The `dirname` command strips the file name from a path and prints only the directory part:

```shell
[root@node1 ~]# dirname /home/atguigu/a.txt
/home/atguigu
```

The `basename` command prints only the file name:

```shell
[root@node1 atguigu]# basename /home/atguigu/a.txt
a.txt
[root@node1 atguigu]#
```
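The xsync script combines these two commands with `cd -P ...; pwd`. This turns whatever path it is given into an absolute parent directory with symbolic links resolved, so the same path can be recreated on the remote host. The sketch below shows that idiom, wrapped in a hypothetical `split_path` function and tried on a scratch directory that contains a symbolic link:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the physical (symlink-resolved) parent directory
# and the file name of a path, the same way xsync computes pdir and fname.
split_path() {
  local file=$1 pdir fname
  pdir=$(cd -P "$(dirname "$file")" && pwd)   # -P resolves symlinks in the directory part
  fname=$(basename "$file")
  printf '%s %s\n' "$pdir" "$fname"
}

base=$(cd -P "$(mktemp -d)" && pwd)   # physical path of a scratch directory
mkdir -p "$base/real"
touch "$base/real/a.txt"
ln -s "$base/real" "$base/link"       # link -> real

cd "$base" && split_path link/a.txt   # prints "<base>/real a.txt"
```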

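The xsync script, saved as `~/bin/xsync` (the transcript below shows it in `/home/atguigu/bin`):

```bash
#!/bin/bash

#1. Check the number of arguments
if [ $# -lt 1 ]
then
    echo "Not Enough Arguments!"
    exit 1
fi

#2. Loop over every machine in the cluster
#   (replace with your own hostnames; the runs below used node1 node2 node3)
for host in hadoop102 hadoop103 hadoop104
do
    echo ==================== $host ====================
    #3. Loop over all files/directories and send them one by one
    for file in "$@"
    do
        #4. Check that the file exists
        if [ -e "$file" ]
        then
            #5. Get the absolute parent directory (-P resolves symlinks)
            pdir=$(cd -P "$(dirname "$file")" && pwd)

            #6. Get the name of the current file
            fname=$(basename "$file")
            ssh "$host" "mkdir -p \"$pdir\""
            rsync -av "$pdir/$fname" "$host:$pdir"
        else
            echo "$file does not exist!"
        fi
    done
done
```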

```shell
[root@node1 bin]# chmod 777 xsync
[root@node1 bin]# ll
总用量 4
-rwxrwxrwx 1 atguigu atguigu 727 3月 20 16:00 xsync
[root@node1 bin]# cd ..
[root@node1 atguigu]# xsync bin/
==================== node1 ====================
sending incremental file list

sent 94 bytes received 17 bytes 222.00 bytes/sec
total size is 727 speedup is 6.55
==================== node2 ====================
sending incremental file list
bin/
bin/xsync

sent 871 bytes received 39 bytes 606.67 bytes/sec
total size is 727 speedup is 0.80
==================== node3 ====================
sending incremental file list
bin/
bin/xsync

sent 871 bytes received 39 bytes 1,820.00 bytes/sec
total size is 727 speedup is 0.80
[root@node1 atguigu]# pwd
/home/atguigu
[root@node1 atguigu]# ls -al
总用量 20
drwx------ 6 atguigu atguigu 168 3月 20 15:56 .
drwxr-xr-x. 6 root root 56 3月 20 10:08 ..
-rw-r--r-- 1 root root 0 3月 20 15:44 a.txt
-rw------- 1 atguigu atguigu 21 3月 20 11:48 .bash_history
-rw-r--r-- 1 atguigu atguigu 18 8月 8 2019 .bash_logout
-rw-r--r-- 1 atguigu atguigu 193 8月 8 2019 .bash_profile
-rw-r--r-- 1 atguigu atguigu 231 8月 8 2019 .bashrc
drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin
drwxrwxr-x 3 atguigu atguigu 18 3月 20 10:17 .cache
drwxrwxr-x 3 atguigu atguigu 18 3月 20 10:17 .config
drwxr-xr-x 4 atguigu atguigu 39 3月 10 20:04 .mozilla
-rw------- 1 atguigu atguigu 1261 3月 20 15:56 .viminfo
[root@node1 atguigu]#
```

When the script runs as the atguigu user, each host first asks to confirm its host key. It then prompts for the password twice, once for the script's `ssh` call and once for its `rsync` call. Passwordless SSH (P029) removes these prompts:

```shell
连接成功
Last login: Mon Mar 20 16:01:40 2023
[root@node1 ~]# su atguigu
[atguigu@node1 root]$ cd /home/atguigu/
[atguigu@node1 ~]$ pwd
/home/atguigu
[atguigu@node1 ~]$ xsync bin/
==================== node1 ====================
The authenticity of host 'node1 (192.168.88.151)' can't be established.
ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.
ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node1,192.168.88.151' (ECDSA) to the list of known hosts.
atguigu@node1's password:
atguigu@node1's password:
sending incremental file list
sent 98 bytes received 17 bytes 17.69 bytes/sec
total size is 727 speedup is 6.32
==================== node2 ====================
The authenticity of host 'node2 (192.168.88.152)' can't be established.
ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.
ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node2,192.168.88.152' (ECDSA) to the list of known hosts.
atguigu@node2's password:
atguigu@node2's password:
sending incremental file list

sent 94 bytes received 17 bytes 44.40 bytes/sec
total size is 727 speedup is 6.55
==================== node3 ====================
The authenticity of host 'node3 (192.168.88.153)' can't be established.
ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.
ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node3,192.168.88.153' (ECDSA) to the list of known hosts.
atguigu@node3's password:
atguigu@node3's password:
sending incremental file list
sent 94 bytes received 17 bytes 44.40 bytes/sec
total size is 727 speedup is 6.55
[atguigu@node1 ~]$
```

```shell
连接成功
Last login: Mon Mar 20 17:22:20 2023 from 192.168.88.151
[root@node2 ~]# su atguigu
[atguigu@node2 root]$ vim /etc/sudoers
您在 /var/spool/mail/root 中有新邮件
[atguigu@node2 root]$ su root
密码:
[root@node2 ~]# vim /etc/sudoers
[root@node2 ~]# cd /opt/
[root@node2 opt]# ll
总用量 0
drwxr-xr-x 4 atguigu atguigu 46 3月 20 11:32 module
drwxr-xr-x. 2 root root 6 10月 31 2018 rh
drwxr-xr-x 2 atguigu atguigu 67 3月 20 10:47 software
[root@node2 opt]# su atguigu
[atguigu@node2 opt]$ cd /home/atguigu/
[atguigu@node2 ~]$ llk
bash: llk: 未找到命令
[atguigu@node2 ~]$ ll
总用量 0
drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin
[atguigu@node2 ~]$ cd ~
您在 /var/spool/mail/root 中有新邮件
[atguigu@node2 ~]$ ll
总用量 0
drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin
[atguigu@node2 ~]$ ll
总用量 0
drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin
您在 /var/spool/mail/root 中有新邮件
[atguigu@node2 ~]$ cd bin
[atguigu@node2 bin]$ ll
总用量 4
-rwxrwxrwx 1 atguigu atguigu 727 3月 20 16:00 xsync
[atguigu@node2 bin]$
```

```shell
连接成功
Last login: Mon Mar 20 17:22:26 2023 from 192.168.88.152
[root@node3 ~]# vim /etc/sudoers
您在 /var/spool/mail/root 中有新邮件
[root@node3 ~]# cd /opt/
[root@node3 opt]# ll
总用量 0
drwxr-xr-x 4 atguigu atguigu 46 3月 20 11:32 module
drwxr-xr-x. 2 root root 6 10月 31 2018 rh
drwxr-xr-x 2 atguigu atguigu 67 3月 20 10:47 software
[root@node3 opt]# cd ~
您在 /var/spool/mail/root 中有新邮件
[root@node3 ~]# ll
总用量 4
-rw-------. 1 root root 1340 9月 11 2020 anaconda-ks.cfg
-rw------- 1 root root 0 2月 23 16:20 nohup.out
[root@node3 ~]# ll
总用量 4
-rw-------. 1 root root 1340 9月 11 2020 anaconda-ks.cfg
-rw------- 1 root root 0 2月 23 16:20 nohup.out
您在 /var/spool/mail/root 中有新邮件
[root@node3 ~]# cd ~
[root@node3 ~]# ll
总用量 4
-rw-------. 1 root root 1340 9月 11 2020 anaconda-ks.cfg
-rw------- 1 root root 0 2月 23 16:20 nohup.out
[root@node3 ~]# su atguigu
[atguigu@node3 root]$ cd ~
[atguigu@node3 ~]$ ls
bin
[atguigu@node3 ~]$ ll
总用量 0
drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin
[atguigu@node3 ~]$ cd bin
[atguigu@node3 bin]$ ll
总用量 4
-rwxrwxrwx 1 atguigu atguigu 727 3月 20 16:00 xsync
[atguigu@node3 bin]$
```


Distributing `/etc/profile.d/my_env.sh` as the atguigu user fails on node2 and node3 with `Permission denied`, because an ordinary user cannot write into `/etc/profile.d`. Running the script through `sudo` succeeds:

```shell
[atguigu@node1 ~]$ xsync /etc/profile.d/my_env.sh
==================== node1 ====================
atguigu@node1's password:
atguigu@node1's password:
sending incremental file list

sent 48 bytes received 12 bytes 13.33 bytes/sec
total size is 223 speedup is 3.72
==================== node2 ====================
atguigu@node2's password:
atguigu@node2's password:
sending incremental file list
my_env.sh
rsync: mkstemp "/etc/profile.d/.my_env.sh.guTzvB" failed: Permission denied (13)

sent 95 bytes received 126 bytes 88.40 bytes/sec
total size is 223 speedup is 1.01
rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1178) [sender=3.1.2]
==================== node3 ====================
atguigu@node3's password:
atguigu@node3's password:
sending incremental file list
my_env.sh
rsync: mkstemp "/etc/profile.d/.my_env.sh.evDUZa" failed: Permission denied (13)

sent 95 bytes received 126 bytes 88.40 bytes/sec
total size is 223 speedup is 1.01
rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1178) [sender=3.1.2]
[atguigu@node1 ~]$ sudo ./bin/xsync /etc/profile.d/my_env.sh
==================== node1 ====================
sending incremental file list

sent 48 bytes received 12 bytes 120.00 bytes/sec
total size is 223 speedup is 3.72
==================== node2 ====================
sending incremental file list
my_env.sh

sent 95 bytes received 41 bytes 272.00 bytes/sec
total size is 223 speedup is 1.64
==================== node3 ====================
sending incremental file list
my_env.sh

sent 95 bytes received 41 bytes 272.00 bytes/sec
total size is 223 speedup is 1.64
[atguigu@node1 ~]$
```

P029【029_尚硅谷_Hadoop_入门_ssh免密登录】11:25

- 连接成功

- Last login: Mon Mar 20 19:14:44 2023 from 192.168.88.1

- [root@node1 ~]# su atguigu

- [atguigu@node1 root]$ pwd

- /root

- [atguigu@node1 root]$ cd ~

- [atguigu@node1 ~]$ pwd

- /home/atguigu

- [atguigu@node1 ~]$ ls -al

- 总用量 20

- drwx------ 7 atguigu atguigu 180 3月 20 19:22 .

- drwxr-xr-x. 6 root root 56 3月 20 10:08 ..

- -rw-r--r-- 1 root root 0 3月 20 15:44 a.txt

- -rw------- 1 atguigu atguigu 391 3月 20 19:36 .bash_history

- -rw-r--r-- 1 atguigu atguigu 18 8月 8 2019 .bash_logout

- -rw-r--r-- 1 atguigu atguigu 193 8月 8 2019 .bash_profile

- -rw-r--r-- 1 atguigu atguigu 231 8月 8 2019 .bashrc

- drwxrwxr-x 2 atguigu atguigu 19 3月 20 15:56 bin

- drwxrwxr-x 3 atguigu atguigu 18 3月 20 10:17 .cache

- drwxrwxr-x 3 atguigu atguigu 18 3月 20 10:17 .config

- drwxr-xr-x 4 atguigu atguigu 39 3月 10 20:04 .mozilla

- drwx------ 2 atguigu atguigu 25 3月 20 19:22 .ssh

- -rw------- 1 atguigu atguigu 1261 3月 20 15:56 .viminfo

- [atguigu@node1 ~]$ cd .ssh

- [atguigu@node1 .ssh]$ ll

- 总用量 4

- -rw-r--r-- 1 atguigu atguigu 546 3月 20 19:23 known_hosts

- [atguigu@node1 .ssh]$ cat known_hosts

- node1,192.168.88.151 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBBH5t/7/J0WwO0GTeNpg3EjfM5PjoppHMfq+wCWp46lhQ/B6O6kTOdx+2mEZu9QkAJk9oM4RGqiZKA5vmifHkQQ=

- node2,192.168.88.152 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBBH5t/7/J0WwO0GTeNpg3EjfM5PjoppHMfq+wCWp46lhQ/B6O6kTOdx+2mEZu9QkAJk9oM4RGqiZKA5vmifHkQQ=

- node3,192.168.88.153 ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBBH5t/7/J0WwO0GTeNpg3EjfM5PjoppHMfq+wCWp46lhQ/B6O6kTOdx+2mEZu9QkAJk9oM4RGqiZKA5vmifHkQQ=

- [atguigu@node1 .ssh]$ ssh-keygen -t rsa

- Generating public/private rsa key pair.

- Enter file in which to save the key (/home/atguigu/.ssh/id_rsa):

- Enter passphrase (empty for no passphrase):

- Enter same passphrase again:

- Your identification has been saved in /home/atguigu/.ssh/id_rsa.

- Your public key has been saved in /home/atguigu/.ssh/id_rsa.pub.

- The key fingerprint is:

- SHA256:CBFD39JBRh/GgmTTIsC+gJVjWDQWFyE5riX287PXfzc atguigu@node1.itcast.cn

- The key's randomart image is:

- +---[RSA 2048]----+

- | =O*O=+==.o |

- |..O+.=o=o+.. |

- |.= o..o.o.. |

- |+.+ . o |

- |.=.. . S |

- |. .o |

- | o . |

- | o . . . E |

- | .+ ... . .|

- +----[SHA256]-----+

- [atguigu@node1 .ssh]$

- [atguigu@node1 .ssh]$ ll

- 总用量 12

- -rw------- 1 atguigu atguigu 1679 3月 20 19:40 id_rsa

- -rw-r--r-- 1 atguigu atguigu 405 3月 20 19:40 id_rsa.pub

- -rw-r--r-- 1 atguigu atguigu 546 3月 20 19:23 known_hosts

- [atguigu@node1 .ssh]$ cat id_rsa

- -----BEGIN RSA PRIVATE KEY-----

- MIIEpQIBAAKCAQEA0a3S5+QcIvjewfOYIHTlnlzbQgV7Y92JC6ZMt1XGklva53rw

- CEhf9yvfcM1VTBDNYHyvl6+rTKKU8EHTfpEoYa5blB299ZfKkM4OxPkcE9nz7uTN

- TyYF84wAR1IIEtT1bLVJPyh/Hvh8ye6UMj1PhZIGflNjbGwYkJoDK3wXxwaD4xey

- Y0zVCgL7QDqND0Iw8XQrCSQ8cQVgbBxprUYu97+n/7GOs0WASC6gtrW9IksxHtSB

- pI6ieKVzv9fuWcbhb8C5w7BqdVU6jrKqo7FnQaDKBNdC4weOku4TA+beHpc4W3p8

- f8b+b3U+A0qOj+uRVX7uDoxuunH4xAjqn8TmPQIDAQABAoIBAQCFl0UHn6tZkMyE

- MApdq3zcj/bWMp3x+6SkOnkoWcshVsq6rvYdoNcbqOU8fmZ5Bz+C2Q4bC76NHgzc

- omP4gM2Eps0MKoLr5aEW72Izly+Pak7jhv1UDzq9eBZ5WkdwkCQp9brMNaYAensv

- QQVEmRGAXZArjj+LRbfE8YtReke/8jxyJlRxmVrq+A0a6VAAdOSL/71EJZ9+zJy/

- SpN3UlZj27LndYIaOIsQ/vnhTrtb75l4VH24UNhHzJQv1PcBSUrSVOEWrIq/sOzU

- b4RW3Fuo51ZLB9ysvxZd5KnwC+yX63XKf8IJqfpWt1KrJ3IV6acvs1UEU+DELfUY

- b7v0GkhhAoGBAOuswY5qI0zUiBSEGxysDml5ZG9n4i2JnzmKmnVMAGwQq7ZzUv0o

- VwObDmFgp+A8NDAstxR6My5kKky2MOSv/ckJdAEzY9iVI3vXtkT54HYhHstIzNYg

- ube1MylcLUttaR/OpbJpyN8BavTQEtydJP7Xchorw6DaZOGLhWjX8EjpAoGBAOPD

- IVSfi+51s9h5cIDvvm6IiKDn05kf8D/VrD3awm/hrQrRwF3ouD6JBr/T9OfWqh1W

- v9xjn5uurTflO8CZOU91VB/nihXxN0pT6CREi8/I9QSAZbrCkCIWZ6ku7seyEZg6

- fp756zCyVeKNSZPpDbKH5LCSyafkroZBxcZKFp41AoGAXff0+SbiyliXpa6C7OzB

- llabsDv4l/Wesh/MtGZIaM5A2S+kcGJsR3jExBj49tSqbmb13MlYrO+tWgbu+dAe

- XdFSGsR11D6q9k8tUtVbJV7RW3a8jchgpJowOxaQzNlkKBWKRdgeCqUTE2f/jU1v

- Gdmnmj3G89UAklnCKOqo2TkCgYEAuGBVEgkaIQ7daQdd4LKzaQ1T9VXWAGZPeY2C

- oov9zM5W46RK4nqq88y/TvjJkAhBrAB2znVDVqcACHikd1RShZVIZY9tRDgB90SX

- bwyiVbGrT1qVf6tTPJUAk3+vwq7O+XmY2R8dmk0zo3OWtYr7EKRbp+kcH7LK6VpD

- PTLqvmUCgYEAt8rZWnAjGiipc/lLHMkoeKMK+JvA42HETVxQkdG17hTRzrotMMaF

- CajslMcQ9m+ALHko2uyvsHVOdm66tQO65IKr5iavpcq8ZHKh51jJPdJpQwAJE9vr

- d4ASXHEESfNK5/YPzMAIy019lgJal4bsy8tE8i6LIv6/PHVhNDs3Rsg=

- -----END RSA PRIVATE KEY-----

- [atguigu@node1 .ssh]$ cat id_rsa.pub

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRrdLn5Bwi+N7B85ggdOWeXNtCBXtj3YkLpky3VcaSW9rnevAISF/3K99wzVVMEM1gfK+Xr6tMopTwQdN+kShhrluUHb31l8qQzg7E+RwT2fPu5M1PJgXzjABHUggS1PVstUk/KH8e+HzJ7pQyPU+FkgZ+U2NsbBiQmgMrfBfHBoPjF7JjTNUKAvtAOo0PQjDxdCsJJDxxBWBsHGmtRi73v6f/sY6zRYBILqC2tb0iSzEe1IGkjqJ4pXO/1+5ZxuFvwLnDsGp1VTqOsqqjsWdBoMoE10LjB46S7hMD5t4elzhbenx/xv5vdT4DSo6P65FVfu4OjG66cfjECOqfxOY9 atguigu@node1.itcast.cn

- [atguigu@node1 .ssh]$ ssh-copy-id node2

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/atguigu/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- atguigu@node2's password:

- Permission denied, please try again.

- atguigu@node2's password:

- Number of key(s) added: 1

- Now try logging into the machine, with: "ssh 'node2'"

- and check to make sure that only the key(s) you wanted were added.

- [atguigu@node1 .ssh]$ ssh node2

- Last login: Mon Mar 20 19:37:14 2023

- [atguigu@node2 ~]$ hostname

- node2.itcast.cn

- [atguigu@node2 ~]$ exit

- logout

- Connection to node2 closed.

- [atguigu@node1 .ssh]$ ssh-copy-id node3

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/atguigu/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- atguigu@node3's password:

-

- Number of key(s) added: 1

-

- Now try logging into the machine, with: "ssh 'node3'"

- and check to make sure that only the key(s) you wanted were added.

-

- [atguigu@node1 .ssh]$ ssh node3

- Last login: Mon Mar 20 19:37:33 2023

- [atguigu@node3 ~]$ hostname

- node3.itcast.cn

- [atguigu@node3 ~]$ exit

- logout

- Connection to node3 closed.

- [atguigu@node1 .ssh]$ ssh node1

- atguigu@node1's password:

- Last login: Mon Mar 20 19:36:46 2023

- [atguigu@node1 ~]$ exit

- logout

- Connection to node1 closed.

- [atguigu@node1 .ssh]$ ssh-copy-id node1

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/atguigu/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- atguigu@node1's password:

-

- Number of key(s) added: 1

-

- Now try logging into the machine, with: "ssh 'node1'"

- and check to make sure that only the key(s) you wanted were added.

-

- [atguigu@node1 .ssh]$ ll

- total 16

- -rw------- 1 atguigu atguigu 405 Mar 20 19:45 authorized_keys

- -rw------- 1 atguigu atguigu 1679 Mar 20 19:40 id_rsa

- -rw-r--r-- 1 atguigu atguigu 405 Mar 20 19:40 id_rsa.pub

- -rw-r--r-- 1 atguigu atguigu 546 Mar 20 19:23 known_hosts

- [atguigu@node1 .ssh]$ cat authorized_keys

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRrdLn5Bwi+N7B85ggdOWeXNtCBXtj3YkLpky3VcaSW9rnevAISF/3K99wzVVMEM1gfK+Xr6tMopTwQdN+kShhrluUHb31l8qQzg7E+RwT2fPu5M1PJgXzjABHUggS1PVstUk/KH8e+HzJ7pQyPU+FkgZ+U2NsbBiQmgMrfBfHBoPjF7JjTNUKAvtAOo0PQjDxdCsJJDxxBWBsHGmtRi73v6f/sY6zRYBILqC2tb0iSzEe1IGkjqJ4pXO/1+5ZxuFvwLnDsGp1VTqOsqqjsWdBoMoE10LjB46S7hMD5t4elzhbenx/xv5vdT4DSo6P65FVfu4OjG66cfjECOqfxOY9 atguigu@node1.itcast.cn

- [atguigu@node1 .ssh]$ pwd

- /home/atguigu/.ssh

- [atguigu@node1 .ssh]$ su root

- Password:

- [root@node1 .ssh]# ll

- total 16

- -rw------- 1 atguigu atguigu 810 Mar 20 19:51 authorized_keys

- -rw------- 1 atguigu atguigu 1679 Mar 20 19:40 id_rsa

- -rw-r--r-- 1 atguigu atguigu 405 Mar 20 19:40 id_rsa.pub

- -rw-r--r-- 1 atguigu atguigu 546 Mar 20 19:23 known_hosts

- [root@node1 .ssh]# ssh-keygen -t rsa

- Generating public/private rsa key pair.

- Enter file in which to save the key (/root/.ssh/id_rsa):

- /root/.ssh/id_rsa already exists.

- Overwrite (y/n)?

- [root@node1 .ssh]# ssh-copy-id node1

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

-

- /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

- (if you think this is a mistake, you may want to use -f option)

-

- [root@node1 .ssh]# ssh-copy-id node2

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

-

- /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

- (if you think this is a mistake, you may want to use -f option)

-

- [root@node1 .ssh]# ssh-copy-id node3

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

-

- /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

- (if you think this is a mistake, you may want to use -f option)

-

- [root@node1 .ssh]# su atguigu

- [atguigu@node1 .ssh]$ cd ~

- [atguigu@node1 ~]$ xsync hello.txt

- ==================== node1 ====================

- hello.txt does not exists!

- ==================== node2 ====================

- hello.txt does not exists!

- ==================== node3 ====================

- hello.txt does not exists!

- [atguigu@node1 ~]$ pwd

- /home/atguigu

- [atguigu@node1 ~]$ cd /home/atguigu/

- [atguigu@node1 ~]$ xsync hello.txt

- ==================== node1 ====================

- hello.txt does not exists!

- ==================== node2 ====================

- hello.txt does not exists!

- ==================== node3 ====================

- hello.txt does not exists!

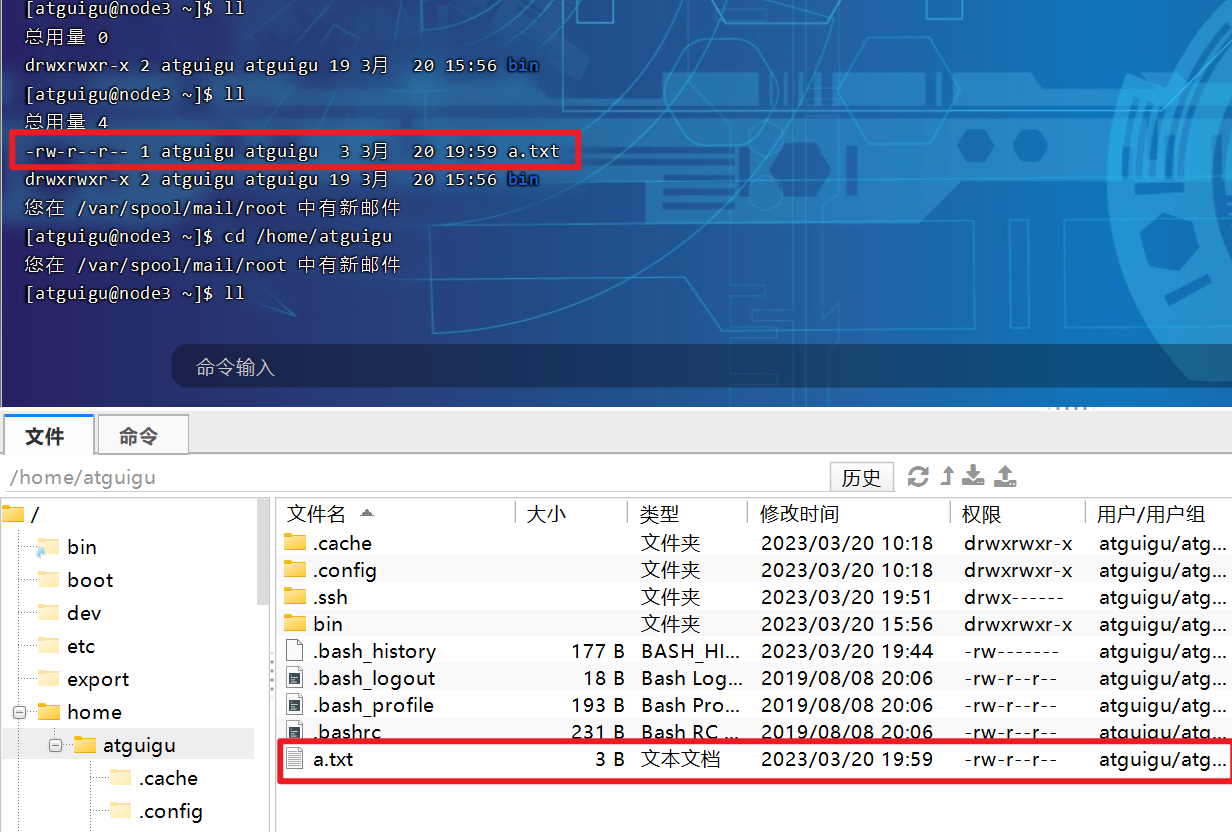
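On node1 the procedure above comes down to two steps per user. First, generate a key pair once with `ssh-keygen -t rsa`. Then run `ssh-copy-id` against every node, including node1 itself. Note that `ssh node1` still asked for a password until `ssh-copy-id node1` had run. Below is a minimal bash sketch of that loop. The function name `distribute_key` is hypothetical. The empty passphrase (`-N ''`) is an assumption that matches pressing Enter at every prompt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair for the current user if none
# exists, then install the public key on every host given as an argument.
distribute_key() {
  local key="$HOME/.ssh/id_rsa" host failed=0
  if [ ! -f "$key" ]; then
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    # -N '' gives an empty passphrase, like pressing Enter at each prompt.
    ssh-keygen -t rsa -N '' -f "$key" -q || return 1
  fi
  for host in "$@"; do
    # Asks once for the remote password, then appends the public key to
    # ~/.ssh/authorized_keys on that host (the local host included).
    ssh-copy-id -i "$key.pub" "$host" || failed=$((failed + 1))
  done
  return "$failed"
}

# Only act when hosts are given, e.g. ./distribute_key.sh node1 node2 node3
if [ "$#" -gt 0 ]; then
  distribute_key "$@"
fi
```

Run it as each user (atguigu, root) on each host that needs to log in to the others. The function returns the number of hosts where `ssh-copy-id` failed.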

- [atguigu@node1 ~]$ xsync a.txt

- ==================== node1 ====================

- sending incremental file list

-

- sent 43 bytes received 12 bytes 110.00 bytes/sec

- total size is 3 speedup is 0.05

- ==================== node2 ====================

- sending incremental file list

- a.txt

-

- sent 93 bytes received 35 bytes 256.00 bytes/sec

- total size is 3 speedup is 0.02

- ==================== node3 ====================

- sending incremental file list

- a.txt

-

- sent 93 bytes received 35 bytes 256.00 bytes/sec

- total size is 3 speedup is 0.02

- [atguigu@node1 ~]$

- ----------------------------------------------------------------------------------------

- Connection established.

- Last login: Mon Mar 20 19:17:38 2023

- [root@node2 ~]# su atguigu

- [atguigu@node2 root]$ cd ~

- [atguigu@node2 ~]$ pwd

- /home/atguigu

- [atguigu@node2 ~]$ ls -al

- total 20

- drwx------ 5 atguigu atguigu 139 Mar 20 19:17 .

- drwxr-xr-x. 3 root root 21 Mar 20 10:08 ..

- -rw------- 1 atguigu atguigu 108 Mar 20 19:36 .bash_history

- -rw-r--r-- 1 atguigu atguigu 18 Aug 8 2019 .bash_logout

- -rw-r--r-- 1 atguigu atguigu 193 Aug 8 2019 .bash_profile

- -rw-r--r-- 1 atguigu atguigu 231 Aug 8 2019 .bashrc

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- drwxrwxr-x 3 atguigu atguigu 18 Mar 20 10:17 .cache

- drwxrwxr-x 3 atguigu atguigu 18 Mar 20 10:17 .config

- -rw------- 1 atguigu atguigu 557 Mar 20 19:17 .viminfo

- [atguigu@node2 ~]$

- Connection closed.

- Connection established.

- Last login: Mon Mar 20 19:36:35 2023 from 192.168.88.1

- [root@node2 ~]# cd /home/atguigu/.ssh/

- You have new mail in /var/spool/mail/root

- [root@node2 .ssh]# ll

- total 4

- -rw------- 1 atguigu atguigu 405 Mar 20 19:43 authorized_keys

- [root@node2 .ssh]# cat authorized_keys

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRrdLn5Bwi+N7B85ggdOWeXNtCBXtj3YkLpky3VcaSW9rnevAISF/3K99wzVVMEM1gfK+Xr6tMopTwQdN+kShhrluUHb31l8qQzg7E+RwT2fPu5M1PJgXzjABHUggS1PVstUk/KH8e+HzJ7pQyPU+FkgZ+U2NsbBiQmgMrfBfHBoPjF7JjTNUKAvtAOo0PQjDxdCsJJDxxBWBsHGmtRi73v6f/sY6zRYBILqC2tb0iSzEe1IGkjqJ4pXO/1+5ZxuFvwLnDsGp1VTqOsqqjsWdBoMoE10LjB46S7hMD5t4elzhbenx/xv5vdT4DSo6P65FVfu4OjG66cfjECOqfxOY9 atguigu@node1.itcast.cn

- [root@node2 .ssh]# ssh-keygen -t rsa

- Generating public/private rsa key pair.

- Enter file in which to save the key (/root/.ssh/id_rsa):

- /root/.ssh/id_rsa already exists.

- Overwrite (y/n)? y

- Enter passphrase (empty for no passphrase):

- Enter same passphrase again:

- Your identification has been saved in /root/.ssh/id_rsa.

- Your public key has been saved in /root/.ssh/id_rsa.pub.

- The key fingerprint is:

- SHA256:rKXFOBLTEYhuY0iBovwDyguTlvqAZozIMIiAHWhaWyI root@node2.itcast.cn

- The key's randomart image is:

- +---[RSA 2048]----+

- |.oo. .o. |

- |E++.o. . |

- |X=.+o . |

- |Bo* o + |

- |B++.. o S |

- |%= o . * |

- |O*. . o |

- |+o |

- | .. |

- +----[SHA256]-----+

- You have new mail in /var/spool/mail/root

- [root@node2 .ssh]# ssh-copy-id node1

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- root@node1's password:

-

- Number of key(s) added: 1

-

- Now try logging into the machine, with: "ssh 'node1'"

- and check to make sure that only the key(s) you wanted were added.

-

- [root@node2 .ssh]# ll

- total 4

- -rw------- 1 atguigu atguigu 405 Mar 20 19:43 authorized_keys

- [root@node2 .ssh]# ssh-copy-id node3

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- root@node3's password:

- Number of key(s) added: 1

- Now try logging into the machine, with: "ssh 'node3'"

- and check to make sure that only the key(s) you wanted were added.

- [root@node2 .ssh]# ll

- total 4

- -rw------- 1 atguigu atguigu 810 Mar 20 19:51 authorized_keys

- You have new mail in /var/spool/mail/root

- [root@node2 .ssh]# ssh node2

- root@node2's password:

- Last login: Mon Mar 20 19:38:58 2023 from 192.168.88.1

- [root@node2 ~]# ls -al

- total 56

- dr-xr-x---. 7 root root 4096 Mar 20 19:18 .

- dr-xr-xr-x. 18 root root 258 Oct 26 2021 ..

- -rw-r--r-- 1 root root 4 Feb 22 11:10 111.txt

- -rw-r--r-- 1 root root 2 Feb 22 11:08 1.txt

- -rw-r--r-- 1 root root 2 Feb 22 11:09 2.txt

- -rw-r--r-- 1 root root 2 Feb 22 11:09 3.txt

- -rw-------. 1 root root 1340 Sep 11 2020 anaconda-ks.cfg

- -rw-------. 1 root root 3555 Mar 20 19:38 .bash_history

- -rw-r--r--. 1 root root 18 Dec 29 2013 .bash_logout

- -rw-r--r--. 1 root root 176 Dec 29 2013 .bash_profile

- -rw-r--r--. 1 root root 176 Dec 29 2013 .bashrc

- drwxr-xr-x. 3 root root 18 Sep 11 2020 .cache

- drwxr-xr-x. 3 root root 18 Sep 11 2020 .config

- -rw-r--r--. 1 root root 100 Dec 29 2013 .cshrc

- drwxr-xr-x. 2 root root 40 Sep 11 2020 .oracle_jre_usage

- drwxr----- 3 root root 19 Mar 20 10:05 .pki

- drwx------. 2 root root 80 Mar 20 19:49 .ssh

- -rw-r--r--. 1 root root 129 Dec 29 2013 .tcshrc

- -rw-r--r-- 1 root root 0 Mar 13 19:40 test.txt

- -rw------- 1 root root 4620 Mar 20 19:18 .viminfo

- [root@node2 ~]# pwd

- /root

- [root@node2 ~]# cd .ssh

- You have new mail in /var/spool/mail/root

- [root@node2 .ssh]# ll

- total 16

- -rw-------. 1 root root 402 Sep 11 2020 authorized_keys

- -rw-------. 1 root root 1679 Mar 20 19:48 id_rsa

- -rw-r--r--. 1 root root 402 Mar 20 19:48 id_rsa.pub

- -rw-r--r--. 1 root root 1254 Mar 20 09:25 known_hosts

- [root@node2 .ssh]# su atguigu

- [atguigu@node2 .ssh]$ cd ~

- [atguigu@node2 ~]$ ll

- total 0

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- [atguigu@node2 ~]$ ll

- total 4

- -rw-r--r-- 1 atguigu atguigu 3 Mar 20 19:59 a.txt

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- You have new mail in /var/spool/mail/root

- [atguigu@node2 ~]$

- ----------------------------------------------------------------------------------------

- Connection established.

- Last login: Mon Mar 20 19:14:48 2023 from 192.168.88.1

- [root@node3 ~]# su atguigu

- [atguigu@node3 root]$ cd ~

- [atguigu@node3 ~]$ pwd

- /home/atguigu

- [atguigu@node3 ~]$ ls -al

- total 16

- drwx------ 5 atguigu atguigu 123 Mar 20 17:25 .

- drwxr-xr-x. 3 root root 21 Mar 20 10:08 ..

- -rw------- 1 atguigu atguigu 163 Mar 20 19:36 .bash_history

- -rw-r--r-- 1 atguigu atguigu 18 Aug 8 2019 .bash_logout

- -rw-r--r-- 1 atguigu atguigu 193 Aug 8 2019 .bash_profile

- -rw-r--r-- 1 atguigu atguigu 231 Aug 8 2019 .bashrc

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- drwxrwxr-x 3 atguigu atguigu 18 Mar 20 10:18 .cache

- drwxrwxr-x 3 atguigu atguigu 18 Mar 20 10:18 .config

- [atguigu@node3 ~]$ cd /home/atguigu/.ssh/

- You have new mail in /var/spool/mail/root

- [atguigu@node3 .ssh]$ ll

- total 4

- -rw------- 1 atguigu atguigu 405 Mar 20 19:44 authorized_keys

- [atguigu@node3 .ssh]$ cat authorized_keys

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRrdLn5Bwi+N7B85ggdOWeXNtCBXtj3YkLpky3VcaSW9rnevAISF/3K99wzVVMEM1gfK+Xr6tMopTwQdN+kShhrluUHb31l8qQzg7E+RwT2fPu5M1PJgXzjABHUggS1PVstUk/KH8e+HzJ7pQyPU+FkgZ+U2NsbBiQmgMrfBfHBoPjF7JjTNUKAvtAOo0PQjDxdCsJJDxxBWBsHGmtRi73v6f/sY6zRYBILqC2tb0iSzEe1IGkjqJ4pXO/1+5ZxuFvwLnDsGp1VTqOsqqjsWdBoMoE10LjB46S7hMD5t4elzhbenx/xv5vdT4DSo6P65FVfu4OjG66cfjECOqfxOY9 atguigu@node1.itcast.cn

- [atguigu@node3 .ssh]$ ssh-keygen -t rsa

- Generating public/private rsa key pair.

- Enter file in which to save the key (/home/atguigu/.ssh/id_rsa):

- Enter passphrase (empty for no passphrase):

- Enter same passphrase again:

- Your identification has been saved in /home/atguigu/.ssh/id_rsa.

- Your public key has been saved in /home/atguigu/.ssh/id_rsa.pub.

- The key fingerprint is:

- SHA256:UXniCTC0jqCGYUsYfBRoUBrlaei8V6dWx7lAvRypEko atguigu@node3.itcast.cn

- The key's randomart image is:

- +---[RSA 2048]----+

- |*o=o..+. .. |

- |.X o oo.+ . |

- |*o*E ....* + |

- |*+o..oo +.* |

- |o= ..o.=S* |

- |. . . = o . |

- | . . o . |

- | . . |

- | |

- +----[SHA256]-----+

- You have new mail in /var/spool/mail/root

- [atguigu@node3 .ssh]$ ssh-copy-id node1

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/atguigu/.ssh/id_rsa.pub"

- The authenticity of host 'node1 (192.168.88.151)' can't be established.

- ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.

- ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.

- Are you sure you want to continue connecting (yes/no)?

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- The authenticity of host 'node1 (192.168.88.151)' can't be established.

- ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.

- ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.

- Are you sure you want to continue connecting (yes/no)? yes

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- atguigu@node1's password:

-

- Number of key(s) added: 1

-

- Now try logging into the machine, with: "ssh 'node1'"

- and check to make sure that only the key(s) you wanted were added.

-

- [atguigu@node3 .ssh]$ ssh-copy-id node2

- /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/atguigu/.ssh/id_rsa.pub"

- The authenticity of host 'node2 (192.168.88.152)' can't be established.

- ECDSA key fingerprint is SHA256:+eLT3FrOEuEsxBxjOd89raPi/ChJz26WGAfqBpz/KEk.

- ECDSA key fingerprint is MD5:18:42:ad:0f:2b:97:d8:b5:68:14:6a:98:e9:72:db:bb.

- Are you sure you want to continue connecting (yes/no)? yes

- /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

- /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

- atguigu@node2's password:

-

- Number of key(s) added: 1

-

- Now try logging into the machine, with: "ssh 'node2'"

- and check to make sure that only the key(s) you wanted were added.

-

- [atguigu@node3 .ssh]$ ll

- total 16

- -rw------- 1 atguigu atguigu 405 Mar 20 19:44 authorized_keys

- -rw------- 1 atguigu atguigu 1675 Mar 20 19:50 id_rsa

- -rw-r--r-- 1 atguigu atguigu 405 Mar 20 19:50 id_rsa.pub

- -rw-r--r-- 1 atguigu atguigu 364 Mar 20 19:51 known_hosts

- You have new mail in /var/spool/mail/root

- [atguigu@node3 .ssh]$ cd ~

- You have new mail in /var/spool/mail/root

- [atguigu@node3 ~]$ ll

- total 0

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- [atguigu@node3 ~]$ ll

- total 4

- -rw-r--r-- 1 atguigu atguigu 3 Mar 20 19:59 a.txt

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- You have new mail in /var/spool/mail/root

- [atguigu@node3 ~]$ cd /home/atguigu

- You have new mail in /var/spool/mail/root

- [atguigu@node3 ~]$ ll

- total 4

- -rw-r--r-- 1 atguigu atguigu 3 Mar 20 19:59 a.txt

- drwxrwxr-x 2 atguigu atguigu 19 Mar 20 15:56 bin

- [atguigu@node3 ~]$
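The node2 and node3 sessions repeat the same pattern for each user on each host. Every account that starts logins needs its own key pair, copied to every node. That is why root on node1 found its keys already installed, while atguigu on node3 had to run `ssh-keygen` and `ssh-copy-id` again.

You can confirm the result without being prompted. ssh's `BatchMode=yes` option makes ssh fail instead of asking for a password. Below is a sketch built on that option; `check_passwordless` is a hypothetical name, and the 5-second `ConnectTimeout` is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether each host accepts key-based login.
# BatchMode=yes makes ssh fail instead of prompting for a password, so a
# missing key shows up as FAILED rather than as a hanging prompt.
check_passwordless() {
  local host failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1; then
      echo "$host: OK"
    else
      echo "$host: FAILED"
      failed=$((failed + 1))
    fi
  done
  return "$failed"
}

# Only act when hosts are given, e.g. ./check_ssh.sh node1 node2 node3
if [ "$#" -gt 0 ]; then
  check_passwordless "$@"
fi
```

Run it once as atguigu and once as root on each node that needs to start daemons remotely. Any FAILED line points at a host where `ssh-copy-id` still has to be run.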

P030【030_Atguigu_Hadoop_Intro_Cluster Configuration】13:24

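Notes:

- Do not install the NameNode and the SecondaryNameNode on the same server.

- The ResourceManager also consumes a lot of memory, so do not place it on the same machine as the NameNode or the SecondaryNameNode.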
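The two placement rules can be written as a quick check on a planned layout. This is a minimal sketch that assumes one host each for the NameNode, the SecondaryNameNode, and the ResourceManager. `check_placement` is a hypothetical helper, not a Hadoop tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a planned layout against the two rules above.
# Usage: check_placement <NameNode host> <SecondaryNameNode host> <ResourceManager host>
# Prints one line per violation and returns the number of violations.
check_placement() {
  local nn="$1" snn="$2" rmhost="$3" violations=0
  # Rule 1: NameNode and SecondaryNameNode on different servers.
  if [ "$nn" = "$snn" ]; then
    echo "NameNode and SecondaryNameNode both on $nn"
    violations=$((violations + 1))
  fi
  # Rule 2: keep the memory-hungry ResourceManager away from both HDFS masters.
  if [ "$rmhost" = "$nn" ] || [ "$rmhost" = "$snn" ]; then
    echo "ResourceManager shares $rmhost with an HDFS master"
    violations=$((violations + 1))
  fi
  return "$violations"
}

if [ "$#" -eq 3 ]; then
  check_placement "$@"
fi
```

For example, `check_placement hadoop102 hadoop104 hadoop103` prints nothing and returns 0. Moving the SecondaryNameNode onto hadoop102 reports a violation.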

| | hadoop102 | hadoop103 | hadoop104 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |
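In Hadoop 3.x, the hosts that run the worker daemons (DataNode and NodeManager) are listed in `etc/hadoop/workers`, one hostname per line. `start-dfs.sh` and `start-yarn.sh` read that file. Every host in the plan above runs both daemons, so the file holds all three hostnames. The sketch below writes it. It assumes the `/opt/module/hadoop-3.1.3` install path used in this course, and `write_workers` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the workers file for the layout in the table.
# Assumes the standard Hadoop 3.x location $HADOOP_HOME/etc/hadoop/workers.
write_workers() {
  local conf_dir="$1"
  shift
  [ -d "$conf_dir" ] || { echo "no such directory: $conf_dir" >&2; return 1; }
  # Exactly one hostname per line: no trailing spaces, no blank lines.
  printf '%s\n' "$@" > "$conf_dir/workers"
}

# e.g. ./write_workers.sh hadoop102 hadoop103 hadoop104
if [ "$#" -gt 0 ]; then
  write_workers "${HADOOP_HOME:-/opt/module/hadoop-3.1.3}/etc/hadoop" "$@"
fi
```

After writing the file on one node, distribute it (for example with the `xsync` script) so every host sees the same list.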

| Default file | Location inside the Hadoop jars |
|---|---|
| core-default.xml | hadoop-common-3.1.3.jar/core-default.xml |
| hdfs-default.xml | hadoop-hdfs-3.1.3.jar/hdfs-default.xml |
| yarn-default.xml | hadoop-yarn-common-3.1.3.jar/yarn-default.xml |
| mapred-default.xml | hadoop-mapreduce-client-core-3.1.3.jar/mapred-default.xml |
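A jar is a zip archive, so you can read one of these defaults with `unzip -p` without unpacking the whole jar. The sketch below maps each file to the jar named in the table and searches for that jar under `$HADOOP_HOME/share/hadoop`. It assumes GNU `find` (for `-quit`) and `unzip` are installed. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a *-default.xml name to the jar that holds it
# (Hadoop 3.1.3 names, as listed in the table above).
default_jar_for() {
  case "$1" in
    core-default.xml)   echo "hadoop-common-3.1.3.jar" ;;
    hdfs-default.xml)   echo "hadoop-hdfs-3.1.3.jar" ;;
    yarn-default.xml)   echo "hadoop-yarn-common-3.1.3.jar" ;;
    mapred-default.xml) echo "hadoop-mapreduce-client-core-3.1.3.jar" ;;
    *) return 1 ;;
  esac
}

# Print a default XML file to stdout.
# Usage: show_default <file> [hadoop_home]
show_default() {
  local file="$1" home="${2:-${HADOOP_HOME:-/opt/module/hadoop-3.1.3}}" jar path
  jar=$(default_jar_for "$file") || { echo "unknown file: $file" >&2; return 1; }
  path=$(find "$home/share/hadoop" -name "$jar" -print -quit 2>/dev/null)
  [ -n "$path" ] || { echo "$jar not found under $home" >&2; return 1; }
  # unzip -p extracts a single archive member to stdout.
  unzip -p "$path" "$file"
}
```

For example, `show_default hdfs-default.xml | less` pages through the HDFS defaults. The property names it shows are the ones you override in the matching `*-site.xml`.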

P031【031_Atguigu_Hadoop_Intro_Start the Whole Cluster and Test】16:52

Related issue: DataNode does not start after the Hadoop cluster is started.

[atguigu@hadoop102 hadoop-3.1.3]$ sbin/start-dfs.sh

[atguigu@hadoop103 hadoop-3.1.3]$ sbin/start-yarn.sh

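YARN handles resource scheduling. Start it with sbin/start-yarn.sh on the node that hosts the ResourceManager (hadoop103 in the plan above), not on the NameNode host.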
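`start-dfs.sh` runs on the NameNode host and `start-yarn.sh` on the ResourceManager host. A wrapper that runs both over SSH saves switching terminals. The sketch below makes several assumptions:

- Passwordless SSH is already set up.
- Hadoop is installed at `/opt/module/hadoop-3.1.3` on every node.
- The remote non-interactive shell can find Java, for example through `JAVA_HOME` in `hadoop-env.sh`.

`NN_HOST`, `RM_HOST`, and the script itself are hypothetical; their defaults come from the plan table.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start HDFS on the NameNode host, then YARN on the
# ResourceManager host. Defaults follow the cluster plan table.
HADOOP_HOME="${HADOOP_HOME:-/opt/module/hadoop-3.1.3}"
NN_HOST="${NN_HOST:-hadoop102}"
RM_HOST="${RM_HOST:-hadoop103}"

start_cluster() {
  echo "=== starting HDFS on $NN_HOST ==="
  ssh "$NN_HOST" "$HADOOP_HOME/sbin/start-dfs.sh" || return 1
  echo "=== starting YARN on $RM_HOST ==="
  ssh "$RM_HOST" "$HADOOP_HOME/sbin/start-yarn.sh"
}

stop_cluster() {
  # Reverse order: YARN first, then HDFS.
  echo "=== stopping YARN on $RM_HOST ==="
  ssh "$RM_HOST" "$HADOOP_HOME/sbin/stop-yarn.sh"
  echo "=== stopping HDFS on $NN_HOST ==="
  ssh "$NN_HOST" "$HADOOP_HOME/sbin/stop-dfs.sh"
}

case "${1:-}" in
  start) start_cluster ;;
  stop)  stop_cluster ;;
  "")    ;;  # no argument: only define the functions
  *)     echo "usage: $0 start|stop" >&2; exit 1 ;;
esac
```

Usage: `./cluster.sh start`, then `./cluster.sh stop` when you are done.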

- Connection established.

- Last login: Wed Mar 22 09:16:44 2023

- [atguigu@node1 ~]$ cd /opt/module/hadoop-3.1.3

- [atguigu@node1 hadoop-3.1.3]$ sbin/start-dfs.sh

- Starting namenodes on [node1]

- Starting datanodes

- Starting secondary namenodes [node3]

- [atguigu@node1 hadoop-3.1.3]$ jps

- 5619 DataNode

- 5398 NameNode

- 6647 Jps

- 6457 NodeManager

- [atguigu@node1 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /wcinput /wcoutput

- 2023-03-22 09:22:26,672 INFO impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

- 2023-03-22 09:22:26,954 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

- 2023-03-22 09:22:26,954 INFO impl.MetricsSystemImpl: JobTracker metrics system started

- 2023-03-22 09:22:28,713 INFO input.FileInputFormat: Total input files to process : 1

- 2023-03-22 09:22:28,764 INFO mapreduce.JobSubmitter: number of splits:1

- 2023-03-22 09:22:29,208 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local321834777_0001

- 2023-03-22 09:22:29,218 INFO mapreduce.JobSubmitter: Executing with tokens: []

- 2023-03-22 09:22:29,515 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

- 2023-03-22 09:22:29,520 INFO mapreduce.Job: Running job: job_local321834777_0001

- 2023-03-22 09:22:29,525 INFO mapred.LocalJobRunner: OutputCommitter set in config null

- 2023-03-22 09:22:29,551 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:22:29,551 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:22:29,553 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

- 2023-03-22 09:22:29,785 INFO mapred.LocalJobRunner: Waiting for map tasks

- 2023-03-22 09:22:29,791 INFO mapred.LocalJobRunner: Starting task: attempt_local321834777_0001_m_000000_0

- 2023-03-22 09:22:29,908 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:22:29,910 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:22:30,037 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

- 2023-03-22 09:22:30,056 INFO mapred.MapTask: Processing split: hdfs://node1:8020/wcinput/word.txt:0+45

- 2023-03-22 09:22:30,532 INFO mapreduce.Job: Job job_local321834777_0001 running in uber mode : false

- 2023-03-22 09:22:30,547 INFO mapreduce.Job: map 0% reduce 0%

- 2023-03-22 09:22:31,234 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

- 2023-03-22 09:22:31,235 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

- 2023-03-22 09:22:31,235 INFO mapred.MapTask: soft limit at 83886080

- 2023-03-22 09:22:31,235 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

- 2023-03-22 09:22:31,235 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

- 2023-03-22 09:22:31,277 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

- 2023-03-22 09:22:31,542 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- 2023-03-22 09:22:36,432 INFO mapred.LocalJobRunner:

- 2023-03-22 09:22:36,463 INFO mapred.MapTask: Starting flush of map output

- 2023-03-22 09:22:36,463 INFO mapred.MapTask: Spilling map output

- 2023-03-22 09:22:36,463 INFO mapred.MapTask: bufstart = 0; bufend = 69; bufvoid = 104857600

- 2023-03-22 09:22:36,463 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214376(104857504); length = 21/6553600

- 2023-03-22 09:22:36,615 INFO mapred.MapTask: Finished spill 0

- 2023-03-22 09:22:36,655 INFO mapred.Task: Task:attempt_local321834777_0001_m_000000_0 is done. And is in the process of committing

- 2023-03-22 09:22:36,701 INFO mapred.LocalJobRunner: map

- 2023-03-22 09:22:36,701 INFO mapred.Task: Task 'attempt_local321834777_0001_m_000000_0' done.

- 2023-03-22 09:22:36,738 INFO mapred.Task: Final Counters for attempt_local321834777_0001_m_000000_0: Counters: 23

- File System Counters

- FILE: Number of bytes read=316543

- FILE: Number of bytes written=822653

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=45

- HDFS: Number of bytes written=0

- HDFS: Number of read operations=5

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=1

- Map-Reduce Framework

- Map input records=4

- Map output records=6

- Map output bytes=69

- Map output materialized bytes=60

- Input split bytes=99

- Combine input records=6

- Combine output records=4

- Spilled Records=4

- Failed Shuffles=0

- Merged Map outputs=0

- GC time elapsed (ms)=0

- Total committed heap usage (bytes)=271056896

- File Input Format Counters

- Bytes Read=45

- 2023-03-22 09:22:36,739 INFO mapred.LocalJobRunner: Finishing task: attempt_local321834777_0001_m_000000_0

- 2023-03-22 09:22:36,810 INFO mapred.LocalJobRunner: map task executor complete.

- 2023-03-22 09:22:36,849 INFO mapred.LocalJobRunner: Waiting for reduce tasks

- 2023-03-22 09:22:36,876 INFO mapred.LocalJobRunner: Starting task: attempt_local321834777_0001_r_000000_0

- 2023-03-22 09:22:37,033 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:22:37,033 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:22:37,035 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

- 2023-03-22 09:22:37,043 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@4db08ce7

- 2023-03-22 09:22:37,046 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

- 2023-03-22 09:22:37,178 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=642252800, maxSingleShuffleLimit=160563200, mergeThreshold=423886880, ioSortFactor=10, memToMemMergeOutputsThreshold=10

- 2023-03-22 09:22:37,216 INFO reduce.EventFetcher: attempt_local321834777_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

- 2023-03-22 09:22:37,376 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local321834777_0001_m_000000_0 decomp: 56 len: 60 to MEMORY

- 2023-03-22 09:22:37,409 INFO reduce.InMemoryMapOutput: Read 56 bytes from map-output for attempt_local321834777_0001_m_000000_0

- 2023-03-22 09:22:37,421 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 56, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->56

- 2023-03-22 09:22:37,457 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

- 2023-03-22 09:22:37,460 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:22:37,460 INFO reduce.MergeManagerImpl: finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

- 2023-03-22 09:22:37,504 INFO mapreduce.Job: map 100% reduce 0%

- 2023-03-22 09:22:37,534 INFO mapred.Merger: Merging 1 sorted segments

- 2023-03-22 09:22:37,534 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 46 bytes

- 2023-03-22 09:22:37,536 INFO reduce.MergeManagerImpl: Merged 1 segments, 56 bytes to disk to satisfy reduce memory limit

- 2023-03-22 09:22:37,537 INFO reduce.MergeManagerImpl: Merging 1 files, 60 bytes from disk

- 2023-03-22 09:22:37,541 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

- 2023-03-22 09:22:37,542 INFO mapred.Merger: Merging 1 sorted segments

- 2023-03-22 09:22:37,547 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 46 bytes

- 2023-03-22 09:22:37,708 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:22:37,831 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

- 2023-03-22 09:22:38,001 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- 2023-03-22 09:22:39,978 INFO mapred.Task: Task:attempt_local321834777_0001_r_000000_0 is done. And is in the process of committing

- 2023-03-22 09:22:39,989 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:22:39,990 INFO mapred.Task: Task attempt_local321834777_0001_r_000000_0 is allowed to commit now

- 2023-03-22 09:22:40,106 INFO output.FileOutputCommitter: Saved output of task 'attempt_local321834777_0001_r_000000_0' to hdfs://node1:8020/wcoutput

- 2023-03-22 09:22:40,111 INFO mapred.LocalJobRunner: reduce > reduce

- 2023-03-22 09:22:40,111 INFO mapred.Task: Task 'attempt_local321834777_0001_r_000000_0' done.

- 2023-03-22 09:22:40,112 INFO mapred.Task: Final Counters for attempt_local321834777_0001_r_000000_0: Counters: 29

- File System Counters

- FILE: Number of bytes read=316695

- FILE: Number of bytes written=822713

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=45

- HDFS: Number of bytes written=38

- HDFS: Number of read operations=10

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=3

- Map-Reduce Framework

- Combine input records=0

- Combine output records=0

- Reduce input groups=4

- Reduce shuffle bytes=60

- Reduce input records=4

- Reduce output records=4

- Spilled Records=4

- Shuffled Maps =1

- Failed Shuffles=0

- Merged Map outputs=1

- GC time elapsed (ms)=159

- Total committed heap usage (bytes)=272105472

- Shuffle Errors

- BAD_ID=0

- CONNECTION=0

- IO_ERROR=0

- WRONG_LENGTH=0

- WRONG_MAP=0

- WRONG_REDUCE=0

- File Output Format Counters

- Bytes Written=38

- 2023-03-22 09:22:40,112 INFO mapred.LocalJobRunner: Finishing task: attempt_local321834777_0001_r_000000_0

- 2023-03-22 09:22:40,115 INFO mapred.LocalJobRunner: reduce task executor complete.

- 2023-03-22 09:22:40,507 INFO mapreduce.Job: map 100% reduce 100%

- 2023-03-22 09:22:40,507 INFO mapreduce.Job: Job job_local321834777_0001 completed successfully

- 2023-03-22 09:22:40,529 INFO mapreduce.Job: Counters: 35

- File System Counters

- FILE: Number of bytes read=633238

- FILE: Number of bytes written=1645366

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=90

- HDFS: Number of bytes written=38

- HDFS: Number of read operations=15

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=4

- Map-Reduce Framework

- Map input records=4

- Map output records=6

- Map output bytes=69

- Map output materialized bytes=60

- Input split bytes=99

- Combine input records=6

- Combine output records=4

- Reduce input groups=4

- Reduce shuffle bytes=60

- Reduce input records=4

- Reduce output records=4

- Spilled Records=8

- Shuffled Maps =1

- Failed Shuffles=0

- Merged Map outputs=1

- GC time elapsed (ms)=159

- Total committed heap usage (bytes)=543162368

- Shuffle Errors

- BAD_ID=0

- CONNECTION=0

- IO_ERROR=0

- WRONG_LENGTH=0

- WRONG_MAP=0

- WRONG_REDUCE=0

- File Input Format Counters

- Bytes Read=45

- File Output Format Counters

- Bytes Written=38

- [atguigu@node1 hadoop-3.1.3]$
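The job above counts the words in `/wcinput` and writes `word<TAB>count` lines, sorted by word, to `/wcoutput`. When checking a small input by hand, you can produce roughly the same result locally. The sketch below splits on whitespace and sorts in byte order (`LC_ALL=C`). This only approximates WordCount's tokenizer and key order; it is not the job itself. `local_wordcount` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Local approximation of the WordCount example for small inputs: split on
# whitespace, count each word, print "word<TAB>count" sorted by word in byte
# order. Reads the files given as arguments, or stdin when there are none.
local_wordcount() {
  cat -- "$@" \
    | tr -s '[:space:]' '\n' \
    | sed '/^$/d' \
    | LC_ALL=C sort \
    | LC_ALL=C uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}

# e.g. ./local_wordcount.sh wcinput/word.txt
if [ "$#" -gt 0 ]; then
  local_wordcount "$@"
fi
```

The lines from `local_wordcount word.txt` should match `hadoop fs -cat /wcoutput/part-r-00000`. To rerun the Hadoop job, use a fresh output path, because MapReduce refuses to write into an existing output directory.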

P032【032_Atguigu_Hadoop_Intro_Handling a Crashed Cluster】08:10

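To recover a crashed cluster:

1. Stop HDFS and YARN with sbin/stop-dfs.sh and sbin/stop-yarn.sh.
2. Delete /data on every node.
3. Reformat the NameNode with hdfs namenode -format.

Deleting the old data first matters because formatting generates a new cluster ID. DataNodes that still hold the old ID will fail to register with the new NameNode.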
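You can script the recovery steps above for all nodes. The sketch below only prints the commands unless `DRY_RUN=0` is set, because running them wipes all HDFS data. The host names, `ALL_HOSTS`, and `DATA_DIR` are hypothetical defaults. In particular, the sketch assumes the data directory is `$HADOOP_HOME/data` (point it wherever `hadoop.tmp.dir` is set in your configuration) and that passwordless SSH is in place.

```shell
#!/usr/bin/env bash
# Sketch of the crash-recovery steps. DRY_RUN defaults to 1 (print only);
# set DRY_RUN=0 to really execute. Executing deletes all HDFS data.
HADOOP_HOME="${HADOOP_HOME:-/opt/module/hadoop-3.1.3}"
NN_HOST="${NN_HOST:-hadoop102}"    # hypothetical NameNode host
RM_HOST="${RM_HOST:-hadoop103}"    # hypothetical ResourceManager host
ALL_HOSTS="${ALL_HOSTS:-hadoop102 hadoop103 hadoop104}"
DATA_DIR="${DATA_DIR:-$HADOOP_HOME/data}"   # assumption: hadoop.tmp.dir

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

recover_cluster() {
  local host
  # Refuse obviously dangerous targets before touching anything.
  case "$DATA_DIR" in
    "" | /) echo "refusing to delete '$DATA_DIR'" >&2; return 1 ;;
  esac
  run ssh "$NN_HOST" "$HADOOP_HOME/sbin/stop-dfs.sh"
  run ssh "$RM_HOST" "$HADOOP_HOME/sbin/stop-yarn.sh"
  # ALL_HOSTS is split on spaces on purpose: one rm per node.
  for host in $ALL_HOSTS; do
    run ssh "$host" rm -rf "$DATA_DIR"
  done
  run ssh "$NN_HOST" "$HADOOP_HOME/bin/hdfs" namenode -format
}

# e.g. ./recover.sh run            (dry run, prints the commands)
#      DRY_RUN=0 ./recover.sh run  (executes them)
if [ "${1:-}" = run ]; then
  recover_cluster
fi
```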

- [atguigu@node1 hadoop-3.1.3]$ jps

- 5619 DataNode

- 5398 NameNode

- 18967 Jps

- 6457 NodeManager

- [atguigu@node1 hadoop-3.1.3]$ kill -9 5619

- [atguigu@node1 hadoop-3.1.3]$ jps

- 20036 Jps

- 5398 NameNode

- 6457 NodeManager

- [atguigu@node1 hadoop-3.1.3]$ sbin/stop-dfs.sh

- Stopping namenodes on [node1]

- Stopping datanodes

- Stopping secondary namenodes [node3]

- [atguigu@node1 hadoop-3.1.3]$ jps

- 32126 Jps

- [atguigu@node1 hadoop-3.1.3]$

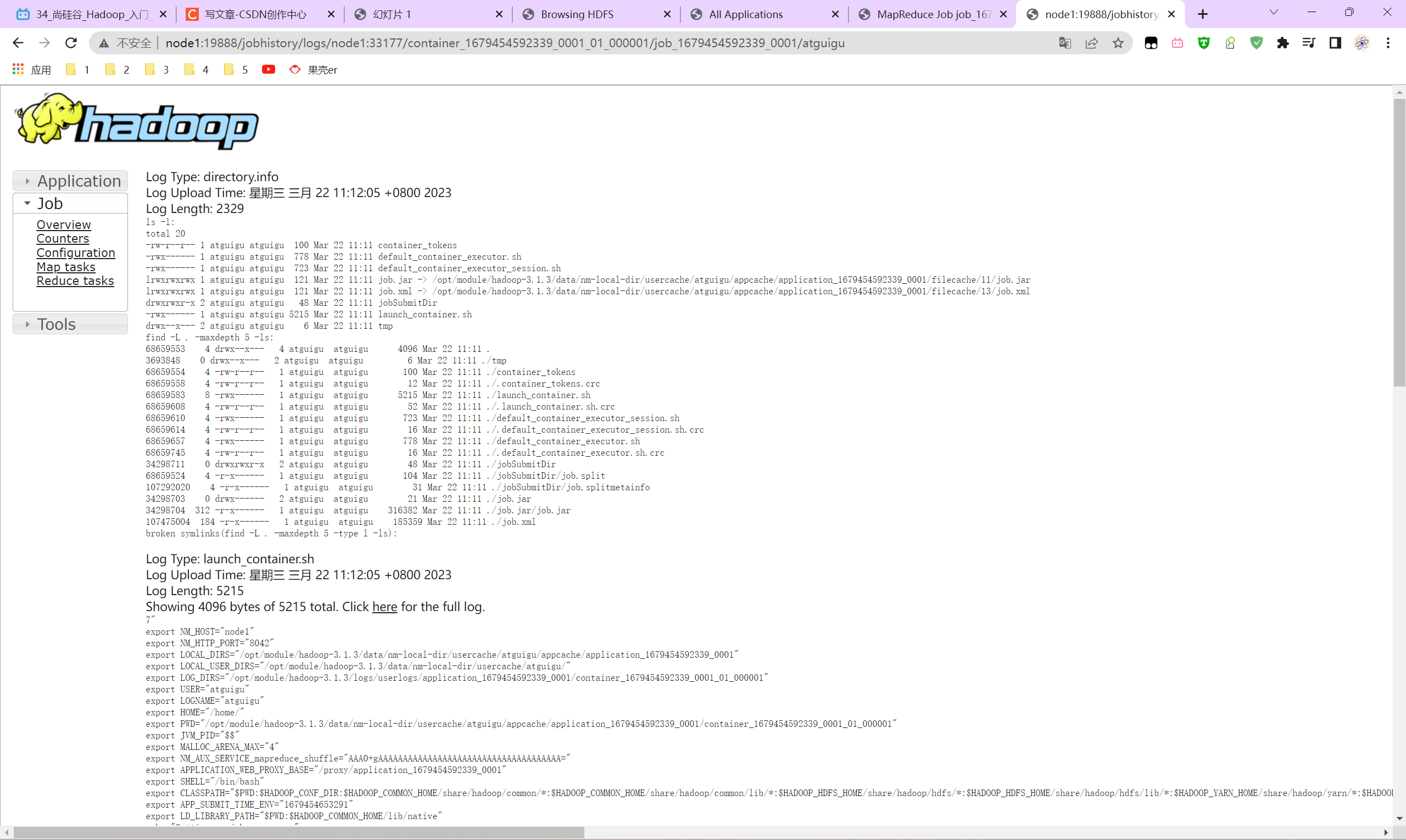

P033【033_Atguigu_Hadoop_Intro_History Server Configuration】05:26

Start the history server on node1: mapred --daemon start historyserver
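After the history server starts, `jps` on node1 should list a `JobHistoryServer` process, as in the output that follows. Below is a small check for that, assuming the JDK's `jps` is on the `PATH`. `daemon_running` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a Java daemon with this exact class name
# appears in jps output, e.g. JobHistoryServer after
# "mapred --daemon start historyserver".
daemon_running() {
  jps 2>/dev/null | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# e.g. ./check_daemons.sh JobHistoryServer NameNode DataNode
if [ "$#" -gt 0 ]; then
  for d in "$@"; do
    if daemon_running "$d"; then echo "$d: running"; else echo "$d: not running"; fi
  done
fi
```

By default, the history server's web UI listens on port 19888 (`mapreduce.jobhistory.webapp.address`).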

- [atguigu@node1 hadoop-3.1.3]$ mapred --daemon start historyserver

- [atguigu@node1 hadoop-3.1.3]$ jps

- 27061 DataNode

- 37557 NodeManager

- 42666 JobHistoryServer

- 26879 NameNode

- 42815 Jps

- [atguigu@node1 hadoop-3.1.3]$ hadoop fs -put wcinput/word.txt /input

- 2023-03-22 09:58:16,749 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- [atguigu@node1 hadoop-3.1.3]$ hadoop jar share/hadoop/

- client/ common/ hdfs/ mapreduce/ tools/ yarn/

- [atguigu@node1 hadoop-3.1.3]$ hadoop jar share/hadoop/

- client/ common/ hdfs/ mapreduce/ tools/ yarn/

- [atguigu@node1 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/

- hadoop-mapreduce-client-app-3.1.3.jar hadoop-mapreduce-client-jobclient-3.1.3.jar hadoop-mapreduce-examples-3.1.3.jar

- hadoop-mapreduce-client-common-3.1.3.jar hadoop-mapreduce-client-jobclient-3.1.3-tests.jar jdiff/

- hadoop-mapreduce-client-core-3.1.3.jar hadoop-mapreduce-client-nativetask-3.1.3.jar lib/

- hadoop-mapreduce-client-hs-3.1.3.jar hadoop-mapreduce-client-shuffle-3.1.3.jar lib-examples/

- hadoop-mapreduce-client-hs-plugins-3.1.3.jar hadoop-mapreduce-client-uploader-3.1.3.jar sources/

- [atguigu@node1 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

- 2023-03-22 09:59:43,045 INFO impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

- 2023-03-22 09:59:43,486 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

- 2023-03-22 09:59:43,486 INFO impl.MetricsSystemImpl: JobTracker metrics system started

- 2023-03-22 09:59:45,880 INFO input.FileInputFormat: Total input files to process : 1

- 2023-03-22 09:59:45,985 INFO mapreduce.JobSubmitter: number of splits:1

- 2023-03-22 09:59:46,637 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local146698941_0001

- 2023-03-22 09:59:46,642 INFO mapreduce.JobSubmitter: Executing with tokens: []

- 2023-03-22 09:59:46,972 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

- 2023-03-22 09:59:46,974 INFO mapreduce.Job: Running job: job_local146698941_0001

- 2023-03-22 09:59:47,033 INFO mapred.LocalJobRunner: OutputCommitter set in config null

- 2023-03-22 09:59:47,054 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:59:47,055 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:59:47,058 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

- 2023-03-22 09:59:47,181 INFO mapred.LocalJobRunner: Waiting for map tasks

- 2023-03-22 09:59:47,182 INFO mapred.LocalJobRunner: Starting task: attempt_local146698941_0001_m_000000_0

- 2023-03-22 09:59:47,251 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:59:47,255 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:59:47,376 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

- 2023-03-22 09:59:47,390 INFO mapred.MapTask: Processing split: hdfs://node1:8020/input/word.txt:0+45

- 2023-03-22 09:59:48,125 INFO mapreduce.Job: Job job_local146698941_0001 running in uber mode : false

- 2023-03-22 09:59:48,150 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

- 2023-03-22 09:59:48,150 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

- 2023-03-22 09:59:48,150 INFO mapred.MapTask: soft limit at 83886080

- 2023-03-22 09:59:48,150 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

- 2023-03-22 09:59:48,150 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

- 2023-03-22 09:59:48,186 INFO mapreduce.Job: map 0% reduce 0%

- 2023-03-22 09:59:48,202 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

- 2023-03-22 09:59:49,223 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- 2023-03-22 09:59:50,371 INFO mapred.LocalJobRunner:

- 2023-03-22 09:59:50,416 INFO mapred.MapTask: Starting flush of map output

- 2023-03-22 09:59:50,416 INFO mapred.MapTask: Spilling map output

- 2023-03-22 09:59:50,416 INFO mapred.MapTask: bufstart = 0; bufend = 69; bufvoid = 104857600

- 2023-03-22 09:59:50,416 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214376(104857504); length = 21/6553600

- 2023-03-22 09:59:50,543 INFO mapred.MapTask: Finished spill 0

- 2023-03-22 09:59:50,733 INFO mapred.Task: Task:attempt_local146698941_0001_m_000000_0 is done. And is in the process of committing

- 2023-03-22 09:59:50,764 INFO mapred.LocalJobRunner: map

- 2023-03-22 09:59:50,764 INFO mapred.Task: Task 'attempt_local146698941_0001_m_000000_0' done.

- 2023-03-22 09:59:50,847 INFO mapred.Task: Final Counters for attempt_local146698941_0001_m_000000_0: Counters: 23

- File System Counters

- FILE: Number of bytes read=316541

- FILE: Number of bytes written=822643

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=45

- HDFS: Number of bytes written=0

- HDFS: Number of read operations=5

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=1

- Map-Reduce Framework

- Map input records=4

- Map output records=6

- Map output bytes=69

- Map output materialized bytes=60

- Input split bytes=97

- Combine input records=6

- Combine output records=4

- Spilled Records=4

- Failed Shuffles=0

- Merged Map outputs=0

- GC time elapsed (ms)=0

- Total committed heap usage (bytes)=267386880

- File Input Format Counters

- Bytes Read=45

- 2023-03-22 09:59:50,848 INFO mapred.LocalJobRunner: Finishing task: attempt_local146698941_0001_m_000000_0

- 2023-03-22 09:59:50,946 INFO mapred.LocalJobRunner: map task executor complete.

- 2023-03-22 09:59:51,007 INFO mapred.LocalJobRunner: Waiting for reduce tasks

- 2023-03-22 09:59:51,025 INFO mapred.LocalJobRunner: Starting task: attempt_local146698941_0001_r_000000_0

- 2023-03-22 09:59:51,156 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

- 2023-03-22 09:59:51,157 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

- 2023-03-22 09:59:51,158 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

- 2023-03-22 09:59:51,213 INFO mapreduce.Job: map 100% reduce 0%

- 2023-03-22 09:59:51,226 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@1f0445e7

- 2023-03-22 09:59:51,238 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

- 2023-03-22 09:59:51,338 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=642252800, maxSingleShuffleLimit=160563200, mergeThreshold=423886880, ioSortFactor=10, memToMemMergeOutputsThreshold=10

- 2023-03-22 09:59:51,355 INFO reduce.EventFetcher: attempt_local146698941_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

- 2023-03-22 09:59:51,632 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local146698941_0001_m_000000_0 decomp: 56 len: 60 to MEMORY

- 2023-03-22 09:59:51,665 INFO reduce.InMemoryMapOutput: Read 56 bytes from map-output for attempt_local146698941_0001_m_000000_0

- 2023-03-22 09:59:51,675 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 56, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->56

- 2023-03-22 09:59:51,683 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

- 2023-03-22 09:59:51,689 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:59:51,693 INFO reduce.MergeManagerImpl: finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

- 2023-03-22 09:59:51,715 INFO mapred.Merger: Merging 1 sorted segments

- 2023-03-22 09:59:51,716 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 46 bytes

- 2023-03-22 09:59:51,719 INFO reduce.MergeManagerImpl: Merged 1 segments, 56 bytes to disk to satisfy reduce memory limit

- 2023-03-22 09:59:51,720 INFO reduce.MergeManagerImpl: Merging 1 files, 60 bytes from disk

- 2023-03-22 09:59:51,725 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

- 2023-03-22 09:59:51,725 INFO mapred.Merger: Merging 1 sorted segments

- 2023-03-22 09:59:51,728 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 46 bytes

- 2023-03-22 09:59:51,729 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:59:51,867 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

- 2023-03-22 09:59:52,038 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- 2023-03-22 09:59:52,284 INFO mapred.Task: Task:attempt_local146698941_0001_r_000000_0 is done. And is in the process of committing

- 2023-03-22 09:59:52,302 INFO mapred.LocalJobRunner: 1 / 1 copied.

- 2023-03-22 09:59:52,302 INFO mapred.Task: Task attempt_local146698941_0001_r_000000_0 is allowed to commit now

- 2023-03-22 09:59:52,339 INFO output.FileOutputCommitter: Saved output of task 'attempt_local146698941_0001_r_000000_0' to hdfs://node1:8020/output

- 2023-03-22 09:59:52,343 INFO mapred.LocalJobRunner: reduce > reduce

- 2023-03-22 09:59:52,343 INFO mapred.Task: Task 'attempt_local146698941_0001_r_000000_0' done.

- 2023-03-22 09:59:52,344 INFO mapred.Task: Final Counters for attempt_local146698941_0001_r_000000_0: Counters: 29

- File System Counters

- FILE: Number of bytes read=316693

- FILE: Number of bytes written=822703