热门标签

热门文章

- 1git和github的关系_git和github 什么关系

- 21024程序员日:有奖答题,全“猿”狂欢!(无套路,100%中奖)_1024问答趣味题吗

- 3功能测试与性能测试的区别

- 4OFD2IMG 一个OFD发票转PNG的python开源库。_ofd2png

- 5idea配置外置gradle_idea gradle配置

- 63分钟带你重温 SelectDB 产品发布会亮点!

- 7删除数组中的0元素_删除数组零元素

- 8Android 8.0 Activity的启动流程

- 9利用魔搭中的开源模型进行动物识别_动物识别模型 开源

- 102024年最全Axios发送请求的方法(3),字节跳动Andorid岗25k+的面试题_axios如何发送请求

当前位置: article > 正文

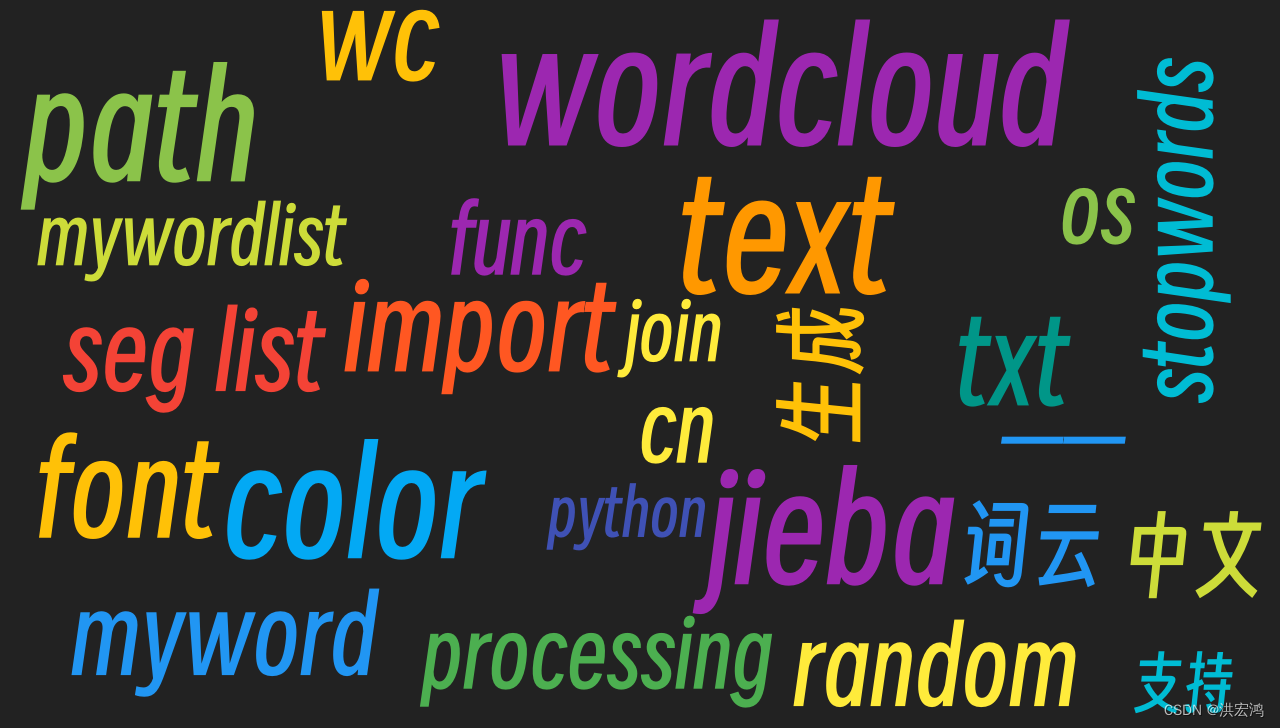

Generating a Chinese Word Cloud with jieba and wordcloud

Author: 菜鸟追梦旅行 | 2024-06-17 00:36:23


Using jieba with wordcloud to generate a word cloud

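Out of the box, wordcloud does not handle Chinese: it splits text on whitespace and punctuation, which does not work for Chinese, where words are not separated by spaces. So we integrate jieba to segment the text before passing it to wordcloud.

The font used here is Smiley Sans (得意黑), which renders Chinese quite nicely.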
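To see why segmentation is needed, here is a minimal sketch using only the standard library. The pattern below is an assumption that approximates wordcloud's default regex tokenizer, and `naive_tokens` is a hypothetical helper name, not part of either library.

```python
import re

# Assumed approximation of wordcloud's default tokenizer pattern:
# a word character followed by one or more word characters or apostrophes.
DEFAULT_LIKE_PATTERN = r"\w[\w']+"


def naive_tokens(text):
    """Split text the way a regex-only tokenizer would."""
    return re.findall(DEFAULT_LIKE_PATTERN, text)


# English words are separated by spaces, so each word becomes a token.
english = naive_tokens("word clouds are fun")

# Chinese has no spaces between words, so each clause between
# punctuation marks comes back as one long token.
chinese = naive_tokens("今天天气很好,我们去公园散步。")
```

With a tokenizer like this, a Chinese word cloud would show whole clauses instead of words, which is the gap jieba fills.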

```python
#!/usr/bin/env python
"""
An example with Chinese text
============================

Generate a rectangular word cloud with wordcloud, using jieba for segmentation.
"""

import os
import random
from os import path

import jieba
from wordcloud import WordCloud

# Directory containing the data files.
# Falls back to getcwd() when __file__ is undefined, e.g. in an IPython notebook.
d = path.dirname(__file__) if "__file__" in locals() else os.getcwd()

stopwords_path = path.join(d, 'wc_cn', 'stopwords_cn_en.txt')
userdict_path = path.join(d, 'wc_cn', 'userdict.txt')

# A font with Chinese glyphs must be set explicitly
font_path = path.join(d, 'fonts', 'smiley-sans', 'SmileySans-Oblique.otf')

# Palette for the random color function
colors = ['#F44336', '#E91E63', '#9C27B0', '#673AB7', '#3F51B5', '#2196F3',
          '#03A9F4', '#00BCD4', '#009688', '#4CAF50', '#8BC34A', '#CDDC39',
          '#FFEB3B', '#FFC107', '#FF9800', '#FF5722']


def color_func(*args, **kwargs):
    """Pick a random palette color for each word."""
    return random.choice(colors)


def jieba_processing_txt(text):
    """Segment text with jieba, dropping stopwords and single-character tokens."""
    # Load the custom dictionary
    jieba.load_userdict(userdict_path)

    with open(stopwords_path, encoding='utf-8') as f_stop:
        stopwords = set(f_stop.read().splitlines())

    mywordlist = []
    for myword in jieba.cut(text, cut_all=False):
        myword = myword.strip()
        if len(myword) > 1 and myword not in stopwords:
            mywordlist.append(myword)
    return ' '.join(mywordlist)


# Read the text of blackboard.md
with open(path.join(d, 'blackboard.md'), encoding='utf-8') as f:
    text = f.read()

wc = WordCloud(font_path=font_path, max_words=25, min_word_length=2,
               max_font_size=150, random_state=42, width=1280, height=728,
               margin=3, background_color='#222222')

# Generate the word cloud, recolor it with the palette, and save it as a PNG
wc.generate(jieba_processing_txt(text))
wc.recolor(color_func=color_func)
wc.to_file('wordcloud.png')
```
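The filtering step inside `jieba_processing_txt` can be looked at in isolation. The sketch below works on a list of tokens that has already been segmented, so it runs without jieba; `filter_tokens` and the sample data are hypothetical and only illustrate the rule the script applies (drop stopwords and tokens shorter than two characters).

```python
def filter_tokens(tokens, stopwords, min_length=2):
    """Keep tokens that are long enough and not in the stopword set."""
    kept = []
    for token in tokens:
        word = token.strip()  # segmenters may emit whitespace-only tokens
        if len(word) >= min_length and word not in stopwords:
            kept.append(word)
    return kept


# Hypothetical segmented input and stopword set, for illustration only.
tokens = ["今天", "的", "天气", " ", "很", "不错", "今天"]
stopwords = {"今天"}

filtered = filter_tokens(tokens, stopwords)
joined = " ".join(filtered)  # wordcloud expects space-separated words
```

Storing the stopwords in a set keeps each membership check constant-time, which matters once the stopword file grows to thousands of entries.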


Run the script to generate the image:

```
E:\python\wordcloud>python my_wordcloud_cn.py
Building prefix dict from the default dictionary ...
Loading model from cache C:\Users\hong\AppData\Local\Temp\jieba.cache
Loading model cost 0.580 seconds.
Prefix dict has been built successfully.
```


Result: the script writes the word cloud to wordcloud.png.
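One caveat about reproducing the result: the script's color function draws from the global `random` module, so colors can differ between runs even though `random_state=42` fixes the layout. Below is a minimal sketch of a reproducible variant. It assumes wordcloud passes a `random_state` keyword argument (a `random.Random` instance or `None`) to the color function; the name `palette_color_func` and the shortened palette are hypothetical.

```python
import random

# Shortened palette for illustration; the script above uses 16 colors.
PALETTE = ['#F44336', '#2196F3', '#4CAF50', '#FFC107']


def palette_color_func(word=None, font_size=None, position=None,
                       orientation=None, random_state=None, **kwargs):
    """Pick a palette color, using the supplied random_state when given."""
    # Fall back to the global random module if no generator is passed in.
    rng = random_state if random_state is not None else random
    return rng.choice(PALETTE)


# Two generators seeded identically yield the same color sequence.
first = [palette_color_func(random_state=r) for r in [random.Random(42)] * 5]
rng_a, rng_b = random.Random(7), random.Random(7)
seq_a = [palette_color_func(random_state=rng_a) for _ in range(5)]
seq_b = [palette_color_func(random_state=rng_b) for _ in range(5)]
```

Under that assumption, it could be used as `wc.recolor(color_func=palette_color_func, random_state=42)` so that both layout and colors stay the same across runs.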

