- 1【Unity】 在Unity中实现Tcp通讯(4)———心跳机制_unity如何做心跳机制

- 2思科vpn配置笔记

- 3大数据综合案例-网站日志分析

- 4基于Hadoop和Hive的聊天数据(FineBI)可视化分析_finebi如何链接hive

- 5Redis 提升篇之 Redisson_redis redisson

- 6Springcloud elasticsearch基础介绍_springcloud 和elasticserrch

- 7我的Java研发实习面试经历_java面试上来问9个球

- 8ds1302模块 树莓派_一个基于树莓派的边缘视频AI Demo案例

- 9电商AI开源与闭源:AI大语言模型的技术选型与决策_开源的决策ai模型

- 10TensorFlow框架介绍-深度学习_什么是tensorflow框架

x264源代码简单分析:编码器主干部分-1_x264源码解读

赞

踩

=====================================================

H.264源代码分析文章列表:

【编码 - x264】

x264源代码简单分析:x264命令行工具(x264.exe)

x264源代码简单分析:x264_slice_write()

x264源代码简单分析:宏块分析(Analysis)部分-帧内宏块(Intra)

x264源代码简单分析:宏块分析(Analysis)部分-帧间宏块(Inter)

x264源代码简单分析:熵编码(Entropy Encoding)部分

【解码 - libavcodec H.264 解码器】

FFmpeg的H.264解码器源代码简单分析:解析器(Parser)部分

FFmpeg的H.264解码器源代码简单分析:解码器主干部分

FFmpeg的H.264解码器源代码简单分析:熵解码(EntropyDecoding)部分

FFmpeg的H.264解码器源代码简单分析:宏块解码(Decode)部分-帧内宏块(Intra)

FFmpeg的H.264解码器源代码简单分析:宏块解码(Decode)部分-帧间宏块(Inter)

FFmpeg的H.264解码器源代码简单分析:环路滤波(Loop Filter)部分

=====================================================

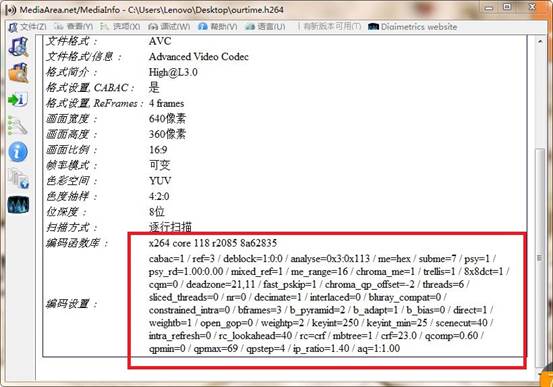

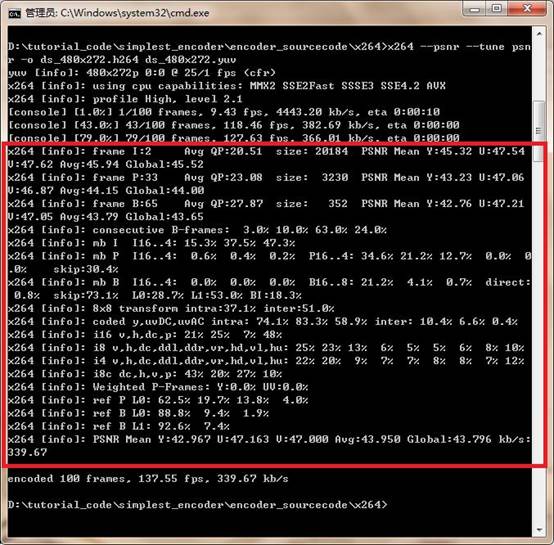

本文分析x264编码器主干部分的源代码。“主干部分”指的就是libx264中最核心的接口函数——x264_encoder_encode(),以及相关的几个接口函数x264_encoder_open(),x264_encoder_headers(),和x264_encoder_close()。这一部分源代码比较复杂,现在看了半天依然感觉很多地方不太清晰,暂且把已经理解的地方整理出来,以后再慢慢补充还不太清晰的地方。由于主干部分内容比较多,因此打算分成两篇文章来记录:第一篇文章记录x264_encoder_open(),x264_encoder_headers(),和x264_encoder_close()这三个函数,第二篇文章记录x264_encoder_encode()函数。

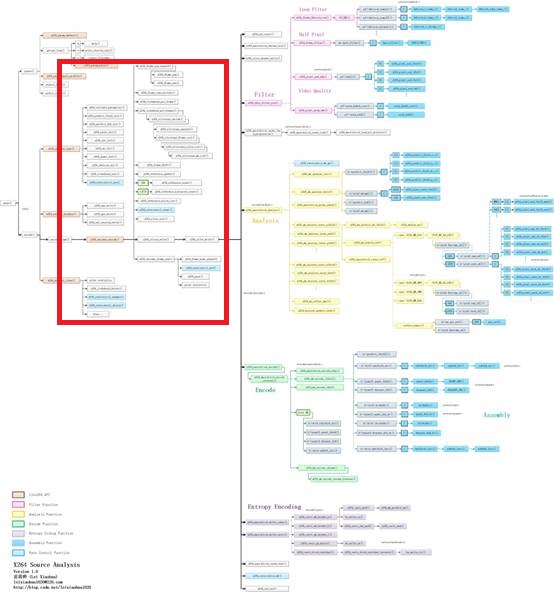

函数调用关系图

X264编码器主干部分的源代码在整个x264中的位置如下图所示。X264编码器主干部分的函数调用关系如下图所示。

从图中可以看出,x264主干部分最复杂的函数就是x264_encoder_encode(),该函数完成了编码一帧YUV为H.264码流的工作。与之配合的还有打开编码器的函数x264_encoder_open(),关闭编码器的函数x264_encoder_close(),以及输出SPS/PPS/SEI这样的头信息的x264_encoder_headers()。

x264_encoder_open()用于打开编码器,其中初始化了libx264编码所需要的各种变量。它调用了下面的函数:x264_validate_parameters():检查输入参数(例如输入图像的宽高是否为正数)。x264_predict_16x16_init():初始化Intra16x16帧内预测汇编函数。x264_predict_4x4_init():初始化Intra4x4帧内预测汇编函数。x264_pixel_init():初始化像素值计算相关的汇编函数(包括SAD、SATD、SSD等)。x264_dct_init():初始化DCT变换和DCT反变换相关的汇编函数。x264_mc_init():初始化运动补偿相关的汇编函数。x264_quant_init():初始化量化和反量化相关的汇编函数。x264_deblock_init():初始化去块效应滤波器相关的汇编函数。x264_lookahead_init():初始化Lookahead相关的变量。x264_ratecontrol_new():初始化码率控制相关的变量。

x264_encoder_headers()输出SPS/PPS/SEI这些H.264码流的头信息。它调用了下面的函数:x264_sps_write():输出SPSx264_pps_write():输出PPSx264_sei_version_write():输出SEI

x264_encoder_encode()编码一帧YUV为H.264码流。它调用了下面的函数:

x264_frame_pop_unused():获取1个x264_frame_t类型结构体fenc。如果frames.unused[]队列不为空,就调用x264_frame_pop()从unused[]队列取1个现成的;否则就调用x264_frame_new()创建一个新的。x264_frame_copy_picture():将输入的图像数据拷贝至fenc。x264_lookahead_put_frame():将fenc放入lookahead.next.list[]队列,等待确定帧类型。x264_lookahead_get_frames():通过lookahead分析帧类型。该函数调用了x264_slicetype_decide(),x264_slicetype_analyse()和x264_slicetype_frame_cost()等函数。经过一些列分析之后,最终确定了帧类型信息,并且将帧放入frames.current[]队列。x264_frame_shift():从frames.current[]队列取出1帧用于编码。x264_reference_update():更新参考帧列表。x264_reference_reset():如果为IDR帧,调用该函数清空参考帧列表。x264_reference_hierarchy_reset():如果是I(非IDR帧)、P帧、B帧(可做为参考帧),调用该函数。x264_reference_build_list():创建参考帧列表list0和list1。x264_ratecontrol_start():开启码率控制。x264_slice_init():创建 Slice Header。x264_slices_write():编码数据(最关键的步骤)。其中调用了x264_slice_write()完成了编码的工作(注意“x264_slices_write()”和“x264_slice_write()”名字差了一个“s”)。x264_encoder_frame_end():编码结束后做一些后续处理,例如记录一些统计信息。其中调用了x264_frame_push_unused()将fenc重新放回frames.unused[]队列,并且调用x264_ratecontrol_end()关闭码率控制。

x264_encoder_close()用于关闭解码器,同时输出一些统计信息。它调用了下面的函数:

x264_lookahead_delete():释放Lookahead相关的变量。x264_ratecontrol_summary():汇总码率控制信息。x264_ratecontrol_delete():关闭码率控制。

本文将会记录x264_encoder_open(),x264_encoder_headers(),和x264_encoder_close()这三个函数的源代码。下一篇文章记录x264_encoder_encode()函数。

x264_encoder_open()

x264_encoder_open()是一个libx264的API。该函数用于打开编码器,其中初始化了libx264编码所需要的各种变量。该函数的声明如下所示。

- /* x264_encoder_open:

- * create a new encoder handler, all parameters from x264_param_t are copied */

- x264_t *x264_encoder_open( x264_param_t * );

- /****************************************************************************

- * x264_encoder_open:

- * 注释和处理:雷霄骅

- * http://blog.csdn.net/leixiaohua1020

- * leixiaohua1020@126.com

- ****************************************************************************/

- //打开编码器

- x264_t *x264_encoder_open( x264_param_t *param )

- {

- x264_t *h;

- char buf[1000], *p;

- int qp, i_slicetype_length;

-

- CHECKED_MALLOCZERO( h, sizeof(x264_t) );

-

- /* Create a copy of param */

- //将参数拷贝进来

- memcpy( &h->param, param, sizeof(x264_param_t) );

-

- if( param->param_free )

- param->param_free( param );

-

- if( x264_threading_init() )

- {

- x264_log( h, X264_LOG_ERROR, "unable to initialize threading\n" );

- goto fail;

- }

- //检查输入参数

- if( x264_validate_parameters( h, 1 ) < 0 )

- goto fail;

-

- if( h->param.psz_cqm_file )

- if( x264_cqm_parse_file( h, h->param.psz_cqm_file ) < 0 )

- goto fail;

-

- if( h->param.rc.psz_stat_out )

- h->param.rc.psz_stat_out = strdup( h->param.rc.psz_stat_out );

- if( h->param.rc.psz_stat_in )

- h->param.rc.psz_stat_in = strdup( h->param.rc.psz_stat_in );

-

- x264_reduce_fraction( &h->param.i_fps_num, &h->param.i_fps_den );

- x264_reduce_fraction( &h->param.i_timebase_num, &h->param.i_timebase_den );

-

- /* Init x264_t */

- h->i_frame = -1;

- h->i_frame_num = 0;

-

- if( h->param.i_avcintra_class )

- h->i_idr_pic_id = 5;

- else

- h->i_idr_pic_id = 0;

-

- if( (uint64_t)h->param.i_timebase_den * 2 > UINT32_MAX )

- {

- x264_log( h, X264_LOG_ERROR, "Effective timebase denominator %u exceeds H.264 maximum\n", h->param.i_timebase_den );

- goto fail;

- }

-

- x264_set_aspect_ratio( h, &h->param, 1 );

- //初始化SPS和PPS

- x264_sps_init( h->sps, h->param.i_sps_id, &h->param );

- x264_pps_init( h->pps, h->param.i_sps_id, &h->param, h->sps );

- //检查级Level-通过宏块个数等等

- x264_validate_levels( h, 1 );

-

- h->chroma_qp_table = i_chroma_qp_table + 12 + h->pps->i_chroma_qp_index_offset;

-

- if( x264_cqm_init( h ) < 0 )

- goto fail;

- //各种赋值

- h->mb.i_mb_width = h->sps->i_mb_width;

- h->mb.i_mb_height = h->sps->i_mb_height;

- h->mb.i_mb_count = h->mb.i_mb_width * h->mb.i_mb_height;

-

- h->mb.chroma_h_shift = CHROMA_FORMAT == CHROMA_420 || CHROMA_FORMAT == CHROMA_422;

- h->mb.chroma_v_shift = CHROMA_FORMAT == CHROMA_420;

-

- /* Adaptive MBAFF and subme 0 are not supported as we require halving motion

- * vectors during prediction, resulting in hpel mvs.

- * The chosen solution is to make MBAFF non-adaptive in this case. */

- h->mb.b_adaptive_mbaff = PARAM_INTERLACED && h->param.analyse.i_subpel_refine;

-

- /* Init frames. */

- if( h->param.i_bframe_adaptive == X264_B_ADAPT_TRELLIS && !h->param.rc.b_stat_read )

- h->frames.i_delay = X264_MAX(h->param.i_bframe,3)*4;

- else

- h->frames.i_delay = h->param.i_bframe;

- if( h->param.rc.b_mb_tree || h->param.rc.i_vbv_buffer_size )

- h->frames.i_delay = X264_MAX( h->frames.i_delay, h->param.rc.i_lookahead );

- i_slicetype_length = h->frames.i_delay;

- h->frames.i_delay += h->i_thread_frames - 1;

- h->frames.i_delay += h->param.i_sync_lookahead;

- h->frames.i_delay += h->param.b_vfr_input;

- h->frames.i_bframe_delay = h->param.i_bframe ? (h->param.i_bframe_pyramid ? 2 : 1) : 0;

-

- h->frames.i_max_ref0 = h->param.i_frame_reference;

- h->frames.i_max_ref1 = X264_MIN( h->sps->vui.i_num_reorder_frames, h->param.i_frame_reference );

- h->frames.i_max_dpb = h->sps->vui.i_max_dec_frame_buffering;

- h->frames.b_have_lowres = !h->param.rc.b_stat_read

- && ( h->param.rc.i_rc_method == X264_RC_ABR

- || h->param.rc.i_rc_method == X264_RC_CRF

- || h->param.i_bframe_adaptive

- || h->param.i_scenecut_threshold

- || h->param.rc.b_mb_tree

- || h->param.analyse.i_weighted_pred );

- h->frames.b_have_lowres |= h->param.rc.b_stat_read && h->param.rc.i_vbv_buffer_size > 0;

- h->frames.b_have_sub8x8_esa = !!(h->param.analyse.inter & X264_ANALYSE_PSUB8x8);

-

- h->frames.i_last_idr =

- h->frames.i_last_keyframe = - h->param.i_keyint_max;

- h->frames.i_input = 0;

- h->frames.i_largest_pts = h->frames.i_second_largest_pts = -1;

- h->frames.i_poc_last_open_gop = -1;

- //CHECKED_MALLOCZERO(var, size)

- //调用malloc()分配内存,然后调用memset()置零

- CHECKED_MALLOCZERO( h->frames.unused[0], (h->frames.i_delay + 3) * sizeof(x264_frame_t *) );

- /* Allocate room for max refs plus a few extra just in case. */

- CHECKED_MALLOCZERO( h->frames.unused[1], (h->i_thread_frames + X264_REF_MAX + 4) * sizeof(x264_frame_t *) );

- CHECKED_MALLOCZERO( h->frames.current, (h->param.i_sync_lookahead + h->param.i_bframe

- + h->i_thread_frames + 3) * sizeof(x264_frame_t *) );

- if( h->param.analyse.i_weighted_pred > 0 )

- CHECKED_MALLOCZERO( h->frames.blank_unused, h->i_thread_frames * 4 * sizeof(x264_frame_t *) );

- h->i_ref[0] = h->i_ref[1] = 0;

- h->i_cpb_delay = h->i_coded_fields = h->i_disp_fields = 0;

- h->i_prev_duration = ((uint64_t)h->param.i_fps_den * h->sps->vui.i_time_scale) / ((uint64_t)h->param.i_fps_num * h->sps->vui.i_num_units_in_tick);

- h->i_disp_fields_last_frame = -1;

- //RDO初始化

- x264_rdo_init();

-

- /* init CPU functions */

- //初始化包含汇编优化的函数

- //帧内预测

- x264_predict_16x16_init( h->param.cpu, h->predict_16x16 );

- x264_predict_8x8c_init( h->param.cpu, h->predict_8x8c );

- x264_predict_8x16c_init( h->param.cpu, h->predict_8x16c );

- x264_predict_8x8_init( h->param.cpu, h->predict_8x8, &h->predict_8x8_filter );

- x264_predict_4x4_init( h->param.cpu, h->predict_4x4 );

- //SAD等和像素计算有关的函数

- x264_pixel_init( h->param.cpu, &h->pixf );

- //DCT

- x264_dct_init( h->param.cpu, &h->dctf );

- //“之”字扫描

- x264_zigzag_init( h->param.cpu, &h->zigzagf_progressive, &h->zigzagf_interlaced );

- memcpy( &h->zigzagf, PARAM_INTERLACED ? &h->zigzagf_interlaced : &h->zigzagf_progressive, sizeof(h->zigzagf) );

- //运动补偿

- x264_mc_init( h->param.cpu, &h->mc, h->param.b_cpu_independent );

- //量化

- x264_quant_init( h, h->param.cpu, &h->quantf );

- //去块效应滤波

- x264_deblock_init( h->param.cpu, &h->loopf, PARAM_INTERLACED );

- x264_bitstream_init( h->param.cpu, &h->bsf );

- //初始化CABAC或者是CAVLC

- if( h->param.b_cabac )

- x264_cabac_init( h );

- else

- x264_stack_align( x264_cavlc_init, h );

-

- //决定了像素比较的时候用SAD还是SATD

- mbcmp_init( h );

- chroma_dsp_init( h );

- //CPU属性

- p = buf + sprintf( buf, "using cpu capabilities:" );

- for( int i = 0; x264_cpu_names[i].flags; i++ )

- {

- if( !strcmp(x264_cpu_names[i].name, "SSE")

- && h->param.cpu & (X264_CPU_SSE2) )

- continue;

- if( !strcmp(x264_cpu_names[i].name, "SSE2")

- && h->param.cpu & (X264_CPU_SSE2_IS_FAST|X264_CPU_SSE2_IS_SLOW) )

- continue;

- if( !strcmp(x264_cpu_names[i].name, "SSE3")

- && (h->param.cpu & X264_CPU_SSSE3 || !(h->param.cpu & X264_CPU_CACHELINE_64)) )

- continue;

- if( !strcmp(x264_cpu_names[i].name, "SSE4.1")

- && (h->param.cpu & X264_CPU_SSE42) )

- continue;

- if( !strcmp(x264_cpu_names[i].name, "BMI1")

- && (h->param.cpu & X264_CPU_BMI2) )

- continue;

- if( (h->param.cpu & x264_cpu_names[i].flags) == x264_cpu_names[i].flags

- && (!i || x264_cpu_names[i].flags != x264_cpu_names[i-1].flags) )

- p += sprintf( p, " %s", x264_cpu_names[i].name );

- }

- if( !h->param.cpu )

- p += sprintf( p, " none!" );

- x264_log( h, X264_LOG_INFO, "%s\n", buf );

-

- float *logs = x264_analyse_prepare_costs( h );

- if( !logs )

- goto fail;

- for( qp = X264_MIN( h->param.rc.i_qp_min, QP_MAX_SPEC ); qp <= h->param.rc.i_qp_max; qp++ )

- if( x264_analyse_init_costs( h, logs, qp ) )

- goto fail;

- if( x264_analyse_init_costs( h, logs, X264_LOOKAHEAD_QP ) )

- goto fail;

- x264_free( logs );

-

- static const uint16_t cost_mv_correct[7] = { 24, 47, 95, 189, 379, 757, 1515 };

- /* Checks for known miscompilation issues. */

- if( h->cost_mv[X264_LOOKAHEAD_QP][2013] != cost_mv_correct[BIT_DEPTH-8] )

- {

- x264_log( h, X264_LOG_ERROR, "MV cost test failed: x264 has been miscompiled!\n" );

- goto fail;

- }

-

- /* Must be volatile or else GCC will optimize it out. */

- volatile int temp = 392;

- if( x264_clz( temp ) != 23 )

- {

- x264_log( h, X264_LOG_ERROR, "CLZ test failed: x264 has been miscompiled!\n" );

- #if ARCH_X86 || ARCH_X86_64

- x264_log( h, X264_LOG_ERROR, "Are you attempting to run an SSE4a/LZCNT-targeted build on a CPU that\n" );

- x264_log( h, X264_LOG_ERROR, "doesn't support it?\n" );

- #endif

- goto fail;

- }

-

- h->out.i_nal = 0;

- h->out.i_bitstream = X264_MAX( 1000000, h->param.i_width * h->param.i_height * 4

- * ( h->param.rc.i_rc_method == X264_RC_ABR ? pow( 0.95, h->param.rc.i_qp_min )

- : pow( 0.95, h->param.rc.i_qp_constant ) * X264_MAX( 1, h->param.rc.f_ip_factor )));

-

- h->nal_buffer_size = h->out.i_bitstream * 3/2 + 4 + 64; /* +4 for startcode, +64 for nal_escape assembly padding */

- CHECKED_MALLOC( h->nal_buffer, h->nal_buffer_size );

-

- CHECKED_MALLOC( h->reconfig_h, sizeof(x264_t) );

-

- if( h->param.i_threads > 1 &&

- x264_threadpool_init( &h->threadpool, h->param.i_threads, (void*)x264_encoder_thread_init, h ) )

- goto fail;

- if( h->param.i_lookahead_threads > 1 &&

- x264_threadpool_init( &h->lookaheadpool, h->param.i_lookahead_threads, NULL, NULL ) )

- goto fail;

-

- #if HAVE_OPENCL

- if( h->param.b_opencl )

- {

- h->opencl.ocl = x264_opencl_load_library();

- if( !h->opencl.ocl )

- {

- x264_log( h, X264_LOG_WARNING, "failed to load OpenCL\n" );

- h->param.b_opencl = 0;

- }

- }

- #endif

-

- h->thread[0] = h;

- for( int i = 1; i < h->param.i_threads + !!h->param.i_sync_lookahead; i++ )

- CHECKED_MALLOC( h->thread[i], sizeof(x264_t) );

- if( h->param.i_lookahead_threads > 1 )

- for( int i = 0; i < h->param.i_lookahead_threads; i++ )

- {

- CHECKED_MALLOC( h->lookahead_thread[i], sizeof(x264_t) );

- *h->lookahead_thread[i] = *h;

- }

- *h->reconfig_h = *h;

-

- for( int i = 0; i < h->param.i_threads; i++ )

- {

- int init_nal_count = h->param.i_slice_count + 3;

- int allocate_threadlocal_data = !h->param.b_sliced_threads || !i;

- if( i > 0 )

- *h->thread[i] = *h;

-

- if( x264_pthread_mutex_init( &h->thread[i]->mutex, NULL ) )

- goto fail;

- if( x264_pthread_cond_init( &h->thread[i]->cv, NULL ) )

- goto fail;

-

- if( allocate_threadlocal_data )

- {

- h->thread[i]->fdec = x264_frame_pop_unused( h, 1 );

- if( !h->thread[i]->fdec )

- goto fail;

- }

- else

- h->thread[i]->fdec = h->thread[0]->fdec;

-

- CHECKED_MALLOC( h->thread[i]->out.p_bitstream, h->out.i_bitstream );

- /* Start each thread with room for init_nal_count NAL units; it'll realloc later if needed. */

- CHECKED_MALLOC( h->thread[i]->out.nal, init_nal_count*sizeof(x264_nal_t) );

- h->thread[i]->out.i_nals_allocated = init_nal_count;

-

- if( allocate_threadlocal_data && x264_macroblock_cache_allocate( h->thread[i] ) < 0 )

- goto fail;

- }

-

- #if HAVE_OPENCL

- if( h->param.b_opencl && x264_opencl_lookahead_init( h ) < 0 )

- h->param.b_opencl = 0;

- #endif

- //初始化lookahead

- if( x264_lookahead_init( h, i_slicetype_length ) )

- goto fail;

-

- for( int i = 0; i < h->param.i_threads; i++ )

- if( x264_macroblock_thread_allocate( h->thread[i], 0 ) < 0 )

- goto fail;

- //创建码率控制

- if( x264_ratecontrol_new( h ) < 0 )

- goto fail;

-

- if( h->param.i_nal_hrd )

- {

- x264_log( h, X264_LOG_DEBUG, "HRD bitrate: %i bits/sec\n", h->sps->vui.hrd.i_bit_rate_unscaled );

- x264_log( h, X264_LOG_DEBUG, "CPB size: %i bits\n", h->sps->vui.hrd.i_cpb_size_unscaled );

- }

-

- if( h->param.psz_dump_yuv )

- {

- /* create or truncate the reconstructed video file */

- FILE *f = x264_fopen( h->param.psz_dump_yuv, "w" );

- if( !f )

- {

- x264_log( h, X264_LOG_ERROR, "dump_yuv: can't write to %s\n", h->param.psz_dump_yuv );

- goto fail;

- }

- else if( !x264_is_regular_file( f ) )

- {

- x264_log( h, X264_LOG_ERROR, "dump_yuv: incompatible with non-regular file %s\n", h->param.psz_dump_yuv );

- goto fail;

- }

- fclose( f );

- }

- //这写法......

- const char *profile = h->sps->i_profile_idc == PROFILE_BASELINE ? "Constrained Baseline" :

- h->sps->i_profile_idc == PROFILE_MAIN ? "Main" :

- h->sps->i_profile_idc == PROFILE_HIGH ? "High" :

- h->sps->i_profile_idc == PROFILE_HIGH10 ? (h->sps->b_constraint_set3 == 1 ? "High 10 Intra" : "High 10") :

- h->sps->i_profile_idc == PROFILE_HIGH422 ? (h->sps->b_constraint_set3 == 1 ? "High 4:2:2 Intra" : "High 4:2:2") :

- h->sps->b_constraint_set3 == 1 ? "High 4:4:4 Intra" : "High 4:4:4 Predictive";

- char level[4];

- snprintf( level, sizeof(level), "%d.%d", h->sps->i_level_idc/10, h->sps->i_level_idc%10 );

- if( h->sps->i_level_idc == 9 || ( h->sps->i_level_idc == 11 && h->sps->b_constraint_set3 &&

- (h->sps->i_profile_idc == PROFILE_BASELINE || h->sps->i_profile_idc == PROFILE_MAIN) ) )

- strcpy( level, "1b" );

- //输出型和级

- if( h->sps->i_profile_idc < PROFILE_HIGH10 )

- {

- x264_log( h, X264_LOG_INFO, "profile %s, level %s\n",

- profile, level );

- }

- else

- {

- static const char * const subsampling[4] = { "4:0:0", "4:2:0", "4:2:2", "4:4:4" };

- x264_log( h, X264_LOG_INFO, "profile %s, level %s, %s %d-bit\n",

- profile, level, subsampling[CHROMA_FORMAT], BIT_DEPTH );

- }

-

- return h;

- fail:

- //释放

- x264_free( h );

- return NULL;

- }

由于源代码中已经做了比较详细的注释,在这里就不重复叙述了。下面根据函数调用的顺序,看一下x264_encoder_open()调用的下面几个函数:

x264_sps_init():根据输入参数生成H.264码流的SPS信息。

x264_pps_init():根据输入参数生成H.264码流的PPS信息。

x264_predict_16x16_init():初始化Intra16x16帧内预测汇编函数。

x264_predict_4x4_init():初始化Intra4x4帧内预测汇编函数。

x264_pixel_init():初始化像素值计算相关的汇编函数(包括SAD、SATD、SSD等)。

x264_dct_init():初始化DCT变换和DCT反变换相关的汇编函数。

x264_mc_init():初始化运动补偿相关的汇编函数。

x264_quant_init():初始化量化和反量化相关的汇编函数。

x264_deblock_init():初始化去块效应滤波器相关的汇编函数。

mbcmp_init():决定像素比较的时候使用SAD还是SATD。

x264_sps_init()

x264_sps_init()根据输入参数生成H.264码流的SPS (Sequence Parameter Set,序列参数集)信息。该函数的定义位于encoder\set.c,如下所示。- //初始化SPS

- void x264_sps_init( x264_sps_t *sps, int i_id, x264_param_t *param )

- {

- int csp = param->i_csp & X264_CSP_MASK;

-

- sps->i_id = i_id;

- //以宏块为单位的宽度

- sps->i_mb_width = ( param->i_width + 15 ) / 16;

- //以宏块为单位的高度

- sps->i_mb_height= ( param->i_height + 15 ) / 16;

- //色度取样格式

- sps->i_chroma_format_idc = csp >= X264_CSP_I444 ? CHROMA_444 :

- csp >= X264_CSP_I422 ? CHROMA_422 : CHROMA_420;

-

- sps->b_qpprime_y_zero_transform_bypass = param->rc.i_rc_method == X264_RC_CQP && param->rc.i_qp_constant == 0;

- //型profile

- if( sps->b_qpprime_y_zero_transform_bypass || sps->i_chroma_format_idc == CHROMA_444 )

- sps->i_profile_idc = PROFILE_HIGH444_PREDICTIVE;//YUV444的时候

- else if( sps->i_chroma_format_idc == CHROMA_422 )

- sps->i_profile_idc = PROFILE_HIGH422;

- else if( BIT_DEPTH > 8 )

- sps->i_profile_idc = PROFILE_HIGH10;

- else if( param->analyse.b_transform_8x8 || param->i_cqm_preset != X264_CQM_FLAT )

- sps->i_profile_idc = PROFILE_HIGH;//高型 High Profile 目前最常见

- else if( param->b_cabac || param->i_bframe > 0 || param->b_interlaced || param->b_fake_interlaced || param->analyse.i_weighted_pred > 0 )

- sps->i_profile_idc = PROFILE_MAIN;//主型

- else

- sps->i_profile_idc = PROFILE_BASELINE;//基本型

-

- sps->b_constraint_set0 = sps->i_profile_idc == PROFILE_BASELINE;

- /* x264 doesn't support the features that are in Baseline and not in Main,

- * namely arbitrary_slice_order and slice_groups. */

- sps->b_constraint_set1 = sps->i_profile_idc <= PROFILE_MAIN;

- /* Never set constraint_set2, it is not necessary and not used in real world. */

- sps->b_constraint_set2 = 0;

- sps->b_constraint_set3 = 0;

- //级level

- sps->i_level_idc = param->i_level_idc;

- if( param->i_level_idc == 9 && ( sps->i_profile_idc == PROFILE_BASELINE || sps->i_profile_idc == PROFILE_MAIN ) )

- {

- sps->b_constraint_set3 = 1; /* level 1b with Baseline or Main profile is signalled via constraint_set3 */

- sps->i_level_idc = 11;

- }

- /* Intra profiles */

- if( param->i_keyint_max == 1 && sps->i_profile_idc > PROFILE_HIGH )

- sps->b_constraint_set3 = 1;

-

- sps->vui.i_num_reorder_frames = param->i_bframe_pyramid ? 2 : param->i_bframe ? 1 : 0;

- /* extra slot with pyramid so that we don't have to override the

- * order of forgetting old pictures */

- //参考帧数量

- sps->vui.i_max_dec_frame_buffering =

- sps->i_num_ref_frames = X264_MIN(X264_REF_MAX, X264_MAX4(param->i_frame_reference, 1 + sps->vui.i_num_reorder_frames,

- param->i_bframe_pyramid ? 4 : 1, param->i_dpb_size));

- sps->i_num_ref_frames -= param->i_bframe_pyramid == X264_B_PYRAMID_STRICT;

- if( param->i_keyint_max == 1 )

- {

- sps->i_num_ref_frames = 0;

- sps->vui.i_max_dec_frame_buffering = 0;

- }

-

- /* number of refs + current frame */

- int max_frame_num = sps->vui.i_max_dec_frame_buffering * (!!param->i_bframe_pyramid+1) + 1;

- /* Intra refresh cannot write a recovery time greater than max frame num-1 */

- if( param->b_intra_refresh )

- {

- int time_to_recovery = X264_MIN( sps->i_mb_width - 1, param->i_keyint_max ) + param->i_bframe - 1;

- max_frame_num = X264_MAX( max_frame_num, time_to_recovery+1 );

- }

-

- sps->i_log2_max_frame_num = 4;

- while( (1 << sps->i_log2_max_frame_num) <= max_frame_num )

- sps->i_log2_max_frame_num++;

- //POC类型

- sps->i_poc_type = param->i_bframe || param->b_interlaced ? 0 : 2;

- if( sps->i_poc_type == 0 )

- {

- int max_delta_poc = (param->i_bframe + 2) * (!!param->i_bframe_pyramid + 1) * 2;

- sps->i_log2_max_poc_lsb = 4;

- while( (1 << sps->i_log2_max_poc_lsb) <= max_delta_poc * 2 )

- sps->i_log2_max_poc_lsb++;

- }

-

- sps->b_vui = 1;

-

- sps->b_gaps_in_frame_num_value_allowed = 0;

- sps->b_frame_mbs_only = !(param->b_interlaced || param->b_fake_interlaced);

- if( !sps->b_frame_mbs_only )

- sps->i_mb_height = ( sps->i_mb_height + 1 ) & ~1;

- sps->b_mb_adaptive_frame_field = param->b_interlaced;

- sps->b_direct8x8_inference = 1;

-

- sps->crop.i_left = param->crop_rect.i_left;

- sps->crop.i_top = param->crop_rect.i_top;

- sps->crop.i_right = param->crop_rect.i_right + sps->i_mb_width*16 - param->i_width;

- sps->crop.i_bottom = (param->crop_rect.i_bottom + sps->i_mb_height*16 - param->i_height) >> !sps->b_frame_mbs_only;

- sps->b_crop = sps->crop.i_left || sps->crop.i_top ||

- sps->crop.i_right || sps->crop.i_bottom;

-

- sps->vui.b_aspect_ratio_info_present = 0;

- if( param->vui.i_sar_width > 0 && param->vui.i_sar_height > 0 )

- {

- sps->vui.b_aspect_ratio_info_present = 1;

- sps->vui.i_sar_width = param->vui.i_sar_width;

- sps->vui.i_sar_height= param->vui.i_sar_height;

- }

-

- sps->vui.b_overscan_info_present = param->vui.i_overscan > 0 && param->vui.i_overscan <= 2;

- if( sps->vui.b_overscan_info_present )

- sps->vui.b_overscan_info = ( param->vui.i_overscan == 2 ? 1 : 0 );

-

- sps->vui.b_signal_type_present = 0;

- sps->vui.i_vidformat = ( param->vui.i_vidformat >= 0 && param->vui.i_vidformat <= 5 ? param->vui.i_vidformat : 5 );

- sps->vui.b_fullrange = ( param->vui.b_fullrange >= 0 && param->vui.b_fullrange <= 1 ? param->vui.b_fullrange :

- ( csp >= X264_CSP_BGR ? 1 : 0 ) );

- sps->vui.b_color_description_present = 0;

-

- sps->vui.i_colorprim = ( param->vui.i_colorprim >= 0 && param->vui.i_colorprim <= 9 ? param->vui.i_colorprim : 2 );

- sps->vui.i_transfer = ( param->vui.i_transfer >= 0 && param->vui.i_transfer <= 15 ? param->vui.i_transfer : 2 );

- sps->vui.i_colmatrix = ( param->vui.i_colmatrix >= 0 && param->vui.i_colmatrix <= 10 ? param->vui.i_colmatrix :

- ( csp >= X264_CSP_BGR ? 0 : 2 ) );

- if( sps->vui.i_colorprim != 2 ||

- sps->vui.i_transfer != 2 ||

- sps->vui.i_colmatrix != 2 )

- {

- sps->vui.b_color_description_present = 1;

- }

-

- if( sps->vui.i_vidformat != 5 ||

- sps->vui.b_fullrange ||

- sps->vui.b_color_description_present )

- {

- sps->vui.b_signal_type_present = 1;

- }

-

- /* FIXME: not sufficient for interlaced video */

- sps->vui.b_chroma_loc_info_present = param->vui.i_chroma_loc > 0 && param->vui.i_chroma_loc <= 5 &&

- sps->i_chroma_format_idc == CHROMA_420;

- if( sps->vui.b_chroma_loc_info_present )

- {

- sps->vui.i_chroma_loc_top = param->vui.i_chroma_loc;

- sps->vui.i_chroma_loc_bottom = param->vui.i_chroma_loc;

- }

-

- sps->vui.b_timing_info_present = param->i_timebase_num > 0 && param->i_timebase_den > 0;

-

- if( sps->vui.b_timing_info_present )

- {

- sps->vui.i_num_units_in_tick = param->i_timebase_num;

- sps->vui.i_time_scale = param->i_timebase_den * 2;

- sps->vui.b_fixed_frame_rate = !param->b_vfr_input;

- }

-

- sps->vui.b_vcl_hrd_parameters_present = 0; // we don't support VCL HRD

- sps->vui.b_nal_hrd_parameters_present = !!param->i_nal_hrd;

- sps->vui.b_pic_struct_present = param->b_pic_struct;

-

- // NOTE: HRD related parts of the SPS are initialised in x264_ratecontrol_init_reconfigurable

-

- sps->vui.b_bitstream_restriction = param->i_keyint_max > 1;

- if( sps->vui.b_bitstream_restriction )

- {

- sps->vui.b_motion_vectors_over_pic_boundaries = 1;

- sps->vui.i_max_bytes_per_pic_denom = 0;

- sps->vui.i_max_bits_per_mb_denom = 0;

- sps->vui.i_log2_max_mv_length_horizontal =

- sps->vui.i_log2_max_mv_length_vertical = (int)log2f( X264_MAX( 1, param->analyse.i_mv_range*4-1 ) ) + 1;

- }

- }

从源代码可以看出,x264_sps_init()根据输入参数集x264_param_t中的信息,初始化了SPS结构体中的成员变量。有关这些成员变量的具体信息,可以参考《H.264标准》。

x264_pps_init()

x264_pps_init()根据输入参数生成H.264码流的PPS(Picture Parameter Set,图像参数集)信息。该函数的定义位于encoder\set.c,如下所示。- //初始化PPS

- void x264_pps_init( x264_pps_t *pps, int i_id, x264_param_t *param, x264_sps_t *sps )

- {

- pps->i_id = i_id;

- //所属的SPS

- pps->i_sps_id = sps->i_id;

- //是否使用CABAC?

- pps->b_cabac = param->b_cabac;

-

- pps->b_pic_order = !param->i_avcintra_class && param->b_interlaced;

- pps->i_num_slice_groups = 1;

- //目前参考帧队列的长度

- //注意是这个队列中当前实际的、已存在的参考帧数目,这从它的名字“active”中也可以看出来。

- pps->i_num_ref_idx_l0_default_active = param->i_frame_reference;

- pps->i_num_ref_idx_l1_default_active = 1;

- //加权预测

- pps->b_weighted_pred = param->analyse.i_weighted_pred > 0;

- pps->b_weighted_bipred = param->analyse.b_weighted_bipred ? 2 : 0;

- //量化参数QP的初始值

- pps->i_pic_init_qp = param->rc.i_rc_method == X264_RC_ABR || param->b_stitchable ? 26 + QP_BD_OFFSET : SPEC_QP( param->rc.i_qp_constant );

- pps->i_pic_init_qs = 26 + QP_BD_OFFSET;

-

- pps->i_chroma_qp_index_offset = param->analyse.i_chroma_qp_offset;

- pps->b_deblocking_filter_control = 1;

- pps->b_constrained_intra_pred = param->b_constrained_intra;

- pps->b_redundant_pic_cnt = 0;

-

- pps->b_transform_8x8_mode = param->analyse.b_transform_8x8 ? 1 : 0;

-

- pps->i_cqm_preset = param->i_cqm_preset;

-

- switch( pps->i_cqm_preset )

- {

- case X264_CQM_FLAT:

- for( int i = 0; i < 8; i++ )

- pps->scaling_list[i] = x264_cqm_flat16;

- break;

- case X264_CQM_JVT:

- for( int i = 0; i < 8; i++ )

- pps->scaling_list[i] = x264_cqm_jvt[i];

- break;

- case X264_CQM_CUSTOM:

- /* match the transposed DCT & zigzag */

- transpose( param->cqm_4iy, 4 );

- transpose( param->cqm_4py, 4 );

- transpose( param->cqm_4ic, 4 );

- transpose( param->cqm_4pc, 4 );

- transpose( param->cqm_8iy, 8 );

- transpose( param->cqm_8py, 8 );

- transpose( param->cqm_8ic, 8 );

- transpose( param->cqm_8pc, 8 );

- pps->scaling_list[CQM_4IY] = param->cqm_4iy;

- pps->scaling_list[CQM_4PY] = param->cqm_4py;

- pps->scaling_list[CQM_4IC] = param->cqm_4ic;

- pps->scaling_list[CQM_4PC] = param->cqm_4pc;

- pps->scaling_list[CQM_8IY+4] = param->cqm_8iy;

- pps->scaling_list[CQM_8PY+4] = param->cqm_8py;

- pps->scaling_list[CQM_8IC+4] = param->cqm_8ic;

- pps->scaling_list[CQM_8PC+4] = param->cqm_8pc;

- for( int i = 0; i < 8; i++ )

- for( int j = 0; j < (i < 4 ? 16 : 64); j++ )

- if( pps->scaling_list[i][j] == 0 )

- pps->scaling_list[i] = x264_cqm_jvt[i];

- break;

- }

- }

从源代码可以看出,x264_pps_init()根据输入参数集x264_param_t中的信息,初始化了PPS结构体中的成员变量。有关这些成员变量的具体信息,可以参考《H.264标准》。

x264_predict_16x16_init()

x264_predict_16x16_init()用于初始化Intra16x16帧内预测汇编函数。该函数的定义位于x264\common\predict.c,如下所示。- //Intra16x16帧内预测汇编函数初始化

- void x264_predict_16x16_init( int cpu, x264_predict_t pf[7] )

- {

- //C语言版本

- //================================================

- //垂直 Vertical

- pf[I_PRED_16x16_V ] = x264_predict_16x16_v_c;

- //水平 Horizontal

- pf[I_PRED_16x16_H ] = x264_predict_16x16_h_c;

- //DC

- pf[I_PRED_16x16_DC] = x264_predict_16x16_dc_c;

- //Plane

- pf[I_PRED_16x16_P ] = x264_predict_16x16_p_c;

- //这几种是啥?

- pf[I_PRED_16x16_DC_LEFT]= x264_predict_16x16_dc_left_c;

- pf[I_PRED_16x16_DC_TOP ]= x264_predict_16x16_dc_top_c;

- pf[I_PRED_16x16_DC_128 ]= x264_predict_16x16_dc_128_c;

- //================================================

- //MMX版本

- #if HAVE_MMX

- x264_predict_16x16_init_mmx( cpu, pf );

- #endif

- //ALTIVEC版本

- #if HAVE_ALTIVEC

- if( cpu&X264_CPU_ALTIVEC )

- x264_predict_16x16_init_altivec( pf );

- #endif

- //ARMV6版本

- #if HAVE_ARMV6

- x264_predict_16x16_init_arm( cpu, pf );

- #endif

- //AARCH64版本

- #if ARCH_AARCH64

- x264_predict_16x16_init_aarch64( cpu, pf );

- #endif

- }

从源代码可看出,x264_predict_16x16_init()首先对帧内预测函数指针数组x264_predict_t[]中的元素赋值了C语言版本的函数x264_predict_16x16_v_c(),x264_predict_16x16_h_c(),x264_predict_16x16_dc_c(),x264_predict_16x16_p_c();然后会判断系统平台的特性,如果平台支持的话,会调用x264_predict_16x16_init_mmx(),x264_predict_16x16_init_arm()等给x264_predict_t[]中的元素赋值经过汇编优化的函数。下文将会简单看几个其中的函数。

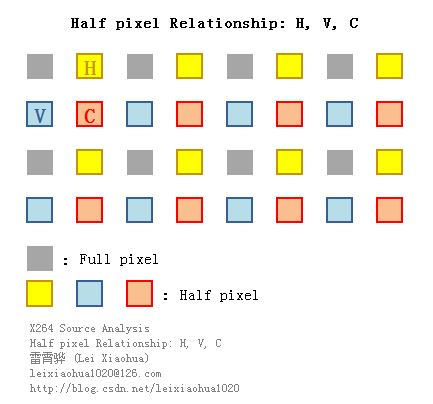

相关知识简述

简单记录一下帧内预测的方法。帧内预测根据宏块左边和上边的边界像素值推算宏块内部的像素值,帧内预测的效果如下图所示。其中左边的图为图像原始画面,右边的图为经过帧内预测后没有叠加残差的画面。

模式 | 描述 |

Vertical | 由上边像素推出相应像素值 |

Horizontal | 由左边像素推出相应像素值 |

DC | 由上边和左边像素平均值推出相应像素值 |

Plane | 由上边和左边像素推出相应像素值 |

4x4帧内预测模式一共有9种,如下图所示。

x264_predict_16x16_v_c()

x264_predict_16x16_v_c()实现了Intra16x16的Vertical预测模式。该函数的定义位于common\predict.c,如下所示。- //16x16帧内预测

- //垂直预测(Vertical)

- void x264_predict_16x16_v_c( pixel *src )

- {

- /*

- * Vertical预测方式

- * |X1 X2 X3 X4

- * --+-----------

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- *

- */

- /*

- * 【展开宏定义】

- * uint32_t v0 = ((x264_union32_t*)(&src[ 0-FDEC_STRIDE]))->i;

- * uint32_t v1 = ((x264_union32_t*)(&src[ 4-FDEC_STRIDE]))->i;

- * uint32_t v2 = ((x264_union32_t*)(&src[ 8-FDEC_STRIDE]))->i;

- * uint32_t v3 = ((x264_union32_t*)(&src[12-FDEC_STRIDE]))->i;

- * 在这里,上述代码实际上相当于:

- * uint32_t v0 = *((uint32_t*)(&src[ 0-FDEC_STRIDE]));

- * uint32_t v1 = *((uint32_t*)(&src[ 4-FDEC_STRIDE]));

- * uint32_t v2 = *((uint32_t*)(&src[ 8-FDEC_STRIDE]));

- * uint32_t v3 = *((uint32_t*)(&src[12-FDEC_STRIDE]));

- * 即分成4次,每次取出4个像素(一共16个像素),分别赋值给v0,v1,v2,v3

- * 取出的值源自于16x16块上面的一行像素

- * 0| 4 8 12 16

- * || v0 | v1 | v2 | v3 |

- * ---++==========+==========+==========+==========+

- * ||

- * ||

- * ||

- * ||

- * ||

- * ||

- *

- */

- //pixel4实际上是uint32_t(占用32bit),存储4个像素的值(每个像素占用8bit)

-

- pixel4 v0 = MPIXEL_X4( &src[ 0-FDEC_STRIDE] );

- pixel4 v1 = MPIXEL_X4( &src[ 4-FDEC_STRIDE] );

- pixel4 v2 = MPIXEL_X4( &src[ 8-FDEC_STRIDE] );

- pixel4 v3 = MPIXEL_X4( &src[12-FDEC_STRIDE] );

-

- //循环赋值16行

- for( int i = 0; i < 16; i++ )

- {

- //【展开宏定义】

- //(((x264_union32_t*)(src+ 0))->i) = v0;

- //(((x264_union32_t*)(src+ 4))->i) = v1;

- //(((x264_union32_t*)(src+ 8))->i) = v2;

- //(((x264_union32_t*)(src+12))->i) = v3;

- //即分成4次,每次赋值4个像素

- //

- MPIXEL_X4( src+ 0 ) = v0;

- MPIXEL_X4( src+ 4 ) = v1;

- MPIXEL_X4( src+ 8 ) = v2;

- MPIXEL_X4( src+12 ) = v3;

- //下一行

- //FDEC_STRIDE=32,是重建宏块缓存fdec_buf一行的数据量

- src += FDEC_STRIDE;

- }

- }

从源代码可以看出,x264_predict_16x16_v_c()首先取出了16x16图像块上面一行16个像素的值存储在v0,v1,v2,v3四个变量中(每个变量存储4个像素),然后循环16次将v0,v1,v2,v3赋值给16x16图像块的16行。

看完C语言版本Intra16x16的Vertical预测模式的实现函数之后,我们可以继续看一下该预测模式汇编语言版本的实现函数。从前面的初始化函数中已经可以看出,当系统支持X86汇编的时候,会调用x264_predict_16x16_init_mmx()初始化x86汇编优化过的函数;当系统支持ARM的时候,会调用x264_predict_16x16_init_arm()初始化ARM汇编优化过的函数。x264_predict_16x16_init_mmx()

x264_predict_16x16_init_mmx()用于初始化经过x86汇编优化过的Intra16x16的帧内预测函数。该函数的定义位于common\x86\predict-c.c(在“x86”子文件夹下),如下所示。- //Intra16x16帧内预测汇编函数-MMX版本

- void x264_predict_16x16_init_mmx( int cpu, x264_predict_t pf[7] )

- {

- if( !(cpu&X264_CPU_MMX2) )

- return;

- pf[I_PRED_16x16_DC] = x264_predict_16x16_dc_mmx2;

- pf[I_PRED_16x16_DC_TOP] = x264_predict_16x16_dc_top_mmx2;

- pf[I_PRED_16x16_DC_LEFT] = x264_predict_16x16_dc_left_mmx2;

- pf[I_PRED_16x16_V] = x264_predict_16x16_v_mmx2;

- pf[I_PRED_16x16_H] = x264_predict_16x16_h_mmx2;

- #if HIGH_BIT_DEPTH

- if( !(cpu&X264_CPU_SSE) )

- return;

- pf[I_PRED_16x16_V] = x264_predict_16x16_v_sse;

- if( !(cpu&X264_CPU_SSE2) )

- return;

- pf[I_PRED_16x16_DC] = x264_predict_16x16_dc_sse2;

- pf[I_PRED_16x16_DC_TOP] = x264_predict_16x16_dc_top_sse2;

- pf[I_PRED_16x16_DC_LEFT] = x264_predict_16x16_dc_left_sse2;

- pf[I_PRED_16x16_H] = x264_predict_16x16_h_sse2;

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_sse2;

- if( !(cpu&X264_CPU_AVX) )

- return;

- pf[I_PRED_16x16_V] = x264_predict_16x16_v_avx;

- if( !(cpu&X264_CPU_AVX2) )

- return;

- pf[I_PRED_16x16_H] = x264_predict_16x16_h_avx2;

- #else

- #if !ARCH_X86_64

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_mmx2;

- #endif

- if( !(cpu&X264_CPU_SSE) )

- return;

- pf[I_PRED_16x16_V] = x264_predict_16x16_v_sse;

- if( !(cpu&X264_CPU_SSE2) )

- return;

- pf[I_PRED_16x16_DC] = x264_predict_16x16_dc_sse2;

- if( cpu&X264_CPU_SSE2_IS_SLOW )

- return;

- pf[I_PRED_16x16_DC_TOP] = x264_predict_16x16_dc_top_sse2;

- pf[I_PRED_16x16_DC_LEFT] = x264_predict_16x16_dc_left_sse2;

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_sse2;

- if( !(cpu&X264_CPU_SSSE3) )

- return;

- if( !(cpu&X264_CPU_SLOW_PSHUFB) )

- pf[I_PRED_16x16_H] = x264_predict_16x16_h_ssse3;

- #if HAVE_X86_INLINE_ASM

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_ssse3;

- #endif

- if( !(cpu&X264_CPU_AVX) )

- return;

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_avx;

- #endif // HIGH_BIT_DEPTH

-

- if( cpu&X264_CPU_AVX2 )

- {

- pf[I_PRED_16x16_P] = x264_predict_16x16_p_avx2;

- pf[I_PRED_16x16_DC] = x264_predict_16x16_dc_avx2;

- pf[I_PRED_16x16_DC_TOP] = x264_predict_16x16_dc_top_avx2;

- pf[I_PRED_16x16_DC_LEFT] = x264_predict_16x16_dc_left_avx2;

- }

- }

可以看出,针对Intra16x16的Vertical帧内预测模式,x264_predict_16x16_init_mmx()会根据系统的特型初始化2个函数:如果系统仅支持MMX指令集,就会初始化x264_predict_16x16_v_mmx2();如果系统还支持SSE指令集,就会初始化x264_predict_16x16_v_sse()。下面看一下这2个函数的代码。

x264_predict_16x16_v_mmx2()

x264_predict_16x16_v_sse()

在x264中,x264_predict_16x16_v_mmx2()和x264_predict_16x16_v_sse()这两个函数的定义是写到一起的。它们的定义位于common\x86\predict-a.asm,如下所示。;-----------------------------------------------------------------------------

; void predict_16x16_v( pixel *src )

; Intra16x16帧内预测Vertical模式

;-----------------------------------------------------------------------------

;SIZEOF_PIXEL取值为1

;FDEC_STRIDEB为重建宏块缓存fdec_buf一行像素的大小,取值为32

;

;平台相关的信息位于x86inc.asm

;INIT_MMX中

; mmsize为8

; mova为movq

;INIT_XMM中:

; mmsize为16

; mova为movdqa

;

;STORE16的定义在前面,用于循环16行存储数据

%macro PREDICT_16x16_V 0

cglobal predict_16x16_v, 1,2

%assign %%i 0

%rep 16*SIZEOF_PIXEL/mmsize ;rep循环执行,拷贝16x16块上方的1行像素数据至m0,m1...

;mmssize为指令1次处理比特数

mova m %+ %%i, [r0-FDEC_STRIDEB+%%i*mmsize] ;移入m0,m1...

%assign %%i %%i+1

%endrep

%if 16*SIZEOF_PIXEL/mmsize == 4 ;1行需要处理4次

STORE16 m0, m1, m2, m3 ;循环存储16行,每次存储4个寄存器

%elif 16*SIZEOF_PIXEL/mmsize == 2 ;1行需要处理2次

STORE16 m0, m1 ;循环存储16行,每次存储2个寄存器

%else ;1行需要处理1次

STORE16 m0 ;循环存储16行,每次存储1个寄存器

%endif

RET

%endmacro

INIT_MMX mmx2

PREDICT_16x16_V

INIT_XMM sse

PREDICT_16x16_V

从汇编代码可以看出,x264_predict_16x16_v_mmx2()和x264_predict_16x16_v_sse()的逻辑是一模一样的。它们之间的不同主要在于一条指令处理的数据量:MMX指令的MOVA对应的是MOVQ,一次处理8Byte(8个像素);SSE指令的MOVA对应的是MOVDQA,一次处理16Byte(16个像素,正好是16x16块中的一行像素)。

作为对比,我们可以看一下ARM平台下汇编优化过的Intra16x16的帧内预测函数。这些汇编函数的初始化函数是x264_predict_16x16_init_arm()。

x264_predict_16x16_init_arm()

x264_predict_16x16_init_arm()用于初始化ARM平台下汇编优化过的Intra16x16的帧内预测函数。该函数的定义位于common\arm\predict-c.c(“arm”文件夹下),如下所示。- void x264_predict_16x16_init_arm( int cpu, x264_predict_t pf[7] )

- {

- if (!(cpu&X264_CPU_NEON))

- return;

-

- #if !HIGH_BIT_DEPTH

- pf[I_PRED_16x16_DC ] = x264_predict_16x16_dc_neon;

- pf[I_PRED_16x16_DC_TOP] = x264_predict_16x16_dc_top_neon;

- pf[I_PRED_16x16_DC_LEFT]= x264_predict_16x16_dc_left_neon;

- pf[I_PRED_16x16_H ] = x264_predict_16x16_h_neon;

- pf[I_PRED_16x16_V ] = x264_predict_16x16_v_neon;

- pf[I_PRED_16x16_P ] = x264_predict_16x16_p_neon;

- #endif // !HIGH_BIT_DEPTH

- }

从源代码可以看出,针对Vertical预测模式,x264_predict_16x16_init_arm()初始化了经过NEON指令集优化的函数x264_predict_16x16_v_neon()。

x264_predict_16x16_v_neon()

x264_predict_16x16_v_neon()的定义位于common\arm\predict-a.S,如下所示。/*

* Intra16x16帧内预测Vertical模式-NEON

*

*/

/* FDEC_STRIDE=32Bytes,为重建宏块一行像素的大小 */

/* R0存储16x16像素块地址 */

function x264_predict_16x16_v_neon

sub r0, r0, #FDEC_STRIDE /* r0=r0-FDEC_STRIDE */

mov ip, #FDEC_STRIDE /* ip=32 */

/* VLD向量加载: 内存->NEON寄存器 */

/* d0,d1为64bit双字寄存器,共16Byte,在这里存储16x16块上方一行像素 */

vld1.64 {d0-d1}, [r0,:128], ip /* 将R0指向的数据从内存加载到d0和d1寄存器(64bit) */

/* r0=r0+ip */

.rept 16 /* 循环16次,一次处理1行 */

/* VST向量存储: NEON寄存器->内存 */

vst1.64 {d0-d1}, [r0,:128], ip /* 将d0和d1寄存器中的数据传递给R0指向的内存 */

/* r0=r0+ip */

.endr

bx lr /* 子程序返回 */

endfunc

可以看出,x264_predict_16x16_v_neon()使用vld1.64指令载入16x16块上方的一行像素,然后在一个16次的循环中,使用vst1.64指令将该行像素值赋值给16x16块的每一行。

至此有关Intra16x16的Vertical帧内预测方式的源代码就分析完了。后文为了简便,都只讨论C语言版本汇编函数。

x264_predict_4x4_init()

x264_predict_4x4_init()用于初始化Intra4x4帧内预测汇编函数。该函数的定义位于common\predict.c,如下所示。- //Intra4x4帧内预测汇编函数初始化

- void x264_predict_4x4_init( int cpu, x264_predict_t pf[12] )

- {

- //9种Intra4x4预测方式

- pf[I_PRED_4x4_V] = x264_predict_4x4_v_c;

- pf[I_PRED_4x4_H] = x264_predict_4x4_h_c;

- pf[I_PRED_4x4_DC] = x264_predict_4x4_dc_c;

- pf[I_PRED_4x4_DDL] = x264_predict_4x4_ddl_c;

- pf[I_PRED_4x4_DDR] = x264_predict_4x4_ddr_c;

- pf[I_PRED_4x4_VR] = x264_predict_4x4_vr_c;

- pf[I_PRED_4x4_HD] = x264_predict_4x4_hd_c;

- pf[I_PRED_4x4_VL] = x264_predict_4x4_vl_c;

- pf[I_PRED_4x4_HU] = x264_predict_4x4_hu_c;

- //这些是?

- pf[I_PRED_4x4_DC_LEFT]= x264_predict_4x4_dc_left_c;

- pf[I_PRED_4x4_DC_TOP] = x264_predict_4x4_dc_top_c;

- pf[I_PRED_4x4_DC_128] = x264_predict_4x4_dc_128_c;

-

- #if HAVE_MMX

- x264_predict_4x4_init_mmx( cpu, pf );

- #endif

-

- #if HAVE_ARMV6

- x264_predict_4x4_init_arm( cpu, pf );

- #endif

-

- #if ARCH_AARCH64

- x264_predict_4x4_init_aarch64( cpu, pf );

- #endif

- }

从源代码可看出,x264_predict_4x4_init()首先对帧内预测函数指针数组x264_predict_t[]中的元素赋值了C语言版本的函数x264_predict_4x4_v_c(),x264_predict_4x4_h_c(),x264_predict_4x4_dc_c(),x264_predict_4x4_p_c()等一系列函数(Intra4x4有9种,后面那几种是怎么回事?);然后会判断系统平台的特性,如果平台支持的话,会调用x264_predict_4x4_init_mmx(),x264_predict_4x4_init_arm()等给x264_predict_t[]中的元素赋值经过汇编优化的函数。作为例子,下文看一个Intra4x4的Vertical帧内预测模式的C语言函数。

相关知识简述

Intra4x4的帧内预测模式一共有9种。如下图所示。

x264_predict_4x4_v_c()

x264_predict_4x4_v_c()实现了Intra4x4的Vertical帧内预测方式。该函数的定义位于common\predict.c,如下所示。- void x264_predict_4x4_v_c( pixel *src )

- {

- /*

- * Vertical预测方式

- * |X1 X2 X3 X4

- * --+-----------

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- * |X1 X2 X3 X4

- *

- */

-

- /*

- * 宏展开后的结果如下所示

- * 注:重建宏块缓存fdec_buf一行的数据量为32Byte

- *

- * (((x264_union32_t*)(&src[(0)+(0)*32]))->i) =

- * (((x264_union32_t*)(&src[(0)+(1)*32]))->i) =

- * (((x264_union32_t*)(&src[(0)+(2)*32]))->i) =

- * (((x264_union32_t*)(&src[(0)+(3)*32]))->i) = (((x264_union32_t*)(&src[(0)+(-1)*32]))->i);

- */

- PREDICT_4x4_DC(SRC_X4(0,-1));

- }

x264_predict_4x4_v_c()函数的函数体极其简单,只有一个宏定义“PREDICT_4x4_DC(SRC_X4(0,-1));”。如果把该宏展开后,可以看出它取了4x4块上面一行4个像素的值,然后分别赋值给4x4块的4行像素。

x264_pixel_init()

x264_pixel_init()初始化像素值计算相关的汇编函数(包括SAD、SATD、SSD等)。该函数的定义位于common\pixel.c,如下所示。- /****************************************************************************

- * x264_pixel_init:

- ****************************************************************************/

- //SAD等和像素计算有关的函数

- void x264_pixel_init( int cpu, x264_pixel_function_t *pixf )

- {

- memset( pixf, 0, sizeof(*pixf) );

-

- //初始化2个函数-16x16,16x8

- #define INIT2_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_16x16] = x264_pixel_##name2##_16x16##cpu;\

- pixf->name1[PIXEL_16x8] = x264_pixel_##name2##_16x8##cpu;

- //初始化4个函数-(16x16,16x8),8x16,8x8

- #define INIT4_NAME( name1, name2, cpu ) \

- INIT2_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_8x16] = x264_pixel_##name2##_8x16##cpu;\

- pixf->name1[PIXEL_8x8] = x264_pixel_##name2##_8x8##cpu;

- //初始化5个函数-(16x16,16x8,8x16,8x8),8x4

- #define INIT5_NAME( name1, name2, cpu ) \

- INIT4_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_8x4] = x264_pixel_##name2##_8x4##cpu;

- //初始化6个函数-(16x16,16x8,8x16,8x8,8x4),4x8

- #define INIT6_NAME( name1, name2, cpu ) \

- INIT5_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_4x8] = x264_pixel_##name2##_4x8##cpu;

- //初始化7个函数-(16x16,16x8,8x16,8x8,8x4,4x8),4x4

- #define INIT7_NAME( name1, name2, cpu ) \

- INIT6_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_4x4] = x264_pixel_##name2##_4x4##cpu;

- #define INIT8_NAME( name1, name2, cpu ) \

- INIT7_NAME( name1, name2, cpu ) \

- pixf->name1[PIXEL_4x16] = x264_pixel_##name2##_4x16##cpu;

-

- //重新起个名字

- #define INIT2( name, cpu ) INIT2_NAME( name, name, cpu )

- #define INIT4( name, cpu ) INIT4_NAME( name, name, cpu )

- #define INIT5( name, cpu ) INIT5_NAME( name, name, cpu )

- #define INIT6( name, cpu ) INIT6_NAME( name, name, cpu )

- #define INIT7( name, cpu ) INIT7_NAME( name, name, cpu )

- #define INIT8( name, cpu ) INIT8_NAME( name, name, cpu )

-

- #define INIT_ADS( cpu ) \

- pixf->ads[PIXEL_16x16] = x264_pixel_ads4##cpu;\

- pixf->ads[PIXEL_16x8] = x264_pixel_ads2##cpu;\

- pixf->ads[PIXEL_8x8] = x264_pixel_ads1##cpu;

- //8个sad函数

- INIT8( sad, );

- INIT8_NAME( sad_aligned, sad, );

- //7个sad函数-一次性计算3次

- INIT7( sad_x3, );

- //7个sad函数-一次性计算4次

- INIT7( sad_x4, );

- //8个ssd函数

- //ssd可以用来计算PSNR

- INIT8( ssd, );

- //8个satd函数

- //satd计算的是经过Hadamard变换后的值

- INIT8( satd, );

- //8个satd函数-一次性计算3次

- INIT7( satd_x3, );

- //8个satd函数-一次性计算4次

- INIT7( satd_x4, );

- INIT4( hadamard_ac, );

- INIT_ADS( );

-

- pixf->sa8d[PIXEL_16x16] = x264_pixel_sa8d_16x16;

- pixf->sa8d[PIXEL_8x8] = x264_pixel_sa8d_8x8;

- pixf->var[PIXEL_16x16] = x264_pixel_var_16x16;

- pixf->var[PIXEL_8x16] = x264_pixel_var_8x16;

- pixf->var[PIXEL_8x8] = x264_pixel_var_8x8;

- pixf->var2[PIXEL_8x16] = x264_pixel_var2_8x16;

- pixf->var2[PIXEL_8x8] = x264_pixel_var2_8x8;

- //计算UV的

- pixf->ssd_nv12_core = pixel_ssd_nv12_core;

- //计算SSIM

- pixf->ssim_4x4x2_core = ssim_4x4x2_core;

- pixf->ssim_end4 = ssim_end4;

- pixf->vsad = pixel_vsad;

- pixf->asd8 = pixel_asd8;

-

- pixf->intra_sad_x3_4x4 = x264_intra_sad_x3_4x4;

- pixf->intra_satd_x3_4x4 = x264_intra_satd_x3_4x4;

- pixf->intra_sad_x3_8x8 = x264_intra_sad_x3_8x8;

- pixf->intra_sa8d_x3_8x8 = x264_intra_sa8d_x3_8x8;

- pixf->intra_sad_x3_8x8c = x264_intra_sad_x3_8x8c;

- pixf->intra_satd_x3_8x8c = x264_intra_satd_x3_8x8c;

- pixf->intra_sad_x3_8x16c = x264_intra_sad_x3_8x16c;

- pixf->intra_satd_x3_8x16c = x264_intra_satd_x3_8x16c;

- pixf->intra_sad_x3_16x16 = x264_intra_sad_x3_16x16;

- pixf->intra_satd_x3_16x16 = x264_intra_satd_x3_16x16;

-

- //后面的初始化基本上都是汇编优化过的函数

-

- #if HIGH_BIT_DEPTH

- #if HAVE_MMX

- if( cpu&X264_CPU_MMX2 )

- {

- INIT7( sad, _mmx2 );

- INIT7_NAME( sad_aligned, sad, _mmx2 );

- INIT7( sad_x3, _mmx2 );

- INIT7( sad_x4, _mmx2 );

- INIT8( satd, _mmx2 );

- INIT7( satd_x3, _mmx2 );

- INIT7( satd_x4, _mmx2 );

- INIT4( hadamard_ac, _mmx2 );

- INIT8( ssd, _mmx2 );

- INIT_ADS( _mmx2 );

-

- pixf->ssd_nv12_core = x264_pixel_ssd_nv12_core_mmx2;

- pixf->var[PIXEL_16x16] = x264_pixel_var_16x16_mmx2;

- pixf->var[PIXEL_8x8] = x264_pixel_var_8x8_mmx2;

- #if ARCH_X86

- pixf->var2[PIXEL_8x8] = x264_pixel_var2_8x8_mmx2;

- pixf->var2[PIXEL_8x16] = x264_pixel_var2_8x16_mmx2;

- #endif

-

- pixf->intra_sad_x3_4x4 = x264_intra_sad_x3_4x4_mmx2;

- pixf->intra_satd_x3_4x4 = x264_intra_satd_x3_4x4_mmx2;

- pixf->intra_sad_x3_8x8 = x264_intra_sad_x3_8x8_mmx2;

- pixf->intra_sad_x3_8x8c = x264_intra_sad_x3_8x8c_mmx2;

- pixf->intra_satd_x3_8x8c = x264_intra_satd_x3_8x8c_mmx2;

- pixf->intra_sad_x3_8x16c = x264_intra_sad_x3_8x16c_mmx2;

- pixf->intra_satd_x3_8x16c = x264_intra_satd_x3_8x16c_mmx2;

- pixf->intra_sad_x3_16x16 = x264_intra_sad_x3_16x16_mmx2;

- pixf->intra_satd_x3_16x16 = x264_intra_satd_x3_16x16_mmx2;

- }

- if( cpu&X264_CPU_SSE2 )

- {

- INIT4_NAME( sad_aligned, sad, _sse2_aligned );

- INIT5( ssd, _sse2 );

- INIT6( satd, _sse2 );

- pixf->satd[PIXEL_4x16] = x264_pixel_satd_4x16_sse2;

-

- pixf->sa8d[PIXEL_16x16] = x264_pixel_sa8d_16x16_sse2;

- pixf->sa8d[PIXEL_8x8] = x264_pixel_sa8d_8x8_sse2;

- #if ARCH_X86_64

- pixf->intra_sa8d_x3_8x8 = x264_intra_sa8d_x3_8x8_sse2;

- pixf->sa8d_satd[PIXEL_16x16] = x264_pixel_sa8d_satd_16x16_sse2;

- #endif

- pixf->intra_sad_x3_4x4 = x264_intra_sad_x3_4x4_sse2;

- pixf->ssd_nv12_core = x264_pixel_ssd_nv12_core_sse2;

- pixf->ssim_4x4x2_core = x264_pixel_ssim_4x4x2_core_sse2;

- pixf->ssim_end4 = x264_pixel_ssim_end4_sse2;

- pixf->var[PIXEL_16x16] = x264_pixel_var_16x16_sse2;

- pixf->var[PIXEL_8x8] = x264_pixel_var_8x8_sse2;

- pixf->var2[PIXEL_8x8] = x264_pixel_var2_8x8_sse2;

- pixf->var2[PIXEL_8x16] = x264_pixel_var2_8x16_sse2;

- pixf->intra_sad_x3_8x8 = x264_intra_sad_x3_8x8_sse2;

- }

- //此处省略大量的X86、ARM等平台的汇编函数初始化代码

- }

x264_pixel_init()的源代码非常的长,主要原因在于它把C语言版本的函数以及各种平台的汇编函数都写到一块了(不知道现在最新的版本是不是还是这样)。x264_pixel_init()包含了大量和像素计算有关的函数,包括SAD、SATD、SSD、SSIM等等。它的输入参数x264_pixel_function_t是一个结构体,其中包含了各种像素计算的函数接口。x264_pixel_function_t的定义如下所示。

- typedef struct

- {

- x264_pixel_cmp_t sad[8];

- x264_pixel_cmp_t ssd[8];

- x264_pixel_cmp_t satd[8];

- x264_pixel_cmp_t ssim[7];

- x264_pixel_cmp_t sa8d[4];

- x264_pixel_cmp_t mbcmp[8]; /* either satd or sad for subpel refine and mode decision */

- x264_pixel_cmp_t mbcmp_unaligned[8]; /* unaligned mbcmp for subpel */

- x264_pixel_cmp_t fpelcmp[8]; /* either satd or sad for fullpel motion search */

- x264_pixel_cmp_x3_t fpelcmp_x3[7];

- x264_pixel_cmp_x4_t fpelcmp_x4[7];

- x264_pixel_cmp_t sad_aligned[8]; /* Aligned SAD for mbcmp */

- int (*vsad)( pixel *, intptr_t, int );

- int (*asd8)( pixel *pix1, intptr_t stride1, pixel *pix2, intptr_t stride2, int height );

- uint64_t (*sa8d_satd[1])( pixel *pix1, intptr_t stride1, pixel *pix2, intptr_t stride2 );

-

- uint64_t (*var[4])( pixel *pix, intptr_t stride );

- int (*var2[4])( pixel *pix1, intptr_t stride1,

- pixel *pix2, intptr_t stride2, int *ssd );

- uint64_t (*hadamard_ac[4])( pixel *pix, intptr_t stride );

-

- void (*ssd_nv12_core)( pixel *pixuv1, intptr_t stride1,

- pixel *pixuv2, intptr_t stride2, int width, int height,

- uint64_t *ssd_u, uint64_t *ssd_v );

- void (*ssim_4x4x2_core)( const pixel *pix1, intptr_t stride1,

- const pixel *pix2, intptr_t stride2, int sums[2][4] );

- float (*ssim_end4)( int sum0[5][4], int sum1[5][4], int width );

-

- /* multiple parallel calls to cmp. */

- x264_pixel_cmp_x3_t sad_x3[7];

- x264_pixel_cmp_x4_t sad_x4[7];

- x264_pixel_cmp_x3_t satd_x3[7];

- x264_pixel_cmp_x4_t satd_x4[7];

-

- /* abs-diff-sum for successive elimination.

- * may round width up to a multiple of 16. */

- int (*ads[7])( int enc_dc[4], uint16_t *sums, int delta,

- uint16_t *cost_mvx, int16_t *mvs, int width, int thresh );

-

- /* calculate satd or sad of V, H, and DC modes. */

- void (*intra_mbcmp_x3_16x16)( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_satd_x3_16x16) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_sad_x3_16x16) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_mbcmp_x3_4x4) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_satd_x3_4x4) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_sad_x3_4x4) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_mbcmp_x3_chroma)( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_satd_x3_chroma) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_sad_x3_chroma) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_mbcmp_x3_8x16c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_satd_x3_8x16c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_sad_x3_8x16c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_mbcmp_x3_8x8c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_satd_x3_8x8c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_sad_x3_8x8c) ( pixel *fenc, pixel *fdec, int res[3] );

- void (*intra_mbcmp_x3_8x8) ( pixel *fenc, pixel edge[36], int res[3] );

- void (*intra_sa8d_x3_8x8) ( pixel *fenc, pixel edge[36], int res[3] );

- void (*intra_sad_x3_8x8) ( pixel *fenc, pixel edge[36], int res[3] );

- /* find minimum satd or sad of all modes, and set fdec.

- * may be NULL, in which case just use pred+satd instead. */

- int (*intra_mbcmp_x9_4x4)( pixel *fenc, pixel *fdec, uint16_t *bitcosts );

- int (*intra_satd_x9_4x4) ( pixel *fenc, pixel *fdec, uint16_t *bitcosts );

- int (*intra_sad_x9_4x4) ( pixel *fenc, pixel *fdec, uint16_t *bitcosts );

- int (*intra_mbcmp_x9_8x8)( pixel *fenc, pixel *fdec, pixel edge[36], uint16_t *bitcosts, uint16_t *satds );

- int (*intra_sa8d_x9_8x8) ( pixel *fenc, pixel *fdec, pixel edge[36], uint16_t *bitcosts, uint16_t *satds );

- int (*intra_sad_x9_8x8) ( pixel *fenc, pixel *fdec, pixel edge[36], uint16_t *bitcosts, uint16_t *satds );

- } x264_pixel_function_t;

- pixf->sad[PIXEL_16x16] = x264_pixel_sad_16x16;

- pixf->sad[PIXEL_16x8] = x264_pixel_sad_16x8;

- pixf->sad[PIXEL_8x16] = x264_pixel_sad_8x16;

- pixf->sad[PIXEL_8x8] = x264_pixel_sad_8x8;

- pixf->sad[PIXEL_8x4] = x264_pixel_sad_8x4;

- pixf->sad[PIXEL_4x8] = x264_pixel_sad_4x8;

- pixf->sad[PIXEL_4x4] = x264_pixel_sad_4x4;

- pixf->sad[PIXEL_4x16] = x264_pixel_sad_4x16;

- pixf->ssd[PIXEL_16x16] = x264_pixel_ssd_16x16;

- pixf->ssd[PIXEL_16x8] = x264_pixel_ssd_16x8;

- pixf->ssd[PIXEL_8x16] = x264_pixel_ssd_8x16;

- pixf->ssd[PIXEL_8x8] = x264_pixel_ssd_8x8;

- pixf->ssd[PIXEL_8x4] = x264_pixel_ssd_8x4;

- pixf->ssd[PIXEL_4x8] = x264_pixel_ssd_4x8;

- pixf->ssd[PIXEL_4x4] = x264_pixel_ssd_4x4;

- pixf->ssd[PIXEL_4x16] = x264_pixel_ssd_4x16;

- pixf->satd[PIXEL_16x16] = x264_pixel_satd_16x16;

- pixf->satd[PIXEL_16x8] = x264_pixel_satd_16x8;

- pixf->satd[PIXEL_8x16] = x264_pixel_satd_8x16;

- pixf->satd[PIXEL_8x8] = x264_pixel_satd_8x8;

- pixf->satd[PIXEL_8x4] = x264_pixel_satd_8x4;

- pixf->satd[PIXEL_4x8] = x264_pixel_satd_4x8;

- pixf->satd[PIXEL_4x4] = x264_pixel_satd_4x4;

- pixf->satd[PIXEL_4x16] = x264_pixel_satd_4x16;

相关知识简述

简单记录几个像素计算中的概念。SAD和SATD主要用于帧内预测模式以及帧间预测模式的判断。有关SAD、SATD、SSD的定义如下:SAD(Sum of Absolute Difference)也可以称为SAE(Sum of Absolute Error),即绝对误差和。它的计算方法就是求出两个像素块对应像素点的差值,将这些差值分别求绝对值之后再进行累加。

SATD(Sum of Absolute Transformed Difference)即Hadamard变换后再绝对值求和。它和SAD的区别在于多了一个“变换”。

SSD(Sum of Squared Difference)也可以称为SSE(Sum of Squared Error),即差值的平方和。它和SAD的区别在于多了一个“平方”。

H.264中使用SAD和SATD进行宏块预测模式的判断。早期的编码器使用SAD进行计算,近期的编码器多使用SATD进行计算。为什么使用SATD而不使用SAD呢?关键原因在于编码之后码流的大小是和图像块DCT变换后频域信息紧密相关的,而和变换前的时域信息关联性小一些。SAD只能反应时域信息;SATD却可以反映频域信息,而且计算复杂度也低于DCT变换,因此是比较合适的模式选择的依据。

使用SAD进行模式选择的示例如下所示。下面这张图代表了一个普通的Intra16x16的宏块的像素。它的下方包含了使用Vertical,Horizontal,DC和Plane四种帧内预测模式预测的像素。通过计算可以得到这几种预测像素和原始像素之间的SAD(SAE)分别为3985,5097,4991,2539。由于Plane模式的SAD取值最小,由此可以断定Plane模式对于这个宏块来说是最好的帧内预测模式。

x264_pixel_sad_4x4()

x264_pixel_sad_4x4()用于计算4x4块的SAD。该函数的定义位于common\pixel.c,如下所示。- static int x264_pixel_sad_4x4( pixel *pix1, intptr_t i_stride_pix1,

- pixel *pix2, intptr_t i_stride_pix2 )

- {

- int i_sum = 0;

- for( int y = 0; y < 4; y++ ) //4个像素

- {

- for( int x = 0; x < 4; x++ ) //4个像素

- {

- i_sum += abs( pix1[x] - pix2[x] );//相减之后求绝对值,然后累加

- }

- pix1 += i_stride_pix1;

- pix2 += i_stride_pix2;

- }

- return i_sum;

- }

x264_pixel_sad_x4_4x4()

x264_pixel_sad_4x4()用于计算4个4x4块的SAD。该函数的定义位于common\pixel.c,如下所示。- static void x264_pixel_sad_x4_4x4( pixel *fenc, pixel *pix0, pixel *pix1,pixel *pix2, pixel *pix3,

- intptr_t i_stride, int scores[4] )

- {

- scores[0] = x264_pixel_sad_4x4( fenc, 16, pix0, i_stride );

- scores[1] = x264_pixel_sad_4x4( fenc, 16, pix1, i_stride );

- scores[2] = x264_pixel_sad_4x4( fenc, 16, pix2, i_stride );

- scores[3] = x264_pixel_sad_4x4( fenc, 16, pix3, i_stride );

- }

x264_pixel_ssd_4x4()

x264_pixel_ssd_4x4()用于计算4x4块的SSD。该函数的定义位于common\pixel.c,如下所示。- static int x264_pixel_ssd_4x4( pixel *pix1, intptr_t i_stride_pix1,

- pixel *pix2, intptr_t i_stride_pix2 )

- {

- int i_sum = 0;

- for( int y = 0; y < 4; y++ ) //4个像素

- {

- for( int x = 0; x < 4; x++ ) //4个像素

- {

- int d = pix1[x] - pix2[x]; //相减

- i_sum += d*d; //平方之后,累加

- }

- pix1 += i_stride_pix1;

- pix2 += i_stride_pix2;

- }

- return i_sum;

- }

x264_pixel_satd_4x4()

x264_pixel_satd_4x4()用于计算4x4块的SATD。该函数的定义位于common\pixel.c,如下所示。- //SAD(Sum of Absolute Difference)=SAE(Sum of Absolute Error)即绝对误差和

- //SATD(Sum of Absolute Transformed Difference)即hadamard变换后再绝对值求和

- //

- //为什么帧内模式选择要用SATD?

- //SAD即绝对误差和,仅反映残差时域差异,影响PSNR值,不能有效反映码流的大小。

- //SATD即将残差经哈德曼变换的4x4块的预测残差绝对值总和,可以将其看作简单的时频变换,其值在一定程度上可以反映生成码流的大小。

- //4x4的SATD

- static NOINLINE int x264_pixel_satd_4x4( pixel *pix1, intptr_t i_pix1, pixel *pix2, intptr_t i_pix2 )

- {

- sum2_t tmp[4][2];

- sum2_t a0, a1, a2, a3, b0, b1;

- sum2_t sum = 0;

-

- for( int i = 0; i < 4; i++, pix1 += i_pix1, pix2 += i_pix2 )

- {

- a0 = pix1[0] - pix2[0];

- a1 = pix1[1] - pix2[1];

- b0 = (a0+a1) + ((a0-a1)<<BITS_PER_SUM);

- a2 = pix1[2] - pix2[2];

- a3 = pix1[3] - pix2[3];

- b1 = (a2+a3) + ((a2-a3)<<BITS_PER_SUM);

- tmp[i][0] = b0 + b1;

- tmp[i][1] = b0 - b1;

- }

- for( int i = 0; i < 2; i++ )

- {

- HADAMARD4( a0, a1, a2, a3, tmp[0][i], tmp[1][i], tmp[2][i], tmp[3][i] );

- a0 = abs2(a0) + abs2(a1) + abs2(a2) + abs2(a3);

- sum += ((sum_t)a0) + (a0>>BITS_PER_SUM);

- }

- return sum >> 1;

- }

x264_dct_init()

x264_dct_init()用于初始化DCT变换和DCT反变换相关的汇编函数。该函数的定义位于common\dct.c,如下所示。- /****************************************************************************

- * x264_dct_init:

- ****************************************************************************/

- void x264_dct_init( int cpu, x264_dct_function_t *dctf )

- {

- //C语言版本

- //4x4DCT变换

- dctf->sub4x4_dct = sub4x4_dct;

- dctf->add4x4_idct = add4x4_idct;

- //8x8块:分解成4个4x4DCT变换,调用4次sub4x4_dct()

- dctf->sub8x8_dct = sub8x8_dct;

- dctf->sub8x8_dct_dc = sub8x8_dct_dc;

- dctf->add8x8_idct = add8x8_idct;

- dctf->add8x8_idct_dc = add8x8_idct_dc;

-

- dctf->sub8x16_dct_dc = sub8x16_dct_dc;

- //16x16块:分解成4个8x8块,调用4次sub8x8_dct()

- //实际上每个sub8x8_dct()又分解成4个4x4DCT变换,调用4次sub4x4_dct()

- dctf->sub16x16_dct = sub16x16_dct;

- dctf->add16x16_idct = add16x16_idct;

- dctf->add16x16_idct_dc = add16x16_idct_dc;

- //8x8DCT,注意:后缀是_dct8

- dctf->sub8x8_dct8 = sub8x8_dct8;

- dctf->add8x8_idct8 = add8x8_idct8;

-

- dctf->sub16x16_dct8 = sub16x16_dct8;

- dctf->add16x16_idct8 = add16x16_idct8;

- //Hadamard变换

- dctf->dct4x4dc = dct4x4dc;

- dctf->idct4x4dc = idct4x4dc;

-

- dctf->dct2x4dc = dct2x4dc;

-

- #if HIGH_BIT_DEPTH

- #if HAVE_MMX

- if( cpu&X264_CPU_MMX )

- {

- dctf->sub4x4_dct = x264_sub4x4_dct_mmx;

- dctf->sub8x8_dct = x264_sub8x8_dct_mmx;

- dctf->sub16x16_dct = x264_sub16x16_dct_mmx;

- }

- if( cpu&X264_CPU_SSE2 )

- {

- dctf->add4x4_idct = x264_add4x4_idct_sse2;

- dctf->dct4x4dc = x264_dct4x4dc_sse2;

- dctf->idct4x4dc = x264_idct4x4dc_sse2;

- dctf->sub8x8_dct8 = x264_sub8x8_dct8_sse2;

- dctf->sub16x16_dct8 = x264_sub16x16_dct8_sse2;

- dctf->add8x8_idct = x264_add8x8_idct_sse2;

- dctf->add16x16_idct = x264_add16x16_idct_sse2;

- dctf->add8x8_idct8 = x264_add8x8_idct8_sse2;

- dctf->add16x16_idct8 = x264_add16x16_idct8_sse2;

- dctf->sub8x8_dct_dc = x264_sub8x8_dct_dc_sse2;

- dctf->add8x8_idct_dc = x264_add8x8_idct_dc_sse2;

- dctf->sub8x16_dct_dc = x264_sub8x16_dct_dc_sse2;

- dctf->add16x16_idct_dc= x264_add16x16_idct_dc_sse2;

- }

- if( cpu&X264_CPU_SSE4 )

- {

- dctf->sub8x8_dct8 = x264_sub8x8_dct8_sse4;

- dctf->sub16x16_dct8 = x264_sub16x16_dct8_sse4;

- }

- if( cpu&X264_CPU_AVX )

- {

- dctf->add4x4_idct = x264_add4x4_idct_avx;

- dctf->dct4x4dc = x264_dct4x4dc_avx;

- dctf->idct4x4dc = x264_idct4x4dc_avx;

- dctf->sub8x8_dct8 = x264_sub8x8_dct8_avx;

- dctf->sub16x16_dct8 = x264_sub16x16_dct8_avx;

- dctf->add8x8_idct = x264_add8x8_idct_avx;

- dctf->add16x16_idct = x264_add16x16_idct_avx;

- dctf->add8x8_idct8 = x264_add8x8_idct8_avx;

- dctf->add16x16_idct8 = x264_add16x16_idct8_avx;

- dctf->add8x8_idct_dc = x264_add8x8_idct_dc_avx;

- dctf->sub8x16_dct_dc = x264_sub8x16_dct_dc_avx;

- dctf->add16x16_idct_dc= x264_add16x16_idct_dc_avx;

- }

- #endif // HAVE_MMX

- #else // !HIGH_BIT_DEPTH

- //MMX版本

- #if HAVE_MMX

- if( cpu&X264_CPU_MMX )

- {

- dctf->sub4x4_dct = x264_sub4x4_dct_mmx;

- dctf->add4x4_idct = x264_add4x4_idct_mmx;

- dctf->idct4x4dc = x264_idct4x4dc_mmx;

- dctf->sub8x8_dct_dc = x264_sub8x8_dct_dc_mmx2;

- //此处省略大量的X86、ARM等平台的汇编函数初始化代码

- }

从源代码可以看出,x264_dct_init()初始化了一系列的DCT变换的函数,这些DCT函数名称有如下规律:

(1)DCT函数名称前面有“sub”,代表对两块像素相减得到残差之后,再进行DCT变换。

(2)DCT反变换函数名称前面有“add”,代表将DCT反变换之后的残差数据叠加到预测数据上。

(3)以“dct8”为结尾的函数使用了8x8DCT,其余函数是用的都是4x4DCT。

x264_dct_init()的输入参数x264_dct_function_t是一个结构体,其中包含了各种DCT函数的接口。x264_dct_function_t的定义如下所示。

- typedef struct

- {

- // pix1 stride = FENC_STRIDE

- // pix2 stride = FDEC_STRIDE

- // p_dst stride = FDEC_STRIDE

- void (*sub4x4_dct) ( dctcoef dct[16], pixel *pix1, pixel *pix2 );

- void (*add4x4_idct) ( pixel *p_dst, dctcoef dct[16] );

-

- void (*sub8x8_dct) ( dctcoef dct[4][16], pixel *pix1, pixel *pix2 );

- void (*sub8x8_dct_dc)( dctcoef dct[4], pixel *pix1, pixel *pix2 );

- void (*add8x8_idct) ( pixel *p_dst, dctcoef dct[4][16] );

- void (*add8x8_idct_dc) ( pixel *p_dst, dctcoef dct[4] );

-

- void (*sub8x16_dct_dc)( dctcoef dct[8], pixel *pix1, pixel *pix2 );

-

- void (*sub16x16_dct) ( dctcoef dct[16][16], pixel *pix1, pixel *pix2 );

- void (*add16x16_idct)( pixel *p_dst, dctcoef dct[16][16] );

- void (*add16x16_idct_dc) ( pixel *p_dst, dctcoef dct[16] );

-

- void (*sub8x8_dct8) ( dctcoef dct[64], pixel *pix1, pixel *pix2 );

- void (*add8x8_idct8) ( pixel *p_dst, dctcoef dct[64] );

-

- void (*sub16x16_dct8) ( dctcoef dct[4][64], pixel *pix1, pixel *pix2 );

- void (*add16x16_idct8)( pixel *p_dst, dctcoef dct[4][64] );

-

- void (*dct4x4dc) ( dctcoef d[16] );

- void (*idct4x4dc)( dctcoef d[16] );

-

- void (*dct2x4dc)( dctcoef dct[8], dctcoef dct4x4[8][16] );

-

- } x264_dct_function_t;

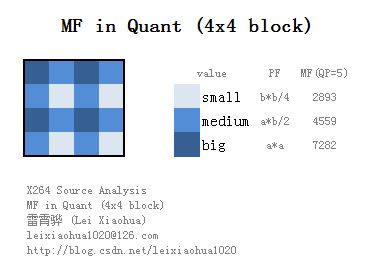

相关知识简述

简单记录一下DCT相关的知识。DCT变换的核心理念就是把图像的低频信息(对应大面积平坦区域)变换到系数矩阵的左上角,而把高频信息变换到系数矩阵的右下角,这样就可以在压缩的时候(量化)去除掉人眼不敏感的高频信息(位于矩阵右下角的系数)从而达到压缩数据的目的。二维8x8DCT变换常见的示意图如下所示。

4x4整数DCT变换的公式如下所示。

sub4x4_dct()

sub4x4_dct()可以将两块4x4的图像相减求残差后,进行DCT变换。该函数的定义位于common\dct.c,如下所示。- /*

- * 求残差用

- * 注意求的是一个“方块”形像素

- *

- * 参数的含义如下:

- * diff:输出的残差数据

- * i_size:方块的大小

- * pix1:输入数据1

- * i_pix1:输入数据1一行像素大小(stride)

- * pix2:输入数据2

- * i_pix2:输入数据2一行像素大小(stride)

- *

- */

- static inline void pixel_sub_wxh( dctcoef *diff, int i_size,

- pixel *pix1, int i_pix1, pixel *pix2, int i_pix2 )

- {

- for( int y = 0; y < i_size; y++ )

- {

- for( int x = 0; x < i_size; x++ )

- diff[x + y*i_size] = pix1[x] - pix2[x];//求残差

- pix1 += i_pix1;//前进到下一行

- pix2 += i_pix2;

- }

- }

- //4x4DCT变换

- //注意首先获取pix1和pix2两块数据的残差,然后再进行变换

- //返回dct[16]

- static void sub4x4_dct( dctcoef dct[16], pixel *pix1, pixel *pix2 )

- {

- dctcoef d[16];

- dctcoef tmp[16];

- //获取残差数据,存入d[16]

- //pix1一般为编码帧(enc)

- //pix2一般为重建帧(dec)

- pixel_sub_wxh( d, 4, pix1, FENC_STRIDE, pix2, FDEC_STRIDE );

-

- //处理残差d[16]

- //蝶形算法:横向4个像素

- for( int i = 0; i < 4; i++ )

- {

- int s03 = d[i*4+0] + d[i*4+3];

- int s12 = d[i*4+1] + d[i*4+2];

- int d03 = d[i*4+0] - d[i*4+3];

- int d12 = d[i*4+1] - d[i*4+2];

-

- tmp[0*4+i] = s03 + s12;

- tmp[1*4+i] = 2*d03 + d12;

- tmp[2*4+i] = s03 - s12;

- tmp[3*4+i] = d03 - 2*d12;

- }

- //蝶形算法:纵向

- for( int i = 0; i < 4; i++ )

- {

- int s03 = tmp[i*4+0] + tmp[i*4+3];

- int s12 = tmp[i*4+1] + tmp[i*4+2];

- int d03 = tmp[i*4+0] - tmp[i*4+3];

- int d12 = tmp[i*4+1] - tmp[i*4+2];

-

- dct[i*4+0] = s03 + s12;

- dct[i*4+1] = 2*d03 + d12;

- dct[i*4+2] = s03 - s12;

- dct[i*4+3] = d03 - 2*d12;

- }

- }

add4x4_idct()

add4x4_idct()可以将残差数据进行DCT反变换,并将变换后得到的残差像素数据叠加到预测数据上。该函数的定义位于common\dct.c,如下所示。- //4x4DCT反变换(“add”代表叠加到已有的像素上)

- static void add4x4_idct( pixel *p_dst, dctcoef dct[16] )

- {

- dctcoef d[16];

- dctcoef tmp[16];

-

- for( int i = 0; i < 4; i++ )

- {

- int s02 = dct[0*4+i] + dct[2*4+i];

- int d02 = dct[0*4+i] - dct[2*4+i];

- int s13 = dct[1*4+i] + (dct[3*4+i]>>1);

- int d13 = (dct[1*4+i]>>1) - dct[3*4+i];

-

- tmp[i*4+0] = s02 + s13;

- tmp[i*4+1] = d02 + d13;

- tmp[i*4+2] = d02 - d13;

- tmp[i*4+3] = s02 - s13;

- }

-

- for( int i = 0; i < 4; i++ )

- {

- int s02 = tmp[0*4+i] + tmp[2*4+i];

- int d02 = tmp[0*4+i] - tmp[2*4+i];

- int s13 = tmp[1*4+i] + (tmp[3*4+i]>>1);

- int d13 = (tmp[1*4+i]>>1) - tmp[3*4+i];

-

- d[0*4+i] = ( s02 + s13 + 32 ) >> 6;

- d[1*4+i] = ( d02 + d13 + 32 ) >> 6;

- d[2*4+i] = ( d02 - d13 + 32 ) >> 6;

- d[3*4+i] = ( s02 - s13 + 32 ) >> 6;

- }

-

-

- for( int y = 0; y < 4; y++ )

- {

- for( int x = 0; x < 4; x++ )

- p_dst[x] = x264_clip_pixel( p_dst[x] + d[y*4+x] );

- p_dst += FDEC_STRIDE;

- }

- }

sub8x8_dct()

sub8x8_dct()可以将两块8x8的图像相减求残差后,进行4x4DCT变换。该函数的定义位于common\dct.c,如下所示。- //8x8块:分解成4个4x4DCT变换,调用4次sub4x4_dct()

- //返回dct[4][16]

- static void sub8x8_dct( dctcoef dct[4][16], pixel *pix1, pixel *pix2 )

- {

- /*

- * 8x8 宏块被划分为4个4x4子块

- *

- * +---+---+

- * | 0 | 1 |

- * +---+---+

- * | 2 | 3 |

- * +---+---+

- *

- */

- sub4x4_dct( dct[0], &pix1[0], &pix2[0] );

- sub4x4_dct( dct[1], &pix1[4], &pix2[4] );

- sub4x4_dct( dct[2], &pix1[4*FENC_STRIDE+0], &pix2[4*FDEC_STRIDE+0] );

- sub4x4_dct( dct[3], &pix1[4*FENC_STRIDE+4], &pix2[4*FDEC_STRIDE+4] );

- }

sub16x16_dct()

sub16x16_dct()可以将两块16x16的图像相减求残差后,进行4x4DCT变换。该函数的定义位于common\dct.c,如下所示。- //16x16块:分解成4个8x8的块做DCT变换,调用4次sub8x8_dct()

- //返回dct[16][16]

- static void sub16x16_dct( dctcoef dct[16][16], pixel *pix1, pixel *pix2 )

- {

- /*

- * 16x16 宏块被划分为4个8x8子块

- *

- * +--------+--------+

- * | | |

- * | 0 | 1 |

- * | | |

- * +--------+--------+

- * | | |

- * | 2 | 3 |

- * | | |

- * +--------+--------+

- *

- */

- sub8x8_dct( &dct[ 0], &pix1[0], &pix2[0] ); //0

- sub8x8_dct( &dct[ 4], &pix1[8], &pix2[8] ); //1

- sub8x8_dct( &dct[ 8], &pix1[8*FENC_STRIDE+0], &pix2[8*FDEC_STRIDE+0] ); //2

- sub8x8_dct( &dct[12], &pix1[8*FENC_STRIDE+8], &pix2[8*FDEC_STRIDE+8] ); //3

- }

dct4x4dc()

dct4x4dc()可以将输入的4x4图像块进行Hadamard变换。该函数的定义位于common\dct.c,如下所示。- //Hadamard变换

- static void dct4x4dc( dctcoef d[16] )

- {

- dctcoef tmp[16];

-

- //蝶形算法:横向的4个像素

- for( int i = 0; i < 4; i++ )

- {

-

- int s01 = d[i*4+0] + d[i*4+1];

- int d01 = d[i*4+0] - d[i*4+1];

- int s23 = d[i*4+2] + d[i*4+3];

- int d23 = d[i*4+2] - d[i*4+3];

-

- tmp[0*4+i] = s01 + s23;

- tmp[1*4+i] = s01 - s23;

- tmp[2*4+i] = d01 - d23;

- tmp[3*4+i] = d01 + d23;

- }

- //蝶形算法:纵向

- for( int i = 0; i < 4; i++ )

- {

- int s01 = tmp[i*4+0] + tmp[i*4+1];

- int d01 = tmp[i*4+0] - tmp[i*4+1];

- int s23 = tmp[i*4+2] + tmp[i*4+3];

- int d23 = tmp[i*4+2] - tmp[i*4+3];

-

- d[i*4+0] = ( s01 + s23 + 1 ) >> 1;

- d[i*4+1] = ( s01 - s23 + 1 ) >> 1;

- d[i*4+2] = ( d01 - d23 + 1 ) >> 1;

- d[i*4+3] = ( d01 + d23 + 1 ) >> 1;

- }

- }

x264_mc_init()

x264_mc_init()用于初始化运动补偿相关的汇编函数。该函数的定义位于common\mc.c,如下所示。- //运动补偿

- void x264_mc_init( int cpu, x264_mc_functions_t *pf, int cpu_independent )

- {

- //亮度运动补偿

- pf->mc_luma = mc_luma;

- //获得匹配块

- pf->get_ref = get_ref;

-

- pf->mc_chroma = mc_chroma;

- //求平均

- pf->avg[PIXEL_16x16]= pixel_avg_16x16;

- pf->avg[PIXEL_16x8] = pixel_avg_16x8;

- pf->avg[PIXEL_8x16] = pixel_avg_8x16;

- pf->avg[PIXEL_8x8] = pixel_avg_8x8;

- pf->avg[PIXEL_8x4] = pixel_avg_8x4;

- pf->avg[PIXEL_4x16] = pixel_avg_4x16;

- pf->avg[PIXEL_4x8] = pixel_avg_4x8;

- pf->avg[PIXEL_4x4] = pixel_avg_4x4;

- pf->avg[PIXEL_4x2] = pixel_avg_4x2;

- pf->avg[PIXEL_2x8] = pixel_avg_2x8;

- pf->avg[PIXEL_2x4] = pixel_avg_2x4;

- pf->avg[PIXEL_2x2] = pixel_avg_2x2;

- //加权相关

- pf->weight = x264_mc_weight_wtab;

- pf->offsetadd = x264_mc_weight_wtab;

- pf->offsetsub = x264_mc_weight_wtab;

- pf->weight_cache = x264_weight_cache;

- //赋值-只包含了方形的

- pf->copy_16x16_unaligned = mc_copy_w16;

- pf->copy[PIXEL_16x16] = mc_copy_w16;

- pf->copy[PIXEL_8x8] = mc_copy_w8;

- pf->copy[PIXEL_4x4] = mc_copy_w4;

-

- pf->store_interleave_chroma = store_interleave_chroma;

- pf->load_deinterleave_chroma_fenc = load_deinterleave_chroma_fenc;

- pf->load_deinterleave_chroma_fdec = load_deinterleave_chroma_fdec;

- //拷贝像素-不论像素块大小

- pf->plane_copy = x264_plane_copy_c;

- pf->plane_copy_interleave = x264_plane_copy_interleave_c;

- pf->plane_copy_deinterleave = x264_plane_copy_deinterleave_c;

- pf->plane_copy_deinterleave_rgb = x264_plane_copy_deinterleave_rgb_c;

- pf->plane_copy_deinterleave_v210 = x264_plane_copy_deinterleave_v210_c;

- //关键:半像素内插

- pf->hpel_filter = hpel_filter;

- //几个空函数

- pf->prefetch_fenc_420 = prefetch_fenc_null;

- pf->prefetch_fenc_422 = prefetch_fenc_null;

- pf->prefetch_ref = prefetch_ref_null;

- pf->memcpy_aligned = memcpy;

- pf->memzero_aligned = memzero_aligned;

- //降低分辨率-线性内插(不是半像素内插)

- pf->frame_init_lowres_core = frame_init_lowres_core;

-

- pf->integral_init4h = integral_init4h;

- pf->integral_init8h = integral_init8h;

- pf->integral_init4v = integral_init4v;

- pf->integral_init8v = integral_init8v;

-

- pf->mbtree_propagate_cost = mbtree_propagate_cost;

- pf->mbtree_propagate_list = mbtree_propagate_list;

- //各种汇编版本

- #if HAVE_MMX

- x264_mc_init_mmx( cpu, pf );

- #endif

- #if HAVE_ALTIVEC

- if( cpu&X264_CPU_ALTIVEC )

- x264_mc_altivec_init( pf );

- #endif

- #if HAVE_ARMV6

- x264_mc_init_arm( cpu, pf );

- #endif

- #if ARCH_AARCH64

- x264_mc_init_aarch64( cpu, pf );

- #endif

-

- if( cpu_independent )

- {

- pf->mbtree_propagate_cost = mbtree_propagate_cost;

- pf->mbtree_propagate_list = mbtree_propagate_list;

- }

- }

从源代码可以看出,x264_mc_init()中包含了大量的像素内插、拷贝、求平均的函数。这些函数都是用于在H.264编码过程中进行运动估计和运动补偿的。x264_mc_init()的参数x264_mc_functions_t是一个结构体,其中包含了运动补偿函数相关的函数接口。x264_mc_functions_t的定义如下。

- typedef struct

- {

- void (*mc_luma)( pixel *dst, intptr_t i_dst, pixel **src, intptr_t i_src,

- int mvx, int mvy, int i_width, int i_height, const x264_weight_t *weight );

-

- /* may round up the dimensions if they're not a power of 2 */

- pixel* (*get_ref)( pixel *dst, intptr_t *i_dst, pixel **src, intptr_t i_src,

- int mvx, int mvy, int i_width, int i_height, const x264_weight_t *weight );

-

- /* mc_chroma may write up to 2 bytes of garbage to the right of dst,

- * so it must be run from left to right. */

- void (*mc_chroma)( pixel *dstu, pixel *dstv, intptr_t i_dst, pixel *src, intptr_t i_src,

- int mvx, int mvy, int i_width, int i_height );

-

- void (*avg[12])( pixel *dst, intptr_t dst_stride, pixel *src1, intptr_t src1_stride,

- pixel *src2, intptr_t src2_stride, int i_weight );

-

- /* only 16x16, 8x8, and 4x4 defined */

- void (*copy[7])( pixel *dst, intptr_t dst_stride, pixel *src, intptr_t src_stride, int i_height );

- void (*copy_16x16_unaligned)( pixel *dst, intptr_t dst_stride, pixel *src, intptr_t src_stride, int i_height );

-

- void (*store_interleave_chroma)( pixel *dst, intptr_t i_dst, pixel *srcu, pixel *srcv, int height );

- void (*load_deinterleave_chroma_fenc)( pixel *dst, pixel *src, intptr_t i_src, int height );

- void (*load_deinterleave_chroma_fdec)( pixel *dst, pixel *src, intptr_t i_src, int height );

-

- void (*plane_copy)( pixel *dst, intptr_t i_dst, pixel *src, intptr_t i_src, int w, int h );

- void (*plane_copy_interleave)( pixel *dst, intptr_t i_dst, pixel *srcu, intptr_t i_srcu,

- pixel *srcv, intptr_t i_srcv, int w, int h );

- /* may write up to 15 pixels off the end of each plane */

- void (*plane_copy_deinterleave)( pixel *dstu, intptr_t i_dstu, pixel *dstv, intptr_t i_dstv,

- pixel *src, intptr_t i_src, int w, int h );

- void (*plane_copy_deinterleave_rgb)( pixel *dsta, intptr_t i_dsta, pixel *dstb, intptr_t i_dstb,

- pixel *dstc, intptr_t i_dstc, pixel *src, intptr_t i_src, int pw, int w, int h );

- void (*plane_copy_deinterleave_v210)( pixel *dsty, intptr_t i_dsty,

- pixel *dstc, intptr_t i_dstc,

- uint32_t *src, intptr_t i_src, int w, int h );

- void (*hpel_filter)( pixel *dsth, pixel *dstv, pixel *dstc, pixel *src,

- intptr_t i_stride, int i_width, int i_height, int16_t *buf );

-

- /* prefetch the next few macroblocks of fenc or fdec */

- void (*prefetch_fenc) ( pixel *pix_y, intptr_t stride_y, pixel *pix_uv, intptr_t stride_uv, int mb_x );

- void (*prefetch_fenc_420)( pixel *pix_y, intptr_t stride_y, pixel *pix_uv, intptr_t stride_uv, int mb_x );

- void (*prefetch_fenc_422)( pixel *pix_y, intptr_t stride_y, pixel *pix_uv, intptr_t stride_uv, int mb_x );

- /* prefetch the next few macroblocks of a hpel reference frame */

- void (*prefetch_ref)( pixel *pix, intptr_t stride, int parity );

-

- void *(*memcpy_aligned)( void *dst, const void *src, size_t n );

- void (*memzero_aligned)( void *dst, size_t n );

-

- /* successive elimination prefilter */

- void (*integral_init4h)( uint16_t *sum, pixel *pix, intptr_t stride );

- void (*integral_init8h)( uint16_t *sum, pixel *pix, intptr_t stride );

- void (*integral_init4v)( uint16_t *sum8, uint16_t *sum4, intptr_t stride );

- void (*integral_init8v)( uint16_t *sum8, intptr_t stride );

-

- void (*frame_init_lowres_core)( pixel *src0, pixel *dst0, pixel *dsth, pixel *dstv, pixel *dstc,

- intptr_t src_stride, intptr_t dst_stride, int width, int height );

- weight_fn_t *weight;

- weight_fn_t *offsetadd;

- weight_fn_t *offsetsub;

- void (*weight_cache)( x264_t *, x264_weight_t * );

-

- void (*mbtree_propagate_cost)( int16_t *dst, uint16_t *propagate_in, uint16_t *intra_costs,

- uint16_t *inter_costs, uint16_t *inv_qscales, float *fps_factor, int len );

-

- void (*mbtree_propagate_list)( x264_t *h, uint16_t *ref_costs, int16_t (*mvs)[2],

- int16_t *propagate_amount, uint16_t *lowres_costs,

- int bipred_weight, int mb_y, int len, int list );

- } x264_mc_functions_t;

相关知识简述

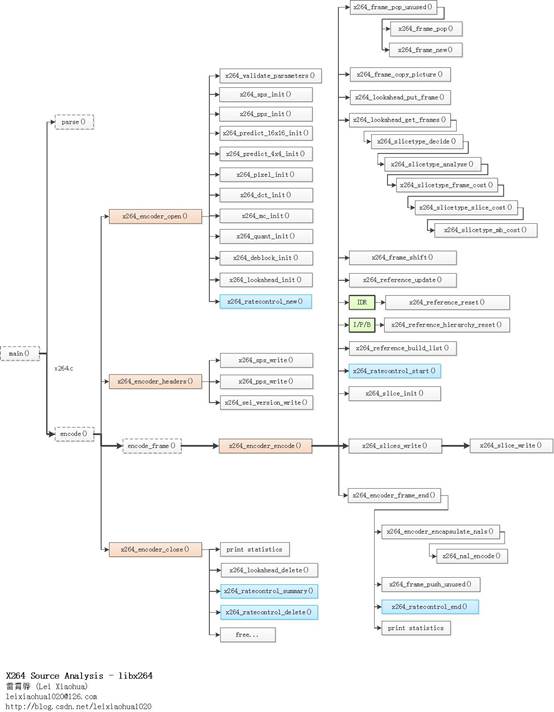

简单记录一下半像素插值的知识。《H.264标准》中规定,运动估计为1/4像素精度。因此在H.264编码和解码的过程中,需要将画面中的像素进行插值——简单地说就是把原先的1个像素点拓展成4x4一共16个点。下图显示了H.264编码和解码过程中像素插值情况。可以看出原先的G点的右下方通过插值的方式产生了a、b、c、d等一共16个点。

(1)半像素内插。这一步通过6抽头滤波器获得5个半像素点。

(2)线性内插。这一步通过简单的线性内插获得剩余的1/4像素点。

图中半像素内插点为b、m、h、s、j五个点。半像素内插方法是对整像素点进行6 抽头滤波得出,滤波器的权重为(1/32, -5/32, 5/8, 5/8, -5/32, 1/32)。例如b的计算公式为:

b=round( (E - 5F + 20G + 20H - 5I + J ) / 32)

剩下几个半像素点的计算关系如下:m:由B、D、H、N、S、U计算

h:由A、C、G、M、R、T计算

s:由K、L、M、N、P、Q计算

j:由cc、dd、h、m、ee、ff计算。需要注意j点的运算量比较大,因为cc、dd、ee、ff都需要通过半像素内插方法进行计算。

在获得半像素点之后,就可以通过简单的线性内插获得1/4像素内插点了。1/4像素内插的方式如下图所示。例如图中a点的计算公式如下:

A=round( (G+b)/2 )

在这里有一点需要注意:位于4个角的e、g、p、r四个点并不是通过j点计算计算的,而是通过b、h、s、m四个半像素点计算的。hpel_filter()

hpel_filter()用于进行半像素插值。该函数的定义位于common\mc.c,如下所示。- //半像素插值公式

- //b= (E - 5F + 20G + 20H - 5I + J)/32