热门标签

当前位置: article > 正文

深度学习——LSTM做情感分类任务_google colab 情感分类

作者:笔触狂放9 | 2024-04-04 15:14:32

赞

踩

google colab 情感分类

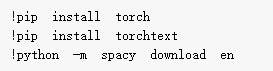

Google Colab环境下运行

在Colab环境下安装包

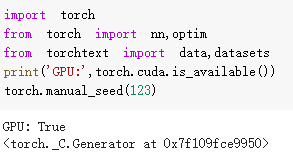

使用GPU

获得训练集与测试集

定义LSTM模型

class RNN(nn.Module): def __init__(self,vocab_size,embedding_dim,hidden_dim): super(RNN,self).__init__() # [vocab_size 10000] => [embedding_dim:100] self.embedding = nn.Embedding(vocab_size,embedding_dim) # [embedding_dim 100] => [hidden_dim 256] 两层 双向lstm dropout防止过拟合 self.rnn = nn.LSTM(embedding_dim,hidden_dim,num_layers=2,bidirectional=True,dropout=0.5) # lstm输出的h层信息[256*2] => 1 self.fc = nn.Linear(hidden_dim*2,1) self.dropout = nn.Dropout(0.5) def forward(self,x): # [seq,b,1] => [seq,b,100] embedding = self.dropout(self.embedding(x)) # output:[seq,b,hidden*2] # hidden/h:[num_layers*2,b,hid_dim] # cell/c:[num_layers*2,b,hid_dim] output,(hidden,cell) = self.rnn(embedding) # [num_layers*2,b,hid_dim] => 拼接2个[b,hid_dim] => [b,hid_dim*2] hidden = torch.cat([hidden[-2],hidden[-1]],dim=1) hidden = self.dropout(hidden) # [b,hid_dim*2] => [b,1] out = self.fc(hidden) return out

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

定义训练和评估函数

def train(rnn,iterator,optimizer,criterion): avg_acc = [] rnn.train() for i,batch in enumerate(iterator): # [seq,b] => [b,1] = [b] pred = rnn(batch.text).squeeze(1) loss = criterion(pred,batch.label) acc = binary_acc(pred,batch.label).item() avg_acc.append(acc) optimizer.zero_grad() loss.backward() optimizer.step() if i%10==0: print(i,acc) avg_acc = np.array(avg_acc).mean() print('avg_acc',avg_acc)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

def binary_acc(preds,y): preds = torch.round(torch.sigmoid(preds)) correct = torch.eq(preds,y).float() acc = correct.sum()/len(correct) return acc def eval(rnn,iterator,criterion): avg_acc = [] rnn.eval() with torch.no_grad(): for batch in iterator: pred = rnn(batch.text).squeeze(1) loss = criterion(pred,batch.label) acc = binary_acc(pred,batch.label).item() avg_acc.append(acc) avg_acc = np.array(avg_acc).mean() print('>>test:',avg_acc)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

开始训练

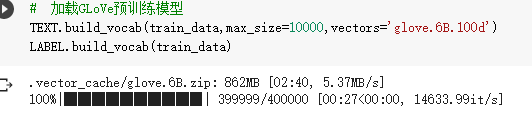

- 加载GLoVe预训练模型

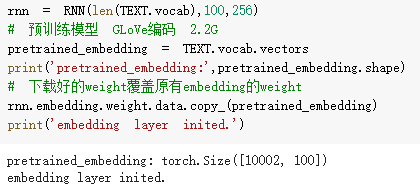

2. 初始化模型 用GLoVe编码的权重 替换 模型的embedding权重

-

设置batch_size optimizer criterion

-

epoch 训练

**关于embedding层的权重替换

用Gensim/GLoVe/Bert预训练获得的词向量进行LSTM训练 会获得更好的性能

可以见这篇blogNLP 预训练

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/笔触狂放9/article/detail/359434

推荐阅读

相关标签