
【Self-study notes】【PyTorch 2.0 Deep Learning from Scratch (PyTorch 2.0深度学习从零开始学), Wang Xiaohua】Chapter 4: Theoretical Foundations of Deep Learning

Author: 神奇cpp | 2024-06-19 08:50:42


4.3.5 A Python Implementation of the Feedback (Backpropagation) Neural Network

Questions I ran into:

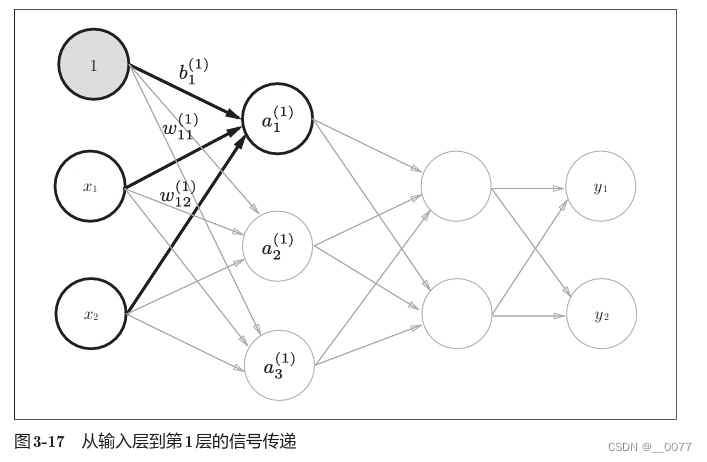

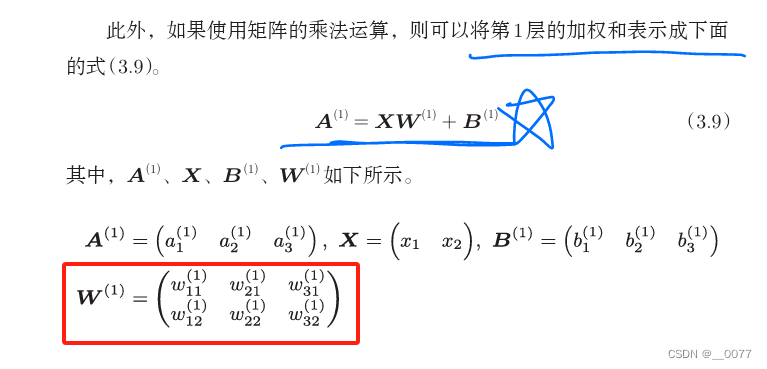

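1. The weight (coefficient) matrix W in the forward computation

The previous chapter described a layer's weights as [out, in], so I assumed that when computing Y = WX + b the matrix W would also have shape [out, in]. This code (and, it seems, the usual way of writing it by hand) does not do that. It uses the following structure:

So the stored W matrix is laid out as [in, out]. Writing the math with [out, in] indices, a1 = X1·W11 + X2·W12 + b1, and to compute a1 the code walks down one column of the stored matrix (column-first iteration over W). In this code b1 is not a separate variable: it is the weight attached to an extra input cell that always holds 1.0.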

```python
for j in range(self.hidden_n):
    total = 0.0
    for i in range(self.input_n):
        total += self.input_cells[i] * self.input_weights[i][j]  # walk down column j of W
```


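For `self.input_weights[i][j]` in the code above (indices written 1-based as (i, j), bias row left out):

- j = 1: visits (1,1), (2,1), i.e. W11, W12
- j = 2: visits (1,2), (2,2), i.e. W21, W22
- j = 3: visits (1,3), (2,3), i.e. W31, W32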
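To make the layout question concrete, here is a minimal pure-Python sketch. The helper names (`transpose`, `loop_forward`, `matvec`) and the numbers are made up for illustration. It stores W as [in, out] like the book's code, computes the pre-activations with the same column-walking loop, and checks that the result equals the textbook a = Wx with W stored as [out, in], which is just the transpose. For comparison, PyTorch's `nn.Linear` keeps its `weight` as (out_features, in_features) and computes y = xWᵀ + b, so both conventions describe the same computation.

```python
def transpose(m):
    # Hypothetical helper: [in, out] <-> [out, in]
    return [list(row) for row in zip(*m)]


def loop_forward(x, w_in_out):
    # Same loop order as the book: for each output j, walk down column j
    n_in, n_out = len(w_in_out), len(w_in_out[0])
    a = []
    for j in range(n_out):
        total = 0.0
        for i in range(n_in):
            total += x[i] * w_in_out[i][j]
        a.append(total)
    return a


def matvec(w_out_in, x):
    # Textbook form a = W x with W stored as [out, in]: row j dotted with x
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) for row in w_out_in]


x = [1.0, 2.0]                      # 2 inputs, bias omitted
w_in_out = [[0.1, 0.2, 0.3],        # shape [in=2, out=3], made-up values
            [0.4, 0.5, 0.6]]
w_out_in = transpose(w_in_out)      # shape [out=3, in=2]

# Order in which the loop reads w_in_out[i][j], as 1-based (i, j) pairs
order = [(i + 1, j + 1) for j in range(3) for i in range(2)]
print(order)   # [(1, 1), (2, 1), (1, 2), (2, 2), (1, 3), (2, 3)]
print(loop_forward(x, w_in_out))
print(matvec(w_out_in, x))
```

The two printed vectors agree, which is the point: [in, out] storage with column-wise loops and [out, in] storage with row-wise dot products are the same linear map.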

The network's forward-propagation function:

```python
def predict(self, inputs):
    # Copy the inputs; the last input cell is the bias and stays 1.0
    for i in range(self.input_n - 1):
        self.input_cells[i] = inputs[i]
    # Input layer -> hidden layer
    for j in range(self.hidden_n):
        total = 0.0
        for i in range(self.input_n):
            total += self.input_cells[i] * self.input_weights[i][j]
        self.hidden_cells[j] = sigmoid(total)
    # Hidden layer -> output layer
    for k in range(self.output_n):
        total = 0.0
        for j in range(self.hidden_n):
            total += self.hidden_cells[j] * self.output_weights[j][k]
        self.output_cells[k] = sigmoid(total)
    return self.output_cells[:]  # shallow copy
```
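In `predict` the bias b never appears explicitly: `setup` adds one extra input cell that stays 1.0 (the copy loop only fills `input_n - 1` cells), so the last row of `input_weights` plays the role of b. The sketch below, with hypothetical helper names and made-up numbers, checks that "append 1.0 to x and store b as an extra weight row" gives the same pre-activations as xW + b. Note that the hidden layer in the book's code has no such bias cell.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_with_bias_cell(x, w_aug):
    # w_aug has shape [in + 1, out]; its last row multiplies a constant 1.0 input
    x_aug = list(x) + [1.0]
    n_out = len(w_aug[0])
    return [sum(x_aug[i] * w_aug[i][j] for i in range(len(x_aug))) for j in range(n_out)]


def layer_explicit_bias(x, w, b):
    # Textbook form: a_j = sum_i x_i * W[i][j] + b_j, with W shaped [in, out]
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


x = [0.0, 1.0]
w = [[0.5, -0.3], [0.2, 0.8]]   # [in=2, out=2], made-up values
b = [0.1, -0.4]
w_aug = w + [b]                 # bias stored as one extra input row, as setup() does

a1 = layer_with_bias_cell(x, w_aug)
a2 = layer_explicit_bias(x, w, b)
hidden = [sigmoid(z) for z in a1]
print(a1, a2, hidden)
```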


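2. The shallow copy `self.output_cells[:]`

The forward function ends with `return self.output_cells[:]` rather than `return self.output_cells` so that it returns a shallow copy of `self.output_cells` instead of a reference to the original list. The caller receives a new list of output values. This matters because `predict` overwrites `self.output_cells` in place on every call: returning the list itself would let a later call silently change results the caller is still holding, and would let the caller modify the network's internal state. A shallow copy is enough here because the list holds only floats, which are immutable.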
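A small illustration of the difference, using a hypothetical `Layer` class that stands in for `BPNeuralNetwork` and overwrites its output buffer in place the same way `predict` does:

```python
class Layer:
    """Hypothetical stand-in: predict_*() overwrites an internal buffer in place."""

    def __init__(self):
        self.output_cells = [0.0]

    def predict_alias(self, x):
        self.output_cells[0] = x * 2.0
        return self.output_cells          # the internal list itself

    def predict_copy(self, x):
        self.output_cells[0] = x * 2.0
        return self.output_cells[:]       # a new list (shallow copy)


net = Layer()
first_alias = net.predict_alias(1.0)
net.predict_alias(5.0)
print(first_alias)   # [10.0]: the earlier result was overwritten

net = Layer()
first_copy = net.predict_copy(1.0)
net.predict_copy(5.0)
print(first_copy)    # [2.0]: the earlier result is preserved

# "Shallow" means nested objects are shared, not copied
nested = [[1.0], [2.0]]
shallow = nested[:]
shallow[0][0] = 99.0
print(nested[0][0])  # 99.0
```

For a flat list of floats, as in this network, the shallow copy fully decouples the caller from the network's state; for nested lists it would not.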

Source file: 源码\第四章\4_3.py (the book's Chapter 4 source code)

```python
import math
import random


def rand(a, b):
    # Uniform random number in [a, b)
    return (b - a) * random.random() + a


def make_matrix(m, n, fill=0.0):
    # m x n matrix as a list of lists
    mat = []
    for i in range(m):
        mat.append([fill] * n)
    return mat


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmod_derivate(x):
    # Derivative of the sigmoid, written in terms of its output x = sigmoid(z)
    return x * (1 - x)


class BPNeuralNetwork:
    def __init__(self):
        self.input_n = 0
        self.hidden_n = 0
        self.output_n = 0
        self.input_cells = []
        self.hidden_cells = []
        self.output_cells = []
        self.input_weights = []
        self.output_weights = []

    def setup(self, ni, nh, no):
        self.input_n = ni + 1  # +1 adds a bias input cell that stays fixed at 1.0
        self.hidden_n = nh
        self.output_n = no
        self.input_cells = [1.0] * self.input_n
        self.hidden_cells = [1.0] * self.hidden_n
        self.output_cells = [1.0] * self.output_n
        self.input_weights = make_matrix(self.input_n, self.hidden_n)    # weight matrix laid out as [in, out]
        self.output_weights = make_matrix(self.hidden_n, self.output_n)  # weight matrix laid out as [in, out]
        # Random initialization
        for i in range(self.input_n):
            for h in range(self.hidden_n):
                self.input_weights[i][h] = rand(-0.2, 0.2)
        for h in range(self.hidden_n):
            for o in range(self.output_n):
                self.output_weights[h][o] = rand(-2.0, 2.0)

    def predict(self, inputs):
        # Copy the inputs; the last input cell is the bias and stays 1.0
        for i in range(self.input_n - 1):
            self.input_cells[i] = inputs[i]
        for j in range(self.hidden_n):
            total = 0.0
            for i in range(self.input_n):
                total += self.input_cells[i] * self.input_weights[i][j]
            self.hidden_cells[j] = sigmoid(total)
        for k in range(self.output_n):
            total = 0.0
            for j in range(self.hidden_n):
                total += self.hidden_cells[j] * self.output_weights[j][k]
            self.output_cells[k] = sigmoid(total)
        return self.output_cells[:]  # shallow copy

    def back_propagate(self, case, label, learn):
        self.predict(case)
        # Error terms (deltas) of the output layer
        output_deltas = [0.0] * self.output_n
        for k in range(self.output_n):
            error = label[k] - self.output_cells[k]
            output_deltas[k] = sigmod_derivate(self.output_cells[k]) * error
        # Error terms of the hidden layer (uses the weights before they are updated)
        hidden_deltas = [0.0] * self.hidden_n
        for j in range(self.hidden_n):
            error = 0.0
            for k in range(self.output_n):
                error += output_deltas[k] * self.output_weights[j][k]
            hidden_deltas[j] = sigmod_derivate(self.hidden_cells[j]) * error
        # Update the hidden -> output weights
        for j in range(self.hidden_n):
            for k in range(self.output_n):
                self.output_weights[j][k] += learn * output_deltas[k] * self.hidden_cells[j]
        # Update the input -> hidden weights
        for i in range(self.input_n):
            for j in range(self.hidden_n):
                self.input_weights[i][j] += learn * hidden_deltas[j] * self.input_cells[i]
        # Squared-error loss of this sample (from the forward pass above)
        error = 0
        for o in range(len(label)):
            error += 0.5 * (label[o] - self.output_cells[o]) ** 2
        return error

    def train(self, cases, labels, limit=100, learn=0.05):
        for epoch in range(limit):
            error = 0
            for i in range(len(cases)):
                label = labels[i]
                case = cases[i]
                error += self.back_propagate(case, label, learn)
            # error holds the epoch's total loss but is not used further

    def test(self):
        cases = [
            [0, 0],
            [0, 1],
            [1, 0],
            [1, 1],
        ]
        labels = [[0], [1], [1], [0]]
        self.setup(2, 5, 1)
        self.train(cases, labels, 1000000, 0.05)
        for case in cases:
            print(self.predict(case))


if __name__ == '__main__':
    nn = BPNeuralNetwork()
    nn.test()
```
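The updates in `back_propagate` (`w += learn * delta * input`) are gradient descent on E = ½Σ(t − y)², because ∂E/∂w_jk = −δ_k·h_j for the output weights and ∂E/∂w_ij = −δ_j·x_i for the input weights. One way to check this is a numerical gradient check. The sketch below is a standalone re-implementation for illustration only (the function names, seed and sample are my own, not the book's): it computes the same deltas as `back_propagate` and compares −δ·input with central finite differences of the loss.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_in, w_out):
    # x already ends with the bias input 1.0; weights are stored as [in, out]
    hidden = [sigmoid(sum(x[i] * w_in[i][j] for i in range(len(x))))
              for j in range(len(w_in[0]))]
    out = [sigmoid(sum(hidden[j] * w_out[j][k] for j in range(len(hidden))))
           for k in range(len(w_out[0]))]
    return hidden, out


def loss(x, t, w_in, w_out):
    # Same loss that back_propagate() returns: 0.5 * sum((t - y)^2)
    _, out = forward(x, w_in, w_out)
    return sum(0.5 * (t[k] - out[k]) ** 2 for k in range(len(t)))


def analytic_grads(x, t, w_in, w_out):
    # Deltas computed the same way as in back_propagate()
    hidden, out = forward(x, w_in, w_out)
    out_d = [out[k] * (1 - out[k]) * (t[k] - out[k]) for k in range(len(out))]
    hid_d = [hidden[j] * (1 - hidden[j]) *
             sum(out_d[k] * w_out[j][k] for k in range(len(out)))
             for j in range(len(hidden))]
    # dE/dw = -delta * input, so "w += learn * delta * input" is a descent step
    g_in = [[-hid_d[j] * x[i] for j in range(len(hidden))] for i in range(len(x))]
    g_out = [[-out_d[k] * hidden[j] for k in range(len(out))] for j in range(len(hidden))]
    return g_in, g_out


def numeric_grad(x, t, w_in, w_out, w, i, j, eps=1e-6):
    # Central finite difference of the loss with respect to w[i][j]
    saved = w[i][j]
    w[i][j] = saved + eps
    plus = loss(x, t, w_in, w_out)
    w[i][j] = saved - eps
    minus = loss(x, t, w_in, w_out)
    w[i][j] = saved
    return (plus - minus) / (2 * eps)


random.seed(0)
n_in, n_hidden, n_out = 3, 5, 1   # 2 inputs + bias, as in setup(2, 5, 1)
w_in = [[random.uniform(-0.2, 0.2) for _ in range(n_hidden)] for _ in range(n_in)]
w_out = [[random.uniform(-2.0, 2.0) for _ in range(n_out)] for _ in range(n_hidden)]
x, t = [0.0, 1.0, 1.0], [1.0]     # input (0, 1) plus bias 1.0, label 1

g_in, g_out = analytic_grads(x, t, w_in, w_out)
max_diff = 0.0
for w, g in ((w_in, g_in), (w_out, g_out)):
    for i in range(len(w)):
        for j in range(len(w[0])):
            max_diff = max(max_diff, abs(g[i][j] - numeric_grad(x, t, w_in, w_out, w, i, j)))
print(max_diff)   # expected to be at round-off level
```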


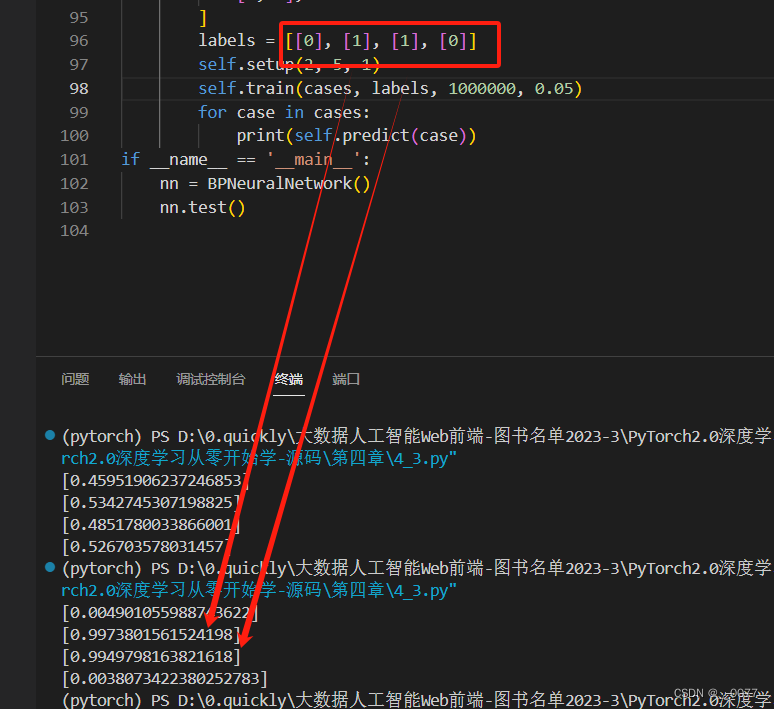

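Result: the original training result was not very good, so I simply appended two zeros to the number of training iterations, and the results improved considerably.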

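Statement: This article was contributed by a user and does not represent the position of the wpsshop blog (wpsshop博客); copyright belongs to the original author, and this site assumes no related legal liability. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/神奇cpp/article/detail/735949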
