
Kafka Architecture 4: Data Files


Design features of Kafka's efficient file storage

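- Kafka splits the large file of each partition of a topic into multiple smaller segment files. Small segments make it easy to periodically clean up or delete old segments according to the retention policy, which reduces disk usage. Kafka does not delete data merely because it has been consumed.

- The index information makes it possible to locate a message quickly and to determine the maximum size of a response.

- All index metadata is memory-mapped, so index lookups avoid disk I/O on the segment files.

- The index files are sparse, which greatly reduces the space taken up by index metadata.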

Configuring the brokers

I set up a local Kafka cluster with 3 brokers on my laptop. For how to set up the cluster, see Kafka Architecture 1: Getting Started.

- server.properties for the first broker:

```properties
broker.id=0
listeners=PLAINTEXT://:9092
log.dirs=/server/kafka/log/node1
zookeeper.connect=localhost:2181
offsets.topic.replication.factor=3
```


- server.properties for the second broker:

```properties
broker.id=1
listeners=PLAINTEXT://:9093
log.dirs=/server/kafka/log/node2
zookeeper.connect=localhost:2181
offsets.topic.replication.factor=3
```


- server.properties for the third broker:

```properties
broker.id=2
listeners=PLAINTEXT://:9094
log.dirs=/server/kafka/log/node3
zookeeper.connect=localhost:2181
offsets.topic.replication.factor=3
```


Once the files are configured, start each of the 3 brokers.
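The three server.properties files above differ only in broker.id, the listener port and log.dirs. These must be unique per broker when all brokers run on one machine. zookeeper.connect and offsets.topic.replication.factor are identical everywhere.

As a small illustration of that pattern, the hypothetical Java helper below renders the file contents for broker ids 0 to 2. It is not part of Kafka.

```java
// Hypothetical helper (not part of Kafka): renders server.properties for each
// broker of a single-machine cluster, following the pattern of the files above.
final class BrokerConfigs {
    static String serverProperties(int brokerId) {
        return String.join("\n",
                "broker.id=" + brokerId,                            // unique per broker
                "listeners=PLAINTEXT://:" + (9092 + brokerId),      // one port per broker on the same host
                "log.dirs=/server/kafka/log/node" + (brokerId + 1), // separate data directory per broker
                "zookeeper.connect=localhost:2181",                 // shared by all brokers
                "offsets.topic.replication.factor=3") + "\n";
    }

    public static void main(String[] args) {
        for (int id = 0; id < 3; id++) {
            System.out.println("# server.properties for broker " + id);
            System.out.print(serverProperties(id));
        }
    }
}
```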

Creating topics

We create 2 topics and look at the data files they produce.

- Create a topic named test1 with 3 partitions and a replication factor of 3:

```shell
bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 3 --partitions 3 --topic test1
Created topic test1.

bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic test1
Topic: test1 PartitionCount: 3 ReplicationFactor: 3 Configs:
Topic: test1 Partition: 0 Leader: 1 Replicas: 1,0,2 Isr: 1,0,2
Topic: test1 Partition: 1 Leader: 2 Replicas: 2,1,0 Isr: 2,1,0
Topic: test1 Partition: 2 Leader: 0 Replicas: 0,2,1 Isr: 0,2,1
```


- Create a topic named order with 4 partitions and a replication factor of 3:

```shell
bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 3 --partitions 4 --topic order
Created topic order.

bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic order
Topic: order PartitionCount: 4 ReplicationFactor: 3 Configs:
Topic: order Partition: 0 Leader: 0 Replicas: 0,2,1 Isr: 0,2,1
Topic: order Partition: 1 Leader: 1 Replicas: 1,0,2 Isr: 1,0,2
Topic: order Partition: 2 Leader: 2 Replicas: 2,1,0 Isr: 2,1,0
Topic: order Partition: 3 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2
```


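Then start a consumer client to consume data, and start a producer client to send data to the Kafka cluster. For how to produce and consume data, see Kafka Architecture 2: Partitions.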

How Kafka stores files

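- On the broker's disk, each partition is simply a directory named after the topic, a hyphen and the partition number. Each partition directory contains several segments; a segment is a logical grouping, not an actual directory. Each segment corresponds to three files: a .log file, an .index file and a .timeindex file. The .log file is where messages are actually stored. The .index and .timeindex files are index files used to look messages up.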

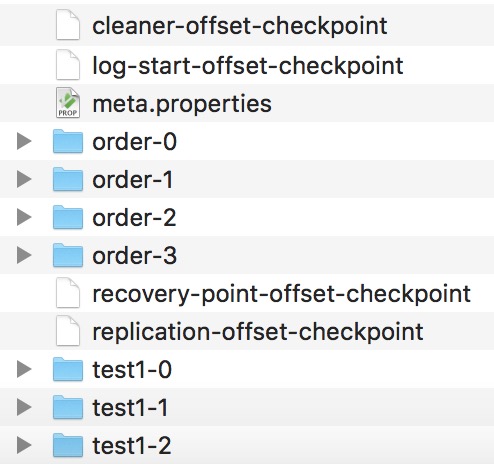

The test1 topic has three partitions, so its data lives in three directories: test1-0, test1-1 and test1-2.

The order topic has four partitions, so its data lives in four directories: order-0, order-1, order-2 and order-3.
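The directory name is just the topic name, a hyphen and the partition number. Below is a minimal Java sketch of building and parsing such names. The PartitionDir class is hypothetical, not a Kafka API.

When parsing, it splits on the last hyphen, because legal topic names (for example my-topic) may themselves contain hyphens.

```java
// Hypothetical helper, not a Kafka API: maps a partition to its directory name and back.
final class PartitionDir {
    // Builds "<topic>-<partition>", e.g. "order-3".
    static String dirName(String topic, int partition) {
        return topic + "-" + partition;
    }

    // Topic part: everything before the LAST '-', since the topic name may contain '-'.
    static String topicOf(String dir) {
        return dir.substring(0, dir.lastIndexOf('-'));
    }

    // Partition part: the digits after the last '-'.
    static int partitionOf(String dir) {
        return Integer.parseInt(dir.substring(dir.lastIndexOf('-') + 1));
    }

    public static void main(String[] args) {
        System.out.println(dirName("test1", 0));                  // test1-0
        System.out.println(topicOf("__consumer_offsets-40"));     // __consumer_offsets
        System.out.println(partitionOf("__consumer_offsets-40")); // 40
    }
}
```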

The file contents look like this:

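- The data in each partition directory is split into segment files (segments). A new segment is started once the active one reaches the configured size limit (log.segment.bytes, 1 GB by default, adjustable). Segments therefore share the same maximum size, but they do not necessarily hold the same number of messages. This design lets old segments be deleted quickly. By default, data is kept for 7 days (log.retention.hours=168).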


```shell
ZBMAC-2f32839f6:node1 yangyanping$ cd test1-0
ZBMAC-2f32839f6:test1-0 yangyanping$ ls -l
total 16
-rw-r--r-- 1 yangyanping 1603212982 10485760 12 11 10:26 00000000000000000000.index
-rw-r--r-- 1 yangyanping 1603212982 288 12 11 10:38 00000000000000000000.log
-rw-r--r-- 1 yangyanping 1603212982 10485756 12 11 10:26 00000000000000000000.timeindex
-rw-r--r-- 1 yangyanping 1603212982 8 12 11 10:38 leader-epoch-checkpoint
```

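In this listing the .log file holds only 288 bytes, yet the .index and .timeindex files are about 10 MB each. Here is a plausible explanation, stated as an assumption. Index files are preallocated up to log.index.size.max.bytes (assumed default 10485760 bytes, i.e. 10 MiB) and rounded down to a whole number of entries. The assumed entry sizes are:

- offset index: 8 bytes (4-byte relative offset + 4-byte position)
- time index: 12 bytes (8-byte timestamp + 4-byte relative offset)

The arithmetic below reproduces both file sizes.

```java
// Sketch under stated assumptions: why .index is 10485760 bytes and .timeindex 10485756 bytes.
final class IndexPrealloc {
    static final long MAX_INDEX_BYTES = 10 * 1024 * 1024; // assumed default of log.index.size.max.bytes
    static final int OFFSET_ENTRY_BYTES = 8;              // assumed: relative offset (4) + position (4)
    static final int TIME_ENTRY_BYTES = 12;               // assumed: timestamp (8) + relative offset (4)

    // Largest multiple of entrySize that fits into maxBytes.
    static long preallocatedSize(long maxBytes, int entrySize) {
        return maxBytes / entrySize * entrySize;
    }

    public static void main(String[] args) {
        System.out.println(preallocatedSize(MAX_INDEX_BYTES, OFFSET_ENTRY_BYTES)); // 10485760
        System.out.println(preallocatedSize(MAX_INDEX_BYTES, TIME_ENTRY_BYTES));   // 10485756
    }
}
```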

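Each log file is a sequence of "log entries". Conceptually, each log entry consists of a 4-byte integer N followed by an N-byte message body. Every message has a 64-bit (8-byte) offset that is unique within its partition. In current Kafka versions the offset is a logical sequence number (0, 1, 2, ...). The byte position where the message starts in the file is tracked separately, as the position field below shows. A message stored on disk looks like this: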

```
baseOffset: 0 lastOffset: 0 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 0 CreateTime: 1607763432580 size: 96 magic: 2 compresscodec: NONE crc: 3503205227 isvalid: true
| offset: 0 CreateTime: 1607763432580 keysize: -1 valuesize: 28 sequence: -1 headerKeys: [] payload: 你好,这是第1条数据
```

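The length-prefixed layout described above can be sketched with java.nio.ByteBuffer. This is only the simplified model from the paragraph: a 4-byte length N followed by N bytes. The real on-disk format in this dump is a record batch with magic 2, which also carries fields such as baseOffset, crc and CreateTime. LengthPrefixedLog is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Simplified "4-byte length N + N bytes" log entries (hypothetical, not Kafka's record format).
final class LengthPrefixedLog {
    // Serializes each payload as [int N][N bytes of UTF-8 message body].
    static ByteBuffer write(List<String> payloads) {
        List<byte[]> bodies = new ArrayList<>();
        int total = 0;
        for (String p : payloads) {
            byte[] body = p.getBytes(StandardCharsets.UTF_8);
            bodies.add(body);
            total += 4 + body.length;
        }
        ByteBuffer buf = ByteBuffer.allocate(total);
        for (byte[] body : bodies) {
            buf.putInt(body.length); // N
            buf.put(body);           // the N-byte message body
        }
        buf.flip(); // prepare the buffer for reading
        return buf;
    }

    // Reads entries back: take the 4-byte length, then exactly that many bytes.
    static List<String> read(ByteBuffer buf) {
        List<String> out = new ArrayList<>();
        while (buf.remaining() >= 4) {
            int n = buf.getInt();
            if (n < 0 || n > buf.remaining()) {
                break; // truncated or corrupt trailing entry: stop here
            }
            byte[] body = new byte[n];
            buf.get(body);
            out.add(new String(body, StandardCharsets.UTF_8));
        }
        return out;
    }
}
```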

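These log entries are not stored in a single file but split into multiple segments. Each segment's .log file is named after the offset of the first message in that segment, zero-padded to 20 digits, for example 00000000000000000000.log. In addition, each segment has index files that map the offsets of its log entries to positions in the .log file, as shown in the figure below.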

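Because every message is appended to the end of its partition, disk writes are sequential and therefore very efficient. Sequential disk access can in some cases even be faster than random memory access. This is one of the key reasons for Kafka's high throughput.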
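The Java sketch below combines the two ideas above:

- Records are appended in order.
- A new segment is rolled when the next record would push the active segment past a size limit.
- Each segment's .log file is named after its first offset, zero-padded to 20 digits.

The limit stands in for log.segment.bytes. The sketch ignores time-based rolling (log.roll.hours) and other roll conditions, so treat it as an approximation rather than Kafka's actual logic. SegmentRoller is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of size-based segment rolling (hypothetical, not Kafka's code).
final class SegmentRoller {
    // Returns the .log file names created when records of the given sizes are
    // appended, starting at offset 0, with segments capped at segmentBytes.
    static List<String> segmentNames(int[] recordSizes, long segmentBytes) {
        List<String> names = new ArrayList<>();
        long activeSize = 0;
        for (int offset = 0; offset < recordSizes.length; offset++) {
            int size = recordSizes[offset];
            // Open the first segment, or roll when this record would overflow the active one.
            if (names.isEmpty() || activeSize + size > segmentBytes) {
                names.add(String.format("%020d.log", offset)); // named after its first offset
                activeSize = 0;
            }
            activeSize += size;
        }
        return names;
    }

    public static void main(String[] args) {
        int[] sizes = {96, 96, 96, 97, 97, 97};
        System.out.println(segmentNames(sizes, 200));
    }
}
```

The example uses record sizes of 96 and 97 bytes, as in the dumps in this post, and a tiny 200-byte limit. Segments are rolled at offsets 0, 2 and 4, producing 00000000000000000000.log, 00000000000000000002.log and 00000000000000000004.log. With mixed record sizes, segments end up holding different numbers of messages.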

- __consumer_offsets

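The consumer offsets topic __consumer_offsets has 50 partitions by default (offsets.topic.num.partitions). Its partitions are not created when the 3 brokers start; they are created once consumers start consuming data. Because offsets.topic.replication.factor=3 and the cluster has exactly 3 brokers, every broker holds a replica of every partition. As a result, the same __consumer_offsets directories appear on all three nodes: node1, node2 and node3.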

```shell
ZBMAC-****:node1 yangyanping$ ls -l
total 32
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-0
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-1
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-10
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-11
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-12
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-13
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-14
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-15
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-16
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-17
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-18
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-19
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-2
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-20
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-21
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-22
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-23
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-24
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-25
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-26
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-27
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-28
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-29
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-3
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-30
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-31
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-32
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-33
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-34
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-35
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-36
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-37
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-38
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-39
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-4
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-40
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-41
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-42
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-43
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-44
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-45
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-46
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-47
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-48
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-49
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-5
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-6
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-7
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-8
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:56 __consumer_offsets-9
-rw-r--r-- 1 yangyanping 1603212982 0 12 12 16:49 cleaner-offset-checkpoint
-rw-r--r-- 1 yangyanping 1603212982 4 12 12 17:19 log-start-offset-checkpoint
-rw-r--r-- 1 yangyanping 1603212982 88 12 12 16:50 meta.properties
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:52 order-0
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:52 order-1
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:52 order-2
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:52 order-3
-rw-r--r-- 1 yangyanping 1603212982 1265 12 12 17:19 recovery-point-offset-checkpoint
-rw-r--r-- 1 yangyanping 1603212982 1268 12 12 17:19 replication-offset-checkpoint
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:52 test1-0
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:57 test1-1
drwxr-xr-x 6 yangyanping 1603212982 192 12 12 16:57 test1-2
```


Common commands

- List all topics

```shell
./bin/kafka-topics.sh --bootstrap-server 127.0.0.1:9092 --list
order
test1
```


- List all consumer groups

```shell
ZBMAC-*****:node1 yangyanping$ ./bin/kafka-consumer-groups.sh --all-groups --bootstrap-server 127.0.0.1:9092 --list
GroupA
GroupB
```


- Query consumer offsets with bin/kafka-consumer-groups.sh, here for GroupA:

```shell
ZB***C-***:node1 yangyanping$ ./bin/kafka-consumer-groups.sh --describe --group GroupA --bootstrap-server 127.0.0.1:9092
GROUP   TOPIC  PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG  CONSUMER-ID                                      HOST       CLIENT-ID
GroupA  test1  0          3               3               0    consumer-1-1560749b-f1e9-403d-8eb9-e822566712f9  /10.0.*.*  consumer-1
GroupA  test1  2          4               4               0    consumer-1-9eac8e94-b3da-4ce4-b121-b04cca65a185  /10.0.*.*  consumer-1
GroupA  test1  1          3               3               0    consumer-1-6529440d-576e-4a48-800b-5037c2e59d91  /10.0.*.*  consumer-1
GroupA  order  2          3               3               0    -                                                -          -
GroupA  order  3          2               2               0    -                                                -          -
GroupA  order  0          3               3               0    -                                                -          -
GroupA  order  1          2               2               0    -                                                -          -
```

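In this output, LAG is LOG-END-OFFSET minus CURRENT-OFFSET. The committed offset is the next offset the group will read, so LAG 0 means the group has caught up. The order rows show "-" under CONSUMER-ID. As far as I can tell, this means the group has committed offsets for those partitions but no active member is currently assigned to them.

Below is a small Java sketch that recomputes LAG from a row. The helper is hypothetical and assumes the column order shown above.

```java
// Hypothetical helper for the kafka-consumer-groups.sh --describe output shown above.
final class ConsumerLag {
    // LAG = LOG-END-OFFSET - CURRENT-OFFSET (the committed offset is the next one to read).
    static long lag(long currentOffset, long logEndOffset) {
        return logEndOffset - currentOffset;
    }

    // Recomputes LAG from one row, assuming the column order
    // GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID.
    // Rows whose offsets are "-" (nothing committed yet) are not handled.
    static long lagFromRow(String row) {
        String[] cols = row.trim().split("\\s+");
        return lag(Long.parseLong(cols[3]), Long.parseLong(cols[4]));
    }

    public static void main(String[] args) {
        String row = "GroupA  test1  2  4  4  0  consumer-1-9eac8e94-b3da-4ce4-b121-b04cca65a185  /10.0.*.*  consumer-1";
        System.out.println(lagFromRow(row));
    }
}
```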

- Read the entire contents of the __consumer_offsets topic

```shell
./bin/kafka-console-consumer.sh --topic __consumer_offsets --group GroupA --bootstrap-server 127.0.0.1:9092 --formatter "kafka.coordinator.group.GroupMetadataManager\$OffsetsMessageFormatter" --consumer.config config/consumer.properties --from-beginning
[GroupA,test1,1]::OffsetAndMetadata(offset=3, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607673916329, expireTimestamp=None)
[GroupA,test1,0]::OffsetAndMetadata(offset=3, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607673916686, expireTimestamp=None)
[GroupA,test1,2]::OffsetAndMetadata(offset=4, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607673918063, expireTimestamp=None)
```


Consumer group offsets

- Work out which partition of the __consumer_offsets topic holds a given consumer group

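Kafka uses the formula below to decide which partition of __consumer_offsets stores a group's offsets (__consumer_offsets has 50 partitions by default). In this example the partition is Math.abs("GroupA".hashCode()) % 50 = 40, so partition 40 of __consumer_offsets holds GroupA's offsets. Let's verify this.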

```java
Math.abs(groupID.hashCode()) % 50
```

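The calculation can be checked with plain Java. Based on my reading of the Kafka source, the sketch assumes Kafka uses a helper (Utils.abs) rather than Math.abs. That helper returns 0 for Integer.MIN_VALUE, where Math.abs would stay negative. numPartitions stands for offsets.topic.num.partitions (default 50). OffsetsPartition is a hypothetical class name.

```java
// Sketch: which __consumer_offsets partition stores a group's offsets.
final class OffsetsPartition {
    // Assumption: mirrors Kafka's Utils.abs, which maps Integer.MIN_VALUE to 0
    // instead of returning a negative value like Math.abs does.
    static int abs(int n) {
        return n == Integer.MIN_VALUE ? 0 : Math.abs(n);
    }

    // numPartitions corresponds to offsets.topic.num.partitions (default 50).
    static int partitionFor(String groupId, int numPartitions) {
        return abs(groupId.hashCode()) % numPartitions;
    }

    public static void main(String[] args) {
        System.out.println(partitionFor("GroupA", 50)); // prints 40
    }
}
```

For "GroupA", String.hashCode() is 2141373890, and 2141373890 % 50 = 40. This matches the partition read in the command below.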

- Read the offsets of the given consumer group from that partition

```shell
ZBMAC-****:node1 yangyanping$ ./bin/kafka-console-consumer.sh --topic __consumer_offsets --partition 40 --bootstrap-server 127.0.0.1:9092 --formatter "kafka.coordinator.group.GroupMetadataManager\$OffsetsMessageFormatter"
[GroupA,test1,2]::OffsetAndMetadata(offset=4, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607683019020, expireTimestamp=None)
[GroupA,test1,1]::OffsetAndMetadata(offset=3, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607683019295, expireTimestamp=None)
[GroupA,test1,0]::OffsetAndMetadata(offset=3, leaderEpoch=Optional.empty, metadata=, commitTimestamp=1607683019879, expireTimestamp=None)
```


As you can see, every entry in the __consumer_offsets topic has the format [Group,Topic,Partition]::OffsetAndMetadata(offset, leaderEpoch, metadata, commitTimestamp, expireTimestamp).
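When scripts consume these entries, each line can be split with a regular expression. The Java sketch below (OffsetEntryParser is hypothetical) assumes the exact line layout printed in this post. The formatter's output may differ between Kafka versions, and the pattern assumes the group id contains no ',' or ']'.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser for lines printed by OffsetsMessageFormatter, as shown above.
final class OffsetEntryParser {
    // One committed offset.
    static final class Entry {
        final String group;
        final String topic;
        final int partition;
        final long offset;
        final long commitTimestamp;

        Entry(String group, String topic, int partition, long offset, long commitTimestamp) {
            this.group = group;
            this.topic = topic;
            this.partition = partition;
            this.offset = offset;
            this.commitTimestamp = commitTimestamp;
        }
    }

    // [group,topic,partition]::OffsetAndMetadata(offset=N, ..., commitTimestamp=T, ...)
    private static final Pattern LINE = Pattern.compile(
            "\\[([^,\\]]+),([^,\\]]+),(\\d+)\\]::OffsetAndMetadata\\(offset=(\\d+),.*commitTimestamp=(\\d+).*");

    // Returns null for lines that are not offset-commit entries.
    static Entry parse(String line) {
        Matcher m = LINE.matcher(line.trim());
        if (!m.matches()) {
            return null;
        }
        return new Entry(m.group(1), m.group(2), Integer.parseInt(m.group(3)),
                Long.parseLong(m.group(4)), Long.parseLong(m.group(5)));
    }
}
```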

Kafka file storage format

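Kafka stores messages and offsets in files. The on-disk format is the same as the format of messages sent by producers or delivered to consumers. Because disk storage and network transfer use the same message format, Kafka can send messages to consumers with zero copy. It also avoids decompressing and recompressing messages that producers have already compressed.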

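Besides the key, value and offset, a message also contains its size, a checksum, the message format version (the magic byte), the compression codec (Snappy, GZip or LZ4) and a timestamp. The timestamp can be either the time the producer sent the message or the time the message arrived at the broker. This is configurable via message.timestamp.type: CreateTime or LogAppendTime.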

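If the producer sends compressed messages, all messages in a batch are compressed together and sent as a single "wrapper message". The broker receives this one message and passes it on to consumers. After decompressing it, a consumer sees every message in the batch, each with its own timestamp and offset.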

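If compression is enabled on the producer, larger batches give better compression, both on the network and on the broker's disks. Sharing one format also has a cost. If the message format used by consumers changes, both the wire format and the on-disk format must change with it. Brokers then need to know how to handle files that contain messages in two formats, for example during an upgrade.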
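The effect of batching on compression is easy to observe outside Kafka. The Java sketch below uses java.util.zip GZIP, one of the codecs mentioned above, purely as a stand-in for the producer's compression. It compresses 100 short messages one at a time, then as a single concatenated batch. The messages and the BatchCompression class are made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

// Illustration only: GZIP stands in for the producer's compression codec.
final class BatchCompression {
    // Size in bytes of data after GZIP compression.
    static int gzipSize(byte[] data) {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(bos)) {
            gz.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory stream
        }
        return bos.size();
    }

    // Total compressed size when every message is compressed on its own.
    static int individually(String[] messages) {
        int total = 0;
        for (String m : messages) {
            total += gzipSize(m.getBytes(StandardCharsets.UTF_8));
        }
        return total;
    }

    // Compressed size when all messages are concatenated and compressed once.
    static int asBatch(String[] messages) {
        ByteArrayOutputStream batch = new ByteArrayOutputStream();
        for (String m : messages) {
            byte[] b = m.getBytes(StandardCharsets.UTF_8);
            batch.write(b, 0, b.length);
        }
        return gzipSize(batch.toByteArray());
    }

    public static void main(String[] args) {
        String[] msgs = new String[100];
        for (int i = 0; i < msgs.length; i++) {
            msgs[i] = "你好,这是第" + (i + 1) + "条数据";
        }
        System.out.println("compressed one by one: " + individually(msgs) + " bytes");
        System.out.println("compressed as a batch: " + asBatch(msgs) + " bytes");
    }
}
```

The batch comes out much smaller for two reasons. Each individually compressed message pays for its own GZIP header and trailer. Also, text repeated across messages can only be exploited when the messages are compressed together.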

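Kafka ships with a tool called DumpLogSegments for inspecting the contents of a log segment. It shows each message's offset, checksum, magic byte, message size and compression codec. Run it as follows: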

```shell
/kafka-run-class.sh kafka.tools.DumpLogSegments
```

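With the --deep-iteration parameter, the tool also shows the messages compressed inside wrapper messages.

The --files parameter specifies the partition segment files you want to inspect.

The --print-data-log parameter prints the message contents in detail.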

Using the message-sending code from [Kafka Architecture 2: Partitions], send 1000 messages:

```shell
ZBMAC-2f32839f6:kafka yangyanping$ ./node1/bin/kafka-run-class.sh kafka.tools.DumpLogSegments --print-data-log --files log/node1//test1-0/00000000000000000000.log
Dumping log/node1/test1-0/00000000000000000000.log
Starting offset: 0
baseOffset: 0 lastOffset: 0 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 0 CreateTime: 1607763432580 size: 96 magic: 2 compresscodec: NONE crc: 3503205227 isvalid: true
| offset: 0 CreateTime: 1607763432580 keysize: -1 valuesize: 28 sequence: -1 headerKeys: [] payload: 你好,这是第1条数据
baseOffset: 1 lastOffset: 1 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 96 CreateTime: 1607763432903 size: 96 magic: 2 compresscodec: NONE crc: 3339763189 isvalid: true
| offset: 1 CreateTime: 1607763432903 keysize: -1 valuesize: 28 sequence: -1 headerKeys: [] payload: 你好,这是第4条数据
baseOffset: 2 lastOffset: 2 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 192 CreateTime: 1607763433208 size: 96 magic: 2 compresscodec: NONE crc: 427826089 isvalid: true
| offset: 2 CreateTime: 1607763433208 keysize: -1 valuesize: 28 sequence: -1 headerKeys: [] payload: 你好,这是第7条数据
baseOffset: 3 lastOffset: 3 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 288 CreateTime: 1607763433510 size: 97 magic: 2 compresscodec: NONE crc: 325346822 isvalid: true
| offset: 3 CreateTime: 1607763433510 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第10条数据
baseOffset: 4 lastOffset: 4 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 385 CreateTime: 1607766512778 size: 97 magic: 2 compresscodec: NONE crc: 3774139041 isvalid: true
| offset: 4 CreateTime: 1607766512778 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第12条数据
baseOffset: 5 lastOffset: 5 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 482 CreateTime: 1607766513081 size: 97 magic: 2 compresscodec: NONE crc: 303197626 isvalid: true
| offset: 5 CreateTime: 1607766513081 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第15条数据
baseOffset: 6 lastOffset: 6 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 579 CreateTime: 1607766513388 size: 97 magic: 2 compresscodec: NONE crc: 1456128128 isvalid: true
| offset: 6 CreateTime: 1607766513388 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第18条数据
baseOffset: 7 lastOffset: 7 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 676 CreateTime: 1607766513692 size: 97 magic: 2 compresscodec: NONE crc: 1358806338 isvalid: true
| offset: 7 CreateTime: 1607766513692 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第21条数据
baseOffset: 8 lastOffset: 8 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 773 CreateTime: 1607766514001 size: 97 magic: 2 compresscodec: NONE crc: 1627468606 isvalid: true
| offset: 8 CreateTime: 1607766514001 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第24条数据
baseOffset: 9 lastOffset: 9 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 870 CreateTime: 1607766514310 size: 97 magic: 2 compresscodec: NONE crc: 3374708368 isvalid: true
| offset: 9 CreateTime: 1607766514310 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第27条数据
baseOffset: 10 lastOffset: 10 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 967 CreateTime: 1607766514616 size: 97 magic: 2 compresscodec: NONE crc: 3530470813 isvalid: true
| offset: 10 CreateTime: 1607766514616 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第30条数据
baseOffset: 11 lastOffset: 11 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1064 CreateTime: 1607766514925 size: 97 magic: 2 compresscodec: NONE crc: 3770451461 isvalid: true
| offset: 11 CreateTime: 1607766514925 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第33条数据
baseOffset: 12 lastOffset: 12 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1161 CreateTime: 1607766515231 size: 97 magic: 2 compresscodec: NONE crc: 529667187 isvalid: true
| offset: 12 CreateTime: 1607766515231 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第36条数据
baseOffset: 13 lastOffset: 13 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1258 CreateTime: 1607766515538 size: 97 magic: 2 compresscodec: NONE crc: 2841601712 isvalid: true
| offset: 13 CreateTime: 1607766515538 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第39条数据
baseOffset: 14 lastOffset: 14 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1355 CreateTime: 1607766515845 size: 97 magic: 2 compresscodec: NONE crc: 1806100829 isvalid: true
| offset: 14 CreateTime: 1607766515845 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第42条数据
baseOffset: 15 lastOffset: 15 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1452 CreateTime: 1607766516154 size: 97 magic: 2 compresscodec: NONE crc: 529096697 isvalid: true
| offset: 15 CreateTime: 1607766516154 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第45条数据
baseOffset: 16 lastOffset: 16 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1549 CreateTime: 1607766516460 size: 97 magic: 2 compresscodec: NONE crc: 3111030888 isvalid: true
| offset: 16 CreateTime: 1607766516460 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第48条数据
baseOffset: 17 lastOffset: 17 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1646 CreateTime: 1607766516763 size: 97 magic: 2 compresscodec: NONE crc: 161022195 isvalid: true
| offset: 17 CreateTime: 1607766516763 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第51条数据
baseOffset: 18 lastOffset: 18 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1743 CreateTime: 1607766517070 size: 97 magic: 2 compresscodec: NONE crc: 807131904 isvalid: true
| offset: 18 CreateTime: 1607766517070 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第54条数据
baseOffset: 19 lastOffset: 19 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1840 CreateTime: 1607766517378 size: 97 magic: 2 compresscodec: NONE crc: 1920870794 isvalid: true
| offset: 19 CreateTime: 1607766517378 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第57条数据
baseOffset: 20 lastOffset: 20 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 1937 CreateTime: 1607766517685 size: 97 magic: 2 compresscodec: NONE crc: 2930901588 isvalid: true
| offset: 20 CreateTime: 1607766517685 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第60条数据
baseOffset: 21 lastOffset: 21 count: 1 baseSequence: -1 lastSequence: -1 producerId: -1 producerEpoch: -1 partitionLeaderEpoch: 0 isTransactional: false isControl: false position: 2034 CreateTime: 1607766517988 size: 97 magic: 2 compresscodec: NONE crc: 2860258910 isvalid: true
| offset: 21 CreateTime: 1607766517988 keysize: -1 valuesize: 29 sequence: -1 headerKeys: [] payload: 你好,这是第63条数据
```

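In the dump, position is the byte offset at which each batch starts in the .log file. Each position therefore equals the previous position plus the previous batch's size: 0, 96, 192, 288, 385, and so on. In this particular dump, size is also always valuesize + 68. The hypothetical BatchPositions helper below recomputes the positions from the sizes.

```java
import java.util.Arrays;

// Hypothetical helper: recomputes batch start positions from batch sizes.
final class BatchPositions {
    // positions[i] = sizes[0] + ... + sizes[i-1], i.e. where batch i starts in the .log file.
    static long[] positions(int[] sizes) {
        long[] positions = new long[sizes.length];
        long next = 0;
        for (int i = 0; i < sizes.length; i++) {
            positions[i] = next;
            next += sizes[i];
        }
        return positions;
    }

    public static void main(String[] args) {
        // Sizes of the first six batches in the dump above.
        int[] sizes = {96, 96, 96, 97, 97, 97};
        System.out.println(Arrays.toString(positions(sizes)));
    }
}
```

This prints [0, 96, 192, 288, 385, 482], matching the position values in the dump.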

- Offset index file

It maps message offsets to physical positions in the segment file, so the physical location of a message can be found quickly.

```shell
***-***:kafka yangyanping$ ./node1/bin/kafka-run-class.sh kafka.tools.DumpLogSegments --print-data-log --files log/node1/test1-2/00000000000000000000.index
Dumping log/node1/test1-2/00000000000000000000.index
offset: 43 position: 4179
offset: 85 position: 8295
offset: 127 position: 12411
offset: 169 position: 16527
offset: 211 position: 20643
offset: 253 position: 24759
offset: 295 position: 28875
```

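Because the index is sparse, it does not contain every offset. Here an entry appears roughly every 4 KB of log data, consistent with log.index.interval.bytes (4096 by default). The first two entries are 8295 - 4179 = 4116 bytes apart, i.e. 42 messages averaging 98 bytes.

A lookup works in three steps:

1. Take the greatest indexed offset that is less than or equal to the target.
2. Jump to that entry's position in the .log file.
3. Scan forward from there.

The Java sketch below uses TreeMap.floorEntry as a stand-in for the binary search Kafka performs over the memory-mapped index. SparseOffsetIndex is hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for a sparse offset index (.index file).
final class SparseOffsetIndex {
    // offset -> byte position of that message in the .log file
    private final TreeMap<Long, Long> entries = new TreeMap<>();

    void add(long offset, long position) {
        entries.put(offset, position);
    }

    // Where to start scanning the .log file for targetOffset: the position of
    // the greatest indexed offset <= targetOffset, or 0 (start of the segment)
    // if targetOffset comes before the first index entry.
    long startPositionFor(long targetOffset) {
        Map.Entry<Long, Long> e = entries.floorEntry(targetOffset);
        return e == null ? 0L : e.getValue();
    }

    public static void main(String[] args) {
        SparseOffsetIndex index = new SparseOffsetIndex();
        long[][] dumped = {{43, 4179}, {85, 8295}, {127, 12411}, {169, 16527},
                           {211, 20643}, {253, 24759}, {295, 28875}};
        for (long[] entry : dumped) {
            index.add(entry[0], entry[1]);
        }
        // Offset 100 is not indexed; scanning starts at the entry for offset 85.
        System.out.println(index.startPositionFor(100)); // 8295
    }
}
```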

- Timestamp index file

It is used to find the offset that corresponds to a given timestamp.

```shell
ZBMAC-2f32839f6:kafka yangyanping$ ./node1/bin/kafka-run-class.sh kafka.tools.DumpLogSegments --print-data-log --files log/node1/test1-2/00000000000000000000.timeindex
Dumping log/node1/test1-2/00000000000000000000.timeindex
timestamp: 1607766525142 offset: 43
timestamp: 1607766538045 offset: 85
timestamp: 1607766550963 offset: 127
timestamp: 1607766563812 offset: 169
timestamp: 1607766576686 offset: 211
timestamp: 1607766589535 offset: 253
timestamp: 1607766602370 offset: 295
ZBMAC-2f32839f6:kafka yangyanping$
```

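A timestamp lookup works the same way, one level up:

1. Take the greatest index entry whose timestamp is less than or equal to the target.
2. From that entry's offset (located via the offset index), scan forward for the first message whose timestamp is at least the target.

Below is a minimal Java sketch with hypothetical names. It again uses TreeMap.floorEntry in place of Kafka's binary search. When the target is earlier than every entry, it falls back to the segment's base offset.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for a time index (.timeindex file).
final class TimeIndexLookup {
    // timestamp -> offset
    private final TreeMap<Long, Long> entries = new TreeMap<>();

    void add(long timestamp, long offset) {
        entries.put(timestamp, offset);
    }

    // Offset from which to scan forward for the first message whose timestamp
    // is >= targetTimestamp: the offset of the greatest indexed timestamp
    // <= targetTimestamp, or the segment's base offset if there is none.
    long startOffsetFor(long targetTimestamp, long segmentBaseOffset) {
        Map.Entry<Long, Long> e = entries.floorEntry(targetTimestamp);
        return e == null ? segmentBaseOffset : e.getValue();
    }

    public static void main(String[] args) {
        TimeIndexLookup index = new TimeIndexLookup();
        long[][] dumped = {{1607766525142L, 43}, {1607766538045L, 85}, {1607766550963L, 127},
                           {1607766563812L, 169}, {1607766576686L, 211}, {1607766589535L, 253},
                           {1607766602370L, 295}};
        for (long[] entry : dumped) {
            index.add(entry[0], entry[1]);
        }
        System.out.println(index.startOffsetFor(1607766540000L, 0)); // 85
    }
}
```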

- 00000000000000000000.index: index file

- 00000000000000000000.log: data file

- Relationship diagram

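Zero copy

Without zero copy, sending a file over the network with read() and write() copies the data four times:

- Calling read(file, tmp_buf, len) copies the contents of file A into the Read Buffer in kernel mode (done by DMA).

- The CPU copies the data from the kernel-mode buffer into the user-mode buffer.

- Calling write(socket, tmp_buf, len) copies the user-mode data into the Socket Buffer in kernel mode.

- The data in the kernel-mode Socket Buffer is copied to the network card and sent (done by DMA).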
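With zero copy, steps 2 and 3 disappear. The data goes from the kernel read buffer straight to the socket buffer (or, with hardware support, directly to the NIC) without passing through user space. On the JVM this is exposed as FileChannel.transferTo, which maps to sendfile on Linux. Kafka's design documentation names it as the mechanism for sending log data to consumers.

The sketch below (ZeroCopySend is a hypothetical name) only shows the call. Note that transferTo may move fewer bytes than requested, hence the loop.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical helper: sends a whole file to a channel with FileChannel.transferTo.
final class ZeroCopySend {
    // When target is a socket channel, the JVM can hand the transfer to the OS
    // (sendfile on Linux), so the bytes are not copied through a user-space buffer.
    static long send(Path file, WritableByteChannel target) {
        try (FileChannel in = FileChannel.open(file, StandardOpenOption.READ)) {
            long size = in.size();
            long sent = 0;
            // transferTo may move fewer bytes than requested, so loop until done
            // (assumes a blocking target channel).
            while (sent < size) {
                sent += in.transferTo(sent, size - sent, target);
            }
            return sent;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In Kafka the target would be a socket channel to a consumer. With an in-memory target, as in a quick test, the JDK falls back to an ordinary buffered copy: the call still works, but nothing is zero-copy.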