- 1Java课程设计:基于Javaweb的图书管理系统(内附源码)_javaweb图书管理系统源代码

- 2ObjectScript的命名规范

- 3内网穿透和frp配置_natfrp

- 4如何申请华为HarmonyOS应用开发者高级证书_鸿蒙开发证书

- 5[Docker]十.Docker Swarm讲解_swarm管理docker

- 6如何查看python安装了哪些库?_查看python包含的库

- 7AI的Prompt是什么_aiprompt

- 82022下半年软考「集成」100题---【建议背诵】_软考系统集成项目管理真题22

- 9在CentOS环境下mysql如何远程连接_centos 远程服务器mysql

- 10Python_对于空列表,ls.pop()会触发异常

Huggingface trainer、model.from_pretrained、tokenizer()简单介绍(笔记)_transformes trainer 如何保存优化器的状态

赞

踩

目录

1.1、在trainer训练时,怎么控制模型保存的数量的同时,还可以保存最优的模型参数呢?

1.2、使用trainer训练ds ZeRO3或fsdp时,怎么保存模型为huggingface格式呢?

二、PreTrained Model中的from_pretrained常见的参数

五、Trainer 中的 _inner_training_loop 函数

六、Trainer 中的 _load_best_model函数

七、Trainer 中的_save_checkpoint函数

7.2、保存优化器状态和学习率调度_save_optimizer_and_scheduler

十一、模型加载函数deepspeed_load_checkpoint与_load_from_checkpoint

11.1、deepspeed_load_checkpoint 加载deepspeed模型

11.2、_load_from_checkpoint 加载除了deepspeed情况的模型

12.1 梯度检查点(PEFTmodel,PreTrainedModel)

12.2 transformer logger、set_seed()

12.4.2 tokenizer 参数及使用方法(encode,encode_plus,batch_encode_plus,decode...)

十三、TrainingArguments 与Seq2SeqTrainingArguments 常见的参数

13.1 Seq2SeqTrainingArguments 参数

13.2 Seq2SeqTrainingArguments 参数中GenerationConfig的常见参数(用于 generate 推理函数)

本文主要简单介绍 huggingface 中常见函数的使用

torch版本:2.1

Transformers 版本:4.39-4.40.1、4.30

huggingface transformers 中文deepspeed、trainer等文档:

一、trainer保存模型的问题

1.1、在trainer训练时,怎么控制模型保存的数量的同时,还可以保存最优的模型参数呢?

transformers:4.39

在使用Hugging Face的

Trainer进行模型训练时,通过设置TrainingArguments中的一些参数,你可以控制保存模型的数量,并确保最优模型不会被删除。主要涉及以下几个参数:

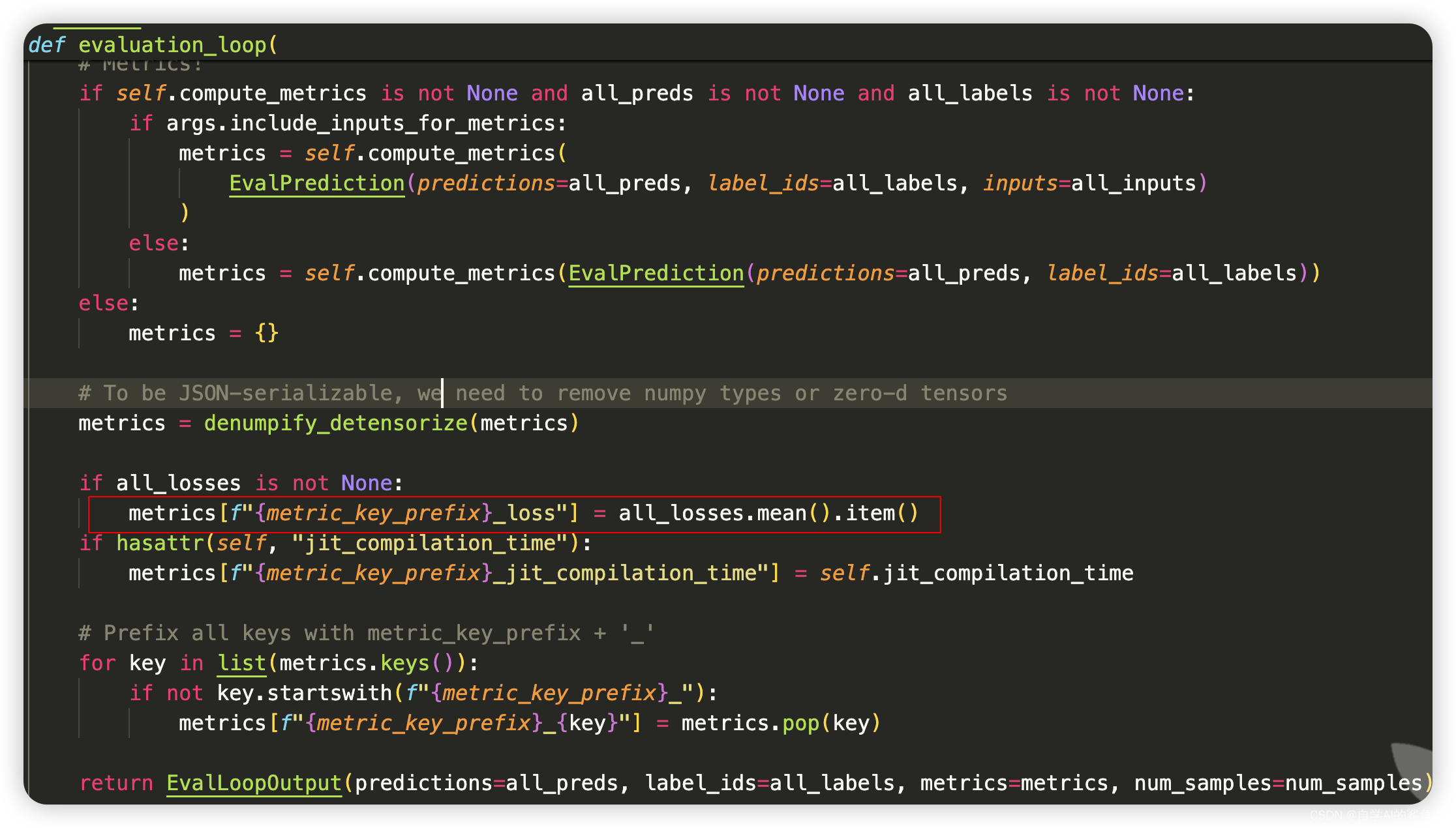

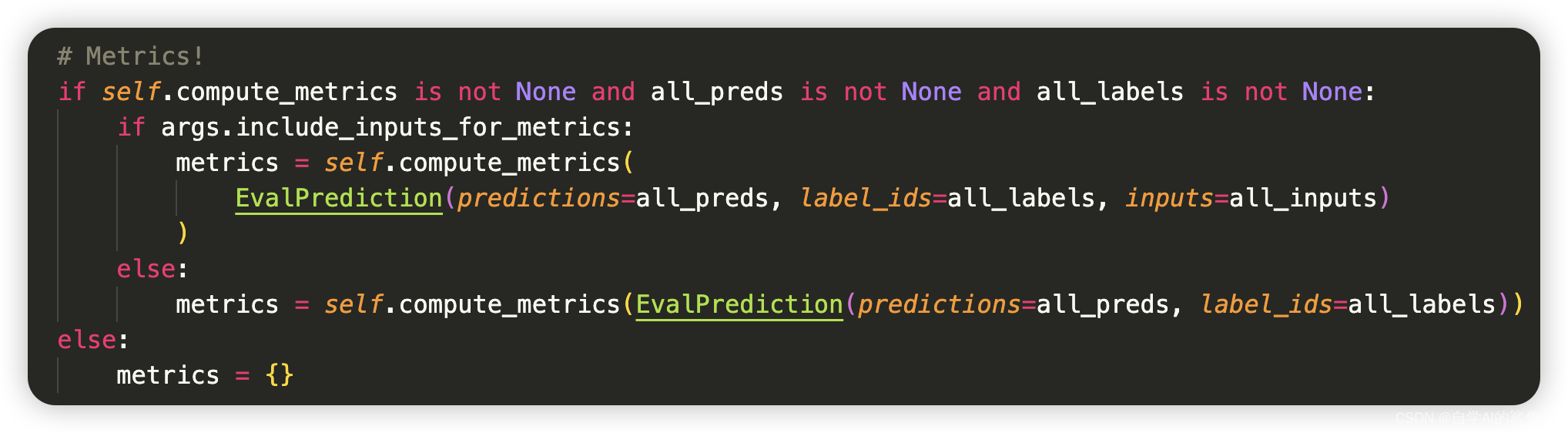

save_strategy:定义了模型保存的策略,可以是"no"(不保存模型),"epoch"(每个epoch结束时保存),或"steps"(每指定步数后保存)。save_total_limit:指定同时保存的模型检查点的最大数量。如果设置了这个参数,当保存新的检查点时,超出这个数量限制的最旧的检查点将被删除。evaluation_strategy:定义了评估的策略,与save_strategy类似,可以是"no","epoch"或"steps"。load_best_model_at_end:如果设置为True,在训练结束时将加载评价指标最好的模型(需要同时设置evaluation_strategy)。metric_for_best_model:"eval_loss", 指定哪个评价指标用于评估最佳模型,一般用的是自定义的compute_metrics函数返回的字典的某个key。这个设置要求evaluation_strategy不为"no"。

- 需要注意的是,"eval_loss" 系统会自己算,无需自定义compute_metrics

- include_inputs_for_metrics=True True 表示调用compute_metrics会将输入input_ids 也进行导入,方便计算

- compute_metrics:自定义用于计算评估指标的函数

- 可以参考chatglm3的代码:https://github.com/THUDM/ChatGLM3/blob/main/finetune_demo/finetune_hf.py

这里有一个简单的参考示例:

1.2、使用trainer训练ds ZeRO3或fsdp时,怎么保存模型为huggingface格式呢?

transformers:4.39

新版trainer中存在函数

self.accelerator.get_state_dict,这个函数可以将ZeRO3切片在其他设备上的参数加载过来,然后使用self._save()保存,具体见下文_save_checkpoint、save_model、_save 函数

二、PreTrained Model中的from_pretrained常见的参数

transformers:4.39

- # from_pretrained 是一个类方法,用于从预训练模型中加载模型实例

- @classmethod

- def from_pretrained(

- cls,

- # pretrained_model_name_or_path: 预训练模型的名称或路径,可以是本地路径或在线路径

- pretrained_model_name_or_path: Optional[Union[str, os.PathLike]],

- # model_args: 模型的初始化参数,将传递给模型的构造函数

- *model_args,

- # config: 模型配置对象或其路径,如果未提供,将尝试从 pretrained_model_name_or_path 加载

- config: Optional[Union[PretrainedConfig, str, os.PathLike]] = None,

- # cache_dir: 用于缓存下载的模型文件的目录

- cache_dir: Optional[Union[str, os.PathLike]] = None,

- # ignore_mismatched_sizes: 是否忽略权重大小不匹配的情况

- ignore_mismatched_sizes: bool = False,

- # force_download: 是否强制重新下载模型权重

- force_download: bool = False,

- # local_files_only: 是否只使用本地文件,不尝试从远程下载

- local_files_only: bool = False,

- # token: Hugging Face Hub 的访问令牌,用于下载模型权重

- token: Optional[Union[str, bool]] = None,

- # revision: 要使用的模型修订版本

- revision: str = "main",

- # use_safetensors: 是否使用 safetensors 格式加载模型权重

- use_safetensors: bool = None,

- **kwargs,

- ):

- # 从 kwargs 中提取一些常用参数

- state_dict = kwargs.pop("state_dict", None)

- from_tf = kwargs.pop("from_tf", False)

- from_flax = kwargs.pop("from_flax", False)

- resume_download = kwargs.pop("resume_download", False)

- proxies = kwargs.pop("proxies", None)

- output_loading_info = kwargs.pop("output_loading_info", False)

- use_auth_token = kwargs.pop("use_auth_token", None)

- trust_remote_code = kwargs.pop("trust_remote_code", None) # 使用远端加载模型文件

- _ = kwargs.pop("mirror", None)

- from_pipeline = kwargs.pop("_from_pipeline", None)

- from_auto_class = kwargs.pop("_from_auto", False)

- _fast_init = kwargs.pop("_fast_init", True)

- torch_dtype = kwargs.pop("torch_dtype", None) # 模型加载的数据类型,type.bfloat16 等

- low_cpu_mem_usage = kwargs.pop("low_cpu_mem_usage", None) # 低占用

- device_map = kwargs.pop("device_map", None) # auto 的话 accelerate 会自动分配设备

- max_memory = kwargs.pop("max_memory", None)

- offload_folder = kwargs.pop("offload_folder", None)

- offload_state_dict = kwargs.pop("offload_state_dict", False)

- offload_buffers = kwargs.pop("offload_buffers", False)

- load_in_8bit = kwargs.pop("load_in_8bit", False)

- load_in_4bit = kwargs.pop("load_in_4bit", False) # 4bit加载(未来删除),现在使用 quantization_config 参数

- quantization_config = kwargs.pop("quantization_config", None) # 量化的参数

- subfolder = kwargs.pop("subfolder", "")

- commit_hash = kwargs.pop("_commit_hash", None)

- variant = kwargs.pop("variant", None)

- adapter_kwargs = kwargs.pop("adapter_kwargs", {})

- adapter_name = kwargs.pop("adapter_name", "default") # peft adapter_name, 默认即可

- use_flash_attention_2 = kwargs.pop("use_flash_attention_2", False) # 未来弃用,使用参数 attn_implementation 来控制,使用方法见下文

-

- # 如果启用了 FSDP,则强制启用 low_cpu_mem_usage

- if is_fsdp_enabled():

- low_cpu_mem_usage = True

-

- # 对于 use_auth_token 参数的处理,已弃用,建议使用 token 参数代替

- if use_auth_token is not None:

- warnings.warn(

- "The `use_auth_token` argument is deprecated and will be removed in v5 of Transformers. Please use `token` instead.",

- FutureWarning,

- )

- if token is not None:

- raise ValueError(

- "`token` and `use_auth_token` are both specified. Please set only the argument `token`."

- )

- token = use_auth_token

-

- # 如果提供了 token 和 adapter_kwargs,则将 token 添加到 adapter_kwargs 中

- if token is not None and adapter_kwargs is not None and "token" not in adapter_kwargs:

- adapter_kwargs["token"] = token

-

- # 处理 use_safetensors 参数

- if use_safetensors is None and not is_safetensors_available():

- use_safetensors = False

- if trust_remote_code is True:

- logger.warning(

- "The argument `trust_remote_code` is to be used with Auto classes. It has no effect here and is"

- " ignored."

- )

-

- # 尝试从预训练模型路径获取 commit_hash

- if commit_hash is None:

- if not isinstance(config, PretrainedConfig):

- resolved_config_file = cached_file(

- pretrained_model_name_or_path,

- CONFIG_NAME,

- cache_dir=cache_dir,

- force_download=force_download,

- resume_download=resume_download,

- proxies=proxies,

- local_files_only=local_files_only,

- token=token,

- revision=revision,

- subfolder=subfolder,

- _raise_exceptions_for_gated_repo=False,

- _raise_exceptions_for_missing_entries=False,

- _raise_exceptions_for_connection_errors=False,

- )

- commit_hash = extract_commit_hash(resolved_config_file, commit_hash)

- else:

- commit_hash = getattr(config, "_commit_hash", None)

-

- # 如果使用了 PEFT 并且可用,则尝试加载适配器配置文件

- if is_peft_available():

- _adapter_model_path = adapter_kwargs.pop("_adapter_model_path", None)

-

- if _adapter_model_path is None:

- _adapter_model_path = find_adapter_config_file(

- pretrained_model_name_or_path,

- cache_dir=cache_dir,

- force_download=force_download,

- resume_download=resume_download,

- proxies=proxies,

- local_files_only=local_files_only,

- _commit_hash=commit_hash,

- **adapter_kwargs,

- )

- if _adapter_model_path is not None and os.path.isfile(_adapter_model_path):

- with open(_adapter_model_path, "r", encoding="utf-8") as f:

- _adapter_model_path = pretrained_model_name_or_path

- pretrained_model_name_or_path = json.load(f)["base_model_name_or_path"]

- else:

- _adapter_model_path = None

-

- # 处理 device_map 参数,将其转换为适当的格式

- if isinstance(device_map, torch.device):

- device_map = {"": device_map}

- elif isinstance(device_map, str) and device_map not in ["auto", "balanced", "balanced_low_0", "sequential"]:

- try:

- device_map = {"": torch.device(device_map)}

- except RuntimeError:

- raise ValueError(

- "When passing device_map as a string, the value needs to be a device name (e.g. cpu, cuda:0) or "

- f"'auto', 'balanced', 'balanced_low_0', 'sequential' but found {device_map}."

- )

- elif isinstance(device_map, int):

- if device_map < 0:

- raise ValueError(

- "You can't pass device_map as a negative int. If you want to put the model on the cpu, pass device_map = 'cpu' "

- )

- else:

- device_map = {"": device_map}

-

- # 如果提供了 device_map,则强制启用 low_cpu_mem_usage

- if device_map is not None:

- if low_cpu_mem_usage is None:

- low_cpu_mem_usage = True

- elif not low_cpu_mem_usage:

- raise ValueError("Passing along a `device_map` requires `low_cpu_mem_usage=True`")

-

- # 如果启用了 low_cpu_mem_usage,但未安装 Accelerate,则引发异常

- if low_cpu_mem_usage:

- if is_deepspeed_zero3_enabled():

- raise ValueError(

- "DeepSpeed Zero-3 is not compatible with `low_cpu_mem_usage=True` or with passing a `device_map`."

- )

- elif not is_accelerate_available():

- raise ImportError(

- "Using `low_cpu_mem_usage=True` or a `device_map` requires Accelerate: `pip install accelerate`"

- )

-

- # 处理 quantization_config 参数

- if load_in_4bit or load_in_8bit:

- if quantization_config is not None:

- raise ValueError(

- "You can't pass `load_in_4bit`or `load_in_8bit` as a kwarg when passing "

- "`quantization_config` argument at the same time."

- )

-

- config_dict = {k: v for k, v in kwargs.items() if k in inspect.signature(BitsAndBytesConfig).parameters}

- config_dict = {**config_dict, "load_in_4bit": load_in_4bit, "load_in_8bit": load_in_8bit}

- quantization_config, kwargs = BitsAndBytesConfig.from_dict(

- config_dict=config_dict, return_unused_kwargs=True, **kwargs

- )

- logger.warning(

- "The `load_in_4bit` and `load_in_8bit` arguments are deprecated and will be removed in the future versions. "

- "Please, pass a `BitsAndBytesConfig` object in `quantization_config` argument instead."

- )

-

- from_pt = not (from_tf | from_flax)

-

- # 设置用户代理字符串,用于下载模型权重

- user_agent = {"file_type": "model", "framework": "pytorch", "from_auto_class": from_auto_class}

- if from_pipeline is not None:

- user_agent["using_pipeline"] = from_pipeline

-

- # 如果处于离线模式,则强制启用 local_files_only

- if is_offline_mode() and not local_files_only:

- logger.info("Offline mode: forcing local_files_only=True")

- local_files_only = True

-

- # 加载模型配置

- if not isinstance(config, PretrainedConfig):

- config_path = config if config is not None else pretrained_model_name_or_path

- config, model_kwargs = cls.config_class.from_pretrained(

- config_path,

- cache_dir=cache_dir,

- return_unused_kwargs=True,

- force_download=force_download,

- resume_download=resume_download,

- proxies=proxies,

- local_files_only=local_files_only,

- token=token,

- revision=revision,

- subfolder=subfolder,

- _from_auto=from_auto_class,

- _from_pipeline=from_pipeline,

- **kwargs,

- )

- else:

- # 如果直接提供了配置对象,则复制一份,以免修改原始配置

- config = copy.deepcopy(config)

-

- # 处理 attn_implementation 参数

- kwarg_attn_imp = kwargs.pop("attn_implementation", None)

- if kwarg_attn_imp is not None and config._attn_implementation != kwarg_attn_imp:

- config._attn_implementation = kwarg_attn_imp

- model_kwargs = kwargs

-

- # 处理量化相关配置

- pre_quantized = getattr(config, "quantization_config", None) is not None

- if pre_quantized or quantization_config is not None:

- if pre_quantized:

- config.quantization_config = AutoHfQuantizer.merge_quantization_configs(

- config.quantization_config, quantization_config

- )

- else:

- config.quantization_config = quantization_config

- hf_quantizer = AutoHfQuantizer.from_config(config.quantization_config, pre_quantized=pre_quantized)

- else:

- hf_quantizer = None

-

- # 如果启用了量化,则需要验证环境和调整一些参数

- if hf_quantizer is not None:

- hf_quantizer.validate_environment(

- torch_dtype=torch_dtype, from_tf=from_tf, from_flax=from_flax, device_map=device_map

- )

- torch_dtype = hf_quantizer.update_torch_dtype(torch_dtype)

- device_map = hf_quantizer.update_device_map(device_map)

-

- if low_cpu_mem_usage is None:

- low_cpu_mem_usage = True

- logger.warning("`low_cpu_mem_usage` was None, now set to True since model is quantized.")

- is_quantized = hf_quantizer is not None

-

- # 检查是否为分片加载检查点

- is_sharded = False

- sharded_metadata = None

-

- # 加载模型权重

- loading_info = None

- keep_in_fp32_modules = None

- use_keep_in_fp32_modules = False

-

- (......)

一些简单的加载案例

- attn_implementation 为新的参数, 使用类型有“spda”,“eager”(原生的attention),“flash attention”,需要注意的,attn_implementation = "eager",需要config 额外指定config._attn_implementation_internal = "eager" 才会生效,具体用法见下面例子:

- 为什么需要额外指定config._attn_implementation_internal = "eager",可以看源码PreTrainedModel中 ._autoset_attn_implementation 函数的实现过程:

transformers/src/transformers/modeling_utils.py at main · huggingface/transformers · GitHub

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/知新_RL/article/detail/835310

Copyright © 2003-2013 www.wpsshop.cn 版权所有,并保留所有权利。