- 1目标检测算法:anchor_free系列解读_anchorfree是什么意思

- 2rabbitMQ对优先级队列的使用_java设置rabbitmq队列优先级

- 3内网安全之-NTLM协议详解_ntlmssp

- 4ICCV 2023 | 刷新十三个暗光增强榜单!清华Ð提出Retinexformer:低光照图像增强新网络...

- 5Python类的构造方法__init__(self)和析构函数__del__详解_使用python定义一个类定义一个实力参数,需要在-init-方法中添加它,也可以使用构造

- 6Altium Designer实现原理图到PCB板的转换_ad如何将原理图转化为pcb

- 7Git系列:git restore 高效恢复代码的技巧与实践

- 8Transformer教程之循环神经网络(RNN)和长短期记忆网络(LSTM)

- 9【java】【maven】【基础】MAVEN安装配置介绍_maven配置文件

- 10ELK本地虚拟机部署(8.12版本)_logstash8.12 配置文件

Hive基础知识(九):Hive对数据库表的增删改查操作_hive数据库如何修改数据

赞

踩

1. 创建表

1)建表语法

- CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name #EXTERNAL:外部的

- [(col_name data_type [COMMENT col_comment],...)]

- [COMMENT table_comment]

- [PARTITIONED BY (col_name data_type [COMMENT col_comment],...)]#PARTITIONED BY:分区表

- [CLUSTERED BY (col_name, col_name,...)#CLUSTERED BY:分桶表

- [SORTED BY (col_name [ASC|DESC],...)] INTO num_buckets BUCKETS]#分桶表

- [ROW FORMAT row_format]#行格式

- [STORED AS file_format]#指定文件格式

- [LOCATION hdfs_path] #指定表的位置信息

- [TBLPROPERTIES (property_name=property_value,...)]#额外属性

- [AS select_statement] #

2)字段解释说明

(1)CREATE TABLE 创建一个指定名字的表。如果相同名字的表已经存在,则抛出异常;用户可以用 IF NOT EXISTS 选项来忽略这个异常。

(2)EXTERNAL 关键字可以让用户创建一个外部表,在建表的同时可以指定一个指向实际数据的路径(LOCATION),在删除表的时候,内部表的元数据和数据会被一起删除,而外部表只删除元数据,不删除数据。

(3)COMMENT:为表和列添加注释。

(4)PARTITIONED BY 创建分区表

(5)CLUSTERED BY 创建分桶表

(6)SORTED BY 不常用,对桶中的一个或多个列另外排序

(7)ROW FORMAT DELIMITED FIELDS TERMINATED BY charMAP KEYS TERMINATED BY char| SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value,...)]用户在建表的时候可以自定义 SerDe 或者使用自带的 SerDe。如果没有指定 ROW FORMAT 或者 ROW FORMAT DELIMITED,将会使用自带的 SerDe。在建表的时候,用户还需要为表指定列,用户在指定表的列的同时也会指定自定义的 SerDe,Hive 通过 SerDe 确定表的具体的列的数据。 SerDe 是 Serialize/Deserilize 的简称, hive 使用 Serde 进行行对象的序列与反序列化。

(8)STORED AS 指定存储文件类型常用的存储文件类型:SEQUENCEFILE(二进制序列文件)、TEXTFILE(文本)、RCFILE(列式存储格式文件)如果文件数据是纯文本,可以使用STORED AS TEXTFILE。如果数据需要压缩,使用 STORED AS SEQUENCEFILE。

(9)LOCATION :指定表在 HDFS 上的存储位置。

(10)AS:后跟查询语句,根据查询结果创建表。

(11)LIKE 允许用户复制现有的表结构,但是不复制数据。

1.1 管理表

1)理论

默认创建的表都是所谓的管理表,有时也被称为内部表。因为这种表,Hive 会(或多或少地)控制着数据的生命周期。Hive 默认情况下会将这些表的数据存储在由配置项hive.metastore.warehouse.dir(例如,/user/hive/warehouse)所定义的目录的子目录下。

当我们删除一个管理表时,Hive 也会删除这个表中数据。管理表不适合和其他工具共享数据。

2)案例实操

(0)原始数据

- 1001 ss1

- 1002 ss2

- 1003 ss3

- 1004 ss4

- 1005 ss5

- 1006 ss6

- 1007 ss7

- 1008 ss8

- 1009 ss9

- 1010 ss10

- 1011 ss11

- 1012 ss12

- 1013 ss13

- 1014 ss14

- 1015 ss15

- 1016 ss16

上传到hadoop

[root@hadoop100 ~]$ hadoop fs -put student.txt /user/hive/warehouse/student (1)普通创建表

- hive (hive3)> create table if not exists student(id int,name string)

- > row format delimited fields terminated by '\t'

- > stored as textfile

- > location '/user/hive/warehouse/student';

- OK

- Time taken: 1.417 seconds

(2)根据查询结果创建表(查询的结果会添加到新创建的表中)

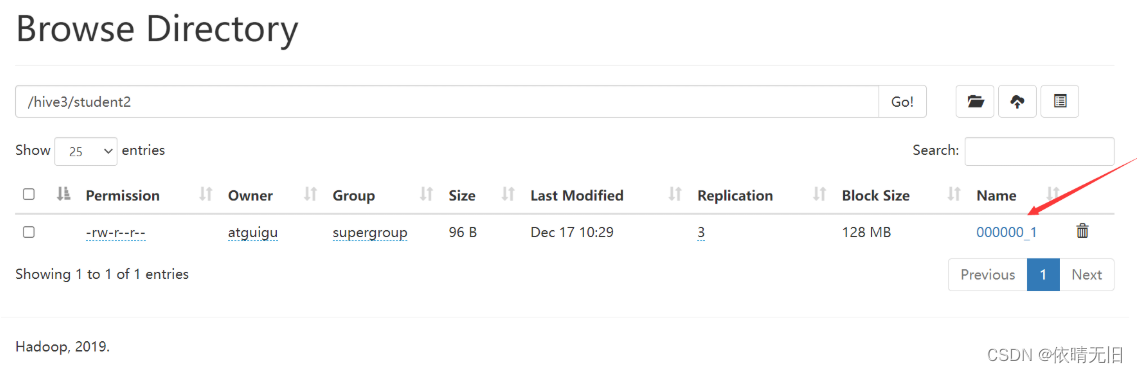

- hive (hive3)> create table if not exists student2 as select id,name from student;

- Query ID = atguigu_20211217102911_8a4f1a46-8e34-4a33-8f49-801c72a41aa7

- Total jobs = 3

- Launching Job 1 out of 3

- Number of reduce tasks is set to 0 since there's no reduce operator

- Starting Job = job_1639638039622_0002, Tracking URL = http://hadoop101:8088/proxy/application_1639638039622_0002/

- Kill Command = /opt/module/hadoop-3.1.3/bin/mapred job -kill job_1639638039622_0002

- Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

- 2021-12-17 10:29:37,602 Stage-1 map = 0%, reduce = 0%

- 2021-12-17 10:29:51,642 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.52 sec

- MapReduce Total cumulative CPU time: 1 seconds 520 msec

- Ended Job = job_1639638039622_0002

- Stage-4 is selected by condition resolver.

- Stage-3 is filtered out by condition resolver.

- Stage-5 is filtered out by condition resolver.

- Moving data to directory hdfs://hadoop100:8020/hive3/.hive-staging_hive_2021-12-17_10-29-11_615_1154320876271755629-1/-ext-10002

- Moving data to directory hdfs://hadoop100:8020/hive3/student2

- MapReduce Jobs Launched:

- Stage-Stage-1: Map: 1 Cumulative CPU: 1.52 sec HDFS Read: 4868 HDFS Write: 166 SUCCESS

- Total MapReduce CPU Time Spent: 1 seconds 520 msec

- OK

- id name

- Time taken: 42.57 seconds

(3)根据已经存在的表结构创建表

- hive (hive3)> create table if not exists student3 like student;

- OK

- Time taken: 0.31 seconds

(4)查询表的类型

- hive (hive3)> desc formatted student;

- OK

- col_name data_type comment

- # col_name data_type comment

- id int

- name string

-

- # Detailed Table Information

- Database: hive3

- OwnerType: USER

- Owner: atguigu

- CreateTime: Fri Dec 17 10:20:47 CST 2021

- LastAccessTime: UNKNOWN

- Retention: 0

- Location: hdfs://hadoop100:8020/user/hive/warehouse/student

- Table Type: MANAGED_TABLE

- Table Parameters:

- COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\",\"COLUMN_STATS\":{\"id\":\"true\",\"name\":\"true\"}}

- bucketing_version 2

- numFiles 0

- numRows 0

- rawDataSize 0

- totalSize 0

- transient_lastDdlTime 1639707647

-

- # Storage Information

- SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

- InputFormat: org.apache.hadoop.mapred.TextInputFormat

- OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

- Compressed: No

- Num Buckets: -1

- Bucket Columns: []

- Sort Columns: []

- Storage Desc Params:

- field.delim \t

- serialization.format \t

- Time taken: 0.305 seconds, Fetched: 33 row(s)

1.2 外部表

1)理论

因为表是外部表,所以 Hive 并非认为其完全拥有这份数据。删除该表并不会删除掉这份数据,不过描述表的元数据信息会被删除掉。

2)管理表和外部表的使用场景

每天将收集到的网站日志定期流入 HDFS 文本文件。在外部表(原始日志表)的基础上做大量的统计分析,用到的中间表、结果表使用内部表存储,数据通过 SELECT+INSERT 进入内部表。

3)案例实操

分别创建部门和员工外部表,并向表中导入数据。

(0)原始数据

- dept:

- 10 ACCOUNTING 1700

- 20 RESEARCH 1800

- 30 SALES 1900

- 40 OPERATIONS 1700

- emp:

- 7369 SMITH CLERK 7902 1980-12-17 800.00 20

- 7499 ALLEN SALESMAN 7698 1981-2-20 1600.00 300.00 30

- 7521 WARD SALESMAN 7698 1981-2-22 1250.00 500.00 30

- 7566 JONES MANAGER 7839 1981-4-2 2975.00 20

- 7654 MARTIN SALESMAN 7698 1981-9-28 1250.00 1400.00 30

- 7698 BLAKE MANAGER 7839 1981-5-1 2850.00 30

- 7782 CLARK MANAGER 7839 1981-6-9 2450.00 10

- 7788 SCOTT ANALYST 7566 1987-4-19 3000.00 20

- 7839 KING PRESIDENT 1981-11-17 5000.00 10

- 7844 TURNER SALESMAN 7698 1981-9-8 1500.00 0.00 30

- 7876 ADAMS CLERK 7788 1987-5-23 1100.00 20

- 7900 JAMES CLERK 7698 1981-12-3 950.00 30

- 7902 FORD ANALYST 7566 1981-12-3 3000.00 20

- 7934 MILLER CLERK 7782 1982-1-23 1300.00 10

(1)上传数据到 HDFS

- [root@hadoop100 ~]$ hadoop fs -put emp.txt /hive3/emp

- 2021-12-17 10:47:20,774 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

- [root@hadoop100 ~]$ hadoop fs -put dept /hive3/dept

- 2021-12-17 10:47:47,312 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

(2)建表语句,创建外部表

创建部门表

- hive (hive3)> create external table if not exists dept(

- deptno int,

- dname string,

- loc int

- )

- row format delimited fields terminated by '\t';

- OK

- Time taken: 0.148 seconds

创建员工表

- hive (hive3)> create external table if not exists emp(

- empno int,

- ename string,

- job string,

- mgr int,

- hiredate string,

- sal double,

- comm double,

- deptno int)

- row format delimited fields terminated by '\t';

- OK

- Time taken: 0.167 seconds

(3)查看创建的表

- hive (hive3)> show tables;

- OK

- tab_name

- dept

- emp

- student

- student2

- student3

- Time taken: 0.06 seconds, Fetched: 5 row(s)

(4)查看表格式化数据

hive (hive3)> desc formatted emp;(5)删除外部表

hive (default)> drop table dept;外部表删除后,hdfs 中的数据还在,但是 metadata 中 dept 的元数据已被删除。

1.3 管理表与外部表的互相转换

(1)查询表的类型

- hive (hive3)> desc formatted student2;

- Table Type: MANAGED_TABLE

(2)修改内部表 student2 为外部表

- hive (hive3)> alter table student2 set tblproperties('EXTERNAL'='TRUE');

- OK

- Time taken: 0.163 seconds

(3)查询表的类型

- hive (hive3)> desc formatted student2;

- Table Type: EXTERNAL_TABLE

(4)修改外部表 student2 为内部表

- hive (hive3)> alter table student2 set tblproperties('EXTERNAL'='FALSE');

- OK

- Time taken: 0.189 seconds

(5)查询表的类型

- hive (hive3)> desc formatted student2;

- Table Type: MANAGED_TABLE

注意:('EXTERNAL'='TRUE')和('EXTERNAL'='FALSE')为固定写法,区分大小写!

如果需要修改分隔符

ALTER TABLE location SET SERDEPROPERTIES ('field.delim'= '','serialization.format'='');2. 修改表

修改表的语法基本是 alter table name (你要对表的操作)

2.1 重命名表

1)语法

ALTER TABLE table_name RENAME TO new_table_name2)实操案例

hive (default)> alter table dept_partition2 rename to dept_partition3;2.2 增加/修改/替换列信息

1)语法

(1)更新列

- ALTER TABLE table_name CHANGE [COLUMN] col_old_name col_new_name

- column_type [COMMENT col_comment][FIRST|AFTER column_name]

(2)增加和替换列

ALTER TABLE table_name ADD|REPLACE COLUMNS (col_name data_type [COMMENT col_comment],...)注:ADD是代表新增一字段,字段位置在所有列后面(partition 列前),REPLACE则是表示替换表中所有字段。

2)实操案例

(1)查询表结构

hive> desc dept; (2)添加列

- hive (hive3)> alter table dept add columns(deptdesc string);

- OK

- Time taken: 0.359 seconds

(3)查询表结构

hive> desc dept;(4)更新列

- hive (hive3)> alter table dept change column deptdesc desc string;

- OK

- Time taken: 0.265 seconds

(5)查询表结构

hive> desc dept; (6)替换列

hive (default)> alter table dept replace columns(deptno string, dname string, loc string); (7)查询表结构

- hive (hive3)> desc dept;

- OK

- col_name data_type comment

- deptno string

- dname string

- loc string

- Time taken: 0.08 seconds, Fetched: 3 row(s)

3. 删除表

hive (default)> drop table dept;