Machine Learning | Training on the Kaggle Iris Dataset

Author: 知新_RL | 2024-03-31 16:18:35


Wenxuan Zeng 2020.10.3

1. Preparation: importing the machine learning libraries

# Import the machine learning libraries

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.preprocessing import LabelBinarizer

from sklearn import svm

from sklearn import model_selection

from sklearn import metrics

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

import numpy as np

# Suppress non-essential warnings

import warnings

warnings.filterwarnings("ignore")


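2. Data visualization and analysis

- This dataset is small (150 samples), has few features (4), and has simple labels (3 classes), and the task is plain classification. The visualization therefore does not need to dig into subtle relationships between features the way a Titanic or Crime_prediction analysis would. Plotting each pair of features as a scatter plot, with all three classes in the same axes, is enough to see the structure.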

# Load the iris dataset bundled with sklearn

from sklearn.datasets import load_iris

iris = load_iris()

print(iris.data)


[[5.1 3.5 1.4 0.2] [4.9 3. 1.4 0.2] [4.7 3.2 1.3 0.2] [4.6 3.1 1.5 0.2] [5. 3.6 1.4 0.2] [5.4 3.9 1.7 0.4] [4.6 3.4 1.4 0.3] [5. 3.4 1.5 0.2] [4.4 2.9 1.4 0.2] [4.9 3.1 1.5 0.1] [5.4 3.7 1.5 0.2] [4.8 3.4 1.6 0.2] [4.8 3. 1.4 0.1] [4.3 3. 1.1 0.1] [5.8 4. 1.2 0.2] [5.7 4.4 1.5 0.4] [5.4 3.9 1.3 0.4] [5.1 3.5 1.4 0.3] [5.7 3.8 1.7 0.3] [5.1 3.8 1.5 0.3] [5.4 3.4 1.7 0.2] [5.1 3.7 1.5 0.4] [4.6 3.6 1. 0.2] [5.1 3.3 1.7 0.5] [4.8 3.4 1.9 0.2] [5. 3. 1.6 0.2] [5. 3.4 1.6 0.4] [5.2 3.5 1.5 0.2] [5.2 3.4 1.4 0.2] [4.7 3.2 1.6 0.2] [4.8 3.1 1.6 0.2] [5.4 3.4 1.5 0.4] [5.2 4.1 1.5 0.1] [5.5 4.2 1.4 0.2] [4.9 3.1 1.5 0.2] [5. 3.2 1.2 0.2] [5.5 3.5 1.3 0.2] [4.9 3.6 1.4 0.1] [4.4 3. 1.3 0.2] [5.1 3.4 1.5 0.2] [5. 3.5 1.3 0.3] [4.5 2.3 1.3 0.3] [4.4 3.2 1.3 0.2] [5. 3.5 1.6 0.6] [5.1 3.8 1.9 0.4] [4.8 3. 1.4 0.3] [5.1 3.8 1.6 0.2] [4.6 3.2 1.4 0.2] [5.3 3.7 1.5 0.2] [5. 3.3 1.4 0.2] [7. 3.2 4.7 1.4] [6.4 3.2 4.5 1.5] [6.9 3.1 4.9 1.5] [5.5 2.3 4. 1.3] [6.5 2.8 4.6 1.5] [5.7 2.8 4.5 1.3] [6.3 3.3 4.7 1.6] [4.9 2.4 3.3 1. ] [6.6 2.9 4.6 1.3] [5.2 2.7 3.9 1.4] [5. 2. 3.5 1. ] [5.9 3. 4.2 1.5] [6. 2.2 4. 1. ] [6.1 2.9 4.7 1.4] [5.6 2.9 3.6 1.3] [6.7 3.1 4.4 1.4] [5.6 3. 4.5 1.5] [5.8 2.7 4.1 1. ] [6.2 2.2 4.5 1.5] [5.6 2.5 3.9 1.1] [5.9 3.2 4.8 1.8] [6.1 2.8 4. 1.3] [6.3 2.5 4.9 1.5] [6.1 2.8 4.7 1.2] [6.4 2.9 4.3 1.3] [6.6 3. 4.4 1.4] [6.8 2.8 4.8 1.4] [6.7 3. 5. 1.7] [6. 2.9 4.5 1.5] [5.7 2.6 3.5 1. ] [5.5 2.4 3.8 1.1] [5.5 2.4 3.7 1. ] [5.8 2.7 3.9 1.2] [6. 2.7 5.1 1.6] [5.4 3. 4.5 1.5] [6. 3.4 4.5 1.6] [6.7 3.1 4.7 1.5] [6.3 2.3 4.4 1.3] [5.6 3. 4.1 1.3] [5.5 2.5 4. 1.3] [5.5 2.6 4.4 1.2] [6.1 3. 4.6 1.4] [5.8 2.6 4. 1.2] [5. 2.3 3.3 1. ] [5.6 2.7 4.2 1.3] [5.7 3. 4.2 1.2] [5.7 2.9 4.2 1.3] [6.2 2.9 4.3 1.3] [5.1 2.5 3. 1.1] [5.7 2.8 4.1 1.3] [6.3 3.3 6. 2.5] [5.8 2.7 5.1 1.9] [7.1 3. 5.9 2.1] [6.3 2.9 5.6 1.8] [6.5 3. 5.8 2.2] [7.6 3. 6.6 2.1] [4.9 2.5 4.5 1.7] [7.3 2.9 6.3 1.8] [6.7 2.5 5.8 1.8] [7.2 3.6 6.1 2.5] [6.5 3.2 5.1 2. ] [6.4 2.7 5.3 1.9] [6.8 3. 
5.5 2.1] [5.7 2.5 5. 2. ] [5.8 2.8 5.1 2.4] [6.4 3.2 5.3 2.3] [6.5 3. 5.5 1.8] [7.7 3.8 6.7 2.2] [7.7 2.6 6.9 2.3] [6. 2.2 5. 1.5] [6.9 3.2 5.7 2.3] [5.6 2.8 4.9 2. ] [7.7 2.8 6.7 2. ] [6.3 2.7 4.9 1.8] [6.7 3.3 5.7 2.1] [7.2 3.2 6. 1.8] [6.2 2.8 4.8 1.8] [6.1 3. 4.9 1.8] [6.4 2.8 5.6 2.1] [7.2 3. 5.8 1.6] [7.4 2.8 6.1 1.9] [7.9 3.8 6.4 2. ] [6.4 2.8 5.6 2.2] [6.3 2.8 5.1 1.5] [6.1 2.6 5.6 1.4] [7.7 3. 6.1 2.3] [6.3 3.4 5.6 2.4] [6.4 3.1 5.5 1.8] [6. 3. 4.8 1.8] [6.9 3.1 5.4 2.1] [6.7 3.1 5.6 2.4] [6.9 3.1 5.1 2.3] [5.8 2.7 5.1 1.9] [6.8 3.2 5.9 2.3] [6.7 3.3 5.7 2.5] [6.7 3. 5.2 2.3] [6.3 2.5 5. 1.9] [6.5 3. 5.2 2. ] [6.2 3.4 5.4 2.3] [5.9 3. 5.1 1.8]]


# Three iris species, 50 samples each, 150 in total

print(iris.target)

print(len(iris.target))

# 150 samples in total, each with 4 features

print(iris.data.shape)


[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2]

150

(150, 4)


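- iris contains iris.data and iris.target. iris.data is a 150x4 matrix holding the four features (sepal length, sepal width, petal length, petal width). iris.target is a one-dimensional array of length 150, shape (150,), holding the label of each flower (class 0, 1, or 2). Each class has 50 flowers, stored as the first 50, middle 50, and last 50 rows of the dataset.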
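The layout described above can be checked directly. The following is a minimal sketch that relies only on attributes of the object returned by load_iris (feature_names, target_names). The variable names class_counts and is_sorted are introduced here for illustration and are not part of the original notebook.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()

# Column order of iris.data and the species name behind each integer label
print(iris.feature_names)
print(iris.target_names)

# iris.target is a 1-D array with one label per row of iris.data
print(iris.data.shape, iris.target.shape)

# Number of samples in each class
class_counts = np.bincount(iris.target)
print(class_counts)

# The labels are sorted, so rows 0-49, 50-99 and 100-149 are classes 0, 1 and 2
is_sorted = bool(np.all(np.diff(iris.target) >= 0))
print(is_sorted)
```

Because the rows are ordered by class, slicing with [:50], [50:100] and [100:] selects one species at a time, which is what the scatter plots in this section rely on.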

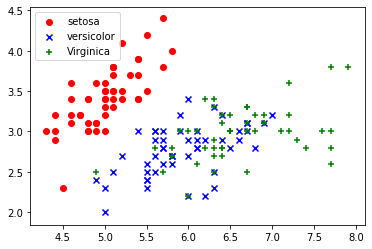

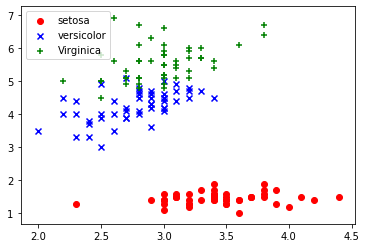

# Take the first and second feature columns (sepal features)
DD = iris.data
X = [x[0] for x in DD]
print(X)
Y = [x[1] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[5.1, 4.9, 4.7, 4.6, 5.0, 5.4, 4.6, 5.0, 4.4, 4.9, 5.4, 4.8, 4.8, 4.3, 5.8, 5.7, 5.4, 5.1, 5.7, 5.1, 5.4, 5.1, 4.6, 5.1, 4.8, 5.0, 5.0, 5.2, 5.2, 4.7, 4.8, 5.4, 5.2, 5.5, 4.9, 5.0, 5.5, 4.9, 4.4, 5.1, 5.0, 4.5, 4.4, 5.0, 5.1, 4.8, 5.1, 4.6, 5.3, 5.0, 7.0, 6.4, 6.9, 5.5, 6.5, 5.7, 6.3, 4.9, 6.6, 5.2, 5.0, 5.9, 6.0, 6.1, 5.6, 6.7, 5.6, 5.8, 6.2, 5.6, 5.9, 6.1, 6.3, 6.1, 6.4, 6.6, 6.8, 6.7, 6.0, 5.7, 5.5, 5.5, 5.8, 6.0, 5.4, 6.0, 6.7, 6.3, 5.6, 5.5, 5.5, 6.1, 5.8, 5.0, 5.6, 5.7, 5.7, 6.2, 5.1, 5.7, 6.3, 5.8, 7.1, 6.3, 6.5, 7.6, 4.9, 7.3, 6.7, 7.2, 6.5, 6.4, 6.8, 5.7, 5.8, 6.4, 6.5, 7.7, 7.7, 6.0, 6.9, 5.6, 7.7, 6.3, 6.7, 7.2, 6.2, 6.1, 6.4, 7.2, 7.4, 7.9, 6.4, 6.3, 6.1, 7.7, 6.3, 6.4, 6.0, 6.9, 6.7, 6.9, 5.8, 6.8, 6.7, 6.7, 6.3, 6.5, 6.2, 5.9]

[3.5, 3.0, 3.2, 3.1, 3.6, 3.9, 3.4, 3.4, 2.9, 3.1, 3.7, 3.4, 3.0, 3.0, 4.0, 4.4, 3.9, 3.5, 3.8, 3.8, 3.4, 3.7, 3.6, 3.3, 3.4, 3.0, 3.4, 3.5, 3.4, 3.2, 3.1, 3.4, 4.1, 4.2, 3.1, 3.2, 3.5, 3.6, 3.0, 3.4, 3.5, 2.3, 3.2, 3.5, 3.8, 3.0, 3.8, 3.2, 3.7, 3.3, 3.2, 3.2, 3.1, 2.3, 2.8, 2.8, 3.3, 2.4, 2.9, 2.7, 2.0, 3.0, 2.2, 2.9, 2.9, 3.1, 3.0, 2.7, 2.2, 2.5, 3.2, 2.8, 2.5, 2.8, 2.9, 3.0, 2.8, 3.0, 2.9, 2.6, 2.4, 2.4, 2.7, 2.7, 3.0, 3.4, 3.1, 2.3, 3.0, 2.5, 2.6, 3.0, 2.6, 2.3, 2.7, 3.0, 2.9, 2.9, 2.5, 2.8, 3.3, 2.7, 3.0, 2.9, 3.0, 3.0, 2.5, 2.9, 2.5, 3.6, 3.2, 2.7, 3.0, 2.5, 2.8, 3.2, 3.0, 3.8, 2.6, 2.2, 3.2, 2.8, 2.8, 2.7, 3.3, 3.2, 2.8, 3.0, 2.8, 3.0, 2.8, 3.8, 2.8, 2.8, 2.6, 3.0, 3.4, 3.1, 3.0, 3.1, 3.1, 3.1, 2.7, 3.2, 3.3, 3.0, 2.5, 3.0, 3.4, 3.0]


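- The problem with this pair of features: sepal length and sepal width do not effectively separate the blue (versicolor) points from the green (virginica) points, so these two features are only weakly related to the class label.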
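One rough way to put a number on this observation is to compare the value range of each feature across classes. The sketch below is an illustration, not part of the original analysis: the helpers class_range and ranges_overlap are hypothetical names, and range overlap is a crude criterion that only tells us whether a single threshold on one feature could separate two classes.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()
X, y = iris.data, iris.target

def class_range(feature_idx, class_idx):
    """Return (min, max) of one feature within one class."""
    values = X[y == class_idx, feature_idx]
    return values.min(), values.max()

def ranges_overlap(feature_idx, class_a, class_b):
    """True if the value ranges of two classes intersect on this feature."""
    lo_a, hi_a = class_range(feature_idx, class_a)
    lo_b, hi_b = class_range(feature_idx, class_b)
    return bool(lo_a <= hi_b and lo_b <= hi_a)

for idx, name in enumerate(iris.feature_names):
    print(name,
          "| setosa/versicolor overlap:", ranges_overlap(idx, 0, 1),
          "| versicolor/virginica overlap:", ranges_overlap(idx, 1, 2))
```

On the sepal features the versicolor and virginica ranges intersect, which matches the mixed blue and green points in the plot, while setosa and versicolor do not overlap at all on petal length.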

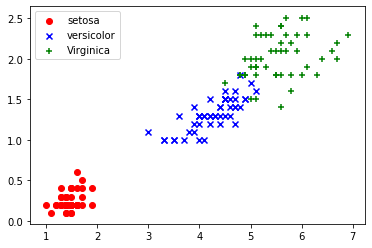

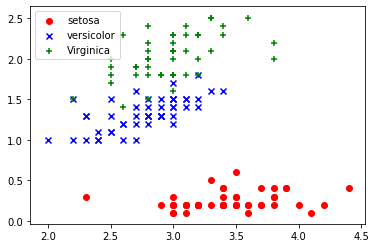

# Take the third and fourth feature columns (petal features)
DD = iris.data
X = [x[2] for x in DD]
print(X)
Y = [x[3] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[1.4, 1.4, 1.3, 1.5, 1.4, 1.7, 1.4, 1.5, 1.4, 1.5, 1.5, 1.6, 1.4, 1.1, 1.2, 1.5, 1.3, 1.4, 1.7, 1.5, 1.7, 1.5, 1.0, 1.7, 1.9, 1.6, 1.6, 1.5, 1.4, 1.6, 1.6, 1.5, 1.5, 1.4, 1.5, 1.2, 1.3, 1.4, 1.3, 1.5, 1.3, 1.3, 1.3, 1.6, 1.9, 1.4, 1.6, 1.4, 1.5, 1.4, 4.7, 4.5, 4.9, 4.0, 4.6, 4.5, 4.7, 3.3, 4.6, 3.9, 3.5, 4.2, 4.0, 4.7, 3.6, 4.4, 4.5, 4.1, 4.5, 3.9, 4.8, 4.0, 4.9, 4.7, 4.3, 4.4, 4.8, 5.0, 4.5, 3.5, 3.8, 3.7, 3.9, 5.1, 4.5, 4.5, 4.7, 4.4, 4.1, 4.0, 4.4, 4.6, 4.0, 3.3, 4.2, 4.2, 4.2, 4.3, 3.0, 4.1, 6.0, 5.1, 5.9, 5.6, 5.8, 6.6, 4.5, 6.3, 5.8, 6.1, 5.1, 5.3, 5.5, 5.0, 5.1, 5.3, 5.5, 6.7, 6.9, 5.0, 5.7, 4.9, 6.7, 4.9, 5.7, 6.0, 4.8, 4.9, 5.6, 5.8, 6.1, 6.4, 5.6, 5.1, 5.6, 6.1, 5.6, 5.5, 4.8, 5.4, 5.6, 5.1, 5.1, 5.9, 5.7, 5.2, 5.0, 5.2, 5.4, 5.1]

[0.2, 0.2, 0.2, 0.2, 0.2, 0.4, 0.3, 0.2, 0.2, 0.1, 0.2, 0.2, 0.1, 0.1, 0.2, 0.4, 0.4, 0.3, 0.3, 0.3, 0.2, 0.4, 0.2, 0.5, 0.2, 0.2, 0.4, 0.2, 0.2, 0.2, 0.2, 0.4, 0.1, 0.2, 0.2, 0.2, 0.2, 0.1, 0.2, 0.2, 0.3, 0.3, 0.2, 0.6, 0.4, 0.3, 0.2, 0.2, 0.2, 0.2, 1.4, 1.5, 1.5, 1.3, 1.5, 1.3, 1.6, 1.0, 1.3, 1.4, 1.0, 1.5, 1.0, 1.4, 1.3, 1.4, 1.5, 1.0, 1.5, 1.1, 1.8, 1.3, 1.5, 1.2, 1.3, 1.4, 1.4, 1.7, 1.5, 1.0, 1.1, 1.0, 1.2, 1.6, 1.5, 1.6, 1.5, 1.3, 1.3, 1.3, 1.2, 1.4, 1.2, 1.0, 1.3, 1.2, 1.3, 1.3, 1.1, 1.3, 2.5, 1.9, 2.1, 1.8, 2.2, 2.1, 1.7, 1.8, 1.8, 2.5, 2.0, 1.9, 2.1, 2.0, 2.4, 2.3, 1.8, 2.2, 2.3, 1.5, 2.3, 2.0, 2.0, 1.8, 2.1, 1.8, 1.8, 1.8, 2.1, 1.6, 1.9, 2.0, 2.2, 1.5, 1.4, 2.3, 2.4, 1.8, 1.8, 2.1, 2.4, 2.3, 1.9, 2.3, 2.5, 2.3, 1.9, 2.0, 2.3, 1.8]


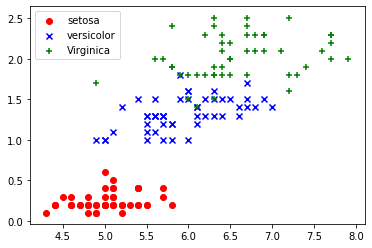

# Take the first and fourth feature columns (sepal length, petal width)
DD = iris.data
X = [x[0] for x in DD]
print(X)
Y = [x[3] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[5.1, 4.9, 4.7, 4.6, 5.0, 5.4, 4.6, 5.0, 4.4, 4.9, 5.4, 4.8, 4.8, 4.3, 5.8, 5.7, 5.4, 5.1, 5.7, 5.1, 5.4, 5.1, 4.6, 5.1, 4.8, 5.0, 5.0, 5.2, 5.2, 4.7, 4.8, 5.4, 5.2, 5.5, 4.9, 5.0, 5.5, 4.9, 4.4, 5.1, 5.0, 4.5, 4.4, 5.0, 5.1, 4.8, 5.1, 4.6, 5.3, 5.0, 7.0, 6.4, 6.9, 5.5, 6.5, 5.7, 6.3, 4.9, 6.6, 5.2, 5.0, 5.9, 6.0, 6.1, 5.6, 6.7, 5.6, 5.8, 6.2, 5.6, 5.9, 6.1, 6.3, 6.1, 6.4, 6.6, 6.8, 6.7, 6.0, 5.7, 5.5, 5.5, 5.8, 6.0, 5.4, 6.0, 6.7, 6.3, 5.6, 5.5, 5.5, 6.1, 5.8, 5.0, 5.6, 5.7, 5.7, 6.2, 5.1, 5.7, 6.3, 5.8, 7.1, 6.3, 6.5, 7.6, 4.9, 7.3, 6.7, 7.2, 6.5, 6.4, 6.8, 5.7, 5.8, 6.4, 6.5, 7.7, 7.7, 6.0, 6.9, 5.6, 7.7, 6.3, 6.7, 7.2, 6.2, 6.1, 6.4, 7.2, 7.4, 7.9, 6.4, 6.3, 6.1, 7.7, 6.3, 6.4, 6.0, 6.9, 6.7, 6.9, 5.8, 6.8, 6.7, 6.7, 6.3, 6.5, 6.2, 5.9]

[0.2, 0.2, 0.2, 0.2, 0.2, 0.4, 0.3, 0.2, 0.2, 0.1, 0.2, 0.2, 0.1, 0.1, 0.2, 0.4, 0.4, 0.3, 0.3, 0.3, 0.2, 0.4, 0.2, 0.5, 0.2, 0.2, 0.4, 0.2, 0.2, 0.2, 0.2, 0.4, 0.1, 0.2, 0.2, 0.2, 0.2, 0.1, 0.2, 0.2, 0.3, 0.3, 0.2, 0.6, 0.4, 0.3, 0.2, 0.2, 0.2, 0.2, 1.4, 1.5, 1.5, 1.3, 1.5, 1.3, 1.6, 1.0, 1.3, 1.4, 1.0, 1.5, 1.0, 1.4, 1.3, 1.4, 1.5, 1.0, 1.5, 1.1, 1.8, 1.3, 1.5, 1.2, 1.3, 1.4, 1.4, 1.7, 1.5, 1.0, 1.1, 1.0, 1.2, 1.6, 1.5, 1.6, 1.5, 1.3, 1.3, 1.3, 1.2, 1.4, 1.2, 1.0, 1.3, 1.2, 1.3, 1.3, 1.1, 1.3, 2.5, 1.9, 2.1, 1.8, 2.2, 2.1, 1.7, 1.8, 1.8, 2.5, 2.0, 1.9, 2.1, 2.0, 2.4, 2.3, 1.8, 2.2, 2.3, 1.5, 2.3, 2.0, 2.0, 1.8, 2.1, 1.8, 1.8, 1.8, 2.1, 1.6, 1.9, 2.0, 2.2, 1.5, 1.4, 2.3, 2.4, 1.8, 1.8, 2.1, 2.4, 2.3, 1.9, 2.3, 2.5, 2.3, 1.9, 2.0, 2.3, 1.8]


# Take the first and third feature columns (sepal length, petal length)
DD = iris.data
X = [x[0] for x in DD]
print(X)
Y = [x[2] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[5.1, 4.9, 4.7, 4.6, 5.0, 5.4, 4.6, 5.0, 4.4, 4.9, 5.4, 4.8, 4.8, 4.3, 5.8, 5.7, 5.4, 5.1, 5.7, 5.1, 5.4, 5.1, 4.6, 5.1, 4.8, 5.0, 5.0, 5.2, 5.2, 4.7, 4.8, 5.4, 5.2, 5.5, 4.9, 5.0, 5.5, 4.9, 4.4, 5.1, 5.0, 4.5, 4.4, 5.0, 5.1, 4.8, 5.1, 4.6, 5.3, 5.0, 7.0, 6.4, 6.9, 5.5, 6.5, 5.7, 6.3, 4.9, 6.6, 5.2, 5.0, 5.9, 6.0, 6.1, 5.6, 6.7, 5.6, 5.8, 6.2, 5.6, 5.9, 6.1, 6.3, 6.1, 6.4, 6.6, 6.8, 6.7, 6.0, 5.7, 5.5, 5.5, 5.8, 6.0, 5.4, 6.0, 6.7, 6.3, 5.6, 5.5, 5.5, 6.1, 5.8, 5.0, 5.6, 5.7, 5.7, 6.2, 5.1, 5.7, 6.3, 5.8, 7.1, 6.3, 6.5, 7.6, 4.9, 7.3, 6.7, 7.2, 6.5, 6.4, 6.8, 5.7, 5.8, 6.4, 6.5, 7.7, 7.7, 6.0, 6.9, 5.6, 7.7, 6.3, 6.7, 7.2, 6.2, 6.1, 6.4, 7.2, 7.4, 7.9, 6.4, 6.3, 6.1, 7.7, 6.3, 6.4, 6.0, 6.9, 6.7, 6.9, 5.8, 6.8, 6.7, 6.7, 6.3, 6.5, 6.2, 5.9]

[1.4, 1.4, 1.3, 1.5, 1.4, 1.7, 1.4, 1.5, 1.4, 1.5, 1.5, 1.6, 1.4, 1.1, 1.2, 1.5, 1.3, 1.4, 1.7, 1.5, 1.7, 1.5, 1.0, 1.7, 1.9, 1.6, 1.6, 1.5, 1.4, 1.6, 1.6, 1.5, 1.5, 1.4, 1.5, 1.2, 1.3, 1.4, 1.3, 1.5, 1.3, 1.3, 1.3, 1.6, 1.9, 1.4, 1.6, 1.4, 1.5, 1.4, 4.7, 4.5, 4.9, 4.0, 4.6, 4.5, 4.7, 3.3, 4.6, 3.9, 3.5, 4.2, 4.0, 4.7, 3.6, 4.4, 4.5, 4.1, 4.5, 3.9, 4.8, 4.0, 4.9, 4.7, 4.3, 4.4, 4.8, 5.0, 4.5, 3.5, 3.8, 3.7, 3.9, 5.1, 4.5, 4.5, 4.7, 4.4, 4.1, 4.0, 4.4, 4.6, 4.0, 3.3, 4.2, 4.2, 4.2, 4.3, 3.0, 4.1, 6.0, 5.1, 5.9, 5.6, 5.8, 6.6, 4.5, 6.3, 5.8, 6.1, 5.1, 5.3, 5.5, 5.0, 5.1, 5.3, 5.5, 6.7, 6.9, 5.0, 5.7, 4.9, 6.7, 4.9, 5.7, 6.0, 4.8, 4.9, 5.6, 5.8, 6.1, 6.4, 5.6, 5.1, 5.6, 6.1, 5.6, 5.5, 4.8, 5.4, 5.6, 5.1, 5.1, 5.9, 5.7, 5.2, 5.0, 5.2, 5.4, 5.1]


# Take the second and third feature columns (sepal width, petal length)
DD = iris.data
X = [x[1] for x in DD]
print(X)
Y = [x[2] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[3.5, 3.0, 3.2, 3.1, 3.6, 3.9, 3.4, 3.4, 2.9, 3.1, 3.7, 3.4, 3.0, 3.0, 4.0, 4.4, 3.9, 3.5, 3.8, 3.8, 3.4, 3.7, 3.6, 3.3, 3.4, 3.0, 3.4, 3.5, 3.4, 3.2, 3.1, 3.4, 4.1, 4.2, 3.1, 3.2, 3.5, 3.6, 3.0, 3.4, 3.5, 2.3, 3.2, 3.5, 3.8, 3.0, 3.8, 3.2, 3.7, 3.3, 3.2, 3.2, 3.1, 2.3, 2.8, 2.8, 3.3, 2.4, 2.9, 2.7, 2.0, 3.0, 2.2, 2.9, 2.9, 3.1, 3.0, 2.7, 2.2, 2.5, 3.2, 2.8, 2.5, 2.8, 2.9, 3.0, 2.8, 3.0, 2.9, 2.6, 2.4, 2.4, 2.7, 2.7, 3.0, 3.4, 3.1, 2.3, 3.0, 2.5, 2.6, 3.0, 2.6, 2.3, 2.7, 3.0, 2.9, 2.9, 2.5, 2.8, 3.3, 2.7, 3.0, 2.9, 3.0, 3.0, 2.5, 2.9, 2.5, 3.6, 3.2, 2.7, 3.0, 2.5, 2.8, 3.2, 3.0, 3.8, 2.6, 2.2, 3.2, 2.8, 2.8, 2.7, 3.3, 3.2, 2.8, 3.0, 2.8, 3.0, 2.8, 3.8, 2.8, 2.8, 2.6, 3.0, 3.4, 3.1, 3.0, 3.1, 3.1, 3.1, 2.7, 3.2, 3.3, 3.0, 2.5, 3.0, 3.4, 3.0]

[1.4, 1.4, 1.3, 1.5, 1.4, 1.7, 1.4, 1.5, 1.4, 1.5, 1.5, 1.6, 1.4, 1.1, 1.2, 1.5, 1.3, 1.4, 1.7, 1.5, 1.7, 1.5, 1.0, 1.7, 1.9, 1.6, 1.6, 1.5, 1.4, 1.6, 1.6, 1.5, 1.5, 1.4, 1.5, 1.2, 1.3, 1.4, 1.3, 1.5, 1.3, 1.3, 1.3, 1.6, 1.9, 1.4, 1.6, 1.4, 1.5, 1.4, 4.7, 4.5, 4.9, 4.0, 4.6, 4.5, 4.7, 3.3, 4.6, 3.9, 3.5, 4.2, 4.0, 4.7, 3.6, 4.4, 4.5, 4.1, 4.5, 3.9, 4.8, 4.0, 4.9, 4.7, 4.3, 4.4, 4.8, 5.0, 4.5, 3.5, 3.8, 3.7, 3.9, 5.1, 4.5, 4.5, 4.7, 4.4, 4.1, 4.0, 4.4, 4.6, 4.0, 3.3, 4.2, 4.2, 4.2, 4.3, 3.0, 4.1, 6.0, 5.1, 5.9, 5.6, 5.8, 6.6, 4.5, 6.3, 5.8, 6.1, 5.1, 5.3, 5.5, 5.0, 5.1, 5.3, 5.5, 6.7, 6.9, 5.0, 5.7, 4.9, 6.7, 4.9, 5.7, 6.0, 4.8, 4.9, 5.6, 5.8, 6.1, 6.4, 5.6, 5.1, 5.6, 6.1, 5.6, 5.5, 4.8, 5.4, 5.6, 5.1, 5.1, 5.9, 5.7, 5.2, 5.0, 5.2, 5.4, 5.1]


# Take the second and fourth feature columns (sepal width, petal width)
DD = iris.data
X = [x[1] for x in DD]
print(X)
Y = [x[3] for x in DD]
print(Y)
# plt.scatter(X, Y, c=iris.target, marker='x')
# Class 0: first 50 samples
plt.scatter(X[:50], Y[:50], color='red', marker='o', label='setosa')
# Class 1: middle 50 samples
plt.scatter(X[50:100], Y[50:100], color='blue', marker='x', label='versicolor')
# Class 2: last 50 samples
plt.scatter(X[100:], Y[100:], color='green', marker='+', label='Virginica')
# Legend
plt.legend(loc=2)  # upper left
plt.show()


[3.5, 3.0, 3.2, 3.1, 3.6, 3.9, 3.4, 3.4, 2.9, 3.1, 3.7, 3.4, 3.0, 3.0, 4.0, 4.4, 3.9, 3.5, 3.8, 3.8, 3.4, 3.7, 3.6, 3.3, 3.4, 3.0, 3.4, 3.5, 3.4, 3.2, 3.1, 3.4, 4.1, 4.2, 3.1, 3.2, 3.5, 3.6, 3.0, 3.4, 3.5, 2.3, 3.2, 3.5, 3.8, 3.0, 3.8, 3.2, 3.7, 3.3, 3.2, 3.2, 3.1, 2.3, 2.8, 2.8, 3.3, 2.4, 2.9, 2.7, 2.0, 3.0, 2.2, 2.9, 2.9, 3.1, 3.0, 2.7, 2.2, 2.5, 3.2, 2.8, 2.5, 2.8, 2.9, 3.0, 2.8, 3.0, 2.9, 2.6, 2.4, 2.4, 2.7, 2.7, 3.0, 3.4, 3.1, 2.3, 3.0, 2.5, 2.6, 3.0, 2.6, 2.3, 2.7, 3.0, 2.9, 2.9, 2.5, 2.8, 3.3, 2.7, 3.0, 2.9, 3.0, 3.0, 2.5, 2.9, 2.5, 3.6, 3.2, 2.7, 3.0, 2.5, 2.8, 3.2, 3.0, 3.8, 2.6, 2.2, 3.2, 2.8, 2.8, 2.7, 3.3, 3.2, 2.8, 3.0, 2.8, 3.0, 2.8, 3.8, 2.8, 2.8, 2.6, 3.0, 3.4, 3.1, 3.0, 3.1, 3.1, 3.1, 2.7, 3.2, 3.3, 3.0, 2.5, 3.0, 3.4, 3.0]

[0.2, 0.2, 0.2, 0.2, 0.2, 0.4, 0.3, 0.2, 0.2, 0.1, 0.2, 0.2, 0.1, 0.1, 0.2, 0.4, 0.4, 0.3, 0.3, 0.3, 0.2, 0.4, 0.2, 0.5, 0.2, 0.2, 0.4, 0.2, 0.2, 0.2, 0.2, 0.4, 0.1, 0.2, 0.2, 0.2, 0.2, 0.1, 0.2, 0.2, 0.3, 0.3, 0.2, 0.6, 0.4, 0.3, 0.2, 0.2, 0.2, 0.2, 1.4, 1.5, 1.5, 1.3, 1.5, 1.3, 1.6, 1.0, 1.3, 1.4, 1.0, 1.5, 1.0, 1.4, 1.3, 1.4, 1.5, 1.0, 1.5, 1.1, 1.8, 1.3, 1.5, 1.2, 1.3, 1.4, 1.4, 1.7, 1.5, 1.0, 1.1, 1.0, 1.2, 1.6, 1.5, 1.6, 1.5, 1.3, 1.3, 1.3, 1.2, 1.4, 1.2, 1.0, 1.3, 1.2, 1.3, 1.3, 1.1, 1.3, 2.5, 1.9, 2.1, 1.8, 2.2, 2.1, 1.7, 1.8, 1.8, 2.5, 2.0, 1.9, 2.1, 2.0, 2.4, 2.3, 1.8, 2.2, 2.3, 1.5, 2.3, 2.0, 2.0, 1.8, 2.1, 1.8, 1.8, 1.8, 2.1, 1.6, 1.9, 2.0, 2.2, 1.5, 1.4, 2.3, 2.4, 1.8, 1.8, 2.1, 2.4, 2.3, 1.9, 2.3, 2.5, 2.3, 1.9, 2.0, 2.3, 1.8]


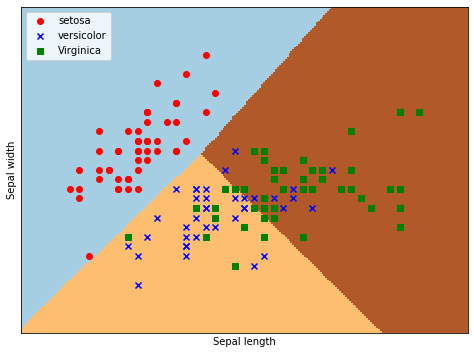
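The six scatter plots in this section differ only in which two columns they use, so they can also be produced by one loop over all feature pairs. The sketch below is one possible way to do that. The function name plot_feature_pairs and the 2x3 subplot grid are choices made here for illustration (the grid assumes exactly four features, hence six pairs), and boolean masks on the label array replace the fixed [:50], [50:100], [100:] slices.

```python
from itertools import combinations

import matplotlib.pyplot as plt
from sklearn.datasets import load_iris

iris = load_iris()

def plot_feature_pairs(data, target, feature_names, target_names):
    """Draw one scatter plot per feature pair, coloured by class."""
    pairs = list(combinations(range(data.shape[1]), 2))  # 6 pairs for 4 features
    fig, axes = plt.subplots(2, 3, figsize=(15, 8))
    styles = [("red", "o"), ("blue", "x"), ("green", "+")]
    for ax, (i, j) in zip(axes.ravel(), pairs):
        for cls, (color, marker) in enumerate(styles):
            mask = target == cls  # rows that belong to this class
            ax.scatter(data[mask, i], data[mask, j],
                       color=color, marker=marker, label=target_names[cls])
        ax.set_xlabel(feature_names[i])
        ax.set_ylabel(feature_names[j])
    axes[0, 0].legend(loc=2)  # upper left, as in the individual plots
    fig.tight_layout()
    return fig

fig = plot_feature_pairs(iris.data, iris.target,
                         iris.feature_names, iris.target_names)
# In a script, call plt.show() here to display the figure.
```

Selecting rows by label rather than by position keeps the plot correct even if the dataset is shuffled first.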

3. Logistic regression classification results

3.1 Classifying on the sepal features

# Take the first two feature columns (sepal length and sepal width)
X = iris.data[:, 0:2]
Y = iris.target
# Logistic regression model
lr = LogisticRegression(C=1e5)
lr.fit(X, Y)
# meshgrid builds two coordinate grids that cover the plotting area
h = .02
x_min, x_max = X[:, 0].min() - .5, X[:, 0].max() + .5
y_min, y_max = X[:, 1].min() - .5, X[:, 1].max() + .5
xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
# Predict a class for every grid point; pcolormesh then draws the grids xx, yy with the predictions Z as coloured regions
Z = lr.predict(np.c_[xx.ravel(), yy.ravel()])
Z = Z.reshape(xx.shape)
plt.figure(1, figsize=(8,6))
plt.pcolormesh(xx, yy, Z, cmap=plt.cm.Paired)
# Scatter plot of the samples
plt.scatter(X[:50,0], X[:50,1], color='red', marker='o', label='setosa')
plt.scatter(X[50:100,0], X[50:100,1], color='blue', marker='x', label='versicolor')
plt.scatter(X[100:,0], X[100:,1], color='green', marker='s', label='Virginica')
plt.xlabel('Sepal length')
plt.ylabel('Sepal width')
plt.xlim(xx.min(), xx.max())
plt.ylim(yy.min(), yy.max())
plt.xticks(())
plt.yticks(())
plt.legend(loc=2)
plt.show()


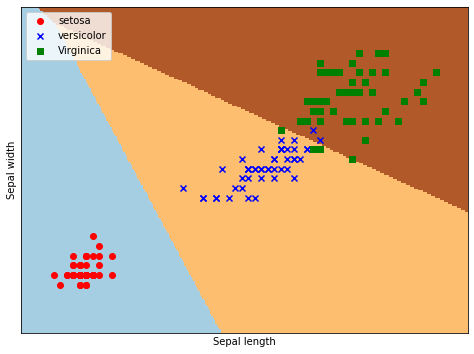

3.2 Classifying on the petal features

# Take the last two feature columns (petal length and petal width)
X = iris.data[:, 2:4]
Y = iris.target
# Logistic regression model
lr = LogisticRegression(C=1e5)
lr.fit(X, Y)
# meshgrid builds two coordinate grids that cover the plotting area
h = .02
x_min, x_max = X[:, 0].min() - .5, X[:, 0].max() + .5
y_min, y_max = X[:, 1].min() - .5, X[:, 1].max() + .5
xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
# Predict a class for every grid point; pcolormesh then draws the grids xx, yy with the predictions Z as coloured regions
Z = lr.predict(np.c_[xx.ravel(), yy.ravel()])
Z = Z.reshape(xx.shape)
plt.figure(1, figsize=(8,6))
plt.pcolormesh(xx, yy, Z, cmap=plt.cm.Paired)
# Scatter plot of the samples
plt.scatter(X[:50,0], X[:50,1], color='red', marker='o', label='setosa')
plt.scatter(X[50:100,0], X[50:100,1], color='blue', marker='x', label='versicolor')
plt.scatter(X[100:,0], X[100:,1], color='green', marker='s', label='Virginica')
# Axis labels corrected: this plot shows the petal features
plt.xlabel('Petal length')
plt.ylabel('Petal width')
plt.xlim(xx.min(), xx.max())
plt.ylim(yy.min(), yy.max())
plt.xticks(())
plt.yticks(())
plt.legend(loc=2)
plt.show()
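The decision-region plots give a visual impression of how well each feature pair separates the classes. A minimal sketch for turning that impression into a number is to fit the same model on both pairs and compare the accuracy on the data it was fitted on. The dictionary names feature_sets and train_accuracy are introduced here, and max_iter=1000 is an assumption added to help the solver converge. Note that this is training accuracy, not an estimate of performance on unseen data.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

iris = load_iris()
y = iris.target

feature_sets = {
    "sepal": iris.data[:, 0:2],  # sepal length, sepal width
    "petal": iris.data[:, 2:4],  # petal length, petal width
}

train_accuracy = {}
for name, X in feature_sets.items():
    # Same C as in the plots; max_iter is raised (an assumption) for convergence
    lr = LogisticRegression(C=1e5, max_iter=1000)
    lr.fit(X, y)
    # score() returns the mean accuracy on the data it is given
    train_accuracy[name] = lr.score(X, y)

print(train_accuracy)
```

A higher value for the petal pair would be consistent with the cleaner class regions in the petal plot.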


4. Splitting the dataset (training set + test set)

x=iris.data

y=iris.target

x_train,x_test,y_train,y_test=model_selection.train_test_split(x,y,random_state=101,test_size=0.3)

print("split_train_data 70%:", x_train.shape, "split_train_target 70%:",y_train.shape, "split_test_data 30%", x_test.shape, "split_test_target 30%",y_test.shape)


split_train_data 70%: (105, 4) split_train_target 70%: (105,) split_test_data 30% (45, 4) split_test_target 30% (45,)
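A plain random split does not guarantee that the three classes stay balanced in the 45 test samples. As a hedged variation on the split above (not what the original notebook does), train_test_split accepts a stratify argument that keeps the class proportions equal in both parts. The names train_counts and test_counts are introduced here.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()

# Same parameters as the split above, plus stratify so each class keeps its 1/3 share
x_train, x_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=101, stratify=iris.target
)

train_counts = np.bincount(y_train)
test_counts = np.bincount(y_test)
print("train class counts:", train_counts)
print("test class counts:", test_counts)
```

With 150 balanced samples and a 70/30 split, stratification gives 35 training and 15 test samples per class.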


5. Training the models and validating on the held-out set

5.1 Logistic regression

# Logistic Regression

model = LogisticRegression()

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the Logistic Regression is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the Logistic Regression is: 0.9555555555555556


5.2 Decision tree

# DecisionTreeClassifier

model=DecisionTreeClassifier()

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the DecisionTreeClassifier is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the DecisionTreeClassifier is: 0.9555555555555556


5.3 K-nearest neighbours

# K-Nearest Neighbours

model=KNeighborsClassifier(n_neighbors=3)

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the K-Nearest Neighbours is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the K-Nearest Neighbours is: 1.0


5.4 Support vector machine

# Support Vector Machine

model = svm.SVC()

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the SVM is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the SVM is: 1.0
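With only 45 test samples, one misclassified flower changes the accuracy by about 2.2 percentage points, so the ranking of the four models above depends heavily on the particular split. A common complement, shown here as a sketch rather than as part of the original experiment, is k-fold cross-validation with cross_val_score. The settings max_iter=1000 and random_state=0 are assumptions added for convergence and reproducibility.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()

models = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "K-Nearest Neighbours": KNeighborsClassifier(n_neighbors=3),
    "SVM": SVC(),
}

cv_means = {}
for name, model in models.items():
    # 5-fold cross-validation: every sample is used for testing exactly once
    scores = cross_val_score(model, iris.data, iris.target, cv=5)
    cv_means[name] = scores.mean()
    print("{0}: mean accuracy {1:.3f} (std {2:.3f})".format(
        name, scores.mean(), scores.std()))
```

The mean over folds is less sensitive to a lucky or unlucky split than a single held-out score, and the standard deviation shows how much the score moves between folds.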


6. Neural network

x=iris.data

y=iris.target

np.random.seed(seed=7)

y_Label=LabelBinarizer().fit_transform(y)

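# Note: train_test_split returns (train features, test features, train labels, test labels).
# The names on the next line are in a different order, so in this section y_train actually holds
# the test features and x_test holds the training labels. The fit, predict and evaluate calls
# later in this section pass the variables in the matching order, so the code still runs correctly.

x_train,y_train,x_test,y_test=model_selection.train_test_split(x,y_Label,test_size=0.3,random_state=42)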
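To make the encoding step concrete, the sketch below shows what LabelBinarizer produces for the iris labels and how the four arrays returned by train_test_split are ordered. The names y_onehot, feat_train, feat_test, lab_train, lab_test and recovered are chosen here for illustration and do not appear in the original notebook.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelBinarizer

iris = load_iris()

# One-hot encode the integer labels: 0 -> [1 0 0], 1 -> [0 1 0], 2 -> [0 0 1]
y_onehot = LabelBinarizer().fit_transform(iris.target)
print(y_onehot[[0, 50, 100]])

# train_test_split returns the pieces in this order:
# train features, test features, train labels, test labels
feat_train, feat_test, lab_train, lab_test = train_test_split(
    iris.data, y_onehot, test_size=0.3, random_state=42
)
print(feat_train.shape, feat_test.shape, lab_train.shape, lab_test.shape)

# argmax over the class axis recovers the original integer labels
recovered = np.argmax(y_onehot, axis=1)
print(bool((recovered == iris.target).all()))
```

The one-hot form is what a softmax output layer trained with categorical cross-entropy expects: one column per class.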


from keras.models import Sequential

from keras.layers import Dense

model = Sequential()  # Build the model

model.add(Dense(4,activation='relu',input_shape=(4,)))

model.add(Dense(6,activation='relu'))

model.add(Dense(3,activation='softmax'))

model.summary()


Model: "sequential_23"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_68 (Dense) (None, 4) 20

_________________________________________________________________

dense_69 (Dense) (None, 6) 30

_________________________________________________________________

dense_70 (Dense) (None, 3) 21

=================================================================

Total params: 71

Trainable params: 71

Non-trainable params: 0

_________________________________________________________________
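The parameter counts in the summary can be reproduced by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. The short sketch below redoes that arithmetic for the three layers. The helper dense_param_count is a name introduced here, not a Keras function.

```python
def dense_param_count(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# (inputs, units) for the three Dense layers in the summary above
layer_shapes = [(4, 4), (4, 6), (6, 3)]
per_layer = [dense_param_count(n_in, n_out) for n_in, n_out in layer_shapes]
total = sum(per_layer)

print(per_layer)  # [20, 30, 21]
print(total)      # 71
```

The three values match the Param # column (20, 30, 21) and the reported total of 71 trainable parameters.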


model.compile(loss='categorical_crossentropy',optimizer='rmsprop',metrics=['accuracy'])

step=25

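# Because of the name order used in the split earlier in this section, x_test holds the training labels
# and (y_train, y_test) is the held-out (features, labels) pair.

history=model.fit(x_train,x_test,validation_data=(y_train,y_test),batch_size=10,epochs=step)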

train_result=history.history


Train on 105 samples, validate on 45 samples
Epoch 1/25
105/105 [==============================] - 0s 2ms/step - loss: 0.1160 - accuracy: 0.9429 - val_loss: 0.0453 - val_accuracy: 1.0000
Epoch 2/25
105/105 [==============================] - 0s 123us/step - loss: 0.1121 - accuracy: 0.9524 - val_loss: 0.0486 - val_accuracy: 1.0000
Epoch 3/25
105/105 [==============================] - 0s 114us/step - loss: 0.1116 - accuracy: 0.9429 - val_loss: 0.0496 - val_accuracy: 1.0000
Epoch 4/25
105/105 [==============================] - 0s 114us/step - loss: 0.1129 - accuracy: 0.9524 - val_loss: 0.0479 - val_accuracy: 1.0000
Epoch 5/25
105/105 [==============================] - 0s 123us/step - loss: 0.1136 - accuracy: 0.9524 - val_loss: 0.0483 - val_accuracy: 1.0000
Epoch 6/25
105/105 [==============================] - 0s 114us/step - loss: 0.1112 - accuracy: 0.9524 - val_loss: 0.0518 - val_accuracy: 1.0000
Epoch 7/25
105/105 [==============================] - 0s 114us/step - loss: 0.1110 - accuracy: 0.9429 - val_loss: 0.0505 - val_accuracy: 1.0000
Epoch 8/25
105/105 [==============================] - 0s 123us/step - loss: 0.1099 - accuracy: 0.9524 - val_loss: 0.0467 - val_accuracy: 1.0000
Epoch 9/25
105/105 [==============================] - 0s 114us/step - loss: 0.1109 - accuracy: 0.9524 - val_loss: 0.0554 - val_accuracy: 0.9778
Epoch 10/25
105/105 [==============================] - 0s 123us/step - loss: 0.1097 - accuracy: 0.9524 - val_loss: 0.0535 - val_accuracy: 1.0000
Epoch 11/25
105/105 [==============================] - 0s 114us/step - loss: 0.1091 - accuracy: 0.9524 - val_loss: 0.0452 - val_accuracy: 1.0000
Epoch 12/25
105/105 [==============================] - 0s 123us/step - loss: 0.1040 - accuracy: 0.9619 - val_loss: 0.0742 - val_accuracy: 0.9778
Epoch 13/25
105/105 [==============================] - 0s 123us/step - loss: 0.1124 - accuracy: 0.9524 - val_loss: 0.0670 - val_accuracy: 0.9778
Epoch 14/25
105/105 [==============================] - 0s 133us/step - loss: 0.1110 - accuracy: 0.9429 - val_loss: 0.0746 - val_accuracy: 0.9778
Epoch 15/25
105/105 [==============================] - 0s 114us/step - loss: 0.1105 - accuracy: 0.9429 - val_loss: 0.0545 - val_accuracy: 0.9778
Epoch 16/25
105/105 [==============================] - 0s 123us/step - loss: 0.1077 - accuracy: 0.9524 - val_loss: 0.0519 - val_accuracy: 1.0000
Epoch 17/25
105/105 [==============================] - 0s 114us/step - loss: 0.1113 - accuracy: 0.9524 - val_loss: 0.0562 - val_accuracy: 0.9778
Epoch 18/25
105/105 [==============================] - 0s 123us/step - loss: 0.1085 - accuracy: 0.9524 - val_loss: 0.0491 - val_accuracy: 1.0000
Epoch 19/25
105/105 [==============================] - 0s 123us/step - loss: 0.1101 - accuracy: 0.9524 - val_loss: 0.0548 - val_accuracy: 0.9778
Epoch 20/25
105/105 [==============================] - 0s 114us/step - loss: 0.1060 - accuracy: 0.9429 - val_loss: 0.0451 - val_accuracy: 1.0000
Epoch 21/25
105/105 [==============================] - 0s 123us/step - loss: 0.1128 - accuracy: 0.9524 - val_loss: 0.0450 - val_accuracy: 1.0000
Epoch 22/25
105/105 [==============================] - 0s 123us/step - loss: 0.1086 - accuracy: 0.9524 - val_loss: 0.0534 - val_accuracy: 0.9778
Epoch 23/25
105/105 [==============================] - 0s 123us/step - loss: 0.1065 - accuracy: 0.9524 - val_loss: 0.0455 - val_accuracy: 1.0000
Epoch 24/25
105/105 [==============================] - 0s 114us/step - loss: 0.1062 - accuracy: 0.9524 - val_loss: 0.0434 - val_accuracy: 1.0000
Epoch 25/25
105/105 [==============================] - 0s 123us/step - loss: 0.1081 - accuracy: 0.9524 - val_loss: 0.0423 - val_accuracy: 1.0000


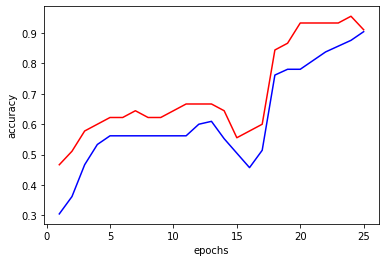

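- A fully connected Keras network reaches 100% validation accuracy within a small number of epochs in the log above, which makes it a reasonable choice for the iris dataset. Note that the training loss is already about 0.11 at epoch 1 of this log, which suggests the cell was re-run on a model that had already been trained, so the log alone understates how many epochs a freshly initialized model needs.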

# Read the accuracy curves from the training history

acc=train_result['accuracy']

val_acc=train_result['val_accuracy']

epochs=range(1,step+1)

plt.plot(epochs,acc,'b-')

plt.plot(epochs,val_acc,'r')

plt.xlabel('epochs')

plt.ylabel('accuracy')

plt.show()

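# y_train holds the held-out features and y_test the held-out labels (see the split earlier in this section)

t=model.predict(y_train)

resultsss=model.evaluate(y_train,y_test)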

resultsss


45/45 [==============================] - 0s 44us/step

[0.5691331187884013, 0.9111111164093018]
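The two numbers returned by evaluate are the loss and the accuracy on the held-out samples. The accuracy can also be derived from the raw softmax outputs by taking the most probable class per row. The sketch below illustrates this with NumPy only. The arrays probs and onehot_true are made-up example values, not outputs of the model in this article.

```python
import numpy as np

# Hypothetical softmax outputs for four samples (each row sums to 1); in the
# article these would come from model.predict on the held-out features.
probs = np.array([
    [0.90, 0.08, 0.02],
    [0.10, 0.70, 0.20],
    [0.05, 0.40, 0.55],
    [0.20, 0.45, 0.35],
])

# Hypothetical one-hot ground truth for the same four samples
onehot_true = np.array([
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 1],
])

pred_labels = np.argmax(probs, axis=1)        # most probable class per row
true_labels = np.argmax(onehot_true, axis=1)  # undo the one-hot encoding
accuracy = float(np.mean(pred_labels == true_labels))

print(pred_labels)  # [0 1 2 1]
print(true_labels)  # [0 1 2 2]
print(accuracy)     # 0.75
```

Applying the same two argmax calls to the predictions and to the one-hot test labels should give the accuracy figure that evaluate reports.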


7. Training on the sepal and petal features separately (with labels)

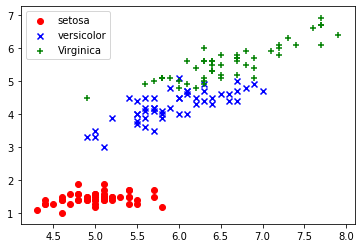

7.1 Training on the sepal features

# Take the first and second feature columns (sepal features)

DD = iris.data

x=DD[ :,0:2]

print(x)

y=iris.target


[[5.1 3.5] [4.9 3. ] [4.7 3.2] [4.6 3.1] [5. 3.6] [5.4 3.9] [4.6 3.4] [5. 3.4] [4.4 2.9] [4.9 3.1] [5.4 3.7] [4.8 3.4] [4.8 3. ] [4.3 3. ] [5.8 4. ] [5.7 4.4] [5.4 3.9] [5.1 3.5] [5.7 3.8] [5.1 3.8] [5.4 3.4] [5.1 3.7] [4.6 3.6] [5.1 3.3] [4.8 3.4] [5. 3. ] [5. 3.4] [5.2 3.5] [5.2 3.4] [4.7 3.2] [4.8 3.1] [5.4 3.4] [5.2 4.1] [5.5 4.2] [4.9 3.1] [5. 3.2] [5.5 3.5] [4.9 3.6] [4.4 3. ] [5.1 3.4] [5. 3.5] [4.5 2.3] [4.4 3.2] [5. 3.5] [5.1 3.8] [4.8 3. ] [5.1 3.8] [4.6 3.2] [5.3 3.7] [5. 3.3] [7. 3.2] [6.4 3.2] [6.9 3.1] [5.5 2.3] [6.5 2.8] [5.7 2.8] [6.3 3.3] [4.9 2.4] [6.6 2.9] [5.2 2.7] [5. 2. ] [5.9 3. ] [6. 2.2] [6.1 2.9] [5.6 2.9] [6.7 3.1] [5.6 3. ] [5.8 2.7] [6.2 2.2] [5.6 2.5] [5.9 3.2] [6.1 2.8] [6.3 2.5] [6.1 2.8] [6.4 2.9] [6.6 3. ] [6.8 2.8] [6.7 3. ] [6. 2.9] [5.7 2.6] [5.5 2.4] [5.5 2.4] [5.8 2.7] [6. 2.7] [5.4 3. ] [6. 3.4] [6.7 3.1] [6.3 2.3] [5.6 3. ] [5.5 2.5] [5.5 2.6] [6.1 3. ] [5.8 2.6] [5. 2.3] [5.6 2.7] [5.7 3. ] [5.7 2.9] [6.2 2.9] [5.1 2.5] [5.7 2.8] [6.3 3.3] [5.8 2.7] [7.1 3. ] [6.3 2.9] [6.5 3. ] [7.6 3. ] [4.9 2.5] [7.3 2.9] [6.7 2.5] [7.2 3.6] [6.5 3.2] [6.4 2.7] [6.8 3. ] [5.7 2.5] [5.8 2.8] [6.4 3.2] [6.5 3. ] [7.7 3.8] [7.7 2.6] [6. 2.2] [6.9 3.2] [5.6 2.8] [7.7 2.8] [6.3 2.7] [6.7 3.3] [7.2 3.2] [6.2 2.8] [6.1 3. ] [6.4 2.8] [7.2 3. ] [7.4 2.8] [7.9 3.8] [6.4 2.8] [6.3 2.8] [6.1 2.6] [7.7 3. ] [6.3 3.4] [6.4 3.1] [6. 3. ] [6.9 3.1] [6.7 3.1] [6.9 3.1] [5.8 2.7] [6.8 3.2] [6.7 3.3] [6.7 3. ] [6.3 2.5] [6.5 3. ] [6.2 3.4] [5.9 3. ]]


x_train,x_test,y_train,y_test=model_selection.train_test_split(x,y,random_state=101,test_size=0.3)

print("split_train_data 70%:", x_train.shape, "split_train_target 70%:",y_train.shape, "split_test_data 30%", x_test.shape, "split_test_target 30%",y_test.shape)


split_train_data 70%: (105, 2) split_train_target 70%: (105,) split_test_data 30% (45, 2) split_test_target 30% (45,)


# K-Nearest Neighbours

model=KNeighborsClassifier(n_neighbors=3)

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the K-Nearest Neighbours is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the K-Nearest Neighbours is: 0.6444444444444445


7.2 Training on the petal features

DD = iris.data

x=DD[ :,2:4]

print(x)


[[1.4 0.2] [1.4 0.2] [1.3 0.2] [1.5 0.2] [1.4 0.2] [1.7 0.4] [1.4 0.3] [1.5 0.2] [1.4 0.2] [1.5 0.1] [1.5 0.2] [1.6 0.2] [1.4 0.1] [1.1 0.1] [1.2 0.2] [1.5 0.4] [1.3 0.4] [1.4 0.3] [1.7 0.3] [1.5 0.3] [1.7 0.2] [1.5 0.4] [1. 0.2] [1.7 0.5] [1.9 0.2] [1.6 0.2] [1.6 0.4] [1.5 0.2] [1.4 0.2] [1.6 0.2] [1.6 0.2] [1.5 0.4] [1.5 0.1] [1.4 0.2] [1.5 0.2] [1.2 0.2] [1.3 0.2] [1.4 0.1] [1.3 0.2] [1.5 0.2] [1.3 0.3] [1.3 0.3] [1.3 0.2] [1.6 0.6] [1.9 0.4] [1.4 0.3] [1.6 0.2] [1.4 0.2] [1.5 0.2] [1.4 0.2] [4.7 1.4] [4.5 1.5] [4.9 1.5] [4. 1.3] [4.6 1.5] [4.5 1.3] [4.7 1.6] [3.3 1. ] [4.6 1.3] [3.9 1.4] [3.5 1. ] [4.2 1.5] [4. 1. ] [4.7 1.4] [3.6 1.3] [4.4 1.4] [4.5 1.5] [4.1 1. ] [4.5 1.5] [3.9 1.1] [4.8 1.8] [4. 1.3] [4.9 1.5] [4.7 1.2] [4.3 1.3] [4.4 1.4] [4.8 1.4] [5. 1.7] [4.5 1.5] [3.5 1. ] [3.8 1.1] [3.7 1. ] [3.9 1.2] [5.1 1.6] [4.5 1.5] [4.5 1.6] [4.7 1.5] [4.4 1.3] [4.1 1.3] [4. 1.3] [4.4 1.2] [4.6 1.4] [4. 1.2] [3.3 1. ] [4.2 1.3] [4.2 1.2] [4.2 1.3] [4.3 1.3] [3. 1.1] [4.1 1.3] [6. 2.5] [5.1 1.9] [5.9 2.1] [5.6 1.8] [5.8 2.2] [6.6 2.1] [4.5 1.7] [6.3 1.8] [5.8 1.8] [6.1 2.5] [5.1 2. ] [5.3 1.9] [5.5 2.1] [5. 2. ] [5.1 2.4] [5.3 2.3] [5.5 1.8] [6.7 2.2] [6.9 2.3] [5. 1.5] [5.7 2.3] [4.9 2. ] [6.7 2. ] [4.9 1.8] [5.7 2.1] [6. 1.8] [4.8 1.8] [4.9 1.8] [5.6 2.1] [5.8 1.6] [6.1 1.9] [6.4 2. ] [5.6 2.2] [5.1 1.5] [5.6 1.4] [6.1 2.3] [5.6 2.4] [5.5 1.8] [4.8 1.8] [5.4 2.1] [5.6 2.4] [5.1 2.3] [5.1 1.9] [5.9 2.3] [5.7 2.5] [5.2 2.3] [5. 1.9] [5.2 2. ] [5.4 2.3] [5.1 1.8]]


x_train,x_test,y_train,y_test=model_selection.train_test_split(x,y,random_state=101,test_size=0.3)

print("split_train_data 70%:", x_train.shape, "split_train_target 70%:",y_train.shape, "split_test_data 30%", x_test.shape, "split_test_target 30%",y_test.shape)


split_train_data 70%: (105, 2) split_train_target 70%: (105,) split_test_data 30% (45, 2) split_test_target 30% (45,)


# K-Nearest Neighbours

model=KNeighborsClassifier(n_neighbors=3)

model.fit(x_train, y_train)

prediction=model.predict(x_test)

print('The accuracy of the K-Nearest Neighbours is: {0}'.format(metrics.accuracy_score(prediction,y_test)))


The accuracy of the K-Nearest Neighbours is: 0.9777777777777777


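- With the same KNN model, which scored very well on the full feature set, training on the sepal features and on the petal features gives clearly different test accuracy. The sepal features are only weakly related to the label (0.644), the petal features strongly (0.978), and using all four features together gives the highest accuracy, 100% on this test split.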
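The comparison in this section can be collected into one loop so that the three feature sets go through exactly the same split and model settings. This is a sketch that mirrors the parameters used above (random_state=101, test_size=0.3, n_neighbors=3). The dictionary names feature_sets and results are introduced here, and the exact scores may vary with the installed library version.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
y = iris.target

feature_sets = {
    "sepal": iris.data[:, 0:2],
    "petal": iris.data[:, 2:4],
    "all": iris.data,
}

results = {}
for name, x in feature_sets.items():
    # Same split parameters as in the article, so all runs share the same test rows
    x_train, x_test, y_train, y_test = train_test_split(
        x, y, random_state=101, test_size=0.3
    )
    model = KNeighborsClassifier(n_neighbors=3)
    model.fit(x_train, y_train)
    results[name] = accuracy_score(y_test, model.predict(x_test))

for name, acc in results.items():
    print("{0} features: {1:.4f}".format(name, acc))
```

Keeping the split and the classifier fixed means that any difference between the three scores comes from the choice of features alone.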
