Installing Kubernetes (k8s) 1.30 on Ubuntu 24.04


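1. Update the apt package index and install the packages needed to use the Kubernetes apt repository:

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl gpg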

2. Download the public signing key for the Kubernetes package repositories. All repositories use the same signing key, so you can ignore the version in the URL:

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

3. Add the Kubernetes apt repository.

# This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list.

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
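Each Kubernetes minor version has its own repository under pkgs.k8s.io, so the only part of the line above that changes between versions is the v1.30 segment. A minimal sketch of that pattern follows; the function name k8s_repo_line is hypothetical and not part of any Kubernetes tooling.

```shell
# Hypothetical helper (not part of Kubernetes): print the apt source line
# for a given Kubernetes minor version, e.g. v1.30.
k8s_repo_line() {
    minor="$1"
    keyring="/etc/apt/keyrings/kubernetes-apt-keyring.gpg"
    printf 'deb [signed-by=%s] https://pkgs.k8s.io/core:/stable:/%s/deb/ /\n' "$keyring" "$minor"
}
```

It could be used as k8s_repo_line v1.30 | sudo tee /etc/apt/sources.list.d/kubernetes.list, which writes the same line as the echo command in step 3.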

4. Update the apt package index, install kubelet, kubeadm and kubectl, and pin their versions:

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

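5. Turn off swap. With swap on, the kubelet refuses to start by default, so Kubernetes will not come up. This setting lasts only until the next reboot.

sudo swapoff -a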
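Because swapoff -a does not survive a reboot, the swap entry in /etc/fstab usually has to be commented out as well. The sketch below only previews that change: it prints the file with swap entries commented out and does not modify anything. The function name comment_out_swap is hypothetical, and it assumes the standard fstab layout in which the third field is the filesystem type.

```shell
# Hypothetical helper: print an fstab-format file with every active swap
# entry (third field equal to "swap") commented out.
# The result goes to stdout; the input file itself is not changed.
comment_out_swap() {
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$1"
}
```

Running comment_out_swap /etc/fstab shows what the file would look like; after checking the result, the same edit can be made by hand with sudo vim /etc/fstab.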

6. Install containerd; skip this step if it is already installed.

sudo apt-get install -y containerd

# Start it

sudo systemctl start containerd

# Check its status (whether it is running)

sudo systemctl status containerd

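7. Check that Docker is running normally. Kubernetes 1.24 and later no longer talk to Docker directly, so this step and steps 7.1 and 7.2 only apply if Docker is installed on the machine.

sudo systemctl status docker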

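7.1 Edit the Docker configuration file

If the directory does not exist yet, create it:

sudo mkdir -p /etc/docker

Once it exists, edit the file:

sudo vim /etc/docker/daemon.json

Insert the following content, which changes Docker's default cgroup driver from cgroupfs to systemd:

{
  "exec-opts": ["native.cgroupdriver=systemd"]
}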

7.2 Restart Docker

sudo systemctl restart docker

# You can restart the kubelet as well

sudo systemctl restart kubelet

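8. Generate kubeadm's default YAML configuration file

sudo kubeadm config print init-defaults > init.default.yaml

# The redirect runs as your own user, not as root, so a permission error here means the current directory is not writable; grant read/write permission on it as follows

sudo chmod 777 <directory>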

9. Edit init.default.yaml

vim init.default.yaml

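9.1 Change the image repository in this file, then save and exit

Replace 1.2.3.4 with your own IP address. Also note the name under nodeRegistration: if it is master, for example,

run sudo vim /etc/hosts

and add the line: <your-IP> master

imageRepository: registry.aliyuncs.com/google_containers

advertiseAddress: 1.2.3.4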
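The two edits in step 9.1 can also be scripted instead of made in vim. The following is a minimal sketch under the assumption that advertiseAddress and imageRepository each appear on a line of their own, as they do in the output of kubeadm config print init-defaults. The function name patch_kubeadm_config is hypothetical, and the result is printed to stdout so the original file stays untouched.

```shell
# Hypothetical helper: print a kubeadm config with the advertiseAddress and
# imageRepository values replaced, keeping each line's indentation.
# Arguments: <config file> <IP address> <image repository>
patch_kubeadm_config() {
    sed -E \
        -e "s|^([[:space:]]*advertiseAddress:).*|\1 $2|" \
        -e "s|^([[:space:]]*imageRepository:).*|\1 $3|" \
        "$1"
}
```

For example, patch_kubeadm_config init.default.yaml 192.168.67.128 registry.aliyuncs.com/google_containers > init.patched.yaml writes the modified copy to a new file (init.patched.yaml is only an illustrative name), which can be reviewed before it is used.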

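10. Pull the images with the following command

sudo kubeadm config images pull --config=init.default.yaml

This pulls the images we will need. Output like the following means the pull has finished: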

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.11.1

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.12-0


11. Run kubeadm init, specifying the image repository to avoid connection timeouts

sudo kubeadm init --image-repository=registry.aliyuncs.com/google_containers

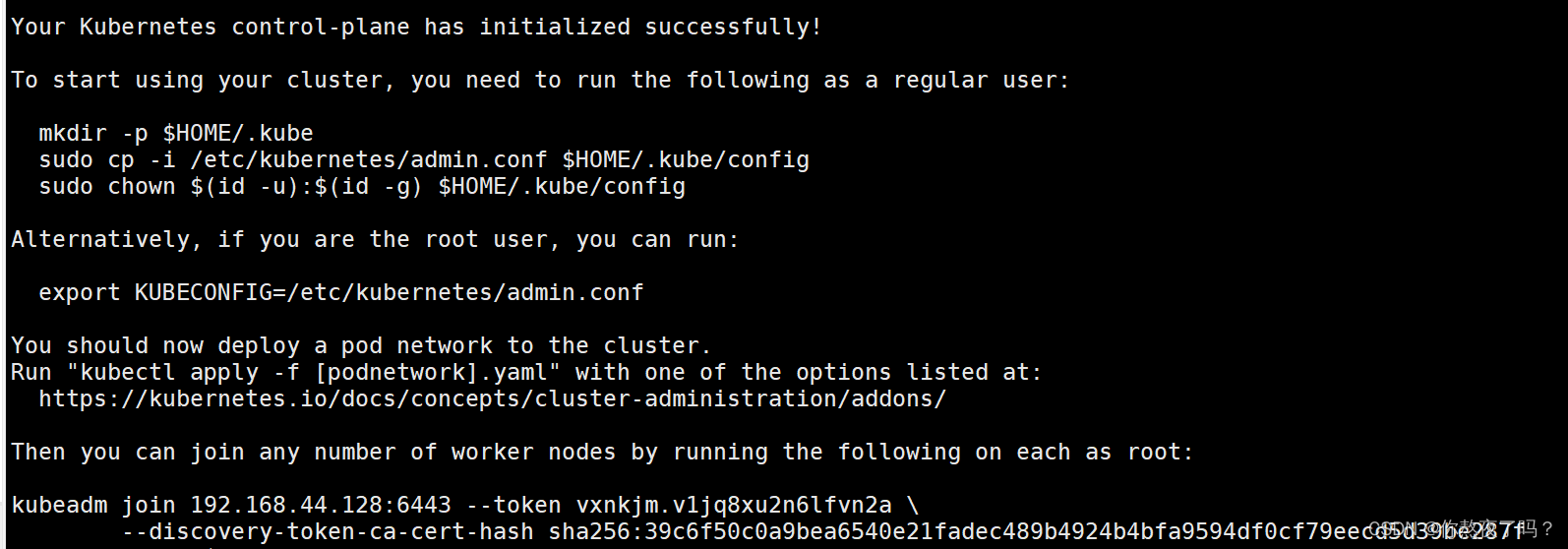

Success!

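12. Don't stop here; go on and run the following commands

# If you are not root

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# If you are root

export KUBECONFIG=/etc/kubernetes/admin.conf

# The export lasts only for the current shell; to keep it, add that line to /etc/profile and reload it

source /etc/profile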

# Keep a copy of this command, but do not run it now; worker nodes use it later to join the cluster

kubeadm join 192.168.67.128:6443 --token pevxnb.k75nwsq9c8j6hi19 \

--discovery-token-ca-cert-hash sha256:e933f3e00dcc3a048205a15c78cc5e43e07907d9b396b32b8968f614bc8e7085

# If you forget the command, you can print it again like this

kubeadm token create --print-join-command

# Output

kubeadm join 192.168.67.128:6443 --token fv0ocw.jjha16yy77qfpegc --discovery-token-ca-cert-hash sha256:5bb18f3753cb782e7301f8efb3bc056ed93a5940f29661a73c4f7dd75a0d4703


Now try a command:

kubectl get svc

# Output

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5m46s


Done.

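Problems you may run into

After a short while, oddly, Kubernetes may go down, or some nodes may not have started.

The error message is: couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

or: network plugin is not ready: cni config uninitialized

or an error related to flannel.

Check with the following command: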

kubectl get po -n kube-flannel

# Output: flannel has not come up

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-q99fm 0/1 CrashLoopBackOff 5 (2m18s ago) 5m25s

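When a namespace holds more than a handful of pods, it can help to filter the listing down to the ones that are not healthy. A minimal sketch follows, assuming the default kubectl get po column layout shown above (NAME, READY, STATUS, ...). The function name pods_not_running is hypothetical, and pods in a Completed state would also be listed.

```shell
# Hypothetical helper: read `kubectl get po -n <namespace>` output on stdin
# and print the name and status of every pod whose STATUS is not "Running".
# The first line (the column header) is skipped.
pods_not_running() {
    awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}
```

Used as kubectl get po -n kube-flannel | pods_not_running, it prints nothing when every pod in the namespace is Running.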

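Clearly the service has not started. Check the errors with journalctl -f -u kubelet | grep error; in my case they were related to cni/bin/flannel. After many days of digging, I finally solved it by reinstalling flannel, which was exhausting. The plugin lives at https://github.com/flannel-io/flannel.

Next, we start a new flannel pod from a YAML manifest. The three images in the manifest referenced upstream, https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml, are all hosted on docker.io and simply could not be downloaded.

So, going through a proxy, I pulled these images and pushed them to Alibaba Cloud.

Key steps start here: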

① Pull these two images

docker pull registry.cn-hangzhou.aliyuncs.com/zhangjinbo/flannel-cni-plugin:1.4.1-flannel1

docker pull registry.cn-hangzhou.aliyuncs.com/zhangjinbo/flannel:0.25.3


② Create a YAML file from which the pod will be created

vim kube-flannel.yml

Paste in the following content:

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: registry.cn-hangzhou.aliyuncs.com/zhangjinbo/flannel:0.25.3
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/zhangjinbo/flannel-cni-plugin:1.4.1-flannel1
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/zhangjinbo/flannel:0.25.3
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock
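Before applying a manifest like this, it may be worth confirming which registries it will actually pull from, since unreachable images were the original problem. A minimal sketch follows, assuming every image is declared on its own image: line as in the manifest above. The function name list_manifest_images is hypothetical.

```shell
# Hypothetical helper: print each distinct container image referenced by a
# Kubernetes manifest. Handles both "image: x" and "- image: x" lines.
# LC_ALL=C keeps the sort order independent of the system locale.
list_manifest_images() {
    awk '$1 == "image:" { print $2 }
         $1 == "-" && $2 == "image:" { print $3 }' "$1" | LC_ALL=C sort -u
}
```

Running list_manifest_images kube-flannel.yml on the file from step ② should list only the two registry.cn-hangzhou.aliyuncs.com images; any docker.io entry in the output would mean the upstream manifest was used instead.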

③ Then apply it

kubectl apply -f kube-flannel.yml

# Once it has finished, check the pods

kubectl get po -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-jfxt4 0/1 <some error status> 0 36m


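Look at the status of your kube-flannel-ds-xxxxx pod. If it is not ready, check that pod's logs,

replacing xxxxx with the suffix shown on your machine:

kubectl logs kube-flannel-ds-xxxxx -n kube-flannel

One possible error is "pod cidr not assigned", which means the node has not been given a pod CIDR. This can happen when kubeadm init is run without --pod-network-cidr, as in step 11.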

kubectl get nodes

# Output

NAME STATUS ROLES AGE VERSION

izbp15esj07w8y851x1qexz NotReady control-plane 179m v1.28.10

# Assign the pod CIDR

kubectl edit node izbp15esj07w8y851x1qexz

# Add the following, then save and exit; if there are several nodes, their podCIDR values must not repeat

spec:

  podCIDR: 10.244.0.0/24

# Delete what was deployed before

kubectl delete -f kube-flannel.yml

# Create it again

kubectl apply -f kube-flannel.yml

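The same assignment can be made non-interactively with kubectl patch, which is easier to repeat across nodes than kubectl edit. The sketch below builds the values only and does not contact the cluster. Both function names are hypothetical, and it assumes the 10.244.0.0/16 network from the flannel ConfigMap, carved into one /24 per node.

```shell
# Hypothetical helper: print the /24 pod CIDR for the Nth node (0-based)
# inside the 10.244.0.0/16 flannel network, so that no two nodes overlap.
node_pod_cidr() {
    printf '10.244.%d.0/24\n' "$1"
}

# Hypothetical helper: print the JSON patch body that sets spec.podCIDR.
pod_cidr_patch() {
    printf '{"spec":{"podCIDR":"%s"}}\n' "$1"
}
```

For the first node this could be applied as kubectl patch node izbp15esj07w8y851x1qexz -p "$(pod_cidr_patch "$(node_pod_cidr 0)")". Note that podCIDR can only be set on a node that does not already have one.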

If nothing unexpected happened, the deployment has now succeeded.

(●’◡’●)(●’◡’●)(●’◡’●)(●’◡’●)(●’◡’●)

Check:

kubectl get po -n kube-flannel

# Output

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-jfxt4 1/1 Running 0 28m

kubectl get po -n kube-system

# Output

NAME READY STATUS RESTARTS AGE

coredns-66f779496c-bg7pw 1/1 Running 0 3h6m

coredns-66f779496c-zfxqs 1/1 Running 0 3h6m

etcd-izbp15esj07w8y851x1qexz 1/1 Running 0 3h6m

kube-apiserver-izbp15esj07w8y851x1qexz 1/1 Running 0 3h6m

kube-controller-manager-izbp15esj07w8y851x1qexz 1/1 Running 0 3h6m

kube-proxy-ns9jg 1/1 Running 0 3h6m

kube-scheduler-izbp15esj07w8y851x1qexz 1/1 Running 0 3h6m
