
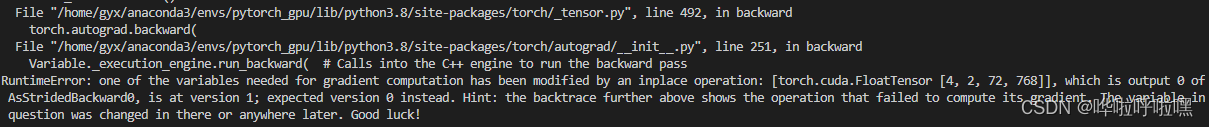

Error-RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation


RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [4, 2, 72, 768]], which is output 0 of AsStridedBackward0, is at version 1; expected version 0 instead. Hint: the backtrace further above shows the operation that failed to compute its gradient. The variable in question was changed in there or anywhere later. Good luck!

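In plain terms:

A tensor that autograd needs for the gradient computation was modified by an in-place operation. The tensor is a torch.cuda.FloatTensor of shape [4, 2, 72, 768], output 0 of AsStridedBackward0; it is now at version 1, whereas autograd expected version 0. The hint says that the backtrace above shows the operation whose gradient could not be computed, and that the tensor was changed there or at some later point.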
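The version mismatch is easy to reproduce on a toy tensor. The sketch below is not the model from this post; it uses a hypothetical function name and a 3-element CPU tensor, and relies only on the fact that exp() saves its output for the backward pass:

```python
import torch


def trigger_inplace_error():
    """Return the message of the RuntimeError raised by backward(), or None."""
    x = torch.ones(3, requires_grad=True)
    y = x.exp()   # exp() saves its output y to compute the gradient later
    y.add_(1)     # in-place write: y's version counter goes from 0 to 1
    try:
        y.sum().backward()  # autograd finds y at version 1, expected 0
    except RuntimeError as err:
        return str(err)
    return None


message = trigger_inplace_error()
print(message)
```

The printed message has the same structure as the one above, only with a different tensor type, shape, and backward node name.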

The error is raised at the backward() call in the training loop:

    total_loss, loss, loss_prompt, loss_pair = self._train_step(batch, step, epoch)
    total_loss.backward()

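Many posts suggest adding retain_graph=True, which keeps the graph's intermediate buffers alive after backward() so that the same graph can be backpropagated through again:

    # original code
    total_loss.backward()
    # changed to:
    total_loss.backward(retain_graph=True)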
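For context, retain_graph=True addresses a different situation: calling backward() more than once on the same graph. It does not undo an in-place modification of a saved tensor. A minimal sketch with toy tensors and hypothetical function names:

```python
import torch


def backward_twice():
    """retain_graph=True lets the same graph be backpropagated through twice."""
    w = torch.ones(3, requires_grad=True)
    loss = w.exp().sum()
    loss.backward(retain_graph=True)  # keep the saved buffers alive
    loss.backward()                   # second pass; gradients accumulate in w.grad
    return w.grad


def inplace_with_retain_graph():
    """The in-place error is unaffected by retain_graph=True."""
    w = torch.ones(3, requires_grad=True)
    y = w.exp()
    y.add_(1)  # modifies a tensor that exp() saved for its backward pass
    try:
        y.sum().backward(retain_graph=True)
    except RuntimeError as err:
        return str(err)
    return None


print(backward_twice())
print(inplace_with_retain_graph())
```

The first function succeeds and returns the accumulated gradient of two passes; the second still reports the in-place error.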

The same error still appeared, though, so the next step was to locate the failing operation by wrapping the training step in torch.autograd.detect_anomaly():

    with torch.autograd.detect_anomaly():
        total_loss, loss, loss_prompt, loss_pair = self._train_step(batch, step, epoch)
        total_loss.backward()
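The same pattern can be tried on a toy example before applying it to a full training loop. In the sketch below (hypothetical function name, CPU tensors), the forward computation runs inside the context manager, as in the snippet above, because anomaly detection records the forward traceback only for operations executed while it is active:

```python
import torch


def locate_with_anomaly_detection():
    """Run a failing forward/backward pair under anomaly detection."""
    x = torch.ones(3, requires_grad=True)
    with torch.autograd.detect_anomaly():
        y = x.exp()   # forward op whose traceback anomaly mode records
        y.add_(1)     # in-place write that invalidates the saved output
        try:
            y.sum().backward()
        except RuntimeError as err:
            return str(err)
    return None


error_message = locate_with_anomaly_detection()
print(error_message)
```

In addition to the exception, PyTorch emits a warning containing the traceback of the forward call whose backward failed, which is what identifies the offending line. Anomaly detection slows training down, so it is best enabled only while debugging.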

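With anomaly detection enabled, the traceback pointed to this statement:

    mean_token = x[:, :, -end:, :].mean(1, keepdim=True).expand(-1, num, -1, -1)

The likely cause is that a tensor involved in this computation is modified in place elsewhere in the graph, which produces the version mismatch. Rewriting the statement so that it works on copies avoids the potential in-place modification and keeps the graph consistent:

    # copy x (clone() allocates new storage and stays in the autograd graph)
    x = x.clone()
    sub_tensor = x[:, :, -end:, :]
    # copy the slice too, so it is no longer a view of x
    sub_tensor_clone = sub_tensor.clone()
    mean_token = sub_tensor_clone.mean(1, keepdim=True)
    mean_token = mean_token.expand(-1, num, -1, -1)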

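Both the input and its slice are cloned so that the slice no longer shares memory with the original tensor. It is also worth checking every other operation in the code for in-place modifications, such as .add_() and .mul_(), and making sure none of them changes a tensor that autograd still needs.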
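Two common ways of removing an in-place write can be sketched on a toy tensor. This is an illustration under simplified assumptions (hypothetical function names, a 3-element tensor), not the model code from this post; _version is the tensor attribute that exposes the version counter mentioned in the error message:

```python
import torch


def clone_before_inplace():
    """Apply the in-place op to a copy so the saved tensor stays untouched."""
    x = torch.ones(3, requires_grad=True)
    y = x.exp()
    z = y.clone()  # new storage; y stays at version 0
    z.add_(1)      # the in-place write touches only the copy
    z.sum().backward()
    return x.grad, y._version


def out_of_place():
    """Replace the in-place op with its out-of-place equivalent."""
    x = torch.ones(3, requires_grad=True)
    y = x.exp()
    y = y + 1      # creates a new tensor instead of overwriting y
    y.sum().backward()
    return x.grad


grad_clone, saved_version = clone_before_inplace()
grad_plain = out_of_place()
print(grad_clone, saved_version, grad_plain)
```

Both variants backpropagate without error and give the same gradient. The out-of-place form is usually the simpler choice; clone() helps when the in-place write cannot easily be removed.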