- 1土壤品质检测仪的具体功能应用

- 2【简单易懂】MySQL表的增删改查(初阶)_mysql增删改查算法描述

- 3人工智能领域中声源定位的研究与发展------第二章 声源定位系统 (2)_声源定位 github

- 4c语言程序设计商品库存管理系统,《C语言课程设计商品库存管理系统》.doc

- 5使用 Langflow 和 Streamlit 构建基于 RAG 的对话式聊天机器人 了解如何在 20 分钟或更短的时间内构建利用检索增强生成 (RAG) 的聊天机器人,且无需编码

- 6做软件测试,到底能不能去外包?_40多岁 下岗 去外包做测试

- 7信创产品有哪些?如何成为信创产品

- 8linux的权限管理_tomcat 限制、禁止外部对examples目录的访问。

- 9【蓝桥杯软件赛 零基础备赛20周】第1周——蓝桥杯软件赛介绍_蓝桥杯网课

- 10使用Python与MoviePy库高效剪辑视频:从入门到实战_python视频库

ghost的网络结构和实现代码总结(torch)_ghost卷积代码

赞

踩

ghost模型的效果

GhostNet是为移动设备设计的,文中没有给出在GPU端的开销情况,在华为P30 Pro上进行了GhostNet的实际推理速度测试,并和其他模型进行了对比,所以在GPU上的实际效果还需要测试。和MobileNetV3相比,相同FLOPs的情况下,大约可以提升0.3%~0.5%不等的top-1准确率,相同Latency的情况下,大约可以提升0.5%的top1准确率。在Top1准确率在75%的情况下,GhostNet的Latency大约是40ms,而MobileNetV3大约是45ms。得出结论认为GhostNet总体好于当前的MobileNetV3,MobileNetV2,EfficientNet,ShuffleNetV2,MnasNet,FBNet,ProxylessNAS等网络。【0】

ghost模型实现原理

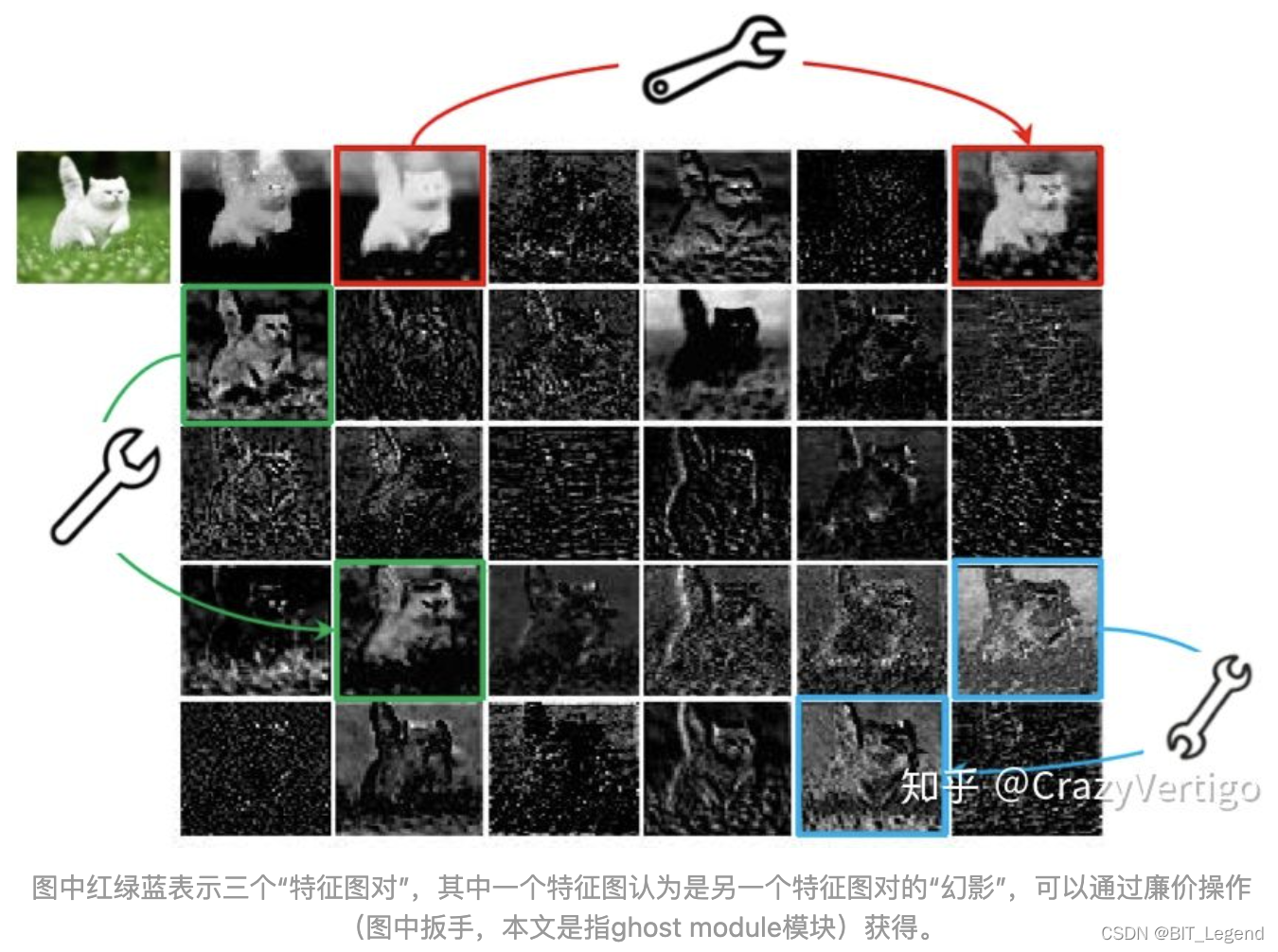

ghost模型的整个结构照搬了mobilenetv3,只是把基本单元给替换掉了,ghost模型的基本单元如上图所示,将原本的一步卷积变为两步卷积,第一步首先进行常规卷积,但是减少了输出通道数,第二步在第一步的基础上进行深度可分离卷积(仅取第一步),这里深度可分离卷积跟常规深度可分离卷积有点区别,常规深度可分离卷积(仅取第一步)的输入输出通道数完全相等,卷积核数量也等于输入通道数,这里输出通道数可能是输入通道数的整数倍,卷积核数量等于输出通道数。此外,第二步卷积还有并行的一个连接分支,这个分支直接就是第一步卷积的输出。ghost卷积模块的输出通道数等于第一步卷积后的通道数c加上第二步卷积后的通道数n*c,所以最终通道数为(n+1)*c。此操作的依据是经过观察,发现大部分卷积操作后,输出的特征图很多通道之间存在很高的相似性,那我们就可以经过第一步卷积得到那些没有相似性的通道,然后经过第二步卷积得到剩余那些有相似性的通道,可视化如下图:

ghost模型实现代码

ghost模型由华为提出,在github上有官方的torch和tensorflow代码,以下为官方torch代码。

- # 官方代码的ghost网络模型 https://github.com/huawei-noah/CV-Backbones

- """

- Creates a GhostNet Model as defined in:

- GhostNet: More Features from Cheap Operations By Kai Han, Yunhe Wang, Qi Tian, Jianyuan Guo, Chunjing Xu, Chang Xu.

- https://arxiv.org/abs/1911.11907

- Modified from https://github.com/d-li14/mobilenetv3.pytorch and https://github.com/rwightman/pytorch-image-models

- """

- import torch

- import torch.nn as nn

- import torch.nn.functional as F

- import math

-

-

- __all__ = ['ghost_net']

-

- # 设定整个模型的所有BN层的衰减系数,该系数用于平滑统计的均值和方差,torch与tf不太一样,两者以1为互补

- momentum = 0.01 # 官方默认0.1,越小,最终的统计均值和方差越接近于整体均值和方差,前提是batchsize足够大

-

- # 保证v可以被divisor整除

- def _make_divisible(v, divisor, min_value=None):

- if min_value is None:

- min_value = divisor

- new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)

- if new_v < 0.9 * v:

- new_v += divisor

- return new_v

-

- # 定义激活函数

- def hard_sigmoid(x, inplace: bool = False):

- if inplace:

- return x.add_(3.).clamp_(0., 6.).div_(6.)

- else:

- return F.relu6(x + 3.) / 6.

-

- # 定义SE模块

- class SqueezeExcite(nn.Module):

- def __init__(self, in_chs, se_ratio=0.25, reduced_base_chs=None, act_layer=nn.ReLU, gate_fn=hard_sigmoid, divisor=4, **_):

- super(SqueezeExcite, self).__init__()

- self.gate_fn = gate_fn

- reduced_chs = _make_divisible((reduced_base_chs or in_chs) * se_ratio, divisor)

- self.avg_pool = nn.AdaptiveAvgPool2d(1)

- self.conv_reduce = nn.Conv2d(in_chs, reduced_chs, 1, bias=True)

- self.act1 = act_layer(inplace=True)

- self.conv_expand = nn.Conv2d(reduced_chs, in_chs, 1, bias=True)

-

- def forward(self, x):

- x_se = self.avg_pool(x)

- x_se = self.conv_reduce(x_se)

- x_se = self.act1(x_se)

- x_se = self.conv_expand(x_se)

- x = x * self.gate_fn(x_se)

- return x

-

- # 定义基本卷积模块

- class ConvBnAct(nn.Module):

- def __init__(self, in_chs, out_chs, kernel_size, stride=1, act_layer=nn.ReLU):

- super(ConvBnAct, self).__init__()

- self.conv = nn.Conv2d(in_chs, out_chs, kernel_size, stride, kernel_size//2, bias=False)

- self.bn1 = nn.BatchNorm2d(out_chs, momentum=momentum)

- self.act1 = act_layer(inplace=True)

-

- def forward(self, x):

- x = self.conv(x)

- x = self.bn1(x)

- x = self.act1(x)

- return x

-

- # 定义ghost模块

- class GhostModule(nn.Module):

- def __init__(self, inp, oup, kernel_size=1, ratio=2, dw_size=3, stride=1, relu=True):

- super(GhostModule, self).__init__()

- self.oup = oup

- init_channels = math.ceil(oup / ratio)

- new_channels = init_channels*(ratio-1)

-

- self.primary_conv = nn.Sequential(

- nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size//2, bias=False),

- nn.BatchNorm2d(init_channels, momentum=momentum),

- nn.ReLU(inplace=True) if relu else nn.Sequential(),

- )

-

- self.cheap_operation = nn.Sequential(

- nn.Conv2d(init_channels, new_channels, dw_size, 1, dw_size//2, groups=init_channels, bias=False),

- nn.BatchNorm2d(new_channels, momentum=momentum),

- nn.ReLU(inplace=True) if relu else nn.Sequential(),

- )

-

- def forward(self, x):

- x1 = self.primary_conv(x)

- x2 = self.cheap_operation(x1)

- out = torch.cat([x1,x2], dim=1)

- return out[:,:self.oup,:,:]

-

- # 定义ghost网络基本单元

- class GhostBottleneck(nn.Module):

- """ Ghost bottleneck w/ optional SE"""

-

- def __init__(self, in_chs, mid_chs, out_chs, dw_kernel_size=3,

- stride=1, act_layer=nn.ReLU, se_ratio=0.):

- super(GhostBottleneck, self).__init__()

- has_se = se_ratio is not None and se_ratio > 0.

- self.stride = stride

-

- # Point-wise expansion

- self.ghost1 = GhostModule(in_chs, mid_chs, relu=True)

-

- # Depth-wise convolution

- if self.stride > 1:

- self.conv_dw = nn.Conv2d(mid_chs, mid_chs, dw_kernel_size, stride=stride,

- padding=(dw_kernel_size-1)//2,

- groups=mid_chs, bias=False)

- self.bn_dw = nn.BatchNorm2d(mid_chs, momentum=momentum)

-

- # Squeeze-and-excitation

- if has_se:

- self.se = SqueezeExcite(mid_chs, se_ratio=se_ratio)

- else:

- self.se = None

-

- # Point-wise linear projection

- self.ghost2 = GhostModule(mid_chs, out_chs, relu=False)

-

- # shortcut

- if (in_chs == out_chs and self.stride == 1):

- self.shortcut = nn.Sequential()

- else:

- self.shortcut = nn.Sequential(

- nn.Conv2d(in_chs, in_chs, dw_kernel_size, stride=stride,

- padding=(dw_kernel_size-1)//2, groups=in_chs, bias=False),

- nn.BatchNorm2d(in_chs, momentum=momentum),

- nn.Conv2d(in_chs, out_chs, 1, stride=1, padding=0, bias=False),

- nn.BatchNorm2d(out_chs, momentum=momentum),

- )

-

-

- def forward(self, x):

- residual = x

-

- # 1st ghost bottleneck

- x = self.ghost1(x)

-

- # Depth-wise convolution

- if self.stride > 1:

- x = self.conv_dw(x)

- x = self.bn_dw(x)

-

- # Squeeze-and-excitation

- if self.se is not None:

- x = self.se(x)

-

- # 2nd ghost bottleneck

- x = self.ghost2(x)

-

- x += self.shortcut(residual)

- return x

-

- # 搭建ghost网络模型,整个网络模型完全照搬mobilenetv3,仅仅只是更换了网络基本单元

- class GhostNet(nn.Module):

- def __init__(self, cfgs, num_classes=1000, width=1.0, dropout=0.2):

- super(GhostNet, self).__init__()

- # setting of inverted residual blocks

- self.cfgs = cfgs

- self.dropout = dropout

-

- # building first layer

- output_channel = _make_divisible(16 * width, 4)

- self.conv_stem = nn.Conv2d(3, output_channel, 3, 2, 1, bias=False)

- self.bn1 = nn.BatchNorm2d(output_channel, momentum=momentum)

- self.act1 = nn.ReLU(inplace=True)

- input_channel = output_channel

-

- # building inverted residual blocks

- stages = []

- block = GhostBottleneck

- for cfg in self.cfgs:

- layers = []

- for k, exp_size, c, se_ratio, s in cfg:

- output_channel = _make_divisible(c * width, 4)

- hidden_channel = _make_divisible(exp_size * width, 4)

- layers.append(block(input_channel, hidden_channel, output_channel, k, s,

- se_ratio=se_ratio))

- input_channel = output_channel

- stages.append(nn.Sequential(*layers))

-

- output_channel = _make_divisible(exp_size * width, 4)

- stages.append(nn.Sequential(ConvBnAct(input_channel, output_channel, 1)))

- input_channel = output_channel

-

- self.blocks = nn.Sequential(*stages)

-

- # building last several layers

- output_channel = 1280

- self.global_pool = nn.AdaptiveAvgPool2d((1, 1))

- self.conv_head = nn.Conv2d(input_channel, output_channel, 1, 1, 0, bias=True)

- self.act2 = nn.ReLU(inplace=True)

- self.classifier = nn.Linear(output_channel, num_classes)

-

- def forward(self, x):

- x = self.conv_stem(x)

- x = self.bn1(x)

- x = self.act1(x)

- x = self.blocks(x)

- x = self.global_pool(x)

- x = self.conv_head(x)

- x = self.act2(x)

- x = x.view(x.size(0), -1)

- if self.dropout > 0.:

- x = F.dropout(x, p=self.dropout, training=self.training)

- x = self.classifier(x) # 最后的输出层并不包含激活函数,直接就是全链接的输出,在损失函数中包含softmax操作,实际使用需要自己再加一个softmax

- return x

-

-

- def ghostnet(**kwargs):

- """

- Constructs a GhostNet model

- """

- cfgs = [

- # k, t, c, SE, s

- # stage1

- [[3, 16, 16, 0, 1]],

- # stage2

- [[3, 48, 24, 0, 2]],

- [[3, 72, 24, 0, 1]],

- # stage3

- [[5, 72, 40, 0.25, 2]],

- [[5, 120, 40, 0.25, 1]],

- # stage4

- [[3, 240, 80, 0, 2]],

- [[3, 200, 80, 0, 1],

- [3, 184, 80, 0, 1],

- [3, 184, 80, 0, 1],

- [3, 480, 112, 0.25, 1],

- [3, 672, 112, 0.25, 1]

- ],

- # stage5

- [[5, 672, 160, 0.25, 2]],

- [[5, 960, 160, 0, 1],

- [5, 960, 160, 0.25, 1],

- [5, 960, 160, 0, 1],

- [5, 960, 160, 0.25, 1]

- ]

- ]

- return GhostNet(cfgs, **kwargs)

-

-

- if __name__=='__main__':

- model = ghostnet()

- model.eval()

- print(model)

- input = torch.randn(32,3,320,256)

- y = model(input)

- print(y.size())

包含训练和测试的完整代码见:https://github.com/LegendBIT/torch-classification-model