
[Python] Web Crawler 07: Scrapy Study Notes

Author: 正经夜光杯 | 2024-06-27 23:25:04


I. Some Scrapy concepts

1. What it is

- Scrapy is an open-source web crawling framework written in Python

2. The Scrapy workflow


3. What each module does
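Scrapy's main components are the Engine, which coordinates everything; the Scheduler, which queues requests; the Downloader, which fetches pages; Spiders, which parse responses into items and new requests; and Item Pipelines, which post-process items (downloader and spider middlewares sit in between and are omitted here). As a rough mental model only, the toy loop below wires those roles together in plain Python. `Request`, `FAKE_SITE`, `parse_list`, `parse_detail`, `run_engine` and `store_pipeline` are hypothetical names; real Scrapy is asynchronous, downloads pages concurrently and has a configurable scheduling order, so this is not Scrapy's code.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Request:
    """Toy stand-in for scrapy.Request: a URL plus the callback that will parse it."""
    url: str
    callback: Callable[[str, str], Iterable[object]]


# Hypothetical site: URL -> page body (stands in for pages fetched over HTTP)
FAKE_SITE = {
    "/teachers": "alice,bob",
    "/teacher/alice": "Alice",
    "/teacher/bob": "Bob",
}


def parse_list(url, body):
    # Spider callback for the list page: yields one follow-up Request per teacher
    for slug in body.split(","):
        yield Request(f"/teacher/{slug}", parse_detail)


def parse_detail(url, body):
    # Spider callback for a detail page: yields the scraped data as a dict (an item)
    yield {"url": url, "name": body}


def run_engine(start_request, pipelines):
    """Toy Engine loop: Scheduler -> Downloader -> Spider -> Scheduler / Pipelines."""
    scheduler = deque([start_request])          # Scheduler: pending requests (FIFO here)
    while scheduler:
        request = scheduler.popleft()
        body = FAKE_SITE[request.url]           # Downloader: "fetch" the page
        for result in request.callback(request.url, body):  # Spider: parse the response
            if isinstance(result, Request):
                scheduler.append(result)        # new requests go back to the Scheduler
            elif isinstance(result, dict):
                for process_item in pipelines:  # items pass through each pipeline in order
                    result = process_item(result)
            elif result is not None:
                raise TypeError(f"unsupported callback output: {type(result).__name__}")


collected = []


def store_pipeline(item):
    # Minimal pipeline: keep the item, then return it so a later pipeline would receive it
    collected.append(item)
    return item


run_engine(Request("/teachers", parse_list), [store_pipeline])
print(collected)
# → [{'url': '/teacher/alice', 'name': 'Alice'}, {'url': '/teacher/bob', 'name': 'Bob'}]
```

The point of the sketch is the routing: whatever a spider callback yields is either a new request (back to the Scheduler) or an item (on to the pipelines).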

II. Constructing requests

Development steps:

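```shell
# 1. Create the project
scrapy startproject mySpider

# 2. Generate a spider
scrapy genspider itcast itcast.cn

# 3. Extract data
# Implement the data collection in the spider according to the site's structure

# 4. Save data
# Use a pipeline for further processing and saving of the data
```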


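Create the project

Create the spider

Complete the spider:

- Modify the start URL

- Check and, if needed, modify the allowed domains

- Implement the crawling logic in the parse method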

```python
import scrapy


class ItcastSpider(scrapy.Spider):
    name = 'itcast'
    # 2. Check/modify the allowed domains
    allowed_domains = ['itcast.cn']
    # 1. Modify the start URL
    start_urls = ['http://www.itcast.cn/channel/teacher.shtml#ajavaee']

    # 3. Implement the crawling logic in parse()
    def parse(self, response):
        # Select every teacher block and print how many were found
        node_list = response.xpath('//div[@class="li_txt"]')
        print(len(node_list))
```

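This first version of parse() only counts the nodes matched by `//div[@class="li_txt"]`. Scrapy's `response.xpath()` is backed by parsel and lxml; the standard library cannot run full XPath on real-world HTML, but `xml.etree.ElementTree` understands a small XPath subset that is enough to show what the expression selects. The markup below is a made-up, well-formed stand-in for the teacher page, not the real itcast.cn HTML.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup shaped like the teacher list (not the real page)
SAMPLE_PAGE = """
<html><body>
  <div class="li_txt"><h3>Teacher A</h3><h4>Senior lecturer</h4><p>Java EE</p></div>
  <div class="li_txt"><h3>Teacher B</h3><h4>Lecturer</h4><p>Python</p></div>
  <div class="banner">site banner, not a teacher</div>
</body></html>
"""

root = ET.fromstring(SAMPLE_PAGE.strip())
# ElementTree's XPath subset: every <div> at any depth whose class is exactly "li_txt"
node_list = root.findall(".//div[@class='li_txt']")
print(len(node_list))  # → 2

# Paths relative to each node, like node.xpath('./h3/text()') in the spider
print([node.findtext("./h3") for node in node_list])  # → ['Teacher A', 'Teacher B']
```

As in real XPath, `[@class='li_txt']` is an exact string comparison, so a div with `class="li_txt extra"` would not be matched.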

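```python
import scrapy


class ItcastSpider(scrapy.Spider):
    name = 'itcast'
    # 2. Check/modify the allowed domains
    allowed_domains = ['itcast.cn']
    # 1. Modify the start URL
    start_urls = ['http://www.itcast.cn/channel/teacher.shtml#ajavaee']

    # 3. Implement the crawling logic in parse()
    def parse(self, response):
        # Get all teacher nodes
        node_list = response.xpath('//div[@class="li_txt"]')
        # Iterate over the list of teacher nodes
        for node in node_list:
            temp = {}
            # In Scrapy, xpath() returns a list of selector objects (a SelectorList)
            # extract() pulls the data out of the selectors; here it is combined with an index
            # extract_first() returns the first match directly, so no index is needed;
            # use it when you know there is only one value (it returns None if nothing
            # matches, whereas [0] raises IndexError)
            temp['name'] = node.xpath('./h3/text()').extract_first()
            temp['title'] = node.xpath('./h4/text()')[0].extract()
            temp['desc'] = node.xpath('./p/text()')[0].extract()
            # With return, the function would finish right after the first teacher
            # Collecting into a list with .append() would still leave pagination to handle;
            # yield hands each item to the engine as soon as it is ready
            yield temp
```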

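The comments at the end of parse() compare `return`, `list.append` and `yield`. A stdlib-only sketch of the difference, using made-up `(name, title)` records in place of Scrapy selectors; the three function names are hypothetical:

```python
# Hypothetical records standing in for the teacher nodes: (name, title)
NODES = [("Teacher A", "Senior lecturer"), ("Teacher B", "Lecturer"), ("Teacher C", "Lecturer")]


def parse_with_return(nodes):
    for name, title in nodes:
        temp = {"name": name, "title": title}
        return temp  # leaves the function during the first iteration


def parse_with_append(nodes):
    items = []
    for name, title in nodes:
        items.append({"name": name, "title": title})
    return items  # nothing is handed over until the whole page has been processed


def parse_with_yield(nodes):
    for name, title in nodes:
        temp = {"name": name, "title": title}
        yield temp  # hands one record to the caller, then resumes the loop when asked again


print(parse_with_return(NODES))
# → {'name': 'Teacher A', 'title': 'Senior lecturer'}
print(len(parse_with_append(NODES)))
# → 3
print([item["name"] for item in parse_with_yield(NODES)])
# → ['Teacher A', 'Teacher B', 'Teacher C']
```

Scrapy accepts any iterable from a callback, so returning the appended list also works for a single page; `yield` avoids building the list and makes it natural to mix items with a follow-up Request for the next page in the same callback.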

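Notes:

- A scrapy.Spider subclass must define a parsing method named parse: it is the default callback for the responses to start_urls.

- If the site has a deeply nested structure, you can define additional parse functions of your own and use them as callbacks.

- Any URL requested from a parse function must belong to allowed_domains; requests to other domains are filtered out.

- Mind the working directory when starting the spider: run it from inside the project (e.g. `scrapy crawl itcast`).

- parse() returns data with yield. Note: a parse function may only yield BaseItem, Request, dict, or None (newer Scrapy versions also accept other item types supported by itemadapter, such as dataclass objects).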
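The note about starting the spider from the project comes down to how the `scrapy` command detects a project: roughly, it looks for a `scrapy.cfg` file in the current directory and then in each parent directory (the `SCRAPY_SETTINGS_MODULE` environment variable can also point it at the settings). The helper below is a hypothetical stdlib re-creation of that lookup, not Scrapy's own code; it shows why `scrapy crawl itcast` works anywhere inside the project tree but not outside it.

```python
import tempfile
from pathlib import Path


def find_scrapy_cfg(start="."):
    """Hypothetical helper: return the closest scrapy.cfg at or above `start`, or None."""
    path = Path(start).resolve()
    for directory in (path, *path.parents):
        candidate = directory / "scrapy.cfg"
        if candidate.is_file():
            return candidate
    return None


# Throwaway layout resembling what `scrapy startproject mySpider` creates
with tempfile.TemporaryDirectory() as tmp:
    project_dir = Path(tmp) / "mySpider"
    spiders_dir = project_dir / "mySpider" / "spiders"
    spiders_dir.mkdir(parents=True)
    (project_dir / "scrapy.cfg").write_text("[settings]\ndefault = mySpider.settings\n")

    expected = (project_dir / "scrapy.cfg").resolve()
    found_from_spiders = find_scrapy_cfg(spiders_dir)  # deep inside the project: found by walking up
    found_from_outside = find_scrapy_cfg(tmp)          # above the project: normally None

print(found_from_spiders == expected)  # → True
print(found_from_outside)
```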

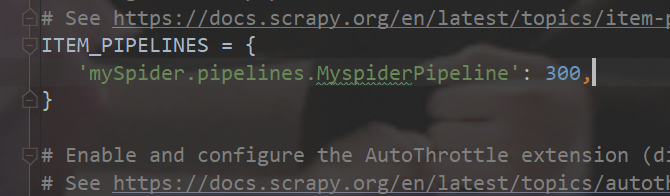

Saving data: pipelines

- Define the pipeline

- Enable the pipeline

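```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


# useful for handling different item types with a single interface
from itemadapter import ItemAdapter
import json


class MyspiderPipeline(object):
    def __init__(self):
        # utf-8 so that non-ASCII text (ensure_ascii=False) can be written on any platform
        self.file = open('itcast.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # print('itcast', item)
        # Serialize the item; ItemAdapter makes this work for plain dicts and scrapy.Item objects
        # Each item is written as one JSON object per line (JSON Lines)
        json_data = json.dumps(ItemAdapter(item).asdict(), ensure_ascii=False) + '\n'
        # Write the data to the file
        self.file.write(json_data)
        # After a pipeline has processed the item, it must return it to the engine
        return item

    def close_spider(self, spider):
        # Called by Scrapy when the spider finishes; more reliable than __del__ for closing the file
        self.file.close()
```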

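For the "enable the pipeline" step: a pipeline only runs once it is listed in the `ITEM_PIPELINES` setting, and the settings.py generated by `scrapy startproject` already contains this dict commented out. A sketch assuming the project was created as `mySpider` and the class keeps the template name `MyspiderPipeline`:

```python
# settings.py (excerpt); assumes the default layout from `scrapy startproject mySpider`
ITEM_PIPELINES = {
    # import path of the pipeline class -> order value (0-1000); lower values run first
    "mySpider.pipelines.MyspiderPipeline": 300,
}
```

When several pipelines are listed, each item passes through them in ascending order of these values, and whatever one process_item returns is what the next pipeline receives; that is why the pipeline above ends with `return item`.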

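III. Scrapy data modeling and requests

1. Modeling in items.py

- Decide which fields to collect

- Declare those fields in an Item class, one scrapy.Field() per field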

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class MyspiderItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()   # teacher's name
    title = scrapy.Field()  # teacher's job title
    desc = scrapy.Field()   # teacher's description
```


2. Usage: instantiate the Item in the spider
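In real Scrapy you would import the class (e.g. `from mySpider.items import MyspiderItem`), create `item = MyspiderItem()` inside parse(), fill it like a dict and `yield item`; assigning a field that was not declared with `scrapy.Field()` raises KeyError, which is the main benefit over a bare dict. To keep the example runnable without Scrapy installed, the sketch below uses a hypothetical stdlib `ToyItem` that imitates only that field check; `ToyItem`, `TeacherItem` and `parse` here are illustrative, not Scrapy's implementation.

```python
class ToyItem(dict):
    """Hypothetical stand-in for scrapy.Item: a dict that rejects undeclared fields."""

    fields = ()  # subclasses list their field names here (scrapy.Item uses scrapy.Field())

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class TeacherItem(ToyItem):
    # Mirrors the name / title / desc fields declared in MyspiderItem
    fields = ("name", "title", "desc")


def parse(records):
    # Spider side: create one item per teacher, fill it like a dict, then yield it
    for name, title, desc in records:
        item = TeacherItem()
        item["name"] = name
        item["title"] = title
        item["desc"] = desc
        yield item


items = list(parse([("Teacher A", "Lecturer", "Java EE")]))
print(items[0]["name"])  # → Teacher A

try:
    TeacherItem()["nmae"] = "typo"  # misspelled field name
except KeyError as exc:
    print("rejected:", exc)
```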

3. Handling pagination
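A common Scrapy pattern for pagination is to extract the "next page" link in parse(), resolve it with `response.urljoin()`, and `yield scrapy.Request(next_url, callback=self.parse)` (or `response.follow(href, callback=self.parse)`), stopping when no link is found; the next URL must still fall within allowed_domains. Below is a stdlib-only sketch of that loop, in which `FAKE_PAGES`, `crawl` and the example.com URLs are made up and a plain while loop stands in for Scrapy's scheduler.

```python
from urllib.parse import urljoin

# Hypothetical paginated site: page URL -> (teacher names on the page, relative "next" link or None)
FAKE_PAGES = {
    "http://example.com/teachers/page1.html": (["A", "B"], "page2.html"),
    "http://example.com/teachers/page2.html": (["C"], "page3.html"),
    "http://example.com/teachers/page3.html": (["D", "E"], None),
}


def crawl(start_url):
    url = start_url
    while url is not None:
        names, next_href = FAKE_PAGES[url]
        for name in names:
            yield {"name": name, "page": url}
        # Relative links are resolved against the current page URL before being followed
        url = urljoin(url, next_href) if next_href else None


names = [item["name"] for item in crawl("http://example.com/teachers/page1.html")]
print(names)  # → ['A', 'B', 'C', 'D', 'E']
```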

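Disclaimer: this article was contributed by a user and does not represent the views of the wpsshop blog (wpsshop博客); copyright belongs to the original author, and the site assumes no legal liability for it. If you find infringing content, please contact the site. When reposting, please credit the source: https://www.wpsshop.cn/w/正经夜光杯/article/detail/764206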
