Notes on Installing Kafka


Preface

Our server needed a message queue, so I had to install Kafka, which I had never done before. This post records the installation.


I. What is Kafka?

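Kafka is an open-source distributed stream-processing platform. It was originally developed at LinkedIn and open-sourced in 2011. It is mainly used to build real-time data pipelines and stream-processing applications. Kafka is designed for high throughput, low latency, and high reliability, which makes it well suited to processing large volumes of real-time data.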

II. Installation Steps

1. Extract the archive into the current directory

The commands are as follows:

```shell
root@hecs-349024:~# cd /usr/local/
root@hecs-349024:/usr/local# mkdir kafka
root@hecs-349024:/usr/local# cd kafka/
root@hecs-349024:/usr/local/kafka# ll
total 8
drwxr-xr-x 2 root root 4096 Jun 21 09:55 ./
drwxr-xr-x 15 root root 4096 Jun 21 09:55 ../
root@hecs-349024:/usr/local/kafka# tar -zxvf /root/kafka_2.13-3.7.0.tgz -C ./
```

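If you repeat this on several machines, you can wrap the two steps above (create the target directory, then unpack the release) in a small function. This is a minimal sketch and not part of the original setup. The name `extract_kafka` is hypothetical. The function also assumes that the archive's first entry is its top-level directory, which appears to hold for Kafka release archives but is not guaranteed for arbitrary tarballs.

```shell
#!/usr/bin/env bash

# Hypothetical helper: unpack a Kafka release archive into DEST and print
# the path of the top-level directory it contains.
extract_kafka() {
  local archive="$1" dest="$2" top
  mkdir -p "$dest"
  # Assumption: the first entry in the archive is its top-level directory.
  # awk reads the whole listing, so tar is never cut off by a closed pipe.
  top="$(tar -tzf "$archive" | awk -F/ 'NR == 1 { print $1 }')"
  # -v is omitted so stdout only carries the printed path.
  tar -xzf "$archive" -C "$dest"
  printf '%s/%s\n' "$dest" "$top"
}
```

With the archive used in this post, `extract_kafka /root/kafka_2.13-3.7.0.tgz /usr/local/kafka` should print `/usr/local/kafka/kafka_2.13-3.7.0`.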

2. Use a custom log directory

The commands are as follows:

```shell
drwxr-xr-x 8 root root 4096 Jun 21 09:59 ./
drwxr-xr-x 3 root root 4096 Jun 21 09:56 ../
drwxr-xr-x 3 root root 4096 Feb 9 21:34 bin/
drwxr-xr-x 3 root root 4096 Feb 9 21:34 config/
drwxr-xr-x 2 root root 12288 Jun 21 09:56 libs/
-rw-r--r-- 1 root root 15125 Feb 9 21:25 LICENSE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 licenses/
drwxr-xr-x 2 root root 4096 Jun 21 09:59 logs/
-rw-r--r-- 1 root root 28359 Feb 9 21:25 NOTICE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 site-docs/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# cd logs/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/logs# ll
total 8
drwxr-xr-x 2 root root 4096 Jun 21 09:59 ./
drwxr-xr-x 8 root root 4096 Jun 21 09:59 ../
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/logs# pwd
/usr/local/kafka/kafka_2.13-3.7.0/logs
```


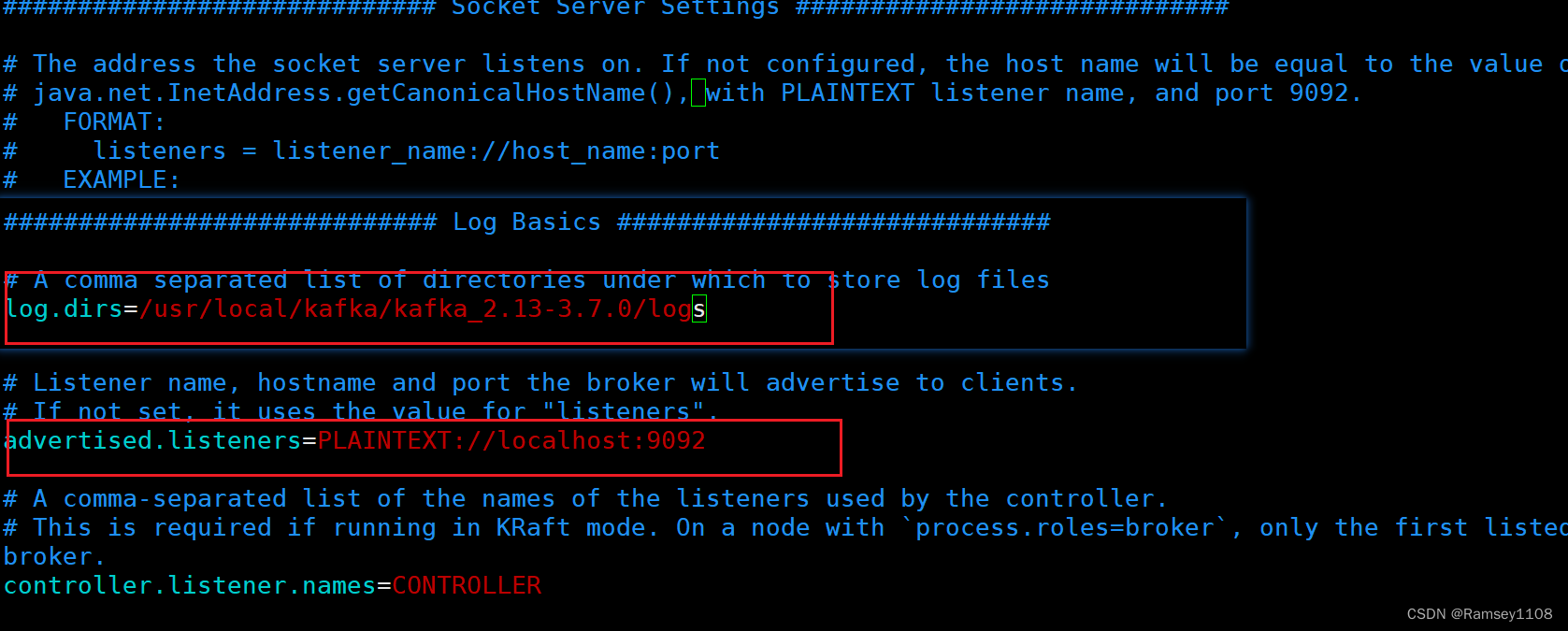

Make a note of this directory's path, because Kafka will store its data (its commit logs) here. In my case the path is /usr/local/kafka/kafka_2.13-3.7.0/logs.
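The directory only has to exist and be writable. What matters is recording its absolute path: a relative path in the config would be resolved against whatever directory Kafka happens to be started from. Here is a minimal sketch that creates the directory and prints the path to note down. The helper name `make_log_dir` is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: create DIR if needed and print its absolute,
# symlink-resolved path (the value to use later in the config files).
make_log_dir() {
  local dir="$1"
  mkdir -p "$dir" && (cd "$dir" && pwd -P)
}
```

Run from the Kafka home directory, `make_log_dir logs` prints the same path that `pwd` showed above.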

3. Modify the default configuration files

Older tutorials online configure Kafka with a dependency on ZooKeeper, so I looked into it and found the following:

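Starting with Apache Kafka 2.8.0, ZooKeeper is no longer required to store and manage metadata. The Kafka community introduced a new Raft-based protocol (Kafka Raft Metadata Quorum, or KRaft) that can replace ZooKeeper for managing cluster metadata.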

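With KRaft, the Kafka cluster manages its own metadata directly. This simplifies deployment and makes Kafka easier to scale and operate. In Kafka 2.8.0, however, the feature was still a preview and was not recommended for production. It matured over later releases and was marked production-ready for new clusters in Kafka 3.3.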

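In short: if you run Kafka 2.8.0 or later and configure it in KRaft mode, Kafka does not depend on ZooKeeper at runtime. If you run a version earlier than 2.8.0, or do not enable KRaft mode, you still need ZooKeeper to coordinate and manage the cluster metadata.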
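Whether a properties file runs a node in KRaft mode depends on its `process.roles` setting. If the setting is present (with the value `broker`, `controller`, or both), the node uses KRaft. If it is absent, the node runs in ZooKeeper mode. Below is a hypothetical helper that reads this value from a file; it is a sketch, not a Kafka tool.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the process.roles value of a Kafka properties
# file, or report that it is unset (i.e. not a KRaft configuration).
kraft_roles() {
  local file="$1" roles
  # Take the last non-commented assignment, as later keys override earlier ones.
  roles="$(sed -n 's/^process\.roles=//p' "$file" | tail -n 1)"
  if [ -n "$roles" ]; then
    printf '%s\n' "$roles"
  else
    echo "unset (not a KRaft configuration)"
    return 1
  fi
}

# Usage from the Kafka home directory:
#   for f in config/kraft/*.properties; do echo "$f: $(kraft_roles "$f")"; done
```

In the 3.7.0 distribution, `server.properties` under `config/kraft` is expected to report `broker,controller` (combined mode), `broker.properties` to report `broker`, and `controller.properties` to report `controller`.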

Looking inside the extracted directory, there is indeed a `kraft` directory under `config`:

```shell
root@hecs-349024:~# cd /usr/local/kafka/kafka_2.13-3.7.0/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# ll
total 120
drwxr-xr-x 8 root root 4096 Jun 21 10:07 ./
drwxr-xr-x 3 root root 4096 Jun 21 09:56 ../
drwxr-xr-x 3 root root 4096 Feb 9 21:34 bin/
drwxr-xr-x 3 root root 4096 Feb 9 21:34 config/
-rw-r--r-- 1 root root 35550 Jun 21 16:07 kafka.log
drwxr-xr-x 2 root root 12288 Jun 21 09:56 libs/
-rw-r--r-- 1 root root 15125 Feb 9 21:25 LICENSE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 licenses/
drwxr-xr-x 3 root root 4096 Jun 21 17:06 logs/
-rw-r--r-- 1 root root 28359 Feb 9 21:25 NOTICE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 site-docs/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# cd config/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/config# ll
total 84
drwxr-xr-x 3 root root 4096 Feb 9 21:34 ./
drwxr-xr-x 8 root root 4096 Jun 21 10:07 ../
-rw-r--r-- 1 root root 906 Feb 9 21:25 connect-console-sink.properties
-rw-r--r-- 1 root root 909 Feb 9 21:25 connect-console-source.properties
-rw-r--r-- 1 root root 5475 Feb 9 21:25 connect-distributed.properties
-rw-r--r-- 1 root root 883 Feb 9 21:25 connect-file-sink.properties
-rw-r--r-- 1 root root 881 Feb 9 21:25 connect-file-source.properties
-rw-r--r-- 1 root root 2063 Feb 9 21:25 connect-log4j.properties
-rw-r--r-- 1 root root 2540 Feb 9 21:25 connect-mirror-maker.properties
-rw-r--r-- 1 root root 2262 Feb 9 21:25 connect-standalone.properties
-rw-r--r-- 1 root root 1221 Feb 9 21:25 consumer.properties
drwxr-xr-x 2 root root 4096 Jun 21 10:03 kraft/
-rw-r--r-- 1 root root 4917 Feb 9 21:25 log4j.properties
-rw-r--r-- 1 root root 2065 Feb 9 21:25 producer.properties
-rw-r--r-- 1 root root 6896 Feb 9 21:25 server.properties
-rw-r--r-- 1 root root 1094 Feb 9 21:25 tools-log4j.properties
-rw-r--r-- 1 root root 1169 Feb 9 21:25 trogdor.conf
-rw-r--r-- 1 root root 1205 Feb 9 21:25 zookeeper.properties
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/config# cd kraft/
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/config/kraft# ll
total 32
drwxr-xr-x 2 root root 4096 Jun 21 10:03 ./
drwxr-xr-x 3 root root 4096 Feb 9 21:34 ../
-rw-r--r-- 1 root root 6111 Jun 21 10:02 broker.properties
-rw-r--r-- 1 root root 5736 Jun 21 10:03 controller.properties
-rw-r--r-- 1 root root 6313 Jun 21 10:01 server.properties
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0/config/kraft# pwd
/usr/local/kafka/kafka_2.13-3.7.0/config/kraft
```


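As the listing shows, `config` contains a `kraft` directory, so we only need to configure the three files inside it: `broker.properties`, `controller.properties`, and `server.properties`.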

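In `broker.properties` and `server.properties`, change two things. First, set the log directory (`log.dirs`) to the custom directory created above. Second, replace `localhost` with the IP address you need.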

In `controller.properties`, only the log directory needs to be set. No address change is required.
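Editing the files by hand works fine. For repeatable setups, you can script the same two changes with `sed`. This is a sketch under stated assumptions. The function name `patch_kraft_config` is hypothetical. It also assumes that the `localhost` to replace is the one in `advertised.listeners`, the address clients use to reach the broker. Other keys, such as `controller.quorum.voters`, also contain `localhost`; this sketch deliberately leaves them unchanged. A `.bak` copy of the original file is kept.

```shell
#!/usr/bin/env bash

# Hypothetical helper: point log.dirs at LOG_DIR and, if HOST is given,
# replace localhost in advertised.listeners with HOST. Keeps FILE.bak.
patch_kraft_config() {
  local file="$1" log_dir="$2" host="${3:-}"
  local -a exprs=(-e "s|^log\.dirs=.*|log.dirs=${log_dir}|")
  if [ -n "$host" ]; then
    # Only touch the advertised.listeners line; other localhost entries stay.
    exprs+=(-e "/^advertised\.listeners=/s|://localhost:|://${host}:|g")
  fi
  # -i.bak (suffix attached) works with both GNU and BSD sed.
  sed -i.bak "${exprs[@]}" "$file"
}
```

For example, run `patch_kraft_config config/kraft/server.properties /usr/local/kafka/kafka_2.13-3.7.0/logs <server-ip>` for the server and broker files. For `controller.properties`, omit the third argument so that only `log.dirs` changes.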

4. Pre-start setup

Generate a KAFKA_CLUSTER_ID and persist it to the storage directory

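KAFKA_CLUSTER_ID is the unique identifier of a Kafka cluster. Every Kafka cluster has its own distinct cluster ID. The ID is generated once, before the cluster starts for the first time, and the `format` step writes it into the storage directory. It stays the same for the lifetime of the cluster.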

```shell
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties
metaPropertiesEnsemble=MetaPropertiesEnsemble(metadataLogDir=Optional.empty, dirs={/usr/local/kafka/kafka_2.13-3.7.0/logs: EMPTY})
Formatting /usr/local/kafka/kafka_2.13-3.7.0/logs with metadata.version 3.7-IV4.
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# echo $KAFKA_CLUSTER_ID
dFAmI9AKQVCd9ZD
```

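The `KAFKA_CLUSTER_ID` shell variable disappears when the session ends, but the format step persists the ID. Each formatted log directory gets a `meta.properties` file containing a `cluster.id=` line. Below is a hypothetical helper that reads the ID back. It also works as a guard against formatting the same directory twice.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the cluster.id recorded in LOG_DIR/meta.properties,
# or fail if the directory has not been formatted yet.
read_cluster_id() {
  local meta="$1/meta.properties"
  [ -f "$meta" ] || { echo "not formatted: $1" >&2; return 1; }
  sed -n 's/^cluster\.id=//p' "$meta"
}

# Usage from the Kafka home directory: format only when needed.
#   LOG_DIR=/usr/local/kafka/kafka_2.13-3.7.0/logs
#   if ! read_cluster_id "$LOG_DIR" >/dev/null 2>&1; then
#     KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"
#     bin/kafka-storage.sh format -t "$KAFKA_CLUSTER_ID" -c config/kraft/server.properties
#   fi
```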

5. Start the service and check the logs

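When starting Kafka, you must pass the configuration file explicitly. Because we are running in KRaft mode, double-check that the path is `config/kraft/server.properties` and not `config/server.properties`.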

```shell
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# nohup bin/kafka-server-start.sh config/kraft/server.properties > kafka.log 2>&1 &
[1] 3508
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# jps
3923 Jps
3508 Kafka
16837 jar
root@hecs-349024:/usr/local/kafka/kafka_2.13-3.7.0# ll
total 120
drwxr-xr-x 8 root root 4096 Jun 21 10:07 ./
drwxr-xr-x 3 root root 4096 Jun 21 09:56 ../
drwxr-xr-x 3 root root 4096 Feb 9 21:34 bin/
drwxr-xr-x 3 root root 4096 Feb 9 21:34 config/
-rw-r--r-- 1 root root 35944 Jun 21 17:07 kafka.log
drwxr-xr-x 2 root root 12288 Jun 21 09:56 libs/
-rw-r--r-- 1 root root 15125 Feb 9 21:25 LICENSE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 licenses/
drwxr-xr-x 3 root root 4096 Jun 21 17:22 logs/
-rw-r--r-- 1 root root 28359 Feb 9 21:25 NOTICE
drwxr-xr-x 2 root root 4096 Feb 9 21:34 site-docs/
```

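`jps` prints one `PID MainClass` line per JVM, and the broker shows up as `Kafka` (PID 3508 in the output above). To script the "is it running?" check, you could filter that output with a minimal sketch like this one. The function name is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: read `jps` output on stdin and print the PID of the
# process whose main class is "Kafka"; exit non-zero if there is none.
kafka_pid_from_jps() {
  awk '$2 == "Kafka" { print $1; found = 1 } END { exit !found }'
}

# Usage:
#   jps | kafka_pid_from_jps || echo "Kafka is not running"
```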

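The listing shows that `kafka.log` has been created. Finally, check the log: if it looks like the following and shows no errors, the installation, deployment, and startup have succeeded.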

```
[2024-06-21 10:07:09,460] INFO [BrokerLifecycleManager id=1] Successfully registered broker 1 with broker epoch 8 (kafka.server.BrokerLifecycleManager)
[2024-06-21 10:07:09,461] INFO [BrokerServer id=1] Waiting for the broker to be unfenced (kafka.server.BrokerServer)
[2024-06-21 10:07:09,462] INFO [BrokerLifecycleManager id=1] The broker is in RECOVERY. (kafka.server.BrokerLifecycleManager)
[2024-06-21 10:07:09,525] INFO [BrokerLifecycleManager id=1] The broker has been unfenced. Transitioning from RECOVERY to RUNNING. (kafka.server.BrokerLifecycleManager)
[2024-06-21 10:07:09,526] INFO [BrokerServer id=1] Finished waiting for the broker to be unfenced (kafka.server.BrokerServer)
[2024-06-21 10:07:09,527] INFO authorizerStart completed for endpoint PLAINTEXT. Endpoint is now READY. (org.apache.kafka.server.network.EndpointReadyFutures)
[2024-06-21 10:07:09,527] INFO [SocketServer listenerType=BROKER, nodeId=1] Enabling request processing. (kafka.network.SocketServer)
```

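Instead of reading the log by eye, you can wait for the line showing that the broker has finished starting: `Transitioning from RECOVERY to RUNNING` in the output above. This is a sketch under two assumptions. First, the log goes to `kafka.log`, as in the `nohup` command. Second, the message text matches the version you run; it is taken from the 3.7.0 log above and may differ in other releases. The function name is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: poll LOGFILE until the broker reports it is RUNNING,
# giving up after TIMEOUT seconds (default 60).
wait_for_broker() {
  local logfile="$1" timeout="${2:-60}" waited=0
  until grep -q 'Transitioning from RECOVERY to RUNNING' "$logfile" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "broker not RUNNING after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "broker is RUNNING"
}

# Usage, right after the nohup start command:
#   wait_for_broker kafka.log 60
```

The `>` in the `nohup` command truncates `kafka.log` on every start, so the check only sees messages from the current run.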

Summary

That is my record of installing Kafka. I hope it helps!