Langchain Streamlit AI Series: Building a Multi-PDF RAG Chatbot That Reads, Processes, and Interacts with PDF Data Through Conversational AI (Tutorial with Source Code)

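Introduction

Chatting with large PDFs is cool. You can converse with your notes, books, documents, and more. This post walks you through building a multi-PDF RAG web application with Streamlit that reads, processes, and interacts with PDF data through a conversational AI chatbot. Below is a step-by-step explanation of how the application works, in plain language.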

Series articles

- "LangChain + Streamlit: The Tech Stack You Need to Create Language-Model-Based Demos in Minutes"

- "Building a Private Retrieval-Augmented Generation (RAG) System with a Local Llama 2 Model and a Vector Database Using LangChain"

- "How to Build a Large Language Model on Your Own Computer and Use LangChain (Instead of OpenAI) to Answer Questions About Your Documents"

- "Building a 100% Offline Local Chatbot on Your PC: A Large Language Model Under 1 GB with Langchain, LLama, and Streamlit (Supports Apple macOS M1/M2 and Windows 11)"

- "How to Use GPT4All and Langchain to Work with Your Documents"

- "LangChain Tutorial Series: Using LangChain to Turn Conversations into Directed Graphs and Build a Chatbot That Learns Key Information About New Prospects"

- "Langchain Streamlit AI Series: Building an AI Financial Analyst with Langchain, OpenAI Function Calling, and Streamlit"

- "Langchain Streamlit AI Series: LangChain + Streamlit + Llama, Bringing Conversational AI to Your Local Machine (Tutorial with Complete Source Code)"

- "Langchain Streamlit AI Series: Building a Multi-PDF RAG Chatbot That Reads, Processes, and Interacts with PDF Data Through Conversational AI (Tutorial with Source Code)"

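Setting up the necessary tools

The application starts by importing several powerful libraries:

- Streamlit: creates the web interface.

- PyPDF2: reads PDF files.

- Langchain: a set of tools for natural language processing and building conversational AI.

- FAISS: a library for efficient vector similarity search, used to find information quickly in large datasets.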

    import streamlit as st
    from PyPDF2 import PdfReader
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_community.embeddings.spacy_embeddings import SpacyEmbeddings
    from langchain_community.vectorstores import FAISS
    from langchain.tools.retriever import create_retriever_tool
    from dotenv import load_dotenv
    from langchain_anthropic import ChatAnthropic
    from langchain_openai import ChatOpenAI, OpenAIEmbeddings
    from langchain.agents import AgentExecutor, create_tool_calling_agent

    import os
    os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
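
Before running the app, it can help to confirm that these libraries are installed. The following is a minimal sketch using only the standard library; the helper name missing_modules and the REQUIRED_MODULES list are illustrative assumptions, not part of the original tutorial. The list holds the top-level module names the imports above rely on, directly or indirectly, and the corresponding pip package names may differ (for example, the faiss module is usually provided by faiss-cpu).

```python
from importlib.util import find_spec

# Top-level module names the app depends on (assumed from the imports above).
# spaCy and faiss are pulled in indirectly by SpacyEmbeddings and FAISS.
REQUIRED_MODULES = [
    "streamlit", "PyPDF2", "langchain", "langchain_core",
    "langchain_community", "langchain_openai", "langchain_anthropic",
    "faiss", "spacy", "dotenv",
]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

Note that SpacyEmbeddings also needs the en_core_web_sm model itself to be downloaded, which this check does not cover.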

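Reading and processing PDF files

The first major function in our application reads PDF files:

- PDF reader: when a user uploads one or more PDF files, the application reads every page of each document and extracts the text, merging it into one continuous string.

Once the text is extracted, it is split into manageable chunks:

- Text splitter: using the Langchain library, the text is split into chunks of up to 1000 characters, with a 200-character overlap between consecutive chunks. Splitting makes the text easier to process and search.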

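    def pdf_read(pdf_doc):
        """Extract the text of every page in every uploaded PDF as one string."""
        text = ""
        for pdf in pdf_doc:
            pdf_reader = PdfReader(pdf)
            for page in pdf_reader.pages:
                # Guard against pages that yield no text (e.g. scanned images)
                text += page.extract_text() or ""
        return text


    def get_chunks(text):
        """Split the text into overlapping chunks of up to 1000 characters."""
        text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
        chunks = text_splitter.split_text(text)
        return chunks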
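
To make the roles of chunk_size and chunk_overlap concrete, here is a simplified sketch of fixed-window chunking with overlap. It is not how RecursiveCharacterTextSplitter works internally: that splitter first tries to break on natural separators such as paragraphs and lines, so its output will differ. The function name split_with_overlap is a hypothetical helper introduced only for illustration.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current window already reaches the end of the text
    return chunks


# Small numbers make the overlap visible: each chunk repeats
# the last two characters of the previous one.
print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2))
# -> ['abcd', 'cdef', 'efgh', 'ghij']
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.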

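Creating a searchable text database with embeddings

To make the text searchable, the application converts the text chunks into vector representations:

- Vector store: the application embeds each text chunk with spaCy (SpacyEmbeddings), indexes the resulting vectors with FAISS, and saves the index locally. This conversion is essential because it lets the system search the text quickly and efficiently.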

    embeddings = SpacyEmbeddings(model_name="en_core_web_sm")

    def vector_store(text_chunks):
        vector_store = FAISS.from_texts(text_chunks, embedding=embeddings)
        vector_store.save_local("faiss_db")
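
Conceptually, a vector search embeds the query and returns the chunks whose vectors lie closest to it. The sketch below shows that idea with a brute-force scan in plain Python. It is a toy under stated assumptions: toy_embed is a hypothetical stand-in for a real embedding model (it only counts words from a fixed vocabulary), and the sketch assumes Euclidean (L2) distance, which is a common choice for flat FAISS indexes. FAISS itself is far more efficient than this loop.

```python
import math
from collections import Counter


def toy_embed(text, vocabulary):
    """Hypothetical embedding: word counts over a fixed vocabulary."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_chunks(query, chunks, vocabulary, k=2):
    """Return the k chunks whose vectors are closest to the query vector."""
    query_vec = toy_embed(query, vocabulary)
    scored = [(l2_distance(query_vec, toy_embed(c, vocabulary)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0])  # smallest distance first
    return [chunk for _, chunk in scored[:k]]


vocabulary = ["invoice", "total", "cat", "sleeps"]
chunks = [
    "the invoice total is due",
    "the cat sleeps all day",
    "invoice number and total",
]
print(nearest_chunks("invoice total", chunks, vocabulary, k=2))
# -> ['the invoice total is due', 'invoice number and total']
```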

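Setting up the conversational AI

The core of the application is the conversational AI, which uses OpenAI's models:

- AI configuration: the application sets up the conversational AI with OpenAI's GPT model (gpt-3.5-turbo in the code below). The AI is designed to answer questions based on the PDF content it has processed.

- Conversation chain: the AI uses a set of prompts to understand the context and provide accurate responses to user queries. If the answer to a question is not in the text, the system prompt instructs the AI to reply "answer is not available in the context", so that users do not receive wrong information.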

    def get_conversational_chain(tools, ques):
        # Supply your OpenAI API key here
        llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0, api_key="")
        # The prompt messages are elided in this excerpt; the complete code lists them
        prompt = ChatPromptTemplate.from_messages([...])
        tool = [tools]
        agent = create_tool_calling_agent(llm, tool, prompt)
        agent_executor = AgentExecutor(agent=agent, tools=tool, verbose=True)
        response = agent_executor.invoke({"input": ques})
        print(response)
        st.write("Reply: ", response['output'])


    def user_input(user_question):
        # Load the FAISS index that vector_store() saved to disk
        new_db = FAISS.load_local("faiss_db", embeddings, allow_dangerous_deserialization=True)
        retriever = new_db.as_retriever()
        # Wrap the retriever as a tool the agent can call
        retrieval_chain = create_retriever_tool(retriever, "pdf_extractor", "This tool is to give answer to queries from the pdf")
        get_conversational_chain(retrieval_chain, user_question)
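
The code above passes an empty string as api_key, so it needs a real key before it can call OpenAI. One common alternative to hard-coding the key is to read it from an environment variable. The sketch below shows this with the standard library only; get_api_key is a hypothetical helper, and the variable name OPENAI_API_KEY is an assumption about how you choose to store the key.

```python
import os


def get_api_key(env_var="OPENAI_API_KEY"):
    """Hypothetical helper: read the key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before starting the app.")
    return key
```

With this in place, the model could be created with api_key=get_api_key(). If the key lives in a .env file, calling load_dotenv() (already imported by the app) before get_api_key() would load it into the environment first.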

User interaction

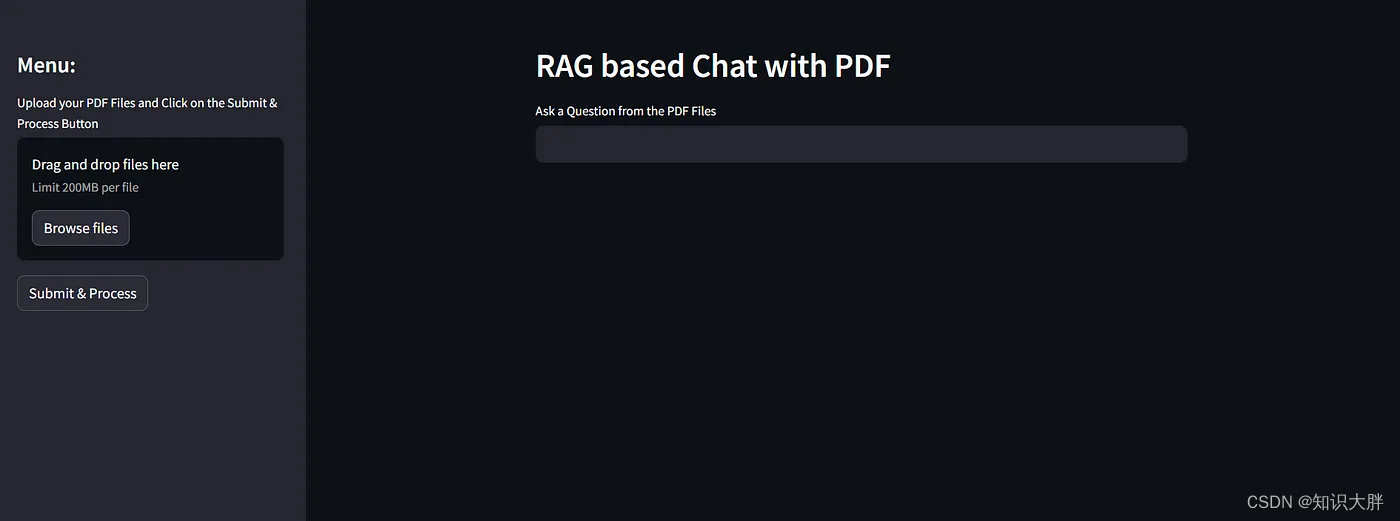

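With the backend ready, the application uses Streamlit to create a user-friendly interface:

- User interface: users see a simple text input box where they can type questions about the PDF content. The application then displays the AI's response directly on the web page.

- File upload and processing: users can upload new PDF files at any time from the sidebar. After they click "Submit & Process", the application processes the files and saves a database built from the new text for the AI to search.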

    def main():
        st.set_page_config("Chat PDF")
        st.header("RAG based Chat with PDF")

        user_question = st.text_input("Ask a Question from the PDF Files")

        if user_question:
            user_input(user_question)

        with st.sidebar:
            pdf_doc = st.file_uploader("Upload your PDF Files and Click on the Submit & Process Button", accept_multiple_files=True)
            if st.button("Submit & Process"):
                with st.spinner("Processing..."):
                    raw_text = pdf_read(pdf_doc)
                    text_chunks = get_chunks(raw_text)
                    vector_store(text_chunks)
                    st.success("Done")
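
In this flow, a question typed before any PDF has been processed reaches FAISS.load_local while the faiss_db folder does not exist yet. A small guard can check for the saved index first. The sketch below is an illustration, not part of the original app: index_exists is a hypothetical helper, and it assumes that save_local writes index.faiss and index.pkl into the folder, which matches LangChain's default index name as far as we know.

```python
import os


def index_exists(folder="faiss_db", index_name="index"):
    """Return True if both files of a saved FAISS index are present in the folder."""
    faiss_file = os.path.join(folder, f"{index_name}.faiss")
    pkl_file = os.path.join(folder, f"{index_name}.pkl")
    return os.path.isfile(faiss_file) and os.path.isfile(pkl_file)
```

In main(), the question handler could then call user_input(user_question) only when index_exists() is true, and otherwise show a hint such as st.warning("Upload and process a PDF first.").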

Conclusion

Complete code

    import streamlit as st
    from PyPDF2 import PdfReader
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_community.embeddings.spacy_embeddings import SpacyEmbeddings
    from langchain_community.vectorstores import FAISS
    from langchain.tools.retriever import create_retriever_tool
    from dotenv import load_dotenv
    from langchain_anthropic import ChatAnthropic
    from langchain_openai import ChatOpenAI, OpenAIEmbeddings
    from langchain.agents import AgentExecutor, create_tool_calling_agent

    import os
    os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

    embeddings = SpacyEmbeddings(model_name="en_core_web_sm")


    def pdf_read(pdf_doc):
        text = ""
        for pdf in pdf_doc:
            pdf_reader = PdfReader(pdf)
            for page in pdf_reader.pages:
                # Guard against pages that yield no text (e.g. scanned images)
                text += page.extract_text() or ""
        return text


    def get_chunks(text):
        text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
        chunks = text_splitter.split_text(text)
        return chunks


    def vector_store(text_chunks):
        vector_store = FAISS.from_texts(text_chunks, embedding=embeddings)
        vector_store.save_local("faiss_db")


    def get_conversational_chain(tools, ques):
        # os.environ["ANTHROPIC_API_KEY"] = os.getenv("ANTHROPIC_API_KEY")
        # llm = ChatAnthropic(model="claude-3-sonnet-20240229", temperature=0, api_key=os.getenv("ANTHROPIC_API_KEY"), verbose=True)
        # Supply your OpenAI API key here
        llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0, api_key="")
        prompt = ChatPromptTemplate.from_messages(
            [
                (
                    "system",
                    """You are a helpful assistant.
    Answer the question as detailed as possible from the provided context, make sure to provide all the details, if the answer is not in provided context just say, "answer is not available in the context", don't provide the wrong answer""",
                ),
                ("placeholder", "{chat_history}"),
                ("human", "{input}"),
                ("placeholder", "{agent_scratchpad}"),
            ]
        )
        tool = [tools]
        agent = create_tool_calling_agent(llm, tool, prompt)
        agent_executor = AgentExecutor(agent=agent, tools=tool, verbose=True)
        response = agent_executor.invoke({"input": ques})
        print(response)
        st.write("Reply: ", response['output'])


    def user_input(user_question):
        # Load the FAISS index that vector_store() saved to disk
        new_db = FAISS.load_local("faiss_db", embeddings, allow_dangerous_deserialization=True)
        retriever = new_db.as_retriever()
        retrieval_chain = create_retriever_tool(retriever, "pdf_extractor", "This tool is to give answer to queries from the pdf")
        get_conversational_chain(retrieval_chain, user_question)


    def main():
        st.set_page_config("Chat PDF")
        st.header("RAG based Chat with PDF")

        user_question = st.text_input("Ask a Question from the PDF Files")

        if user_question:
            user_input(user_question)

        with st.sidebar:
            st.title("Menu:")
            pdf_doc = st.file_uploader("Upload your PDF Files and Click on the Submit & Process Button", accept_multiple_files=True)
            if st.button("Submit & Process"):
                with st.spinner("Processing..."):
                    raw_text = pdf_read(pdf_doc)
                    text_chunks = get_chunks(raw_text)
                    vector_store(text_chunks)
                    st.success("Done")


    if __name__ == "__main__":
        main()

Save the application as app.py, then run it with:

    streamlit run app.py

Output: