
Style Transfer Network Modification Workflow (Personal Notes)

Author: 我家自动化 | 2024-06-12 20:11:13


1. AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer (ICCV 2021)

- Download vgg_normalised.pth.

- Start the visdom server:

python -m visdom.server

- In train_adaattn.sh, set content_path, style_path, and image_encoder_path to the training content images, the training style images, and the vgg_normalised.pth file, respectively.

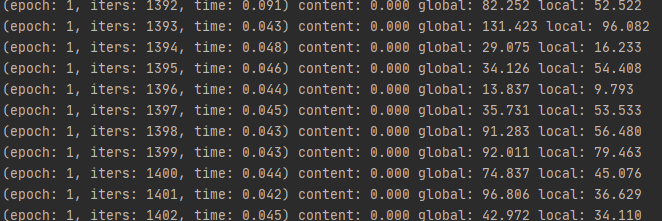

python train.py --content_path F:\RefDayDataset\KAIST_256\trainA --style_path F:\RefDayDataset\KAIST_256\trainB --name AdaAttN_kaist --model adaattn --dataset_mode unaligned --no_dropout --load_size 286 --crop_size 256 --image_encoder_path C:\Users\64883\Desktop\AdaAttN-main\models\vgg_normalised.pth --gpu_ids 0 --batch_size 1 --n_epochs 2 --n_epochs_decay 3 --display_freq 1 --display_port 8097 --display_env AdaAttN --lambda_local 3 --lambda_global 10 --lambda_content 0 --shallow_layer --skip_connection_3

Issue 1

OSError: [WinError 1455] The paging file is too small for this operation to complete. Error loading "D:\Anaconda3\envs\paddlepaddle\lib\site-packages\torch\lib\cudnn_cnn_infer64_8.dll" or one of its dependencies.

self._popen = self._Popen(self)

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\context.py", line 223, in _Popen

return _default_context.get_context().Process._Popen(process_obj)

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\context.py", line 322, in _Popen

return Popen(process_obj)

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\popen_spawn_win32.py", line 89, in __init__

reduction.dump(process_obj, to_child)

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\reduction.py", line 60, in dump

ForkingPickler(file, protocol).dump(obj)

BrokenPipeError: [Errno 32] Broken pipe

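Fix: on Windows, each data-loading worker is a new process that imports torch and loads the CUDA DLLs again, and this can exhaust the paging file. Set the default number of loading threads to 0 so that data is loaded in the main process:

parser.add_argument('--num_threads', default=4, type=int, help='# threads for loading data')

Change it to:

parser.add_argument('--num_threads', default=0, type=int, help='# threads for loading data')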
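You do not have to edit the default, because --num_threads is an ordinary argparse option and a value given on the command line overrides it. The stand-alone sketch below rebuilds only that one option to show the behaviour. It assumes AdaAttN's option classes pass the command line to argparse in the usual way; the real parser has many more options.

```python
import argparse


def build_parser():
    """Rebuild only the --num_threads option quoted above (default left at 4)."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--num_threads', default=4, type=int, help='# threads for loading data')
    return parser


if __name__ == "__main__":
    # Same effect as appending "--num_threads 0" to the python train.py ... command.
    opt = build_parser().parse_args(["--num_threads", "0"])
    print(opt.num_threads)
```

In practice, this means adding --num_threads 0 to the training command instead of changing the source.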

Issue 2

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 4.08 GiB (GPU 0; 8.00 GiB total capacity; 134.76 MiB already allocated; 4.94 GiB free; 748.00 MiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Fix: lower the resolution, for example with smaller --load_size and --crop_size values.
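Resolution can matter a great deal for attention-based models. An attention map that relates every position of one feature map to every position of another stores (H·W)² scores, so its memory grows with the fourth power of the image side length. The sketch below is only a back-of-the-envelope estimate under that assumption: one dense float32 map and hypothetical feature-map sizes. It does not measure AdaAttN's actual memory use.

```python
def attention_map_bytes(height, width, batch_size=1, bytes_per_elem=4):
    """Bytes for a dense (H*W) x (H*W) score matrix, float32 by default."""
    positions = height * width  # one query/key per feature-map location
    return batch_size * positions * positions * bytes_per_elem


def to_mib(num_bytes):
    return num_bytes / 2**20


if __name__ == "__main__":
    # Hypothetical feature-map sizes: the second halves the side length of the first.
    for side in (64, 32):
        print(f"{side}x{side} feature map -> {to_mib(attention_map_bytes(side, side)):.1f} MiB")
```

Halving the side length from 64 to 32 shrinks this estimate from 64 MiB to 4 MiB, a 16x saving.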

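Issue 3: results are displayed too frequently

Fix: --display_freq sets how often results are refreshed in visdom, and a value of 1 refreshes them at every step. Raise it:

--display_freq 1

Change it to:

--display_freq 1000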
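This sketch shows how the setting changes the number of refreshes. It assumes AdaAttN keeps the CycleGAN/pix2pix-style training loop, in which a counter grows by the batch size at every step and results are displayed whenever the counter is divisible by display_freq. The epoch size of 5000 images is hypothetical.

```python
def display_steps(num_images, batch_size, display_freq):
    """Counter values at which results would be pushed to visdom in one epoch.

    Assumes total_iters += batch_size per step and a display whenever
    total_iters % display_freq == 0.
    """
    steps = []
    total_iters = 0
    while total_iters < num_images:
        total_iters += batch_size
        if total_iters % display_freq == 0:
            steps.append(total_iters)
    return steps


if __name__ == "__main__":
    # Hypothetical epoch of 5000 content images with --batch_size 1.
    for freq in (1, 1000):
        print(f"--display_freq {freq}: {len(display_steps(5000, 1, freq))} refreshes per epoch")
```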

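Issue 4: the content loss is always 0

Fix: the content loss is scaled by --lambda_content, so a weight of 0 switches it off:

--lambda_content 0

Change it to:

--lambda_content 10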
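This minimal sketch shows why a zero weight silences the term. It assumes the training objective is a weighted sum of the local, global, and content losses, scaled by --lambda_local, --lambda_global, and --lambda_content. The raw loss values are made up.

```python
def weighted_total(losses, weights):
    """Scale each raw loss term by its lambda and add them up."""
    weighted = {name: weights[name] * value for name, value in losses.items()}
    return sum(weighted.values()), weighted


if __name__ == "__main__":
    raw = {"local": 0.5, "global": 0.25, "content": 2.0}  # made-up raw loss values
    for lam_content in (0, 10):
        # Weights taken from the training command (--lambda_local 3 --lambda_global 10).
        weights = {"local": 3, "global": 10, "content": lam_content}
        total, parts = weighted_total(raw, weights)
        print(lam_content, parts["content"], total)
```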

Issue 5: too few training epochs

Fix:

--n_epochs 2 --n_epochs_decay 3

Change it to:

--n_epochs 100 --n_epochs_decay 100
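AdaAttN's options (--n_epochs_decay, --dataset_mode unaligned, --display_freq) match the CycleGAN/pix2pix code base. Assuming it also keeps that code base's linear learning-rate policy, training lasts n_epochs + n_epochs_decay epochs, which is only 5 epochs with the original values. The rate stays constant for n_epochs and then falls linearly towards zero over n_epochs_decay. Here is a stand-alone sketch of that rule:

```python
def linear_lr_factor(epoch, n_epochs, n_epochs_decay, epoch_count=1):
    """Learning-rate multiplier for a 0-based epoch index.

    Modelled on the 'linear' policy of the CycleGAN/pix2pix code base: constant
    for the first n_epochs, then decaying linearly towards zero over n_epochs_decay.
    """
    return 1.0 - max(0, epoch + epoch_count - n_epochs) / float(n_epochs_decay + 1)


if __name__ == "__main__":
    n_epochs, n_epochs_decay = 100, 100
    print("total epochs:", n_epochs + n_epochs_decay)
    for epoch in (0, 99, 100, 150, 199):
        print(epoch, round(linear_lr_factor(epoch, n_epochs, n_epochs_decay), 4))
    # With PyTorch this would plug into:
    # torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda e: linear_lr_factor(e, 100, 100))
```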

- Adjust the test command:

python test.py --content_path /home/sys120-1/cy/ref_based/FLIR/testA --style_path /home/sys120-1/cy/ref_based/FLIR/testB --name AdaAttN_flir --model adaattn --dataset_mode unaligned --load_size 286 --crop_size 256 --image_encoder_path /home/sys120-1/cy/ref_based/AdaAttN-main/models/vgg_normalised.pth --gpu_ids 1 --skip_connection_3 --shallow_layer

Issue 1: only 50 images are processed

--num_test caps the number of test images. Edit test_options.py:

parser.add_argument('--num_test', type=int, default=50, help='how many test images to run')

Change it to:

parser.add_argument('--num_test', type=int, default=2000, help='how many test images to run')
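Instead of guessing a large default, you can set --num_test from the number of images actually present in the test folder. The helper below is a hypothetical sketch, and its list of image extensions is an assumption.

```python
from pathlib import Path

# Assumed set of image extensions; extend it if the dataset uses others.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def count_images(folder):
    """Number of image files directly inside folder (subfolders are ignored)."""
    return sum(
        1 for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
```

For example, count the images in testA and pass the result to test.py with --num_test.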

2. ArtFlow: Unbiased Image Style Transfer via Reversible Neural Flows (CVPR 2021)

- Download the VGG model, create a models folder, and move the model into it.

- Adjust the training command:

Create an experiments folder first, then run:

python -u train.py --content_dir F:/RefDayDataset/KAIST_256/trainA --style_dir F:/RefDayDataset/KAIST_256/trainB --save_dir ./experiments/ArtFlow-AdaIN --n_flow 8 --n_block 2 --batch_size 4 --operator adain

Issue 1

Traceback (most recent call last):

File "train.py", line 152, in <module>

content_dataset = FlatFolderDataset(args.content_dir, content_tf)

File "train.py", line 37, in __init__

self.paths = os.listdir(self.root)

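OSError: [WinError 123] The filename, directory name, or volume label syntax is incorrect: "'F:\\RefDayDataset\\KAIST_256\\trainA'"

Fix: remove the single quotes around the paths. Windows cmd does not treat single quotes as quoting characters, so they are passed to the script as part of the path.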
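If you would rather make the script tolerate quoted paths, a hypothetical helper (not part of ArtFlow) can strip one pair of matching quotes before the directory is listed:

```python
import os


def clean_path_arg(raw):
    """Remove one pair of matching surrounding quotes, then normalise separators."""
    if len(raw) >= 2 and raw[0] == raw[-1] and raw[0] in "'\"":
        raw = raw[1:-1]
    return os.path.normpath(raw)


if __name__ == "__main__":
    # What cmd.exe passes through when the path is wrapped in single quotes.
    print(clean_path_arg("'F:/RefDayDataset/KAIST_256/trainA'"))
```

For example, the dataset line from the traceback would become FlatFolderDataset(clean_path_arg(args.content_dir), content_tf).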

Issue 2

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.

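Fix: on Windows, data-loading workers are started with spawn, which re-imports train.py. The script runs its training code at module level instead of under an if __name__ == '__main__': guard. Setting the default number of loading threads to 0 avoids worker processes altogether:

parser.add_argument('--n_threads', type=int, default=8)

Change it to:

parser.add_argument('--n_threads', type=int, default=0)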
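Setting the worker count to 0 gives up loading speed for simplicity. The other remedy the error message suggests is the __main__ guard. Below is a minimal, torch-free sketch of that structure. In it, load_item is a hypothetical stand-in for reading one image, and concurrent.futures plays the role of the DataLoader workers.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def load_item(path):
    """Hypothetical stand-in for reading and transforming one image."""
    return len(path)


def load_all(paths, num_workers=0):
    """Load serially when num_workers == 0, otherwise in worker processes."""
    if num_workers == 0:
        # Same idea as --n_threads 0: no child process is ever started.
        return [load_item(p) for p in paths]
    # With the "spawn" start method (the Windows default) every worker re-imports
    # this module, so whatever starts workers must sit behind the guard below.
    with ProcessPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(load_item, paths))


def main():
    # Hypothetical knob: NUM_WORKERS=2 enables worker processes safely.
    workers = int(os.environ.get("NUM_WORKERS", "0"))
    print(load_all(["a.png", "bb.png"], num_workers=workers))


if __name__ == "__main__":
    main()
```

For ArtFlow, this would mean moving the module-level training code of train.py into a main() function that is called under the guard.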

Issue 3

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 36.00 MiB (GPU 0; 8.00 GiB total capacity; 7.42 GiB already allocated; 0 bytes free; 7.47 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Fix: reduce the batch size and/or the resolution:

--batch_size 4

Change it to:

--batch_size 1
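You can check the hint in the OOM message ("If reserved memory is >> allocated memory ...") mechanically. The hypothetical helper below pulls both figures out of the message and returns their ratio. A ratio close to 1 suggests the memory is really in use rather than fragmented, so a smaller batch or resolution is the fix and max_split_size_mb is not.

```python
import re

# Conversion factors to GiB for the units PyTorch prints.
_TO_GIB = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0}
_PATTERN = re.compile(
    r"([\d.]+) (KiB|MiB|GiB) already allocated.*?([\d.]+) (KiB|MiB|GiB) reserved"
)


def reserved_vs_allocated(message):
    """Return (allocated_gib, reserved_gib, reserved / allocated) from an OOM message."""
    match = _PATTERN.search(message)
    if match is None:
        raise ValueError("no 'already allocated' / 'reserved' figures in message")
    allocated = float(match.group(1)) * _TO_GIB[match.group(2)]
    reserved = float(match.group(3)) * _TO_GIB[match.group(4)]
    return allocated, reserved, reserved / allocated


if __name__ == "__main__":
    msg = ("Tried to allocate 36.00 MiB (GPU 0; 8.00 GiB total capacity; 7.42 GiB already "
           "allocated; 0 bytes free; 7.47 GiB reserved in total by PyTorch)")
    print(reserved_vs_allocated(msg))
```

For the message above, the ratio is about 1.01.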

3. IEST: Artistic Style Transfer with Internal-external Learning and Contrastive Learning (NeurIPS 2021)

- Download the VGG model and move it into the models folder.

- Adjust the training command:

python train.py --content_dir F:/RefDayDataset/KAIST_256/trainA --style_dir F:/RefDayDataset/KAIST_256/trainB

Issue 1

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.

Fix (same cause as ArtFlow Issue 2):

parser.add_argument('--n_threads', type=int, default=16)

Change it to:

parser.add_argument('--n_threads', type=int, default=0)

Issue 2

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 36.00 MiB (GPU 0; 8.00 GiB total capacity; 7.42 GiB already allocated; 0 bytes free; 7.47 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Fix: reduce the batch size and/or the resolution:

parser.add_argument('--batch_size', type=int, default=12)

Change it to:

parser.add_argument('--batch_size', type=int, default=2)

Issue 3

RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Fix: try running on a different GPU, or change num_workers.
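You can apply both suggestions without touching the training code by setting environment variables before PyTorch initialises CUDA. CUDA_VISIBLE_DEVICES restricts which physical GPU the process sees, and that GPU then appears as cuda:0. CUDA_LAUNCH_BLOCKING=1, as the error message recommends, makes kernel launches synchronous so the traceback points at the operation that actually failed. This is a minimal sketch, and the GPU index is a hypothetical choice.

```python
import os


def configure_cuda_debugging(gpu_index, blocking=True):
    """Set CUDA-related environment variables; call before torch touches the GPU."""
    # Expose only one physical GPU; inside the process it is addressed as cuda:0.
    os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    # Synchronous kernel launches make the traceback point at the failing operation.
    if blocking:
        os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
    else:
        os.environ.pop("CUDA_LAUNCH_BLOCKING", None)


if __name__ == "__main__":
    configure_cuda_debugging(1)  # hypothetical: try the second card
    # import torch and start training only after this point
```

Call this at the very top of train.py, or set the same two variables in the shell, so they take effect before CUDA is initialised.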

4. CAST: Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning (SIGGRAPH 2022)

- Download the pretrained style classification model and the pretrained content encoder.

- Adjust the training command:

python train.py --dataroot F:/RefDayDataset/KAIST_256 --name cast

Issue 1

File "<frozen importlib._bootstrap>", line 1006, in _gcd_import

File "<frozen importlib._bootstrap>", line 983, in _find_and_load

File "<frozen importlib._bootstrap>", line 967, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 677, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 728, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "C:\Users\64883\Desktop\CAST_pytorch-main\models\cast_model.py", line 11, in <module>

import kornia.augmentation as K

ModuleNotFoundError: No module named 'kornia'

Fix: install the missing package:

pip install kornia

Issue 2

requests.exceptions.ConnectionError: HTTPConnectionPool(host='localhost', port=8097): Max retries exceeded with url: /env/main (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x00000230810E0588>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it.'))

[WinError 10061] No connection could be made because the target machine actively refused it.

on_close() takes 1 positional argument but 3 were given

Visdom python client failed to establish socket to get messages from the server. This feature is optional and can be disabled by initializing Visdom with `use_incoming_socket=False`, which will prevent waiting for this request to timeout.

Traceback (most recent call last):

File "D:\Anaconda3\envs\paddlepaddle\lib\site-packages\urllib3\util\connection.py", line 85, in create_connection

sock.connect(sa)

ConnectionRefusedError: [WinError 10061] No connection could be made because the target machine actively refused it.

During handling of the above exception, another exception occurred:

Fix: the visdom server is not running. Start it before training, because the client connects to localhost:8097:

python -m visdom.server
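To fail fast instead of waiting for connection retries, a training script can first check that something is listening on the visdom port. The helper below is a hypothetical sketch that uses only the standard library, and localhost:8097 matches the address in the error message.

```python
import socket


def server_is_listening(host="localhost", port=8097, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # ConnectionRefusedError (WinError 10061) is a subclass
        return False


if __name__ == "__main__":
    if not server_is_listening():
        print("visdom does not seem to be running; start it with: python -m visdom.server")
```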

Issue 3

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\spawn.py", line 105, in spawn_main

exitcode = _main(fd)

File "D:\Anaconda3\envs\paddlepaddle\lib\multiprocessing\spawn.py", line 115, in _main

self = reduction.pickle.load(from_parent)

EOFError: Ran out of input

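Fix: the error is raised in a child process started with spawn (a data-loading worker). As with AdaAttN, set the default number of loading threads to 0:

parser.add_argument('--num_threads', default=4, type=int, help='# threads for loading data')

Change it to:

parser.add_argument('--num_threads', default=0, type=int, help='# threads for loading data')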

Issue 4

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 4.08 GiB (GPU 0; 8.00 GiB total capacity; 751.44 MiB already allocated; 4.37 GiB free; 1.30 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Fix: reduce the batch size and/or the resolution.

5. StyTr2: Image Style Transfer with Transformers (CVPR 2022)

- Download the VGG model and move it into the models folder.

- Adjust the training command:

python train.py --content_dir F:/RefDayDataset/KAIST_256/trainA --style_dir F:/RefDayDataset/KAIST_256/trainB --save_dir experiments/ --batch_size 1

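Issue 1

ImportError: cannot import name '_new_empty_tensor' from 'torchvision.ops' (D:\python\lib\site-packages\torchvision\ops\__init__.py)

Fix: the check below reads only the first three characters of the version string. Torchvision 0.10 and later therefore parse as 0.1, and the legacy import is attempted even though the installed torchvision no longer provides it. Change: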

import torchvision

if float(torchvision.__version__[:3]) < 0.7:

    from torchvision.ops import _new_empty_tensor

    from torchvision.ops.misc import _output_size

Change it to:

import torchvision

if float(torchvision.__version__[2:4]) < 7:

    from torchvision.ops import _new_empty_tensor

    from torchvision.ops.misc import _output_size
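A version check that does not depend on string slicing compares (major, minor) as integers. This is a minimal sketch. version_tuple and needs_legacy_ops are hypothetical helper names, and the 0.7 threshold is the one used in the original check.

```python
import re


def version_tuple(version):
    """Leading (major, minor) of a version string such as '0.15.2+cu118'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def needs_legacy_ops(version):
    """True only for torchvision older than 0.7, where the legacy imports apply."""
    return version_tuple(version) < (0, 7)


# Usage in place of the slicing check (requires torchvision):
# import torchvision
# if needs_legacy_ops(torchvision.__version__):
#     from torchvision.ops import _new_empty_tensor
#     from torchvision.ops.misc import _output_size

if __name__ == "__main__":
    for v in ("0.6.1", "0.8.1", "0.10.0", "0.15.2+cu118"):
        # Original slice check next to the tuple-based check.
        print(v, float(v[:3]) < 0.7, needs_legacy_ops(v))
```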

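Issue 2

ImportError: cannot import name 'container_abcs' from 'torch._six' (D:\Anaconda3\envs\paddlepaddle\lib\site-packages\torch\_six.py)

Fix: newer PyTorch releases no longer provide container_abcs in torch._six. Import the standard-library module under the same name instead:

from torch._six import container_abcs

Change it to:

import collections.abc as container_abcs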

Issue 3

File "D:\Anaconda3\envs\paddlepaddle\lib\site-packages\torch\_utils.py", line 577, in <lambda>

return [_get_device_attr(lambda m: m.get_device_properties(i)) for i in device_ids]

File "D:\Anaconda3\envs\paddlepaddle\lib\site-packages\torch\cuda\__init__.py", line 374, in get_device_properties

raise AssertionError("Invalid device id")

AssertionError: Invalid device id

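Fix: train.py wraps the network in nn.DataParallel with device_ids=[0,1], but this machine has only one GPU, so device 1 is invalid. Comment out line 116 of train.py:

# network = nn.DataParallel(network, device_ids=[0,1])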
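Commenting the line out hard-codes single-GPU training. An alternative is to keep only the GPU ids that exist and to wrap the model only when more than one remains. This is a minimal sketch with hypothetical helper names. In the commented usage, torch.cuda.device_count() and nn.DataParallel are the real PyTorch calls.

```python
def usable_device_ids(requested, available):
    """Keep only the requested GPU ids that exist on this machine."""
    return [i for i in requested if 0 <= i < available]


def should_use_data_parallel(device_ids):
    """nn.DataParallel only helps when more than one GPU is actually available."""
    return len(device_ids) > 1


# Usage inside train.py (PyTorch required, so shown as comments):
# ids = usable_device_ids([0, 1], torch.cuda.device_count())
# if should_use_data_parallel(ids):
#     network = nn.DataParallel(network, device_ids=ids)

if __name__ == "__main__":
    ids = usable_device_ids([0, 1], 1)  # a single-GPU machine
    print(ids, should_use_data_parallel(ids))
```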

Issue 4

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.

Fix (same cause as ArtFlow Issue 2):

parser.add_argument('--n_threads', type=int, default=16)

Change it to:

parser.add_argument('--n_threads', type=int, default=0)

Issue 5

Traceback (most recent call last):

File "train.py", line 135, in <module>

{'params': network.module.transformer.parameters()},

File "D:\Anaconda3\envs\paddlepaddle\lib\site-packages\torch\nn\modules\module.py", line 1270, in __getattr__

type(self).__name__, name))

AttributeError: 'StyTrans' object has no attribute 'module'

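This error usually appears in code written for multi-GPU training. There, the model is wrapped in nn.DataParallel or nn.parallel.DistributedDataParallel, and the original model is reached through the wrapper's .module attribute. Because the DataParallel line was commented out in Issue 3, network is now the bare StyTrans model, which has no .module attribute.

Fix: drop .module when building the optimizer: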

optimizer = torch.optim.Adam([

    {'params': network.module.transformer.parameters()},

    {'params': network.module.decode.parameters()},

    {'params': network.module.embedding.parameters()},

], lr=args.lr)

Change it to:

optimizer = torch.optim.Adam([

    {'params': network.transformer.parameters()},

    {'params': network.decode.parameters()},

    {'params': network.embedding.parameters()},

], lr=args.lr)
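Removing .module ties the optimizer code to the unwrapped model. If the network is sometimes wrapped and sometimes not, a small helper can return the underlying model in both cases. This is a minimal sketch, and unwrap_model is a hypothetical name. The .module attribute is where nn.DataParallel and DistributedDataParallel keep the wrapped model, and a bare nn.Module raises AttributeError for that name, so getattr's default applies.

```python
def unwrap_model(network):
    """Return the bare model whether or not it is wrapped in DataParallel/DDP."""
    return getattr(network, "module", network)


# Usage in train.py (PyTorch required, so shown as comments):
# core = unwrap_model(network)
# optimizer = torch.optim.Adam([
#     {'params': core.transformer.parameters()},
#     {'params': core.decode.parameters()},
#     {'params': core.embedding.parameters()},
# ], lr=args.lr)
```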

6. QuantArt: Quantizing Image Style Transfer Towards High Visual Fidelity (CVPR 2023)

- Create configs/kaist.yaml.

- Run the training command:

python -u main.py --base configs/kaist.yaml -t True --gpus 0
