
Word2vec: Model Principles and Implementations in Keras and TensorFlow


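Word2vec is a method for learning word vectors (word embeddings), a way of representing words. It maps high-dimensional sparse vectors into a low-dimensional space, and the relationships between the resulting vectors reflect the relationships between the words. It is widely used in word-similarity tasks and recommender systems.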

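I. Word2vec: Model Overview and Examples

Word2vec is one of the word-embedding models proposed by Google in 2013. It comes in two network architectures: CBOW (Continuous Bag-of-Words) and Skip-gram.

The two architectures mirror each other: CBOW predicts the center word from its surrounding context words, whereas Skip-gram predicts the context words from the center word. The number of words taken on each side of the center word is called the window; the original figure uses window = 2.

In the CBOW model, each context word is first mapped to its word vector, whose dimensionality is typically between 50 and 100. The context word vectors are then summed and passed to the output layer, whose target is the center word.

In the Skip-gram model, the center word is first mapped to its word vector, which passes through the middle layer unchanged to the output layer, whose targets are the words surrounding the center word.

Of the two, Skip-gram is the more commonly used. In both cases the goal of training is an embedding vector for each word that captures the word's semantic and syntactic information. Because the CBOW and Skip-gram implementations are largely the same, the explanation below uses Skip-gram as the example.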

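1.1 Skip-Gram in Detail

A statistical language model has to compute the probability of a sentence, $P(w_1, w_2, \dots, w_T)$, and the usual training objective is to maximize that probability. Each $w_t$ is a word from the vocabulary and the subscript gives its position in the sentence. This objective is hard to optimize directly.

Skip-Gram relaxes the problem in three ways: 1) instead of predicting the next word from the preceding words, it uses the current word to predict the words around it; 2) the surrounding words include those on both the left and the right of the current word; 3) word order is discarded, so the distance between a context word and the current word is ignored. The objective is to maximize the co-occurrence probability of words that appear together in the same sentence, as in equation (1), where $k$ is the window size.

$$\max \sum_{t=1}^{T} \sum_{\substack{-k \le j \le k \\ j \ne 0}} \log P(w_{t+j} \mid w_t) \tag{1}$$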

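As the figure below shows, predicting a context word over the whole vocabulary requires a |V|-way classification at the output, where |V| is the number of words in the vocabulary. Doing this at every iteration is far too expensive to be practical. Hierarchical Softmax was introduced to solve this problem.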

Hierarchical Softmax

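As the figure below shows, we build a binary tree in which every word (every class) sits at a leaf. To classify, we walk from the root to the target leaf and make one binary decision at each node along the way. If the tree is balanced and the vocabulary size is |V|, the walk needs only about $\log_2 |V|$ binary decisions, far fewer than |V|.

How is the probability computed? Take the prediction shown in the figure as an example. The root of the tree receives the vector of the input word, written $v$. Every internal node stores a vector that is learned during training; the leaves store none. A binary tree with |V| leaves has |V| - 1 internal nodes, so the whole tree holds roughly |V| vectors. By convention, when classifying at a node on level $k$, the left subtree is the positive class and the right subtree is the negative class, and the node's vector is written $\theta_k$. The positive and negative scores are given by equations (2) and (3). To make the prediction we follow the blue arrows: the decision is negative at level 0, positive at level 1, and positive at level 2, after which the leaf is reached. The scores of all decisions on the path are multiplied together to give the predicted probability, as in equation (4), which is optimized by backpropagation.

$$p(\text{left} \mid v, \theta_k) = \sigma(\theta_k^{\top} v) \tag{2}$$

$$p(\text{right} \mid v, \theta_k) = 1 - \sigma(\theta_k^{\top} v) \tag{3}$$

$$p(\text{leaf} \mid v) = \bigl(1 - \sigma(\theta_0^{\top} v)\bigr)\,\sigma(\theta_1^{\top} v)\,\sigma(\theta_2^{\top} v) \tag{4}$$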
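The path product described above can be sketched in a few lines of NumPy. This is only an illustration under assumptions: the vectors are random, and the names `path_probability`, `thetas`, and `directions` are hypothetical rather than taken from any word2vec implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def path_probability(v, node_vectors, directions):
    """Multiply the binary decisions along one root-to-leaf path.

    directions[k] is +1 when the path goes left (positive class) at the
    k-th node and -1 when it goes right (negative class).
    """
    prob = 1.0
    for theta, d in zip(node_vectors, directions):
        p_left = sigmoid(np.dot(theta, v))
        prob *= p_left if d == 1 else 1.0 - p_left
    return prob

rng = np.random.default_rng(0)
v = rng.normal(size=8)            # input word vector (random, for illustration)
thetas = rng.normal(size=(3, 8))  # vectors of the three nodes on the path

# Negative at level 0, positive at levels 1 and 2, as in the walk-through above.
p = path_probability(v, thetas, [-1, 1, 1])
print(p)
```

Because each node splits its probability mass between its two children, the leaf probabilities of the whole tree sum to 1 without ever computing a |V|-way normalization.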

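Huffman coding is an entropy coding scheme: frequent symbols get short codes and rare symbols get long codes, which compresses the encoded data. The Hierarchical Softmax tree can be built the same way, so that frequent words are reached by short paths and rare words by long paths, which further reduces the total computation during training.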
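To make the effect of Huffman coding on path length concrete, here is a minimal sketch that computes the leaf depth of each word. The four frequent-word counts are the ones printed later in this article; `rareword` and its count are hypothetical, and `huffman_code_lengths` is an illustrative helper, not part of word2vec itself.

```python
import heapq
import itertools

def huffman_code_lengths(freqs):
    """Return {word: depth of its leaf} for a Huffman tree built from counts."""
    tie = itertools.count()  # tie-breaker so the heap never compares lists
    heap = [(f, next(tie), [w]) for w, f in freqs.items()]
    heapq.heapify(heap)
    depth = {w: 0 for w in freqs}
    while len(heap) > 1:
        # Merge the two least frequent subtrees; every word below them
        # moves one level further from the root.
        f1, _, words1 = heapq.heappop(heap)
        f2, _, words2 = heapq.heappop(heap)
        for w in words1 + words2:
            depth[w] += 1
        heapq.heappush(heap, (f1 + f2, next(tie), words1 + words2))
    return depth

counts = {
    "the": 1061396, "of": 593677, "and": 416629, "one": 411764,
    "rareword": 10,  # hypothetical rare word
}
lengths = huffman_code_lengths(counts)
print(lengths)
```

The most frequent word ends up one step from the root while the rare word sits at the deepest level, so the average number of binary decisions per training example drops.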

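As an aside, when the output layer is a softmax over all words, the Skip-gram model has two matrices, an input matrix and an output matrix. Either can serve as the word representation, and in practice the input matrix is the usual choice. With Hierarchical Softmax there is no per-word output matrix at all, so the input matrix is the only option.

The negative-sampling optimization is not derived here.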

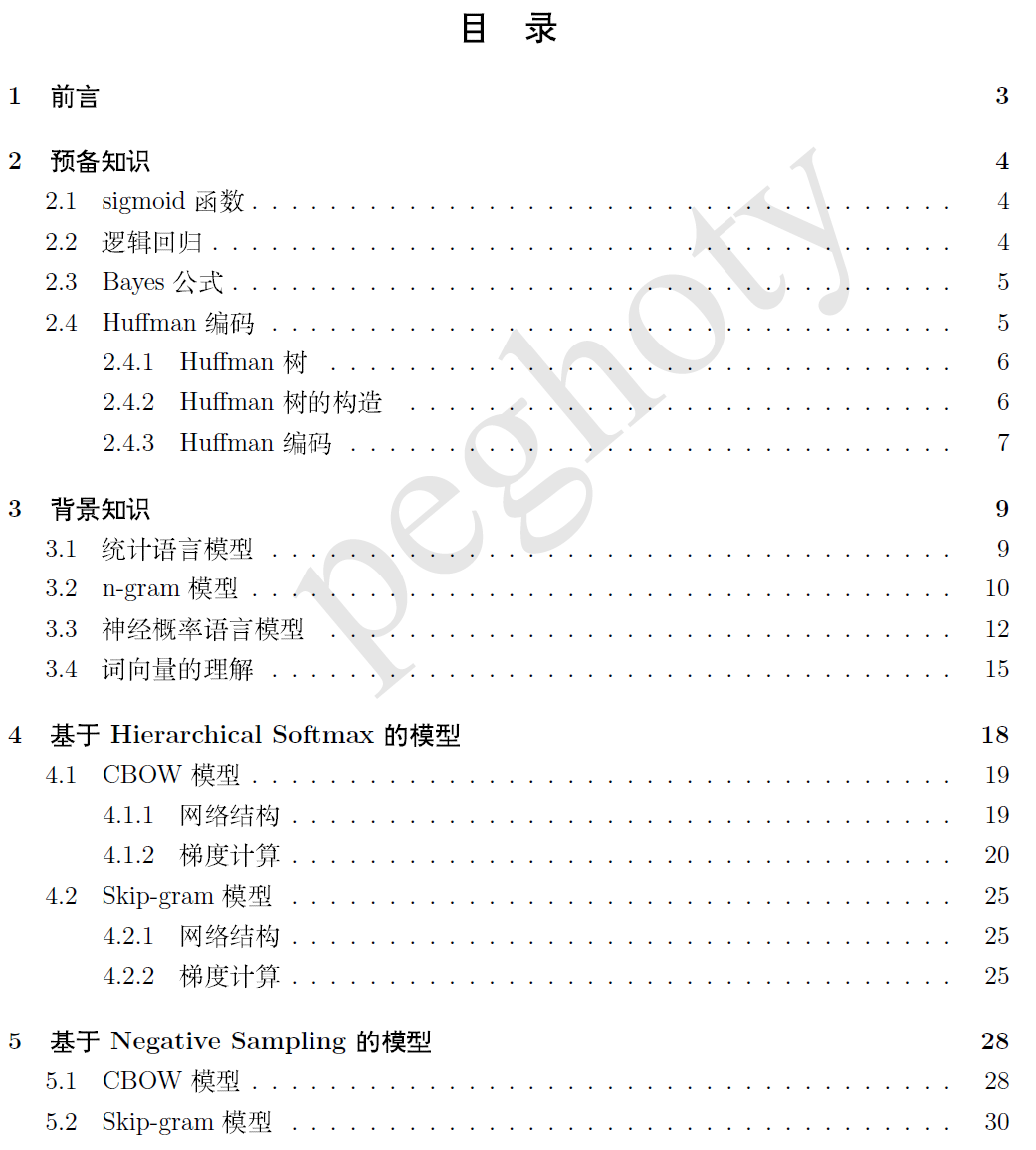

For a detailed treatment of the theory behind word2vec, see the blog series "word2vec 中的数学原理详解" by 皮果提 on CSDN.

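1.2 Advantages of Word Vectors

1. Dimensionality is reduced without losing information.

2. Matrix and vector operations are easy to parallelize.

3. The vector space is meaningful: positions and distances in it can be interpreted.

4. Words can be similar to each other along several different dimensions.

5. Linear regularities: king - man ≈ queen - woman.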
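The linear regularity in the last item can be illustrated with hand-made toy vectors. The 2-d vectors below are hypothetical (one axis for "royalty", one for "gender") and were not produced by any trained model; the sketch only shows how an analogy query is answered by vector arithmetic plus cosine similarity.

```python
import numpy as np

# Hypothetical toy embeddings: axis 0 ~ royalty, axis 1 ~ gender.
vectors = {
    "king":  np.array([1.0, 1.0]),
    "queen": np.array([1.0, -1.0]),
    "man":   np.array([0.0, 1.0]),
    "woman": np.array([0.0, -1.0]),
    "apple": np.array([-1.0, 0.2]),
}

def cosine(a, b):
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    query = vectors[a] - vectors[b] + vectors[c]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(query, vectors[w]))

result = analogy("king", "man", "woman")
print(result)
```

With real embeddings the match is approximate rather than exact, which is why the nearest neighbour of the query vector is used instead of an equality test.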

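II. Implementing word2vec in Keras

This section implements both the Skip-gram and the CBOW model, trains them on the text8 corpus (text8.txt), computes word similarities, and visualizes the result. The output shows that words with similar meanings end up with vectors that are close together.

2.1 Skip-gram in Keras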

- # Imports
- from __future__ import print_function
- import collections
- import math
- import os
- import random
- import numpy as np
- # Read text8.txt and print its length in words. The text is wrapped in a list (strlist), the format expected by tokenizer.texts_to_sequences.
- strlist = []
- with open('./text8.txt', "r") as f:
-     str1 = f.read()
- str1 = str1.strip()
- print(len(str1.split()))  # number of words in the corpus
- strlist = [str1]

Output:

17005207

The output shows that the text contains about 17 million words. The vocabulary size is set to 50,000, and the Keras Tokenizer is used for tokenization.

- vocabulary_size = 50000
- # Vectorize the text
- from keras.preprocessing.text import Tokenizer
- tokenizer = Tokenizer(num_words=vocabulary_size)
- tokenizer.fit_on_texts(strlist)
- list1sequences = tokenizer.texts_to_sequences(strlist)
- # Build the word -> index dict (word_index) and the index -> word dict (index_word).
- # Index 0 is reserved by the Tokenizer, so real words start at index 1; index 0 is mapped to "unk" here.
- word_index = tokenizer.word_index
- word_index["unk"] = 0
- index_word = dict(zip(word_index.values(), word_index.keys()))
- # After tokenizing and converting to integers, take a first look at the data.
- data = list1sequences[0]
- print(data[:20])

Output:

[12, 6, 195, 2, 46, 59, 156, 128, 742, 477, 134, 1, 2, 1, 103, 855, 3, 1, 2, 1]

Next, arrange the data in the form the skip-gram model needs. With a window of window_size, each word has 2*window_size context words.

With window_size = 2, the data look like this:

Xdata: [195, 195, 195, 195, 2, 2, 2, 2, ...]

Ydata: [12, 6, 2, 46, 6, 195, 46, 59, ...]

The code is as follows:

- xdata = []  # inputs (center words)
- ydata = []  # outputs (context words)
- def generate_data(window_size):
-     for data_index in range(window_size, len(data) - window_size):
-         index_y = data_index - window_size
-         # Walk over the 2*window_size neighbours and skip the center position data_index itself.
-         for j in range(2 * window_size):
-             xdata.append(data[data_index])
-             if index_y == data_index:
-                 index_y = index_y + 1
-             ydata.append(data[index_y])
-             index_y = index_y + 1
- generate_data(2)
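The same pairing can also be written as a pure function without global lists, which makes it easy to check against the Xdata/Ydata layout shown above. This is a minimal sketch; `skipgram_pairs` is a hypothetical helper name, and the token ids are the first few values printed earlier.

```python
def skipgram_pairs(tokens, window_size):
    """Return (centers, contexts): one pair per (center word, neighbour)."""
    centers, contexts = [], []
    for i in range(window_size, len(tokens) - window_size):
        for offset in range(-window_size, window_size + 1):
            if offset == 0:
                continue  # the center word is not its own context
            centers.append(tokens[i])
            contexts.append(tokens[i + offset])
    return centers, contexts

tokens = [12, 6, 195, 2, 46, 59, 156]
x, y = skipgram_pairs(tokens, 2)
print(x[:8])
print(y[:8])
```

Like the loop above, this sketch skips the first and last window_size positions, so every center word contributes exactly 2*window_size pairs.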

Build the skip-gram model and train it

- # Embedding dimension 128, window size 2, 16 random negative samples per example.
- from keras import backend as K
- from keras.layers import Input, Embedding, Lambda
- from keras.models import Model
- word_size = 128  # embedding dimension
- window = 2  # window size
- nb_negative = 16  # number of random negative samples
- input_words = Input(shape=(1,), dtype='int32', name="inputA")
- input_vecs = Embedding(vocabulary_size, word_size, name='word2vec')(input_words)
- input_vecs_sum = Lambda(lambda x: K.sum(x, axis=1), name="InputLambda")(input_vecs)
- # Draw random negative samples and concatenate them after the target word
- target_word = Input(shape=(1,), dtype='int32', name="inputB")
- negatives = Lambda(lambda x: K.random_uniform((K.shape(x)[0], nb_negative), 0, vocabulary_size, 'int32'))(target_word)
- samples = Lambda(lambda x: K.concatenate(x))([target_word, negatives])
- # Apply the Dense + softmax computation only to the sampled words
- softmax_weights = Embedding(vocabulary_size, word_size, name='W')(samples)
- softmax_biases = Embedding(vocabulary_size, 1, name='b')(samples)
- # Embedding layers hold the parameters and the K backend does the matrix product, which reproduces a Dense layer restricted to the samples.
- softmax = Lambda(lambda x: K.softmax((K.batch_dot(x[0], K.expand_dims(x[1], 2)) + x[2])[:, :, 0]))([softmax_weights, input_vecs_sum, softmax_biases])
- # Compile the model. Each example has 1 positive word and 16 randomly sampled words, so this is a multi-class problem
- # with an integer label, and the loss should be sparse_categorical_crossentropy.
- model = Model(inputs=[input_words, target_word], outputs=softmax)
- model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

Print the model structure:

model.summary()

Output:

______________________________________________________________________

Layer (type) Output Shape Param # Connected to

===================================================================

inputB (InputLayer) (None, 1) 0

______________________________________________________________________

lambda_7 (Lambda) (None, 16) 0 inputB[0][0]

______________________________________________________________________

inputA (InputLayer) (None, 1) 0

______________________________________________________________________

lambda_8 (Lambda) (None, 17) 0 inputB[0][0]

lambda_7[0][0]

______________________________________________________________________

word2vec (Embedding) (None, 1, 128) 6400000 inputA[0][0]

______________________________________________________________________

W (Embedding) (None, 17, 128) 6400000 lambda_8[0][0]

______________________________________________________________________

InputLambda (Lambda) (None, 128) 0 word2vec[0][0]

______________________________________________________________________

b (Embedding) (None, 17, 1) 50000 lambda_8[0][0]

______________________________________________________________________

lambda_9 (Lambda) (None, 17) 0 W[0][0]

InputLambda[0][0]

b[0][0]

===================================================================

Total params: 12,850,000

Trainable params: 12,850,000

Non-trainable params: 0

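Convert the inputs and labels into the format expected by model.fit:

x, y = np.array(xdata), np.array(ydata)  # the two model inputs

z = np.zeros((len(x), 1))  # labels: all zeros, because the positive sample (the target word) is placed first among the candidates, at index 0, and sparse_categorical_crossentropy takes integer class indices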
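Why an all-zero label vector is correct can be checked with a small NumPy sketch of the loss. This is an illustration under assumptions, not the Keras internals: the function below re-implements sparse categorical cross-entropy for a single example, and the score of 3.0 is a hypothetical value.

```python
import numpy as np

def sparse_categorical_crossentropy(scores, label):
    """Cross-entropy of softmax(scores) against an integer class label."""
    shifted = scores - scores.max()  # subtract the max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[label]

nb_negative = 16
# Position 0 holds the true target word, positions 1..16 the random negatives.
untrained = np.zeros(1 + nb_negative)
trained = np.zeros(1 + nb_negative)
trained[0] = 3.0  # hypothetical: the model now scores the true word higher

loss_untrained = sparse_categorical_crossentropy(untrained, 0)
loss_trained = sparse_categorical_crossentropy(trained, 0)
print(loss_untrained, loss_trained)
```

With uniform scores the loss equals log(17), the cost of guessing among 17 candidates, and it falls as the score at index 0 rises, which is exactly what the label 0 asks the model to achieve.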

Train the model and save the result. [x, y] are the two inputs, z holds the labels, and training runs for 50 epochs.

model.fit([x,y],z,epochs=50,batch_size=20480)

model.save('word2vecskipgrow.h5')

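Part of the training log is shown below. After 50 epochs on this corpus the model reaches about 82% accuracy on the sampled 17-way classification, which is a reasonable result. For more precise word vectors, train on a larger corpus.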

Epoch 45/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5515 - acc: 0.8232

Epoch 46/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5513 - acc: 0.8233

Epoch 47/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5512 - acc: 0.8233

Epoch 48/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5510 - acc: 0.8233

Epoch 49/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5507 - acc: 0.8234

Epoch 50/50

66347248/66347248 [===============] - 64s 1us/step - loss: 0.5507 - acc: 0.8233

Visualizing the trained word vectors

Pick a few words and look at their nearest neighbours to confirm that training worked.

- import numpy as np
- embeddings = model.get_weights()[0]
- # L2-normalize each row so that a dot product equals cosine similarity
- normalized_embeddings = embeddings / (embeddings**2).sum(axis=1).reshape((-1,1))**0.5
- def most_similar(w):
-     v = normalized_embeddings[word_index[w]]
-     sims = np.dot(normalized_embeddings, v)
-     sort = sims.argsort()[::-1]
-     sort = sort[sort > 0]  # drop the reserved index 0
-     return [index_word[i] for i in sort[:10]]
- validata = [index_word[i] for i in data[5:10]]
- print("validate: ", validata)
- for i in validata:
-     print(most_similar(i))

Output:

validate: ['of', 'abuse', 'first', 'used', 'against']

['of', 'garter', 'strelitz', 'archdukes', 'comprises', 'the', 'premeditated', 'defies', 'mosiah', 'rumelia']

['abuse', 'drug', 'abortion', 'terrorism', 'harassment', 'spying', 'trafficking', 'litigated', 'alcohol', 'violence']

['first', 'second', 'third', 'fourth', 'last', 'next', 'ninth', 'earliest', 'ever', 'fifth']

['used', 'employed', 'invented', 'uses', 'designed', 'intended', 'available', 'developed', 'able', 'coined']

['against', 'defending', 'opponents', 'oppose', 'defeat', 'counter', 'attacking', 'tactics', 'defeating', 'attack']

Reduce the word vectors to two dimensions and plot them.

- # Dimensionality reduction
- from sklearn.manifold import TSNE
- from matplotlib import pylab
- num_points = 400
- tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
- two_d_embeddings = tsne.fit_transform(normalized_embeddings[1:num_points+1, :])
- # Visualization
- def plot(embeddings, labels):
-     assert embeddings.shape[0] >= len(labels), 'More labels than embeddings'
-     pylab.figure(figsize=(20,20))  # in inches
-     for i, label in enumerate(labels):
-         x, y = embeddings[i,:]
-         pylab.scatter(x, y)
-         pylab.annotate(label, xy=(x, y), xytext=(5, 2), textcoords='offset points',
-                        ha='right', va='bottom')
-     pylab.show()
- words = [index_word[i] for i in range(1, num_points+1)]
- plot(two_d_embeddings, words)

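The result is shown in the figure: similar words cluster together, for example numbers, countries, and modal verbs.

2.2 CBOW in Keras

The CBOW code is similar to the skip-gram implementation, so only the differences are described here.

- Data preprocessing.

Skip-gram predicts the surrounding words from the center word, whereas CBOW predicts the center word from the surrounding words, so the data are prepared differently. The code is as follows: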

- xdata = []  # inputs (lists of context words)
- ydata = []  # outputs (center words)
- def generate_data(window_size):
-     for data_index in range(window_size, len(data) - window_size):
-         index_x = data_index - window_size
-         xItem = []
-         for j in range(2 * window_size):
-             if index_x == data_index:  # skip the center word itself
-                 index_x = index_x + 1
-             xItem.append(data[index_x])
-             index_x = index_x + 1
-         xdata.append(xItem)
-         ydata.append(data[data_index])
-         # Uncomment to print each sample, as in the log below:
-         # print(xItem)
-         # print(data[data_index])
- # Prepare the data in the format CBOW needs and print part of it to check:
- data = list1sequences[0]
- print(data[:7])
- generate_data(2)

Part of the output:

[12, 6, 195, 2, 46, 59,156]

[12, 6, 2, 46]

195

[6, 195, 46, 59]

2

[195, 2, 59, 156]

46
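The context/center layout in the log above can be reproduced with list slicing. A minimal sketch, assuming the same seven token ids; `cbow_examples` is a hypothetical helper name, not part of the article's code.

```python
def cbow_examples(tokens, window_size):
    """Return (contexts, centers): each context is the 2*window_size neighbours."""
    contexts, centers = [], []
    for i in range(window_size, len(tokens) - window_size):
        left = tokens[i - window_size:i]
        right = tokens[i + 1:i + window_size + 1]
        contexts.append(left + right)
        centers.append(tokens[i])
    return contexts, centers

tokens = [12, 6, 195, 2, 46, 59, 156]
contexts, centers = cbow_examples(tokens, 2)
for ctx, center in zip(contexts, centers):
    print(ctx, center)
```

Each position yields one training example here, compared with 2*window_size examples per position for skip-gram, which matches the roughly fourfold difference in sample counts between the two training logs.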

Build the model. It is essentially the same as the skip-gram model; only the input differs. The skip-gram input is a single word, whereas the CBOW input has window*2 words.

- input_words = Input(shape=(window*2,), dtype='int32', name="inputA")
- input_vecs = Embedding(vocabulary_size, word_size, name='word2vec')(input_words)
- input_vecs_sum = Lambda(lambda x: K.sum(x, axis=1), name="InputLambda")(input_vecs)
- # Draw random negative samples and concatenate them after the target word.
- # A negative sample may occasionally coincide with the positive one, but the probability is small.
- target_word = Input(shape=(1,), dtype='int32', name="inputB")
- negatives = Lambda(lambda x: K.random_uniform((K.shape(x)[0], nb_negative), 0, vocabulary_size, 'int32'))(target_word)
- samples = Lambda(lambda x: K.concatenate(x))([target_word, negatives])
- # Apply the Dense + softmax computation only to the sampled words
- softmax_weights = Embedding(vocabulary_size, word_size, name='W')(samples)
- softmax_biases = Embedding(vocabulary_size, 1, name='b')(samples)
- # Embedding layers hold the parameters and the K backend does the matrix product, which reproduces a Dense layer restricted to the samples.
- softmax = Lambda(lambda x: K.softmax((K.batch_dot(x[0], K.expand_dims(x[1], 2)) + x[2])[:, :, 0]))([softmax_weights, input_vecs_sum, softmax_biases])

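After these changes, train the model. The log is shown below; CBOW reports a higher accuracy than skip-gram, although the two numbers are not directly comparable because the prediction tasks differ.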

Epoch 46/50

16586812/16586812 [====] - 18s 1us/step - loss: 0.1588 - acc: 0.9439

Epoch 47/50

16586812/16586812 [====] - 18s 1us/step - loss: 0.1582 - acc: 0.9441

Epoch 48/50

16586812/16586812 [====] - 18s 1us/step - loss: 0.1576 - acc: 0.9443

Epoch 49/50

16586812/16586812 [====] - 18s 1us/step - loss: 0.1569 - acc: 0.9445

Epoch 50/50

16586812/16586812 [====] - 18s 1us/step - loss: 0.1565 - acc: 0.9446

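This completes the Keras implementation of both word2vec models. Implementing them is a good way to understand how word2vec works. In the general formulation the label space is the whole vocabulary: one word is the positive sample and all the others are negatives. Here only 16 randomly chosen words are used as negatives, which greatly reduces the training cost, speeds up training, and still gives good results.

III. Implementing word2vec in TensorFlow

3.1 Skip-gram in TensorFlow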

- # Train word2vec on the Text8 dataset
- # 1. Import dependencies
- from __future__ import print_function
- import collections
- import math
- import numpy as np
- import os
- import random
- import tensorflow as tf
- import zipfile
- from matplotlib import pylab
- from six.moves import range
- from six.moves.urllib.request import urlretrieve
- from sklearn.manifold import TSNE
- print('check:libs well prepared')
- # Output: check:libs well prepared

- # 2. Download and unpack the data
- url = 'http://mattmahoney.net/dc/'
- def maybe_download(filename, expected_bytes):
-     # Download the file only if it is not already present
-     if not os.path.exists(filename):
-         print('download...')
-         filename, _ = urlretrieve(url + filename, filename)
-     # Verify the file size
-     statinfo = os.stat(filename)
-     if statinfo.st_size == expected_bytes:
-         print('Found and verified %s' % filename)
-     else:
-         print('exception %s' % statinfo.st_size)
-     return filename
- filename = maybe_download('text8.zip', 31344016)
- # Output: Found and verified text8.zip
- def read_data(filename):
-     with zipfile.ZipFile(filename) as f:
-         data = tf.compat.as_str(f.read(f.namelist()[0])).split()
-     return data
- words = read_data(filename)
- print('Data size %d' % len(words))
- # Output: Data size 17005207

- # 3. Encode words as integers and replace low-frequency words with UNK
- vocabulary_size = 50000
- def build_dataset(words):
-     count = [['UNK', -1]]
-     # Number of occurrences of each of the most common words
-     count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
-     dictionary = dict()
-     # Word -> integer id
-     for word, _ in count:
-         dictionary[word] = len(dictionary)
-     data = list()
-     unk_count = 0
-     for word in words:
-         if word in dictionary:
-             index = dictionary[word]
-         else:
-             index = 0  # id of UNK
-             unk_count = unk_count + 1
-         data.append(index)
-     count[0][1] = unk_count
-     # Integer id -> word
-     reverse_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
-     return data, count, dictionary, reverse_dictionary
- # Training data after the mapping
- data, count, dictionary, reverse_dictionary = build_dataset(words)
- print('Most common words (+UNK)', count[:5])
- print('original data', words[:10])
- print('training data', data[:10])
- # Output:
- # Most common words (+UNK) [['UNK', 418391], ('the', 1061396), ('of', 593677), ('and', 416629), ('one', 411764)]
- # original data ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against']
- # training data [5234, 3081, 12, 6, 195, 2, 3134, 46, 59, 156]

- # 4. Generate skip-gram training data
- def generate_batch(batch_size, num_skips, skip_window):
-     global data_index
-     assert batch_size % num_skips == 0
-     assert num_skips <= 2 * skip_window
-     # batch holds the inputs (x), labels holds the targets (y)
-     batch = np.ndarray(shape=(batch_size), dtype=np.int32)
-     labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
-     span = 2 * skip_window + 1  # [ context word context ]
-     buffer = collections.deque(maxlen=span)
-     for _ in range(span):
-         buffer.append(data[data_index])
-         # Wrap around at the end of the data
-         data_index = (data_index + 1) % len(data)
-     for i in range(batch_size // num_skips):
-         target = skip_window  # the center word sits in the middle of the buffer
-         targets_to_avoid = [skip_window]
-         for j in range(num_skips):
-             while target in targets_to_avoid:
-                 target = random.randint(0, span - 1)
-             targets_to_avoid.append(target)
-             batch[i * num_skips + j] = buffer[skip_window]
-             labels[i * num_skips + j, 0] = buffer[target]
-         buffer.append(data[data_index])
-         data_index = (data_index + 1) % len(data)
-     return batch, labels
- print('data:', [reverse_dictionary[di] for di in data[:8]])
- data_index = 0
- batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=2)
- print(' batch:', [reverse_dictionary[bi] for bi in batch])
- print(' labels:', [reverse_dictionary[li] for li in labels.reshape(8)])
- # Output:
- # data: ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first']
- # batch: ['as', 'as', 'a', 'a', 'term', 'term', 'of', 'of']
- # labels: ['originated', 'anarchism', 'of', 'term', 'as', 'a', 'abuse', 'first']

- # 5. Define the network
- batch_size = 128
- embedding_size = 128  # dimension of the embedding vectors
- skip_window = 1  # number of words considered on each side
- num_skips = 2  # number of (input, label) pairs generated per center word
- valid_size = 16  # number of words used to inspect nearest neighbours
- valid_window = 100  # validation words are drawn from the 100 most frequent words
- valid_examples = np.array(random.sample(range(valid_window), valid_size))
- num_sampled = 64  # number of negative samples
- graph = tf.Graph()
- with graph.as_default(), tf.device('/cpu:0'):
-     # Input data
-     train_dataset = tf.placeholder(tf.int32, shape=[batch_size])
-     train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])
-     valid_dataset = tf.constant(valid_examples, dtype=tf.int32)
-     # Variables
-     embeddings = tf.Variable(
-         tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
-     softmax_weights = tf.Variable(
-         tf.truncated_normal([vocabulary_size, embedding_size],
-                             stddev=1.0 / math.sqrt(embedding_size)))
-     softmax_biases = tf.Variable(tf.zeros([vocabulary_size]))
-     # Embeddings of the words in the current batch
-     embed = tf.nn.embedding_lookup(embeddings, train_dataset)
-     # Batch loss
-     loss = tf.reduce_mean(
-         tf.nn.sampled_softmax_loss(weights=softmax_weights, biases=softmax_biases, inputs=embed,
-                                    labels=train_labels, num_sampled=num_sampled, num_classes=vocabulary_size))
-     # Minimize the loss and update the parameters
-     optimizer = tf.train.AdagradOptimizer(1.0).minimize(loss)
-     # Normalize the embeddings
-     norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))
-     normalized_embeddings = embeddings / norm
-     # Cosine similarity between the validation words and all embeddings
-     valid_embeddings = tf.nn.embedding_lookup(
-         normalized_embeddings, valid_dataset)
-     similarity = tf.matmul(valid_embeddings, tf.transpose(normalized_embeddings))

- # 6. Run the training loop
- num_steps = 100000
- with tf.Session(graph=graph) as session:
-     tf.global_variables_initializer().run()
-     average_loss = 0
-     for step in range(num_steps+1):
-         batch_data, batch_labels = generate_batch(
-             batch_size, num_skips, skip_window)
-         feed_dict = {train_dataset : batch_data, train_labels : batch_labels}
-         _, l = session.run([optimizer, loss], feed_dict=feed_dict)
-         average_loss += l
-         # Print the average loss every 2000 steps
-         if step % 2000 == 0:
-             if step > 0:
-                 average_loss = average_loss / 2000
-             print('Average loss at step %d: %f' % (step, average_loss))
-             average_loss = 0
-         # Print nearest neighbours of the validation words every 10000 steps
-         if step % 10000 == 0:
-             sim = similarity.eval()
-             for i in range(valid_size):
-                 valid_word = reverse_dictionary[valid_examples[i]]
-                 top_k = 5  # the 5 most similar words
-                 nearest = (-sim[i, :]).argsort()[1:top_k+1]
-                 log = 'Nearest to %s:' % valid_word
-                 for k in range(top_k):
-                     close_word = reverse_dictionary[nearest[k]]
-                     log = '%s %s,' % (log, close_word)
-                 print(log)
-     final_embeddings = normalized_embeddings.eval()

Training log (excerpt): ......

Nearest to up: out, off, down, back, them,

Nearest to this: which, it, another, itself, some,

Nearest to if: when, though, where, before, because,

Nearest to from: through, into, protestors, muir, in,

Nearest to s: whose, his, isbn, my, dedicates,

Nearest to however: but, although, that, though, especially,

Nearest to over: overshadowed, around, between, through, within,

Nearest to also: often, still, now, never, sometimes,

Nearest to used: designed, referred, seen, considered, known,

Nearest to on: upon, in, through, under, bathroom,

- # Visualization
- num_points = 400
- tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
- two_d_embeddings = tsne.fit_transform(final_embeddings[1:num_points+1, :])
- def plot(embeddings, labels):
-     assert embeddings.shape[0] >= len(labels), 'More labels than embeddings'
-     pylab.figure(figsize=(20,20))  # in inches
-     for i, label in enumerate(labels):
-         x, y = embeddings[i,:]
-         pylab.scatter(x, y)
-         pylab.annotate(label, xy=(x, y), xytext=(5, 2), textcoords='offset points',
-                        ha='right', va='bottom')
-     pylab.show()
- words = [reverse_dictionary[i] for i in range(1, num_points+1)]
- plot(two_d_embeddings, words)

The visualization is shown in the figure below:

3.2 CBOW in TensorFlow

Generating samples

- data_index = 0
- def cbow_batch(batch_size, bag_window):
-     global data_index
-     batch = np.ndarray(shape=(batch_size,bag_window*2), dtype=np.int32)
-     labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
-     span = 2 * bag_window + 1  # [ bag_window target bag_window ]
-     buffer = collections.deque(maxlen=span)
-     for _ in range(span):
-         buffer.append(data[data_index])
-         data_index = (data_index + 1) % len(data)
-     for i in range(batch_size):
-         batch[i] = list(buffer)[:bag_window] + list(buffer)[bag_window+1:]
-         labels[i] = buffer[bag_window]
-         buffer.append(data[data_index])
-         data_index = (data_index + 1) % len(data)
-     return batch, labels
- print('data:', [reverse_dictionary[di] for di in data[:12]])
- batch, labels = cbow_batch(8,1)
- print('batch:', [[reverse_dictionary[w] for w in bi] for bi in batch])
- print('labels:', [reverse_dictionary[l] for l in labels.reshape(8)])

Output:

data: ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against', 'early', 'working']

batch: [['anarchism', 'as'], ['originated', 'a'], ['as', 'term'], ['a', 'of'], ['term', 'abuse'], ['of', 'first'], ['abuse', 'used'], ['first', 'against']]

labels: ['originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used']

Network structure:

- batch_size = 128
- embedding_size = 128
- bag_window = 1  # plays the same role as skip_window
- # valid_window and valid_size are the values defined in section 3.1
- valid_examples = np.array(random.sample(range(valid_window), valid_size))
- num_sampled = 64
- graph = tf.Graph()
- with graph.as_default(), tf.device('/cpu:0'):
-     # The input placeholder has a different shape: one row of context words per example
-     train_dataset = tf.placeholder(tf.int32, shape=[batch_size, bag_window*2])
-     train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])
-     valid_dataset = tf.constant(valid_examples, dtype=tf.int32)
-     embeddings = tf.Variable(
-         tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
-     softmax_weights = tf.Variable(
-         tf.truncated_normal([vocabulary_size, embedding_size],
-                             stddev=1.0 / math.sqrt(embedding_size)))
-     softmax_biases = tf.Variable(tf.zeros([vocabulary_size]))
-     embed = tf.nn.embedding_lookup(embeddings, train_dataset)
-     # Sum-pool the context embeddings before feeding them to the loss
-     loss = tf.reduce_mean(
-         tf.nn.sampled_softmax_loss(weights=softmax_weights, biases=softmax_biases, inputs=tf.reduce_sum(embed, 1),
-                                    labels=train_labels, num_sampled=num_sampled, num_classes=vocabulary_size))
-     optimizer = tf.train.AdagradOptimizer(1.0).minimize(loss)
-     norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))
-     normalized_embeddings = embeddings / norm
-     valid_embeddings = tf.nn.embedding_lookup(
-         normalized_embeddings, valid_dataset)
-     similarity = tf.matmul(valid_embeddings, tf.transpose(normalized_embeddings))

Model training

- num_steps = 100001
- with tf.Session(graph=graph) as session:
-     tf.global_variables_initializer().run()
-     average_loss = 0
-     for step in range(num_steps):
-         batch_data, batch_labels = cbow_batch(batch_size, bag_window)
-         feed_dict = {train_dataset : batch_data, train_labels : batch_labels}
-         _, l = session.run([optimizer, loss], feed_dict=feed_dict)
-         average_loss += l
-         if step % 2000 == 0:
-             if step > 0:
-                 average_loss = average_loss / 2000
-             print('Average loss at step %d: %f' % (step, average_loss))
-             average_loss = 0
-         if step % 10000 == 0:
-             sim = similarity.eval()
-             for i in range(valid_size):
-                 valid_word = reverse_dictionary[valid_examples[i]]
-                 top_k = 5  # number of nearest neighbors
-                 nearest = (-sim[i, :]).argsort()[1:top_k+1]
-                 log = 'Nearest to %s:' % valid_word
-                 for k in range(top_k):
-                     close_word = reverse_dictionary[nearest[k]]
-                     log = '%s %s,' % (log, close_word)
-                 print(log)
-     final_embeddings = normalized_embeddings.eval()

Part of the training log:

IV. References

4.1 word2vec 中的数学原理详解

Link: word2vec 中的数学原理详解(一)目录和前言, 皮果提's blog on CSDN

(Highly recommended; the most detailed explanation available so far.)

4.2 深度解析Word2vec

深度解析Word2vec, Jamest, 博客园: https://www.cnblogs.com/hellojamest/p/11621627.html. Word2vec is essentially a dimensionality-reduction operation: it takes words from a one-hot representation down to the Word2vec representation, that is, a distributed representation.

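Summary

After word2vec appeared, the idea of embeddings spread quickly from NLP to almost every area of machine learning. If we can embed a word in a sequence of words, we can just as well embed a product in a user's purchase sequence or a movie in a user's viewing sequence. In advertising, recommendation, and search, user data are so sparse that users and items almost always have to be embedded before a DNN can be trained effectively.

Concretely, when items occur in a sequence, item2vec is no different from word2vec. If we instead discard the positional relationship between items in the sequence, the notion of a window disappears from the objective function and is replaced by the conditional probabilities between every pair of items in the item set.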
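The difference between a windowed sequence and an unordered item set shows up in how training pairs are generated. A minimal sketch, assuming a hypothetical shopping basket; `item_set_pairs` is an illustrative helper, not an API of any item2vec library.

```python
from itertools import permutations

def item_set_pairs(item_set):
    """Every ordered (item, other item) pair in the set; no window is involved."""
    return list(permutations(item_set, 2))

basket = ["milk", "bread", "eggs"]  # hypothetical item set of one user
pairs = item_set_pairs(basket)
print(pairs)
```

Each pair plays the role of a (center word, context word) pair in skip-gram, so the rest of the training procedure can stay the same.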

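Q&A

What is an embedding? Why is embedding called a basic operation of deep learning?

An embedding represents an object with a low-dimensional vector. The object can be a word, a product, a movie, and so on. The key property of embedding vectors is that objects whose vectors are close together have similar meanings.

Because an embedding encodes an object in a low-dimensional vector while preserving its meaning, it is a natural fit for deep learning. Traditional machine-learning pipelines often encode discrete features, especially id-type features, with one-hot encoding. The dimensionality of a one-hot vector equals the total number of objects, so for products the encoding is extremely sparse, and even a multi-hot encoding of a user's browsing history is a very sparse vector. The characteristics of deep learning, together with engineering constraints, make it poorly suited to sparse feature vectors.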
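The link between one-hot encoding and an embedding layer can be checked numerically: multiplying a one-hot vector by a weight matrix selects one row, which is what an embedding lookup does directly. A small sketch with random weights; the sizes and the id are hypothetical.

```python
import numpy as np

vocab_size, dim = 6, 3
rng = np.random.default_rng(0)
W = rng.normal(size=(vocab_size, dim))  # embedding matrix, one row per item

item_id = 4
one_hot = np.zeros(vocab_size)
one_hot[item_id] = 1.0

via_matmul = one_hot @ W   # dense layer applied to the sparse one-hot input
via_lookup = W[item_id]    # embedding lookup: just read row item_id
print(np.allclose(via_matmul, via_lookup))
```

The lookup touches `dim` numbers instead of `vocab_size * dim`, which is why embedding layers are used instead of feeding one-hot vectors into a fully connected layer.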

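Why is deep learning ill-suited to samples with overly sparse features?

1. Sparse encoding makes the number of parameters in the fully connected layers explode.

2. Overly sparse features make the whole network converge too slowly, because each update changes only a tiny fraction of the weights. With a limited number of samples, this can all but prevent the model from converging.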

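Why does Word2Vec use negative sampling?

1. It speeds up computation. The core idea of negative sampling is to compute a score for the true pair formed by the target word and a word in its window, and to add some noise, namely the scores of the target word paired with random words from the vocabulary. The softmax function is dropped in favor of the sigmoid function, so there is no need to first compute a score for every word in the vocabulary.

2. It preserves training quality. First, each step only updates the weights of the sampled words rather than all weights, which would be very slow. Second, the center word is really only related to the words around it; words far away are unrelated, so there is no need to update them at the same time.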
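The sigmoid-based objective described above can be written down in a few lines. This is a sketch of the standard skip-gram negative-sampling loss with random vectors, under the assumption that `center` is an input-side vector and `context` and `negatives` are output-side vectors; all names are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def negative_sampling_loss(center, context, negatives):
    """-log sigmoid(u_o . v_c) - sum_k log sigmoid(-u_k . v_c)."""
    positive_term = np.log(sigmoid(np.dot(context, center)))
    negative_term = np.log(sigmoid(-(negatives @ center))).sum()
    return -(positive_term + negative_term)

rng = np.random.default_rng(0)
dim, num_negatives = 8, 5
center = rng.normal(size=dim)                      # vector of the center word
context = rng.normal(size=dim)                     # vector of the true context word
negatives = rng.normal(size=(num_negatives, dim))  # vectors of sampled noise words

loss = negative_sampling_loss(center, context, negatives)
print(loss)
```

Only 1 + num_negatives dot products are needed per training pair, independent of the vocabulary size, which is where the speed-up comes from.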

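Why can negative sampling in word2vec achieve results comparable to softmax?

Softmax normalization is expensive because the vocabulary is large. In theory NCE approximates softmax, and negative sampling is a special case of NCE. In practice the number of negative samples is small, so negative sampling approximates NCE, which in turn approximates softmax, and its simpler formula has made it widely used. Negative sampling speeds up training while preserving accuracy, and it is a go-to technique for training many large-scale distributed models.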