LangChain-24 Agents: Retrieving Content and Answering Questions with a TavilySearch Agent, Using AgentExecutor with a Prompt and Tools

Author: 我家小花儿 | 2024-08-02 00:23:41

Install dependencies

pip install -qU langchain langchain-core langchain-openai langchain-community langchain-text-splitters langchainhub faiss-cpu beautifulsoup4
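The script later in this post calls both OpenAI and Tavily, so two API keys have to be configured before it runs. Below is a minimal pre-flight sketch, assuming the keys are supplied through the conventional `OPENAI_API_KEY` and `TAVILY_API_KEY` environment variables. The helper name `missing_keys` is made up for illustration and is not part of LangChain.

```python
import os

# Environment variables that the OpenAI and Tavily integrations read by default.
REQUIRED_KEYS = ("OPENAI_API_KEY", "TAVILY_API_KEY")


def missing_keys(env=None):
    """Return the required keys that are unset or empty in the given mapping."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Set these before running the agent: " + ", ".join(missing))
    else:
        print("All API keys are set.")
```

Running this first gives a clearer message than the validation error raised when a tool or model is constructed without its key.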

Prompt

    # Load the prompt from the Hub; writing an equivalent prompt yourself works just as well
    # SYSTEM
    #
    # You are a helpful assistant
    #
    # PLACEHOLDER
    #
    # chat_history
    # HUMAN
    #
    # {input}
    #
    # PLACEHOLDER
    #
    # agent_scratchpad

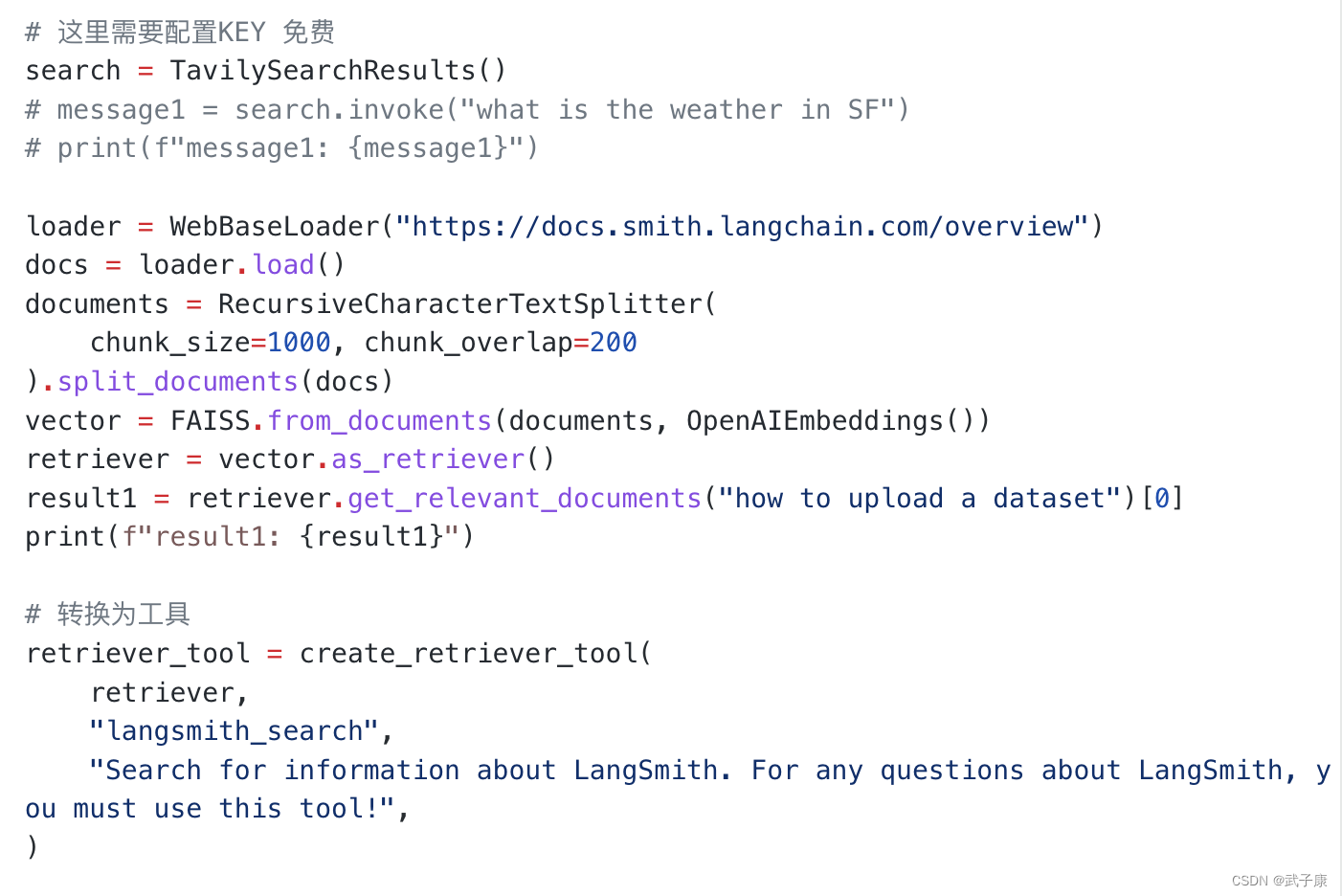

Write the code

    from langchain import hub
    from langchain.agents import AgentExecutor, create_openai_functions_agent
    from langchain.tools.retriever import create_retriever_tool
    from langchain_community.document_loaders import WebBaseLoader
    from langchain_community.tools.tavily_search import TavilySearchResults
    from langchain_community.vectorstores import FAISS
    from langchain_openai import ChatOpenAI, OpenAIEmbeddings
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    # An API key must be configured here (TAVILY_API_KEY); the key is free
    search = TavilySearchResults()
    # message1 = search.invoke("what is the weather in SF")
    # print(f"message1: {message1}")

    # Load the LangSmith overview page and split it into overlapping chunks
    loader = WebBaseLoader("https://docs.smith.langchain.com/overview")
    docs = loader.load()
    documents = RecursiveCharacterTextSplitter(
        chunk_size=1000, chunk_overlap=200
    ).split_documents(docs)

    # Embed the chunks into a FAISS index and expose it as a retriever
    vector = FAISS.from_documents(documents, OpenAIEmbeddings())
    retriever = vector.as_retriever()
    # invoke() replaces the deprecated get_relevant_documents()
    result1 = retriever.invoke("how to upload a dataset")[0]
    print(f"result1: {result1}")

    # Wrap the retriever as a tool
    retriever_tool = create_retriever_tool(
        retriever,
        "langsmith_search",
        "Search for information about LangSmith. For any questions about LangSmith, you must use this tool!",
    )

    # Define the tools available to the agent
    tools = [search, retriever_tool]
    llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

    # Load the prompt from the Hub; writing an equivalent prompt yourself works just as well
    # SYSTEM
    #
    # You are a helpful assistant
    #
    # PLACEHOLDER
    #
    # chat_history
    # HUMAN
    #
    # {input}
    #
    # PLACEHOLDER
    #
    # agent_scratchpad
    prompt = hub.pull("hwchase17/openai-functions-agent")
    print(f"prompt message: {prompt.messages}")

    # Create the agent
    agent = create_openai_functions_agent(llm, tools, prompt)
    # Create the agent executor
    agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

    # Try a simple call that needs no tools
    message2 = agent_executor.invoke({"input": "hi!"})
    print(f"message2: {message2}")

    # Run a task that should trigger the retriever tool
    message3 = agent_executor.invoke({"input": "how can langsmith help with testing?"})
    print(f"message3: {message3}")
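To make the role of the executor more concrete, here is a rough, offline sketch of the kind of loop an agent executor runs: ask the model for a decision, run the chosen tool, record the result in a scratchpad, and repeat until the model produces a final answer. This is a simplified illustration and not LangChain's actual implementation. Every name in it (`run_agent`, `fake_decide`, `fake_langsmith_search`) is hypothetical, and the "model" is a hard-coded stand-in.

```python
from typing import Callable, Dict, List, Tuple

# One scratchpad entry: (tool name, tool input, observation returned by the tool).
Step = Tuple[str, str, str]


def run_agent(
    decide: Callable[[str, List[Step]], tuple],
    tools: Dict[str, Callable[[str], str]],
    user_input: str,
    max_steps: int = 5,
) -> str:
    """Loop: ask the model what to do, run the chosen tool, feed the result back."""
    scratchpad: List[Step] = []
    for _ in range(max_steps):
        # A decision is ("call", tool_name, tool_input) or ("finish", answer).
        decision = decide(user_input, scratchpad)
        if decision[0] == "finish":
            return decision[1]
        _, tool_name, tool_input = decision
        observation = tools[tool_name](tool_input)
        scratchpad.append((tool_name, tool_input, observation))
    return "Stopped: step limit reached."


# Stand-ins for the LLM and the retriever tool, so the sketch runs offline.
def fake_decide(user_input: str, scratchpad: List[Step]) -> tuple:
    if not scratchpad:
        return ("call", "langsmith_search", user_input)
    return ("finish", "Based on the docs: " + scratchpad[-1][2])


def fake_langsmith_search(query: str) -> str:
    return "stub result for '" + query + "'"


answer = run_agent(
    fake_decide,
    {"langsmith_search": fake_langsmith_search},
    "how can langsmith help with testing?",
)
print(answer)
```

In the real script, the prompt's `agent_scratchpad` placeholder plays the part of `scratchpad` here: it carries earlier tool calls and their results back to the model on each turn.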

Output

➜ python3 test24.py

result1: page_content="about LangSmith:User Guide: Learn about the workflows LangSmith supports at each stage of the LLM application lifecycle.Setup: Learn how to create an account, obtain an API key, and configure your environment.Pricing: Learn about the pricing model for LangSmith.Self-Hosting: Learn about self-hosting options for LangSmith.Proxy: Learn about the proxy capabilities of LangSmith.Tracing: Learn about the tracing capabilities of LangSmith.Evaluation: Learn about the evaluation capabilities of LangSmith.Prompt Hub Learn about the Prompt Hub, a prompt management tool built into LangSmith.Additional Resources\u200bLangSmith Cookbook: A collection of tutorials and end-to-end walkthroughs using LangSmith.LangChain Python: Docs for the Python LangChain library.LangChain Python API Reference: documentation to review the core APIs of LangChain.LangChain JS: Docs for the TypeScript LangChain libraryDiscord: Join us on our Discord to discuss all things LangChain!Contact SalesIf you're interested in" metadata={'source': 'https://docs.smith.langchain.com/overview', 'title': 'LangSmith |
