Deep Learning Notes -- Deploying Mini-GPT4 Locally


1--Preface

Local environment:

System: Ubuntu 18.04

GPU: Tesla V100 (32G)

CUDA: 10.0 (11.3 also works)

Project page: https://github.com/Vision-CAIR/MiniGPT-4

2--Setting up the environment dependencies

- git clone https://github.com/Vision-CAIR/MiniGPT-4.git

- cd MiniGPT-4

- conda env create -f environment.yml

- conda activate minigpt4

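The default environment name is minigpt4. You can change it by editing the name in environment.yml; the author uses a Python environment named ljf_minigpt4.

3--Downloading the weights

The author uses llama-7b-hf as the LLaMA weights and vicuna-7b-delta-v1.1 as the Vicuna delta files. Both are hosted on Hugging Face under the repository IDs used in the commands below.

Two download methods are given here. The first uses the third-party huggingface_hub library: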

- pip install huggingface_hub

- from huggingface_hub import snapshot_download

- snapshot_download(repo_id='decapoda-research/llama-7b-hf')

- # Files are stored under: ~/.cache/huggingface/hub/models--decapoda-research--llama-7b-hf/snapshots/(a string of characters)/

- from huggingface_hub import snapshot_download

- snapshot_download(repo_id='lmsys/vicuna-7b-delta-v1.1')

- # Files are stored under: ~/.cache/huggingface/hub/models--lmsys--vicuna-7b-delta-v1.1/snapshots/(a string of characters)/
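Since a long download can fail partway through, one option is to wrap the call in a small retry loop. The following is only a sketch: the helper names retry and download_with_retry, the attempt count, and the delay are illustrative choices, not part of huggingface_hub.

```python
import time


def retry(fn, attempts=5, delay=10.0, sleep=time.sleep):
    """Call fn() until it succeeds or the attempts run out, then re-raise."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:  # broad on purpose: network errors come in several types
            if attempt == attempts:
                raise
            print(f"attempt {attempt} failed ({exc!r}); retrying in {delay}s")
            sleep(delay)


def download_with_retry(repo_id, attempts=5):
    """Hypothetical wrapper around snapshot_download; returns the snapshot directory."""
    # Imported here so that retry() stays usable without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return retry(lambda: snapshot_download(repo_id=repo_id), attempts=attempts)
```

Calling download_with_retry('decapoda-research/llama-7b-hf') and then download_with_retry('lmsys/vicuna-7b-delta-v1.1') would replace the two plain calls above.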

The first method often fails with connection timeouts, so here is a second method based on wget:

- # Record the download URL of every file and fetch them with wget; download.txt contains:

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/.gitattributes

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/LICENSE

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/README.md

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/config.json

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/generation_config.json

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00001-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00002-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00003-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00004-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00005-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00006-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00007-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00008-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00009-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00010-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00011-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00012-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00013-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00014-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00015-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00016-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00017-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00018-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00019-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00020-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00021-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00022-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00023-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00024-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00025-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00026-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00027-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00028-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00029-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00030-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00031-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00032-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model-00033-of-00033.bin

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/pytorch_model.bin.index.json

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/special_tokens_map.json

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/tokenizer.model

- https://huggingface.co/decapoda-research/llama-7b-hf/resolve/main/tokenizer_config.json
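The list above follows a regular pattern, so it does not have to be typed by hand. A minimal sketch that generates it is shown below; the function names are made up for illustration, and the file names and the shard count of 33 are copied from the list above rather than queried from the server.

```python
BASE_URL = "https://huggingface.co/{repo}/resolve/main/{name}"

# Non-shard files, as they appear in the list above.
LLAMA_EXTRA_FILES = [
    ".gitattributes", "LICENSE", "README.md", "config.json",
    "generation_config.json", "pytorch_model.bin.index.json",
    "special_tokens_map.json", "tokenizer.model", "tokenizer_config.json",
]


def shard_names(count):
    """Names such as pytorch_model-00001-of-00033.bin for `count` shards."""
    return [f"pytorch_model-{i:05d}-of-{count:05d}.bin" for i in range(1, count + 1)]


def build_urls(repo, shard_count, extra_files):
    """One URL per file: the small files first, then the weight shards."""
    names = list(extra_files) + shard_names(shard_count)
    return [BASE_URL.format(repo=repo, name=name) for name in names]


def write_download_list(path="download.txt"):
    """Write the LLaMA URL list, one URL per line, and return it."""
    urls = build_urls("decapoda-research/llama-7b-hf", 33, LLAMA_EXTRA_FILES)
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(urls) + "\n")
    return urls
```

Running write_download_list() produces the same set of URLs as the hand-written download.txt, in a slightly different order, which does not matter for downloading.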

Write download.sh to download every file listed in download.txt:

- #!/bin/bash

- # Read download.txt line by line and fetch each URL

- while read -r file; do

- wget "${file}"

- done < download.txt
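If the script is interrupted, rerunning it starts over from the first URL. As a rough alternative, the same loop can be written in Python so that files already present are skipped. This is a sketch using only the standard library; the function names and the ".part" suffix are assumptions made for the example.

```python
import os
import urllib.request
from urllib.parse import urlparse


def read_urls(list_path):
    """Return the non-empty lines of the URL list, stripped of whitespace."""
    with open(list_path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def local_name(url):
    """File name taken from the last component of the URL path."""
    return os.path.basename(urlparse(url).path)


def pending(urls, dest_dir):
    """URLs whose target file does not exist yet in dest_dir."""
    return [u for u in urls
            if not os.path.exists(os.path.join(dest_dir, local_name(u)))]


def download_all(list_path="download.txt", dest_dir="."):
    """Fetch every missing file; an interrupted file is restarted from scratch."""
    os.makedirs(dest_dir, exist_ok=True)
    for url in pending(read_urls(list_path), dest_dir):
        target = os.path.join(dest_dir, local_name(url))
        print("downloading", url)
        # Write to a temporary name first so that an interrupted transfer
        # never leaves a file that looks complete.
        urllib.request.urlretrieve(url, target + ".part")
        os.replace(target + ".part", target)
```

Calling download_all("download.txt", "llama-7b-hf") would place the files in a llama-7b-hf directory, a directory name chosen here only as an example.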

Likewise, for the delta files, record the download URL of every file and fetch them with wget:

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/.gitattributes

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/README.md

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/config.json

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/generation_config.json

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/pytorch_model-00001-of-00002.bin

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/pytorch_model-00002-of-00002.bin

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/pytorch_model.bin.index.json

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/special_tokens_map.json

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/tokenizer.model

- https://huggingface.co/lmsys/vicuna-7b-delta-v1.1/resolve/main/tokenizer_config.json

- #!/bin/bash

- # Read download.txt line by line and fetch each URL

- while read -r file; do

- wget "${file}"

- done < download.txt

4--Generating the Vicuna weights

Install the FastChat Python library:

- pip install git+https://github.com/lm-sys/FastChat.git@v0.1.10

- # or

- pip install fschat

Run the following command in a terminal to generate the final weight files:

- python3 -m fastchat.model.apply_delta \

- --base-model-path llama-7b-hf_path \

- --target-model-path vicuna-7b_path \

- --delta-path vicuna-7b-delta-v1.1_path

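--base-model-path is the directory holding the llama-7b-hf weights downloaded in step 3;

--target-model-path is the directory where the generated Vicuna weights will be written;

--delta-path is the directory holding the vicuna-7b-delta-v1.1 weights downloaded in step 3.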
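The delta files are not usable on their own: they hold differences that have to be combined with the LLaMA base weights. Conceptually, the combination is an element-wise addition, parameter by parameter. The toy sketch below illustrates only that idea on plain Python lists. It is not FastChat's code, the function name add_delta is made up, and it assumes both checkpoints have identical parameter names and sizes.

```python
def add_delta(base, delta):
    """Return {name: base[name] + delta[name]} element-wise for flat lists of floats."""
    if base.keys() != delta.keys():
        raise ValueError("base and delta must have the same parameter names")
    target = {}
    for name, base_values in base.items():
        delta_values = delta[name]
        if len(base_values) != len(delta_values):
            raise ValueError(f"size mismatch for {name}")
        target[name] = [b + d for b, d in zip(base_values, delta_values)]
    return target


# Tiny stand-ins for the real checkpoints.
base = {"layer.weight": [0.5, -1.0, 2.0], "layer.bias": [0.0]}
delta = {"layer.weight": [0.25, 0.5, -2.0], "layer.bias": [1.5]}
print(add_delta(base, delta))
# → {'layer.weight': [0.75, -0.5, 0.0], 'layer.bias': [1.5]}
```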

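5--Testing

First download the pretrained MiniGPT-4 checkpoint; the author uses Checkpoint Aligned with Vicuna 7B:

Checkpoint Aligned with Vicuna 13B download link

Checkpoint Aligned with Vicuna 7B download link

Next, edit the configuration files used for testing: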

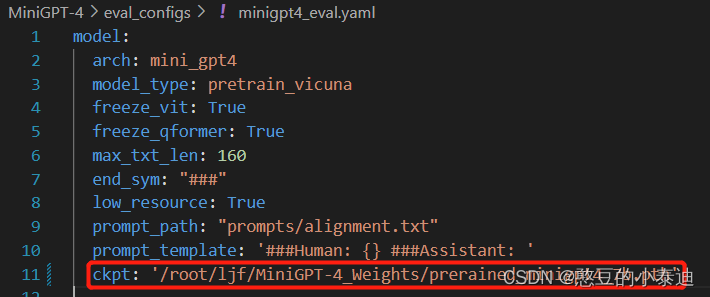

In MiniGPT-4/eval_configs/minigpt4_eval.yaml, set the ckpt path to the location of the Checkpoint Aligned with Vicuna 7B file:

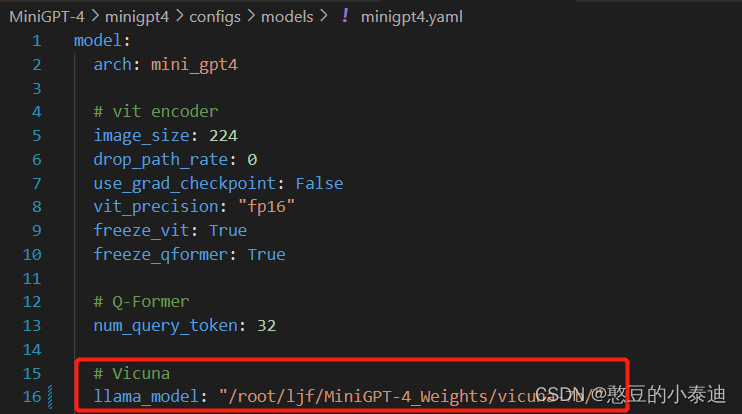

In MiniGPT-4/minigpt4/configs/models/minigpt4.yaml, set llama_model to the directory of the Vicuna weights generated in step 4.
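Both edits change a single key in a YAML file, so they can also be scripted. The sketch below does a plain text substitution with the standard library instead of parsing YAML. The helper names are made up, any trailing comment on the edited line is dropped, and the key is assumed to appear once on a line of its own.

```python
import re


def set_yaml_value(text, key, value):
    """Replace the value on the first `key:` line, keeping its indentation."""
    pattern = re.compile(rf"^([ \t]*){re.escape(key)}[ \t]*:.*$", re.MULTILINE)
    # A function is used as the replacement so that backslashes in paths
    # are not interpreted as regex escapes.
    new_text, count = pattern.subn(
        lambda m: f'{m.group(1)}{key}: "{value}"', text, count=1
    )
    if count == 0:
        raise KeyError(f"{key!r} not found")
    return new_text


def patch_file(path, key, value):
    """Rewrite `path` in place with `key` set to `value`."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_yaml_value(text, key, value))
```

For example, patch_file("eval_configs/minigpt4_eval.yaml", "ckpt", "/path/to/checkpoint.pth") and patch_file("minigpt4/configs/models/minigpt4.yaml", "llama_model", "/path/to/vicuna-7b") would make the two changes, where both /path/to/... values are placeholders for the actual locations on your machine.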

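Run the following command to launch the Mini-GPT4 web demo:

- python demo.py --cfg-path eval_configs/minigpt4_eval.yaml --gpu-id 0

Open the local URL on this machine, or open the public URL from another computer.

You can also modify the provided demo to test inference directly in the terminal, without the web page.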


6--Possible problems

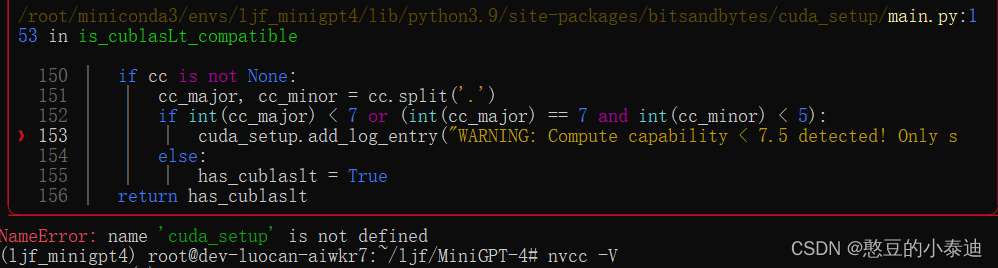

① name ‘cuda_setup’ is not defined:

Solution:

Upgrade the bitsandbytes library; the author resolved the problem with bitsandbytes version 0.38.1:

pip install bitsandbytes==0.38.1
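To see which version is currently installed before changing anything, a short check like the one below can help. It is a sketch using only the standard library. Treating 0.38.1 as a minimum is an assumption made for the example; the author only reports that this exact version worked.

```python
from importlib import metadata


def version_tuple(version):
    """Turn '0.38.1' into (0, 38, 1); stops at the first non-numeric piece."""
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def installed_version(package):
    """Installed version string, or None when the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


found = installed_version("bitsandbytes")
if found is None:
    print("bitsandbytes is not installed")
elif version_tuple(found) < version_tuple("0.38.1"):
    print(f"bitsandbytes {found} is older than 0.38.1")
else:
    print(f"bitsandbytes {found} found")
```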