
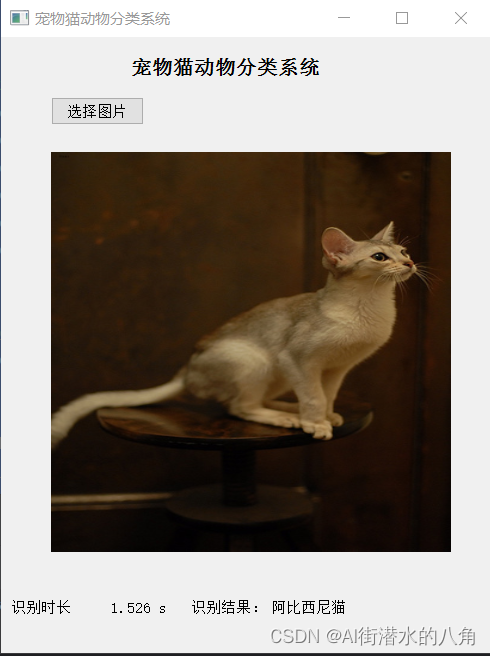

Pet Cat Recognition and Classification System Based on a ConvNeXt Deep Neural Network in PyTorch (Source Code)

Author: 小桥流水78 | 2024-06-30 12:43:27


Step 1: Prepare the data

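The data covers 12 pet cat classes, named in the code as `self.class_indict = ["阿比西尼猫", "豹猫", "伯曼猫", "孟买猫", "英国短毛猫", "埃及猫", "缅因猫", "波斯猫", "布偶猫", "克拉特猫", "泰国暹罗猫", "加拿大无毛猫"]`. There are 2,160 images in total, and each class is stored in its own folder.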

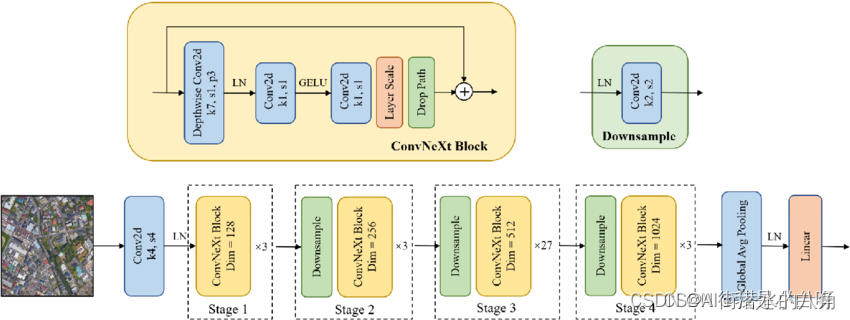
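The training script in Step 3 imports a `read_split_data` helper from the author's `utils` module, which the article does not show. Below is a minimal sketch of what such a helper typically does with a folder-per-class layout. It assumes a random 80/20 split made within each class. The function name `split_by_class_folder`, the `val_rate` and `seed` parameters, and the extension list are illustrative assumptions, not the author's code.

```python
import os
import random
from typing import List, Tuple

IMG_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of image extensions


def split_by_class_folder(root: str, val_rate: float = 0.2, seed: int = 0
                          ) -> Tuple[List[str], List[int], List[str], List[int], List[str]]:
    """Hypothetical helper: every subfolder of `root` holds the images of one class.

    Returns (train_paths, train_labels, val_paths, val_labels, class_names);
    label i corresponds to class_names[i] (folders sorted by name).
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    class_names = sorted(d for d in os.listdir(root)
                         if os.path.isdir(os.path.join(root, d)))
    train_paths, train_labels, val_paths, val_labels = [], [], [], []
    for label, name in enumerate(class_names):
        folder = os.path.join(root, name)
        files = sorted(os.path.join(folder, f) for f in os.listdir(folder)
                       if os.path.splitext(f)[1].lower() in IMG_EXTS)
        # Sample validation images within each class (stratified split),
        # so each class keeps roughly the same share in both splits
        val_set = set(rng.sample(files, k=int(len(files) * val_rate)))
        for path in files:
            if path in val_set:
                val_paths.append(path)
                val_labels.append(label)
            else:
                train_paths.append(path)
                train_labels.append(label)
    return train_paths, train_labels, val_paths, val_labels, class_names
```

Sorting the folder names makes the label numbering deterministic. Whatever helper is actually used, the class-name list shown to users later must follow the same label order as in training.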

Step 2: Build the model

This article uses a ConvNeXt network. Its background is as follows:

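ConvNeXt, whose name stands for a "next-generation" ConvNet, is an important recent development in computer vision. It was proposed by Facebook AI Research in the 2022 paper "A ConvNet for the 2020s". Using only convolutional building blocks, it reaches ImageNet top-1 accuracy comparable to Transformer-based models such as Swin Transformer. This stands out at a time when Transformers have come to dominate vision tasks.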
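The core of ConvNeXt is a residual block that adopts Transformer-style design choices:

- a 7×7 depthwise convolution;
- LayerNorm instead of BatchNorm;
- an inverted bottleneck that expands the channels 4× with pointwise (1×1) layers;
- GELU;
- a learnable per-channel "layer scale" on the residual branch.

Below is a simplified PyTorch sketch of one block. It leaves out stochastic depth (drop path). The class name is illustrative, and the `convnext_tiny` that the training script imports from `model.py` is the author's own code, which may differ in detail.

```python
import torch
import torch.nn as nn


class ConvNeXtBlock(nn.Module):
    """Simplified ConvNeXt block (no drop path), illustrative sketch."""

    def __init__(self, dim: int, layer_scale_init: float = 1e-6):
        super().__init__()
        # 7x7 depthwise convolution: spatial mixing, one filter per channel
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)
        self.norm = nn.LayerNorm(dim)           # LayerNorm over channels
        self.pwconv1 = nn.Linear(dim, 4 * dim)  # pointwise: expand channels 4x
        self.act = nn.GELU()
        self.pwconv2 = nn.Linear(4 * dim, dim)  # pointwise: project back to dim
        # Layer scale: small learnable per-channel factor on the residual branch
        self.gamma = nn.Parameter(layer_scale_init * torch.ones(dim))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        shortcut = x                            # (N, C, H, W)
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)               # (N, H, W, C) for LayerNorm/Linear
        x = self.pwconv2(self.act(self.pwconv1(self.norm(x))))
        x = self.gamma * x
        x = x.permute(0, 3, 1, 2)               # back to (N, C, H, W)
        return shortcut + x                     # residual connection


block = ConvNeXtBlock(dim=96)  # 96 channels, as in the first stage of ConvNeXt-T
out = block(torch.randn(2, 96, 56, 56))
print(out.shape)  # torch.Size([2, 96, 56, 56])
```

The block keeps the tensor shape unchanged. The full network stacks such blocks in four stages, with downsampling layers between the stages.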

Step 3: Training code

1) Loss function: cross-entropy loss

2) Training code:

```python
import os
import argparse

import torch
import torch.optim as optim
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

from my_dataset import MyDataSet
from model import convnext_tiny as create_model
from utils import read_split_data, create_lr_scheduler, get_params_groups, train_one_epoch, evaluate


def main(args):
    device = torch.device(args.device if torch.cuda.is_available() else "cpu")
    print(f"using {device} device.")

    # Directory for saved checkpoints
    os.makedirs("./weights", exist_ok=True)

    tb_writer = SummaryWriter()

    # Split the folder-per-class dataset into training and validation lists
    train_images_path, train_images_label, val_images_path, val_images_label = read_split_data(args.data_path)

    img_size = 224
    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(img_size),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(int(img_size * 1.143)),
                                   transforms.CenterCrop(img_size),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

    # Instantiate the training dataset
    train_dataset = MyDataSet(images_path=train_images_path,
                              images_class=train_images_label,
                              transform=data_transform["train"])

    # Instantiate the validation dataset
    val_dataset = MyDataSet(images_path=val_images_path,
                            images_class=val_images_label,
                            transform=data_transform["val"])

    batch_size = args.batch_size
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))
    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size,
                                               shuffle=True,
                                               pin_memory=True,
                                               num_workers=nw,
                                               collate_fn=train_dataset.collate_fn)

    val_loader = torch.utils.data.DataLoader(val_dataset,
                                             batch_size=batch_size,
                                             shuffle=False,
                                             pin_memory=True,
                                             num_workers=nw,
                                             collate_fn=val_dataset.collate_fn)

    model = create_model(num_classes=args.num_classes).to(device)

    if args.weights != "":
        assert os.path.exists(args.weights), "weights file: '{}' not exist.".format(args.weights)
        weights_dict = torch.load(args.weights, map_location=device)["model"]
        # Drop the classification-head weights: the pretrained head is for the
        # 1000 ImageNet classes, not our 12 classes
        for k in list(weights_dict.keys()):
            if "head" in k:
                del weights_dict[k]
        print(model.load_state_dict(weights_dict, strict=False))

    if args.freeze_layers:
        for name, para in model.named_parameters():
            # Freeze all weights except those of the head
            if "head" not in name:
                para.requires_grad_(False)
            else:
                print("training {}".format(name))

    # Alternative without separate weight-decay groups:
    # pg = [p for p in model.parameters() if p.requires_grad]
    pg = get_params_groups(model, weight_decay=args.wd)
    optimizer = optim.AdamW(pg, lr=args.lr, weight_decay=args.wd)
    lr_scheduler = create_lr_scheduler(optimizer, len(train_loader), args.epochs,
                                       warmup=True, warmup_epochs=1)

    best_acc = 0.
    for epoch in range(args.epochs):
        # train
        train_loss, train_acc = train_one_epoch(model=model,
                                                optimizer=optimizer,
                                                data_loader=train_loader,
                                                device=device,
                                                epoch=epoch,
                                                lr_scheduler=lr_scheduler)

        # validate
        val_loss, val_acc = evaluate(model=model,
                                     data_loader=val_loader,
                                     device=device,
                                     epoch=epoch)

        tags = ["train_loss", "train_acc", "val_loss", "val_acc", "learning_rate"]
        tb_writer.add_scalar(tags[0], train_loss, epoch)
        tb_writer.add_scalar(tags[1], train_acc, epoch)
        tb_writer.add_scalar(tags[2], val_loss, epoch)
        tb_writer.add_scalar(tags[3], val_acc, epoch)
        tb_writer.add_scalar(tags[4], optimizer.param_groups[0]["lr"], epoch)

        # Keep only the checkpoint with the best validation accuracy
        if best_acc < val_acc:
            torch.save(model.state_dict(), "./weights/best_model.pth")
            best_acc = val_acc

    tb_writer.close()


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--num_classes', type=int, default=12)
    parser.add_argument('--epochs', type=int, default=100)
    parser.add_argument('--batch-size', type=int, default=4)
    parser.add_argument('--lr', type=float, default=5e-4)
    parser.add_argument('--wd', type=float, default=5e-2)

    # Root directory of the dataset (one subfolder per class)
    # Note: the URL below is the TensorFlow flower_photos archive, not this cat dataset
    # https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz
    parser.add_argument('--data-path', type=str,
                        default=r"G:\demo\data\cat_data_sets_models\cat_12_train")

    # Path to the pretrained weights; set to an empty string to skip loading them
    # Link: https://pan.baidu.com/s/1aNqQW4n_RrUlWUBNlaJRHA  password: i83t
    parser.add_argument('--weights', type=str, default='./convnext_tiny_1k_224_ema.pth',
                        help='initial weights path')
    # Freeze all weights except the head. A store_true flag is used because
    # type=bool would turn any non-empty string, including "False", into True
    parser.add_argument('--freeze-layers', action='store_true')
    parser.add_argument('--device', default='cuda:0', help='device, e.g. cuda:0 or cpu')

    opt = parser.parse_args()

    main(opt)
```
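`create_lr_scheduler` comes from the author's `utils` module, which the article does not include. Judging only from its arguments (steps per epoch = `len(train_loader)`, `warmup=True, warmup_epochs=1`), it is presumably a per-step schedule with a warmup phase. Below is a hedged sketch of one common choice: linear warmup followed by cosine decay. It is written as the multiplier function that `torch.optim.lr_scheduler.LambdaLR` accepts. The function name and the `warmup_factor`/`end_factor` defaults are assumptions.

```python
import math


def warmup_cosine_factor(step: int, num_step: int, epochs: int,
                         warmup_epochs: int = 1,
                         warmup_factor: float = 1e-3,
                         end_factor: float = 1e-6) -> float:
    """Multiplier for the base learning rate at a given optimizer step (assumed schedule).

    num_step is the number of optimizer steps per epoch (len(train_loader)).
    Linear warmup from warmup_factor to 1 over warmup_epochs, then cosine decay
    from 1 down to end_factor at the final step.
    """
    assert num_step > 0 and epochs > warmup_epochs >= 0
    warmup_steps = warmup_epochs * num_step
    if warmup_steps > 0 and step <= warmup_steps:
        alpha = step / warmup_steps
        return warmup_factor * (1 - alpha) + alpha
    cosine_steps = (epochs - warmup_epochs) * num_step
    progress = (step - warmup_steps) / cosine_steps  # 0 -> 1 over the decay phase
    return (1 + math.cos(math.pi * progress)) / 2 * (1 - end_factor) + end_factor


# Factors at a few steps for 100 epochs of 100 steps each
print([round(warmup_cosine_factor(s, 100, 100), 4) for s in (0, 100, 5050, 10000)])
# [0.001, 1.0, 0.5, 0.0]

# With PyTorch (assumed usage; call scheduler.step() after every optimizer.step()):
# scheduler = torch.optim.lr_scheduler.LambdaLR(
#     optimizer, lr_lambda=lambda s: warmup_cosine_factor(s, len(train_loader), epochs))
```

Because the factor is defined per optimizer step, the scheduler has to be stepped once per batch rather than once per epoch. Passing `lr_scheduler` into `train_one_epoch` suggests the original code does this, but the helper is not shown.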

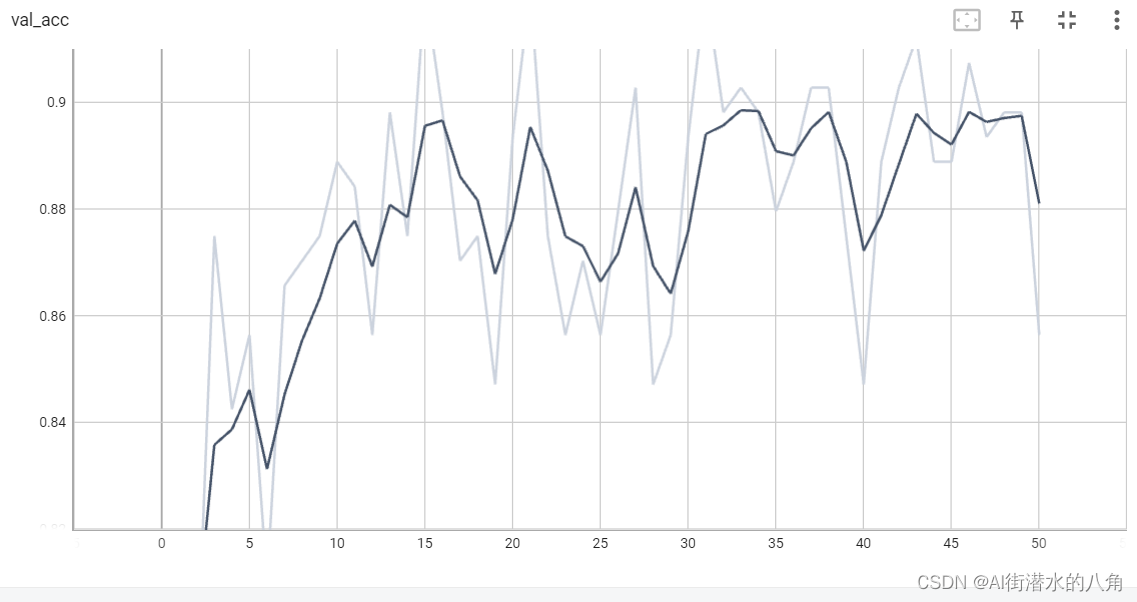
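Consider one image with logit vector z over the classes and true class y. The cross-entropy loss from item 1) is −log softmax(z)_y = log Σ_j exp(z_j) − z_y. Below is a small pure-Python sketch of that formula for one sample. In PyTorch training this is normally computed by `torch.nn.CrossEntropyLoss`, which by default averages the same quantity over the batch. How `train_one_epoch` computes the loss is not shown, so that part is an assumption.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy for one sample: log(sum_j exp(z_j)) - z_target."""
    m = max(logits)  # subtract the max before exp() for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


print(round(cross_entropy([2.0, 1.0, 0.1], target=0), 4))  # 0.417
print(round(cross_entropy([0.0] * 12, target=3), 4))        # 2.4849, i.e. ln(12)
```

The second line gives a quick sanity check for this 12-class task. A model that outputs uniform probabilities has a loss of ln 12 ≈ 2.48, so the training loss in the first epochs should start around that value or below it.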

Step 4: Measure the accuracy

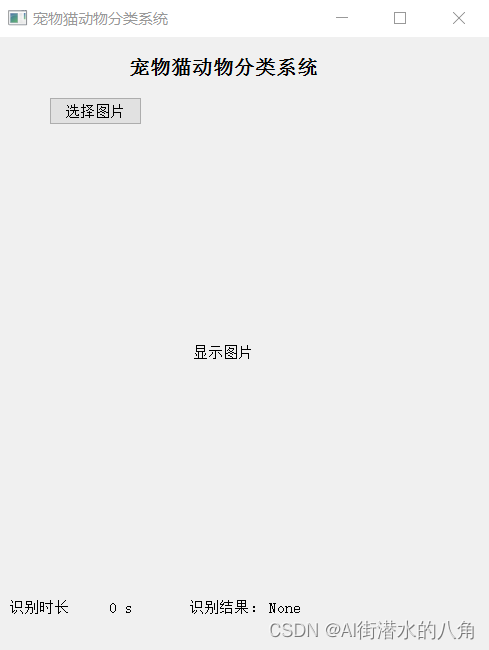
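The accuracy above is only shown as a screenshot. With 12 classes it can help to look at each class's accuracy as well as the overall figure, to see whether some breeds are recognized much worse than others. Below is a minimal sketch. It assumes you already have the true and predicted labels of the validation images as integer lists, for example collected from the model's argmax over the validation loader. The function name `accuracy_report` is hypothetical.

```python
from typing import Dict, List, Sequence


def accuracy_report(y_true: Sequence[int], y_pred: Sequence[int],
                    class_names: List[str]) -> Dict[str, float]:
    """Overall accuracy plus per-class accuracy (fraction of each class predicted correctly)."""
    assert len(y_true) == len(y_pred) > 0
    correct = [0] * len(class_names)
    total = [0] * len(class_names)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    report = {"overall": sum(correct) / len(y_true)}
    for i, name in enumerate(class_names):
        # Classes with no validation samples get NaN instead of a misleading 0 or 1
        report[name] = correct[i] / total[i] if total[i] else float("nan")
    return report


r = accuracy_report([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], ["A", "B", "C"])
print({k: round(v, 3) for k, v in r.items()})
# {'overall': 0.667, 'A': 0.5, 'B': 1.0, 'C': 0.5}
```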

Step 5: Build the GUI

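Step 6: Contents of the full project

The project contains the training code, the trained model and the training process, and it also provides the data and the GUI code.

Code download (link opens in a new window): Pet Cat Recognition and Classification System Based on a ConvNeXt Deep Neural Network in PyTorch (Source Code)

If you have questions, send a private message or leave a comment; every question will be answered.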

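Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and the site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/小桥流水78/article/detail/772623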
