The Most Complete Roundup of Papers on SLAM in Dynamic Environments (Worth Bookmarking)

SLAM in dynamic environments is currently an active research area within SLAM.

Related survey papers:

- A survey: which features are required for dynamic visual simultaneous localization and mapping? Zewen Xu, CAS. 2021

- State of the Art in Real-time Registration of RGB-D Images. Stotko, Patrick. University of Bonn. 2016

- Visual SLAM and Structure from Motion in Dynamic Environments: A Survey. University of Oxford. 2018

- State of the Art on 3D Reconstruction with RGB-D Cameras. Michael Zollhöfer. Stanford University. 2018

Related Article:

- https://www.zhihu.com/question/47817909

- deeplabv3+ slam

- SOF-SLAM: A Semantic Visual SLAM for Dynamic Environments (2019, JCR Q1, 4.076)

Summary of works

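- 1. DynaSLAM (IROS 2018)

Paper: DynaSLAM: Tracking, Mapping and Inpainting in Dynamic Scenes

Code: https://github.com/BertaBescos/DynaSLAM

Main idea (semantic + geometric):

1. Use Mask R-CNN for semantic segmentation;

2. In the low-cost tracking stage, remove the dynamic regions (people) to obtain an initial pose;

3. Use multi-view geometry to identify outliers, then generate dynamic regions by region growing;

4. In the code, both the dynamic regions from multi-view geometry and the segmented person regions are removed, and the resulting mask is passed to ORB-SLAM for tracking;

5. Background inpainting, for both the RGB image and the depth map.

Contribution: Semantic segmentation alone cannot identify objects such as a chair that is being moved, so a multi-view geometry method is needed as a complement.

Discussion: DynaSLAM and DS-SLAM (below) are classic dynamic SLAM systems, and both have concise code. DynaSLAM has two drawbacks: 1. The outliers found by multi-view geometry are grown into dynamic regions on the depth map, so a single dynamic point on an object is enough for the whole object to be "grown" into a dynamic region. 2. The code directly removes both the person regions and the regions found by multi-view geometry, which is inconsistent with the paper.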
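The first drawback in the discussion above can be illustrated with a minimal region-growing sketch. This is not DynaSLAM's implementation: the function name `grow_dynamic_region`, the 4-connectivity, and the `max_depth_diff` threshold are assumptions chosen for illustration, and the depth image is a toy example.

```python
from collections import deque

def grow_dynamic_region(depth, seed, max_depth_diff=0.1):
    """Grow a region from `seed` over 4-connected pixels whose depth differs
    from the already-accepted neighbour by less than `max_depth_diff`."""
    rows, cols = len(depth), len(depth[0])
    mask = [[False] * cols for _ in range(rows)]
    mask[seed[0]][seed[1]] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]:
                if abs(depth[nr][nc] - depth[r][c]) < max_depth_diff:
                    mask[nr][nc] = True
                    queue.append((nr, nc))
    return mask

# Toy depth image in metres: an object at about 1 m in front of a wall at 3 m.
depth = [
    [3.0, 3.0, 3.0, 3.0],
    [3.0, 1.0, 1.02, 3.0],
    [3.0, 1.01, 1.03, 3.0],
    [3.0, 3.0, 3.0, 3.0],
]

# A single outlier at pixel (1, 1) is used as the seed.
mask = grow_dynamic_region(depth, seed=(1, 1))
dynamic_pixel_count = sum(sum(row) for row in mask)
```

In this toy case one seed pixel marks every pixel of the object as dynamic, because the depth is smooth across the object surface. That is the behaviour the discussion criticises.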

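- 2. DS-SLAM (IROS 2018, Tsinghua University)

Paper: DS-SLAM: A Semantic Visual SLAM towards Dynamic Environments

Code: https://github.com/ivipsourcecode/DS-SLAM

Main idea (semantic + geometric):

1. SegNet performs semantic segmentation (in a separate thread);

2. For two consecutive frames, outliers are detected using epipolar geometry;

3. If an object has too many outliers, it is considered dynamic and removed;

4. A semantic octree map is built.

Discussion: The four-thread architecture and the epipolar-constraint outlier check have been adopted by many later papers. The drawbacks are: 1. The epipolar check cannot find all outliers; it fails when an object moves along the epipolar line. 2. Deciding whether an object is moving from the proportion of outliers among its feature points has limitations, because the number of feature points depends heavily on the object's texture. 3. SegNet is a semantic segmentation network proposed in 2016 and its segmentation quality leaves much room for improvement; the experimental results are not as good as DynaSLAM's.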
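The epipolar check and its first limitation can be sketched as follows. This is an illustrative sketch, not DS-SLAM's code: the function names, the 1-pixel threshold, and the toy fundamental matrix (a pure sideways camera translation with identity intrinsics, which gives horizontal epipolar lines) are all assumptions.

```python
import math

def epipolar_distance(F, p1, p2):
    """Distance in pixels from p2 (image 2) to the epipolar line F @ [p1, 1]."""
    x1 = (p1[0], p1[1], 1.0)
    a, b, c = (sum(F[i][j] * x1[j] for j in range(3)) for i in range(3))
    return abs(a * p2[0] + b * p2[1] + c) / math.hypot(a, b)

def is_outlier(F, p1, p2, threshold=1.0):
    """A match is flagged as an outlier when p2 lies too far from its epipolar line."""
    return epipolar_distance(F, p1, p2) > threshold

# Toy fundamental matrix: F = [t]_x for t = (1, 0, 0), identity rotation and intrinsics.
F = [[0.0, 0.0, 0.0],
     [0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0]]

static_match = ((100.0, 50.0), (90.0, 50.0))    # stays on its epipolar line
moving_across = ((100.0, 50.0), (90.0, 58.0))   # moved 8 px off the line
moving_along = ((100.0, 50.0), (60.0, 50.0))    # moved, but along the line

flags = [is_outlier(F, *m) for m in (static_match, moving_across, moving_along)]
```

The third match belongs to a moving point yet passes the check, because its motion keeps it on the epipolar line. This is the failure case named in drawback 1.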

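- 3. Detect-SLAM (IEEE WACV 2018, Peking University)

Paper: Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial

Code: https://github.com/liadbiz/detect-slam

Main idea:

The object detection network cannot run in real time, so detection runs only on keyframes, and its results are propagated to ordinary frames through feature points.

1. Run the SSD detector only on keyframes (yielding bounding boxes with confidences), then use graph cut to remove the background and obtain finer dynamic regions;

2. In ordinary frames, propagate a moving probability to each feature point through two mechanisms, feature matching and matching point expansion, so that every feature point ends up with a moving probability;

3. The object map helps extract candidate regions.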
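The propagation in step 2 can be sketched roughly as below. The update rule is an assumption made for illustration (matched points copy the keyframe probability, unmatched points take the mean of matched neighbours within a radius, and isolated points stay at 0.5); the paper's actual formulas may differ, and `propagate_moving_probability` is a hypothetical name.

```python
import math

def propagate_moving_probability(matches, keyframe_prob, current_points, radius=20.0):
    """matches: {index in current frame: index in keyframe}.
    keyframe_prob: moving probability per keyframe feature.
    current_points: (x, y) pixel position per current-frame feature."""
    # Mechanism 1, feature matching: matched points inherit the keyframe probability.
    known = {cur: keyframe_prob[kf] for cur, kf in matches.items()}
    result = []
    for i, (x, y) in enumerate(current_points):
        if i in known:
            result.append(known[i])
            continue
        # Mechanism 2, matching point expansion: borrow from nearby matched points.
        nearby = [known[j] for j in known
                  if math.hypot(x - current_points[j][0], y - current_points[j][1]) <= radius]
        result.append(sum(nearby) / len(nearby) if nearby else 0.5)
    return result

keyframe_prob = [0.9, 0.1]                      # feature 0 lies on a detected person
current_points = [(10.0, 10.0), (200.0, 200.0), (15.0, 12.0), (400.0, 50.0)]
matches = {0: 0, 1: 1}                          # features 2 and 3 are unmatched

probs = propagate_moving_probability(matches, keyframe_prob, current_points)
```

Feature 2 sits next to the matched dynamic feature and inherits its high probability, while feature 3 has no matched neighbour and stays undecided.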

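- 4. VDO-SLAM (arXiv 2020)

Paper: VDO-SLAM: A Visual Dynamic Object-aware SLAM System

Code: https://github.com/halajun/vdo_slam

Main idea:

1. Moving object tracking; a fairly complete system that combines SLAM with motion tracking;

2. Optical flow + semantic segmentation.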

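- 5. Co-Fusion (ICRA 2017)

Paper: Co-Fusion: Real-time Segmentation, Tracking and Fusion of Multiple Objects

Code: https://github.com/martinruenz/co-fusion

Main idea: Learn and maintain a 3D model for each object, and improve the models by fusing observations over time. This is a classic system that many papers use as a comparison baseline.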

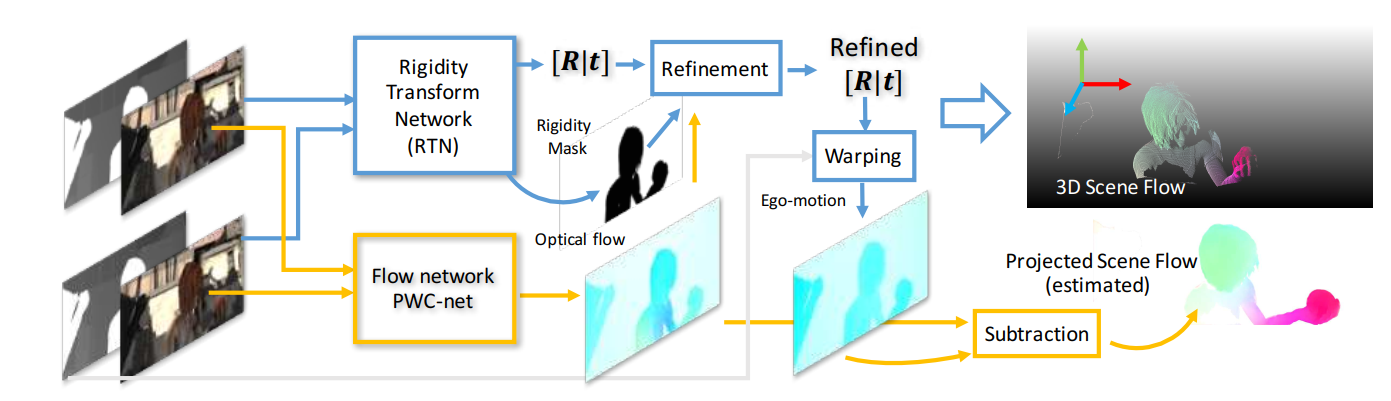

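- 6. Learning Rigidity in Dynamic Scenes with a Moving Camera for 3D Motion Field Estimation (ECCV 2018, NVIDIA)

Paper: Learning Rigidity in Dynamic Scenes with a Moving Camera for 3D Motion Field Estimation

Code: https://github.com/NVlabs/learningrigidity.git

Main idea:

1. The RTN network estimates the camera pose and the rigid regions, and the PWC network estimates dense optical flow;

2. Based on these results, estimate the relative pose of the rigid regions;

3. Compute the 3D scene flow of the rigid bodies;

The authors also developed REFRESH, a tool for generating semi-synthetic dynamic scenes.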
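One way to read the scene-flow step above: once the camera motion is known, the scene flow of a point is the part of its 3D displacement that the camera motion does not explain. The sketch below assumes the 3D points have already been back-projected from depth and matched by optical flow; `apply_pose` and `scene_flow` are hypothetical helper names, and this is not the paper's network.

```python
def apply_pose(R, t, X):
    """Map a 3D point from frame-1 to frame-2 camera coordinates."""
    return tuple(sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3))

def scene_flow(R, t, X1, X2):
    """X1: point in frame-1 camera coordinates; X2: its match in frame-2 coordinates.
    Returns the displacement left over after compensating for camera motion."""
    predicted = apply_pose(R, t, X1)          # where X1 would be if it were static
    return tuple(X2[i] - predicted[i] for i in range(3))

# Assumed camera motion: no rotation, static points shift by -0.5 m along x.
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = (-0.5, 0.0, 0.0)

X1 = (1.0, 0.0, 2.0)
static_X2 = (0.5, 0.0, 2.0)    # fully explained by the camera motion
moving_X2 = (0.75, 0.0, 2.0)   # the point itself also moved 0.25 m along x

static_flow = scene_flow(R, t, X1, static_X2)
moving_flow = scene_flow(R, t, X1, moving_X2)
```

Static points yield a zero vector, so a non-zero residual marks independent motion.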

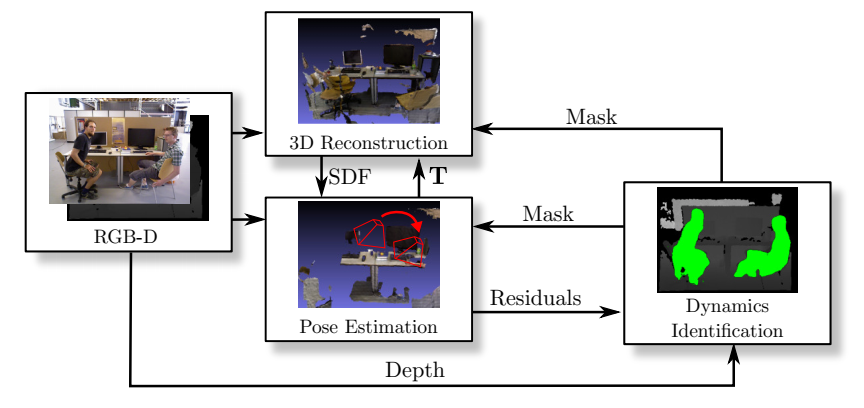

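- 7. ReFusion (IROS 2019)

Paper: ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals

Code: https://github.com/PRBonn/refusion

Main idea:

Mainstream dynamic SLAM methods rely on neural networks for instance segmentation. This requires predefining which object classes may be dynamic and training extensively on datasets, which greatly limits the scenarios where they can be used. ReFusion instead segments dynamic regions with a purely geometric method. Specifically, building on the KinectFusion dense SLAM system, it computes the residual of each pixel, obtains rough dynamic regions by adaptive thresholding, and refines them with morphological processing into the final dynamic regions. At the same time it produces a TSDF map of the static background.

Discussion: One of the few dynamic SLAM systems that does not use a neural network.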
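The residual-to-mask pipeline described above (threshold, then morphological cleanup) can be sketched in plain Python. The threshold rule `mean + k * std` and the 3x3 morphological opening are assumed stand-ins; the text does not specify ReFusion's exact threshold or structuring element, and all function names are hypothetical.

```python
def adaptive_threshold(residual, k=1.0):
    """Mark pixels whose residual exceeds mean + k * std of the whole image."""
    values = [v for row in residual for v in row]
    mean = sum(values) / len(values)
    std = (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5
    return [[v > mean + k * std for v in row] for row in residual]

def morph(mask, combine):
    """3x3 morphology: combine=all gives erosion, combine=any gives dilation.
    Neighbours outside the image are ignored."""
    rows, cols = len(mask), len(mask[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [mask[nr][nc]
                      for nr in range(max(r - 1, 0), min(r + 2, rows))
                      for nc in range(max(c - 1, 0), min(c + 2, cols))]
            out_row.append(combine(window))
        out.append(out_row)
    return out

def segment_dynamic(residual, k=1.0):
    rough = adaptive_threshold(residual, k)
    return morph(morph(rough, all), any)   # opening removes isolated noisy pixels

# Toy residual map: a 3x3 dynamic blob plus one isolated noisy pixel.
residual = [[0.01] * 6 for _ in range(6)]
for r in range(1, 4):
    for c in range(1, 4):
        residual[r][c] = 0.5
residual[5][5] = 0.5

dynamic_mask = segment_dynamic(residual)
dynamic_count = sum(sum(row) for row in dynamic_mask)
```

The opening keeps the 3x3 blob and discards the isolated pixel, which is the role the morphological step plays after thresholding.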

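- 8. RGB_D-SLAM-with-SWIAICP (2017)

Paper: RGB-D SLAM in Dynamic Environments Using Static Point Weighting

Code: https://github.com/VitoLing/RGB_D-SLAM-with-SWIAICP

Main idea:

1. Track using only foreground edge points (Foreground Depth Edge Extraction);

2. Insert a keyframe every n frames, and compute the pose between the current frame and the keyframe;

3. Compute a static/dynamic score for each point from its projection error, which provides the per-point weights for the IAICP step below;

4. Propose IAICP, an ICP variant that incorporates intensity information, to compute the frame-to-frame pose.

Discussion: The edge points of foreground objects represent the whole object well, and the experimental results are refreshing. However, the ICP algorithm used in the paper is not the one commonly used in edge-based SLAM, which usually describes the error of edge points with a distance transform (DT) and thereby avoids point-to-point matching. The paper "Robust RGB-D visual odometry based on edges and points" proposes an interesting scheme: compute the pose from feature points and describe dynamic regions with edge points, drawing on the strengths of both.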
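The static weighting in step 3 can be sketched as a robust weight computed from per-point residuals. The exact weighting function is not given above, so the Student-t style weight below, the median/MAD scale estimate, and the name `static_weights` are assumptions for illustration only.

```python
def static_weights(residuals, nu=10.0):
    """Student-t style weights: points whose residual is far from the median
    (relative to a robust scale estimate) get a weight close to zero."""
    ordered = sorted(residuals)
    median = ordered[len(ordered) // 2]
    deviations = sorted(abs(r - median) for r in residuals)
    # 1.4826 * MAD approximates the standard deviation for Gaussian noise.
    sigma = max(1.4826 * deviations[len(deviations) // 2], 1e-6)
    return [(nu + 1.0) / (nu + ((r - median) / sigma) ** 2) for r in residuals]

# Assumed residuals in metres: four static points and one point on a moving object.
residuals = [0.01, 0.02, 0.015, 0.012, 0.5]
weights = static_weights(residuals)
```

With these weights, a weighted ICP objective is dominated by the static points, and the moving point contributes almost nothing to the pose estimate.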

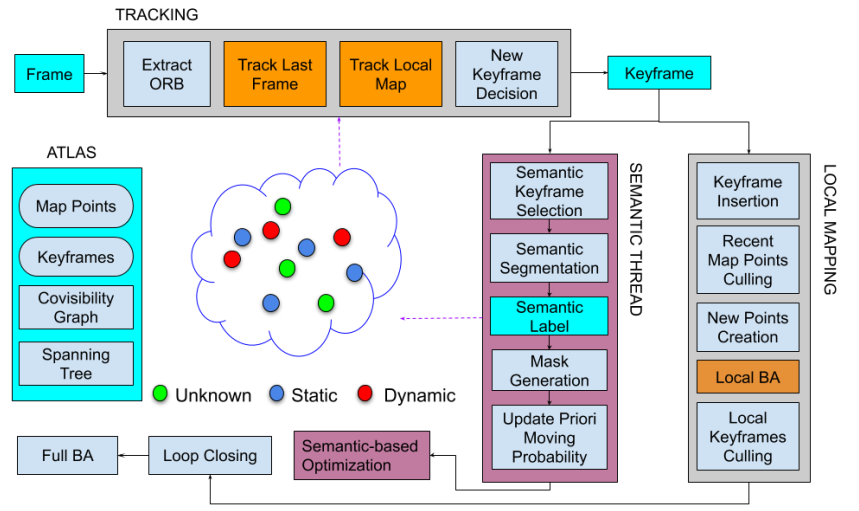

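- 9. RDS-SLAM (IEEE Access, 2021)

Paper: RDS-SLAM: Real-Time Dynamic SLAM Using Semantic Segmentation Methods

Code: https://github.com/yubaoliu/RDS-SLAM.git

Main idea: Overcome the problem that semantic segmentation cannot run in real time.

1. Select the most recent keyframes for semantic segmentation;

2. Bayesian propagation of the moving probability;

3. Obtain the outliers of the current frame from the previous frame and the local map;

4. Compute the pose with weights based on the moving probability.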
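Steps 2 and 4 above can be sketched with a binary Bayes update per map point. The likelihood values `p_hit` and `p_false`, the weight rule `1 - p`, and both function names are assumptions for illustration; they are not taken from the RDS-SLAM paper or code.

```python
def bayes_update(prior, observed_dynamic, p_hit=0.9, p_false=0.2):
    """Update the moving probability of a map point from one segmentation result.
    p_hit   = P(labelled dynamic | point is moving)
    p_false = P(labelled dynamic | point is static)"""
    if observed_dynamic:
        like_moving, like_static = p_hit, p_false
    else:
        like_moving, like_static = 1.0 - p_hit, 1.0 - p_false
    numerator = like_moving * prior
    return numerator / (numerator + like_static * (1.0 - prior))

def pose_weight(moving_probability):
    """Weight of a point's reprojection error in pose estimation."""
    return 1.0 - moving_probability

# A map point starts undecided and is labelled dynamic in two segmented keyframes.
p0 = 0.5
p1 = bayes_update(p0, observed_dynamic=True)
p2 = bayes_update(p1, observed_dynamic=True)
p_static = bayes_update(p0, observed_dynamic=False)
```

Each consistent observation pushes the probability further from 0.5, so points repeatedly seen on segmented dynamic objects gradually lose influence on the pose.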

- Reliable works

- DS-SLAM, DynaSLAM: https://github.com/zhuhu00/DS-SLAM_modify; https://blog.csdn.net/qq_41623632/article/details/112911046

Dynamic Object Detection and Removal

- Pfreundschuh, Patrick, et al. “Dynamic Object Aware LiDAR SLAM Based on Automatic Generation of Training Data.” (ICRA 2021)

- Canovas Bruce, et al. “Speed and Memory Efficient Dense RGB-D SLAM in Dynamic Scenes.” (IROS 2020)

- Yuan Xun and Chen Song. “SaD-SLAM: A Visual SLAM Based on Semantic and Depth Information.” (IROS 2020)

  - USTC, code, video

- Dong, Erqun, et al. “Pair-Navi: Peer-to-Peer Indoor Navigation with Mobile Visual SLAM.” (ICCC 2019)

- Ji Tete, et al. “Towards Real-Time Semantic RGB-D SLAM in Dynamic Environments.” (ICRA 2021)

- Palazzolo Emanuele, et al. “ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals.” (IROS 2019)

- Arora Mehul, et al. Mapping the Static Parts of Dynamic Scenes from 3D LiDAR Point Clouds Exploiting Ground Segmentation. p. 6.

- Chen Xieyuanli, et al. “Moving Object Segmentation in 3D LiDAR Data: A Learning-Based Approach Exploiting Sequential Data.” IEEE Robotics and Automation Letters, 2021

- Zhang Tianwei, et al. “FlowFusion: Dynamic Dense RGB-D SLAM Based on Optical Flow.” (ICRA 2020)

  - code, video

- Zhang Tianwei, et al. “AcousticFusion: Fusing Sound Source Localization to Visual SLAM in Dynamic Environments.” (IROS 2021)

  - video. Incorporates audio signals.

- Liu Yubao and Miura Jun. “RDS-SLAM: Real-Time Dynamic SLAM Using Semantic Segmentation Methods.” IEEE Access 2021

- Liu Yubao and Miura Jun. “RDMO-SLAM: Real-Time Visual SLAM for Dynamic Environments Using Semantic Label Prediction With Optical Flow.” IEEE Access, vol. 9, 2021, pp. 106981–97. IEEE Xplore, https://doi.org/10.1109/ACCESS.2021.3100426.

  - code, video

- Cheng Jiyu, et al. “Improving Visual Localization Accuracy in Dynamic Environments Based on Dynamic Region Removal.” IEEE Transactions on Automation Science and Engineering, vol. 17, no. 3, July 2020, pp. 1585–96. IEEE Xplore, https://doi.org/10.1109/TASE.2020.2964938.

- Soares João Carlos Virgolino, et al. “Crowd-SLAM: Visual SLAM Towards Crowded Environments Using Object Detection.” Journal of Intelligent & Robotic Systems 2021

  - code, video

- Kaveti Pushyami and Singh Hanumant. “A Light Field Front-End for Robust SLAM in Dynamic Environments.”

- Kuen-Han Lin and Chieh-Chih Wang. “Stereo-Based Simultaneous Localization, Mapping and Moving Object Tracking.” IROS 2010

- Fu, H.; Xue, H.; Hu, X.; Liu, B. LiDAR Data Enrichment by Fusing Spatial and Temporal Adjacent Frames. Remote Sens. 2021, 13, 3640.

- Qian, Chenglong, et al. RF-LIO: Removal-First Tightly-Coupled Lidar Inertial Odometry in High Dynamic Environments. p. 8. IROS 2021, XJTU

- K. Minoda, F. Schilling, V. Wüest, D. Floreano, and T. Yairi, “VIODE: A Simulated Dataset to Address the Challenges of Visual-Inertial Odometry in Dynamic Environments,” RAL 2021

  - A dataset for dynamic environments that covers scenes ranging from static to several levels of dynamics; it seems well suited for validation.

  - University of Tokyo, code

- W. Dai, Y. Zhang, P. Li, Z. Fang, and S. Scherer, “RGB-D SLAM in Dynamic Environments Using Point Correlations,” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–1, 2020

  - Zhejiang University; removes dynamic points using point correlations.

- C. Huang, H. Lin, H. Lin, H. Liu, Z. Gao, and L. Huang, “YO-VIO: Robust Multi-Sensor Semantic Fusion Localization in Dynamic Indoor Environments,” in 2021 International Conference on Indoor Positioning and Indoor Navigation (IPIN), 2021.

  - Uses YOLO and optical flow to identify moving objects, then localizes after removing their feature points

  - Combined with VIO

- Dynamic-VINS: RGB-D Inertial Odometry for a Resource-restricted Robot in Dynamic Environments.

Dynamic Object Detection and Tracking

- “AirDOS: Dynamic SLAM benefits from Articulated Objects,” Qiu Yuheng, et al. (arXiv 2021)

- “DOT: Dynamic Object Tracking for Visual SLAM.” Ballester, Irene, et al. (ICRA 2021)

  - code, video, University of Zaragoza, Vision

- Liu Yubao and Miura Jun. “RDMO-SLAM: Real-Time Visual SLAM for Dynamic Environments Using Semantic Label Prediction With Optical Flow.” IEEE Access.

- Kim Aleksandr, et al. “EagerMOT: 3D Multi-Object Tracking via Sensor Fusion.” (ICRA 2021)

- Shan, Mo, et al. “OrcVIO: Object Residual Constrained Visual-Inertial Odometry.” (IROS 2020)

- Rosen, David M., et al. “Towards Lifelong Feature-Based Mapping in Semi-Static Environments.” (ICRA 2016)

- Henein Mina, et al. “Dynamic SLAM: The Need For Speed.” (ICRA 2020)

- Zhang Jun, et al. “VDO-SLAM: A Visual Dynamic Object-Aware SLAM System.” (arXiv 2020)

- Jun Zhang, Mina Henein, Robert Mahony and Viorela Ila. “Robust Ego and Object 6-DoF Motion Estimation and Tracking.” (IROS 2020)

  - code

- Minoda, Koji, et al. “VIODE: A Simulated Dataset to Address the Challenges of Visual-Inertial Odometry in Dynamic Environments.” (RAL 2021)

- Vincent, Jonathan, et al. “Dynamic Object Tracking and Masking for Visual SLAM.” (IROS 2020)

  - code, video

- Huang, Jiahui, et al. “ClusterVO: Clustering Moving Instances and Estimating Visual Odometry for Self and Surroundings.” (CVPR 2020)

- Liu, Yuzhen, et al. “A Switching-Coupled Backend for Simultaneous Localization and Dynamic Object Tracking.” (RAL 2021)

  - Tsinghua

- Yang Charig, et al. “Self-Supervised Video Object Segmentation by Motion Grouping.” (ICCV 2021)

- Long Ran, et al. “RigidFusion: Robot Localisation and Mapping in Environments with Large Dynamic Rigid Objects.” (RAL 2021)

  - project page, code, video

- Yang Bohong, et al. “Multi-Classes and Motion Properties for Concurrent Visual SLAM in Dynamic Environments.” IEEE Transactions on Multimedia, 2021

- Yang Gengshan and Ramanan Deva. “Learning to Segment Rigid Motions from Two Frames.” CVPR 2021

- Thomas Hugues, et al. “Learning Spatiotemporal Occupancy Grid Maps for Lifelong Navigation in Dynamic Scenes.”

  - code

- Jung Dongki, et al. “DnD: Dense Depth Estimation in Crowded Dynamic Indoor Scenes.” (ICCV 2021)

  - code, video

- Luiten Jonathon, et al. “Track to Reconstruct and Reconstruct to Track.” (RAL+ICRA 2020)

- Grinvald, Margarita, et al. “TSDF++: A Multi-Object Formulation for Dynamic Object Tracking and Reconstruction.” (ICRA 2021)

- Wang Chieh-Chih, et al. “Simultaneous Localization, Mapping and Moving Object Tracking.” The International Journal of Robotics Research 2007

- Ran Teng, et al. “RS-SLAM: A Robust Semantic SLAM in Dynamic Environments Based on RGB-D Sensor.”

- Xu Hua, et al. “OD-SLAM: Real-Time Localization and Mapping in Dynamic Environment through Multi-Sensor Fusion.” (ICARM 2020) https://doi.org/10.1109/ICARM49381.2020.9195374.

- Wimbauer Felix, et al. “MonoRec: Semi-Supervised Dense Reconstruction in Dynamic Environments from a Single Moving Camera.” (CVPR 2021)

  - project page, code, video, video 2

- Liu Yu, et al. “Dynamic RGB-D SLAM Based on Static Probability and Observation Number.” IEEE Transactions on Instrumentation and Measurement, vol. 70, 2021, pp. 1–11. IEEE Xplore, https://doi.org/10.1109/TIM.2021.3089228.

- P. Li, T. Qin, and S. Shen, “Stereo Vision-based Semantic 3D Object and Ego-motion Tracking for Autonomous Driving,” arXiv 2018

  - Prof. Shaojie Shen's group

- G. B. Nair et al., “Multi-object Monocular SLAM for Dynamic Environments,” IV 2020

- M. Rünz and L. Agapito, “Co-fusion: Real-time segmentation, tracking and fusion of multiple objects,” in 2017 IEEE International Conference on Robotics and Automation (ICRA), May 2017, pp. 4471–4478.

  - code

- TwistSLAM: Constrained SLAM in Dynamic Environment

  - Follow-up to S3LAM; uses panoptic segmentation as the detection front end

Researchers
