Chinese Text Preprocessing

Author: 小小林熬夜学编程 | 2024-04-01 20:34:02

Preface: this post walks through preprocessing Chinese text, including word segmentation and stop-word removal.

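%pwd  # Jupyter magic: print the current working directory; the relative paths below are resolved against it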

1. Import the data

import jieba
import numpy as np
import pandas as pd
import csv

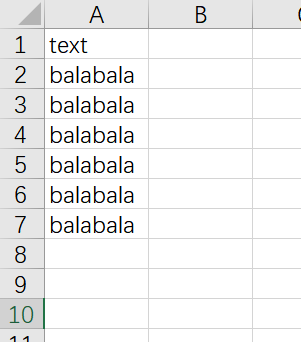

The file data_zh.xlsx must contain a column named text, with one document per row.

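xlsx_file = pd.read_excel('./data_zh.xlsx')
# to_csv returns None when given a path, so its result is not assigned.
# By default it also writes the row index as an unnamed first column
# (likely the column that section 3.3 renames to 'order' and drops).
xlsx_file.to_csv('./data_zh.csv', encoding='utf-8')
csv_file = pd.read_csv('./data_zh.csv', low_memory=False, encoding='utf-8')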

# Load the csv data into a pandas DataFrame
df_file = pd.DataFrame(csv_file)
# Show the first 5 rows
df_file.head()
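Before moving on, it can help to confirm that the text column really exists and has no empty cells, since an empty cell is read as NaN (a float) and cannot be iterated character by character in the next step. A minimal sketch, assuming a hypothetical helper named check_text_column and made-up sample data:

```python
import pandas as pd

def check_text_column(df, column="text"):
    """Raise if `column` is absent; otherwise return a copy without missing rows."""
    if column not in df.columns:
        raise KeyError(f"expected a column named {column!r}, found {list(df.columns)}")
    cleaned = df.dropna(subset=[column]).copy()
    # Make sure every remaining cell is a string
    cleaned[column] = cleaned[column].astype(str)
    # Renumber rows 0..n-1 so later index-based joins line up
    return cleaned.reset_index(drop=True)

# Made-up sample: the second document is missing
sample = pd.DataFrame({"title": ["a", "b", "c"],
                       "text": ["第一篇文档", None, "第三篇文档"]})
checked = check_text_column(sample)
print(checked)
```

Whether to drop or fill missing rows depends on the data; dropping is only one option.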

2. Data preprocessing

2.1 Remove non-Chinese characters

def format_str(document):
    '''Keep only Chinese characters (U+4E00 through U+9FA5).'''
    content_str = ''
    for _char in document:
        # Anything outside the range (letters, digits, punctuation) is dropped
        if u'\u4e00' <= _char <= u'\u9fa5':
            content_str = content_str + _char
    return content_str
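The same filter can also be written with a regular expression, which avoids building the string one character at a time. A minimal sketch, assuming the same U+4E00 to U+9FA5 range; the name keep_chinese is hypothetical:

```python
import re

# Same range as format_str: U+4E00 through U+9FA5
CHINESE_RUN = re.compile(r"[\u4e00-\u9fa5]+")

def keep_chinese(document):
    """Return only the Chinese characters of `document`, in their original order."""
    return "".join(CHINESE_RUN.findall(document))

print(keep_chinese("abc中文123,测试!"))
```

Full-width punctuation such as , and ! falls outside the range, so it is removed along with Latin letters and digits.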

# Read the text column and collect all documents into a list
document_list = list(df_file['text'])
chinese_list = []
for document in document_list:
    chinese_list.append(format_str(document))
chinese_list

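2.2 Word segmentation with jieba

The custom dictionary dict_my.txt has one entry per line, in this format:

词 3 n

Each entry has three parts: the word (词 here is a placeholder), its frequency, and its part-of-speech tag (optional; n means noun).

For how the frequency value is interpreted, see https://github.com/fxsjy/jieba/issues/14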

jieba.load_userdict("./dict_my.txt")

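A malformed dictionary line is easy to miss, so it may be worth checking the file before loading it. The sketch below is a hypothetical validator for the "word frequency tag" layout described above, assuming fields are separated by single spaces and that frequency and tag may both be left out. It is not jieba's own parser, which may accept more or less than this.

```python
import re

# Hypothetical pattern for "word [frequency] [tag]", single-space separated
ENTRY = re.compile(r"^(?P<word>\S+)(?: (?P<freq>\d+))?(?: (?P<tag>[a-z]+))?$")

def parse_entry(line):
    """Split one dictionary line into (word, freq, tag); freq and tag may be None."""
    match = ENTRY.match(line.strip())
    if match is None:
        raise ValueError(f"malformed dictionary line: {line!r}")
    freq = match.group("freq")
    return match.group("word"), int(freq) if freq else None, match.group("tag")

print(parse_entry("词 3 n"))
print(parse_entry("知识图谱"))
```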

word_lists = []
for document in chinese_list:
    # jieba.cut returns a generator of words; materialize it as a list
    word_lists.append(list(jieba.cut(document)))
word_lists

2.3 Load the stop-word list

The stop-word file stopwords_my.txt lists one stop word per line:

word1
word2
word3
...

# Load the stop words
with open("./stopwords_my.txt", 'r', encoding='utf-8') as f:
    # strip() also removes a trailing '\r' or stray spaces; blank lines are skipped
    stop_word_list = [line.strip() for line in f if line.strip()]

contents_clean = []
for word_list in word_lists:
    line_clean = []
    for word in word_list:
        # Skip any word that appears in the stop-word list
        if word in stop_word_list:
            continue
        line_clean.append(word)
    contents_clean.append(line_clean)
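With a long stop-word list, checking membership against a list gets slow, because each lookup scans the whole list. A minimal sketch of the same filtering with a set; the function name and sample tokens are made up for illustration:

```python
def remove_stop_words(token_lists, stop_words):
    """Drop every token that appears in stop_words from each token list."""
    stop_set = set(stop_words)  # average O(1) lookup, versus O(n) for a list
    return [[tok for tok in tokens if tok not in stop_set]
            for tokens in token_lists]

sample_tokens = [["我们", "的", "模型"], ["的", "数据", "了"]]
sample_stops = ["的", "了"]
print(remove_stop_words(sample_tokens, sample_stops))
```

The result has the same shape as contents_clean: one list of kept words per document.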

# The token lists after stop-word removal
contents_clean

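2.4 Show high-frequency words

List the most frequent words, then manually add the frequent but meaningless ones to the stop-word file.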

# Merge the segmented results into a single list
all_words = []
for line in contents_clean:
    for word in line:
        all_words.append(word)
all_words

# Count word frequencies
from collections import Counter
wordcount = Counter(all_words)
# The 50 most frequent (word, count) pairs, most frequent first
word_count = wordcount.most_common(50)
# Keep only the words
frequence_list = [word for word, _ in word_count]
frequence_list
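Flattening and counting can be combined into one step. A small sketch on made-up token lists, assuming a hypothetical helper named top_words:

```python
from collections import Counter
from itertools import chain

def top_words(token_lists, n):
    """Return the n most frequent tokens across all documents, most frequent first."""
    counts = Counter(chain.from_iterable(token_lists))
    return counts.most_common(n)

docs = [["数据", "模型", "数据"], ["模型", "数据", "训练"]]
print(top_words(docs, 2))
```

Keeping the counts next to the words makes it easier to judge which frequent words are worth adding to the stop-word file.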

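# Collect the high-frequency words that are not in the stop-word list.
# Note: contents_clean was already filtered against stop_word_list in 2.3,
# so this check only removes words if stop_word_list has been reloaded since.
need_to_add_stopword = []
for i in frequence_list:
    if i not in stop_word_list:
        need_to_add_stopword.append(i)
# Print the high-frequency words that are not in the stop-word list
print("\n".join(str(i) for i in need_to_add_stopword))
# Copy the meaningless words from this output into stopwords_my.txt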
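Copying words by hand works, but the update can also be scripted once the words to add have been chosen. The sketch below is one possible helper (append_stop_words is a hypothetical name) that appends only the words not already in the file. It runs against a temporary file here so that the real stopwords_my.txt is not touched.

```python
import os
import tempfile

def append_stop_words(path, new_words):
    """Append words missing from the file at `path`; return the words added."""
    with open(path, "r", encoding="utf-8") as f:
        words = [line.strip() for line in f if line.strip()]
    seen = set(words)
    added = []
    for word in new_words:
        if word not in seen:
            seen.add(word)
            added.append(word)
    # Rewrite the file with one word per line (blank lines are not kept)
    with open(path, "w", encoding="utf-8") as f:
        f.write("".join(w + "\n" for w in words + added))
    return added

with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "stopwords_demo.txt")
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("的\n了\n")
    added = append_stop_words(demo_path, ["了", "进行", "进行"])
    with open(demo_path, encoding="utf-8") as f:
        final_words = f.read().split()

print(added, final_words)
```

After updating the file, the stop-word list has to be reloaded and the filtering in 2.3 rerun for the change to take effect.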

2.5 Join the segmented words

word_list = []
for words in contents_clean:
    # One space-separated string per document (no trailing space)
    word_list.append(' '.join(words))
word_list

3. Merge the data

3.1 Write the segmentation results to a DataFrame

word_data=pd.DataFrame({'text1':word_list})

word_data.head()


3.2 Join the two DataFrames

# Join the two DataFrames on their index
data = df_file.join(word_data)
# Show the joined result
data.head()
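One point worth keeping in mind: join pairs rows by index label, not by position. Here both frames carry the default 0..n-1 index, so the rows line up. A small sketch on made-up data of what happens when rows have been filtered out of one side:

```python
import pandas as pd

left = pd.DataFrame({"text": ["文档一", "文档二", "文档三"]})
right = pd.DataFrame({"text1": ["文档 一", "文档 二", "文档 三"]})

# Same default index on both sides: rows pair up one-to-one
aligned = left.join(right)

# After filtering, the left index is [0, 2]; join still matches on those labels
filtered = left[left["text"] != "文档二"]
by_label = filtered.join(right)

# Resetting the index renumbers the rows to [0, 1], so the second row
# is now paired with the wrong entry from `right`
by_position = filtered.reset_index(drop=True).join(right)

print(by_label)
print(by_position)
```

If any rows are dropped between loading and segmentation, both frames should be built from the same filtered data so the indexes still agree.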

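3.3 Tidy the new DataFrame

# Rename the columns as needed; 'jiebatext' holds the preprocessed text.
# The list must contain exactly one name per column of data.
data.columns = ['order', 'title', 'text', 'jiebatext']
data.head()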

# Delete the column that is not needed
del data['order']

# Inspect the final DataFrame
data.head()

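4. Write the results to a CSV file and export to Excel

In my testing, the R implementation of the Structural Topic Model (STM) works best with xlsx input, because csv input can fail on delimiter and similar issues.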

data.to_csv(path_or_buf='./data_zh_done.csv', index=False, encoding='utf-8')


csv_file = pd.read_csv('./data_zh_done.csv', low_memory=False, encoding='utf-8')
# to_excel no longer accepts an encoding argument (removed in pandas 2.0)
csv_file.to_excel('./data_zh_done.xlsx', index=False)
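One plausible cause of the delimiter errors mentioned above is that the raw text column can contain commas and quotation marks, which a reader that simply splits on commas will mishandle. The sketch below uses Python's standard csv module on made-up rows to show the difference between a naive split and a CSV-aware parse. It only illustrates the issue and is not a diagnosis of the R-side error.

```python
import csv
import io

rows = [["title", "text"],
        ["示例", "数据, 模型 训练"],
        ["引号", '他 说 "你好"']]

# Write with every field quoted, so embedded commas and quotes are protected
buffer = io.StringIO()
writer = csv.writer(buffer, quoting=csv.QUOTE_ALL)
writer.writerows(rows)

# A naive split on commas breaks the second row into three pieces
naive_fields = buffer.getvalue().splitlines()[1].split(",")

# A CSV-aware reader recovers the original two fields per row
parsed = list(csv.reader(io.StringIO(buffer.getvalue())))

print(len(naive_fields), parsed[1])
```

Exporting to xlsx, as done above, sidesteps the question because cell boundaries are stored explicitly.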
