
PyTorch + Text-CNN + Word2vec: Movie Review Sentiment Classification in Practice

Author: 小丑西瓜9 | 2024-03-31 23:50:13


0. Preface

Reference: another blogger's post. I wrote my own version so that I can easily revisit it later.

1. Movie review dataset

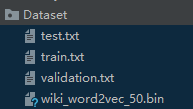

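Dataset download link: https://pan.baidu.com/s/1zultY2ODRFaW3XiQFS-36w

Extraction code: mgh2. The archive contains four files; extract it and put the resulting folder in the project directory.

The training set is too large, so I train on the test split instead.

2. Loading the data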

    import pandas as pd

    # Load the data: one review per line, label and text separated by a tab
    train_data = pd.read_csv('./Dataset/test.txt', names=['label', 'review'], sep='\t')
    train_labels = train_data['label']
    train_reviews = train_data['review']

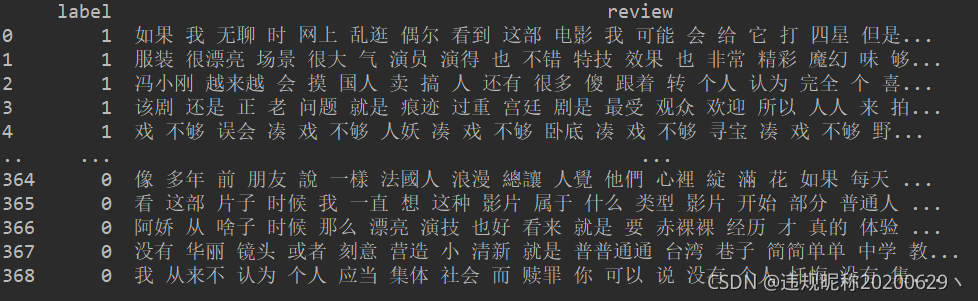

The training data contains 369 reviews.

    # Number of space-separated words in each review
    comments_len = train_data.iloc[:, 1].apply(lambda x: len(x.split(' ')))
    print(comments_len)
    train_data['comments_len'] = comments_len
    # Summary statistics, including the median and the 95th percentile
    print(train_data['comments_len'].describe(percentiles=[.5, .95]))

train_data.iloc[:, 1].apply(lambda x: len(x.split(' '))) takes the second column of train_data (index 1, i.e. review) and returns the number of words in each review.
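The same word-count and percentile idea can be sketched without pandas. This is only an illustration: the helper names and the toy reviews are made up, and the nearest-rank percentile used here can differ slightly from the interpolated value that pandas' describe reports.

```python
import math


def word_count(review):
    """Number of space-separated tokens in a pre-segmented review."""
    return len(review.split(' '))


def percentile_nearest_rank(values, p):
    """p-th percentile (integer p, 0 < p <= 100) by the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Toy data standing in for the review column
reviews = ['好 看', '一般 般 吧', '太 烂 了 不 推荐']
lengths = [word_count(r) for r in reviews]
print(lengths)                               # [2, 3, 5]
print(percentile_nearest_rank(lengths, 95))  # 5
```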

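The output shows that 95% of the reviews contain at most 63 words, so we cap each review at max_sent = 63 words: longer reviews have their tail cut off, and shorter ones are padded.

3. Data preprocessing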
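The cut-or-pad rule fits in a few lines of plain Python. The helper name pad_or_truncate and the sample ids are illustrative and not part of the original code.

```python
def pad_or_truncate(ids, max_len, pad_id):
    """Return a list of exactly max_len ids: cut the tail or append pad_id."""
    if len(ids) > max_len:
        return ids[:max_len]
    return ids + [pad_id] * (max_len - len(ids))


short = pad_or_truncate([4, 8, 15], max_len=5, pad_id=0)
long = pad_or_truncate([4, 8, 15, 16, 23, 42], max_len=5, pad_id=0)
print(short)  # [4, 8, 15, 0, 0]
print(long)   # [4, 8, 15, 16, 23]
```

With max_len=63 every review ends up with the same length, which is what lets the whole dataset be stacked into a single [369, 63] tensor later on.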

    from collections import Counter

    max_sent = 63  # maximum number of words kept per review (see section 2)


    def text_process(review):
        """
        Preprocess the data.
        :param review: the review data, train_reviews
        :return: vocabulary words, word-to-id dict word2id, id-to-word dict id2word, pad_sentencesid
        """
        words = []
        for i in range(len(review)):
            words += review[i].split(' ')

        # Write the more frequent words to word_freq.txt
        with open('./Dataset/word_freq.txt', 'w', encoding='utf-8') as f:
            # Without an argument, Counter(words).most_common() returns every word
            # with its count, most frequent first
            for word, freq in Counter(words).most_common():
                if freq > 1:
                    f.write(word + '\n')

        # Read the words back
        with open('./Dataset/word_freq.txt', encoding='utf-8') as f:
            words = [i.strip() for i in f]

        # Deduplicate (this is the vocabulary)
        words = list(set(words))
        # word-to-id dict
        word2id = {j: i for i, j in enumerate(words)}
        # id-to-word dict
        id2word = {i: j for i, j in enumerate(words)}

        # id of a neutral word, used for padding (assumes '把' occurs more than once in the data)
        pad_id = word2id['把']

        sentences = [i.split(' ') for i in review]
        # All sentences after padding, every word represented by its id
        pad_sentencesid = []
        for i in sentences:
            # Words missing from the vocabulary are replaced by pad_id
            temp = [word2id.get(j, pad_id) for j in i]
            if len(i) > max_sent:
                # Longer than max_sent: cut off the tail
                temp = temp[:max_sent]
            else:
                # Shorter than max_sent: pad with pad_id
                for j in range(max_sent - len(i)):
                    temp.append(pad_id)
            pad_sentencesid.append(temp)
        return words, word2id, id2word, pad_sentencesid

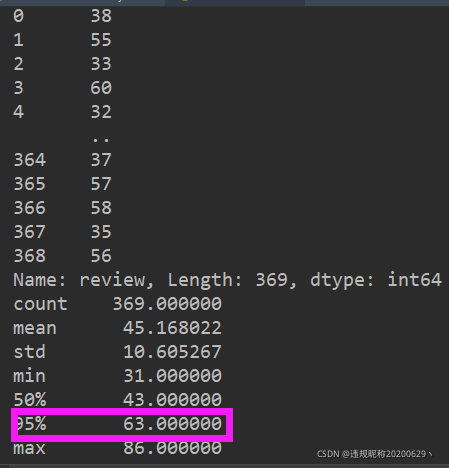

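First, every word from every review is collected into words. The words with freq > 1 are written to word_freq.txt and then read back into words. The threshold is up to you, and the step can be skipped altogether. It pays off when the dataset is large, and the words that survive the filter are largely the ones that matter for sentiment classification.

Every word with freq <= 1 is replaced by the character "把". Which word is used does not really matter, as long as it does not influence the classification result. "把" is a neutral function word, so it should have little effect on sentiment classification.

Then each sentence is processed: if it has more than max_sent words the tail is cut off; otherwise it is padded with pad_id, the id of the neutral word.

4. Getting the data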
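The vocabulary filter and the rare-word replacement can also be sketched entirely in memory, without the round trip through word_freq.txt. The function names build_vocab and encode and the toy reviews are hypothetical; only the freq > 1 threshold and the use of "把" as the fallback come from the text above.

```python
from collections import Counter


def build_vocab(tokenized_reviews, min_freq=2):
    """Keep words seen at least min_freq times; ids follow descending frequency."""
    counts = Counter(w for review in tokenized_reviews for w in review)
    vocab = [w for w, c in counts.most_common() if c >= min_freq]
    word2id = {w: i for i, w in enumerate(vocab)}
    id2word = {i: w for w, i in word2id.items()}
    return vocab, word2id, id2word


def encode(review, word2id, pad_id):
    """Map each word to its id; rare or unseen words fall back to pad_id."""
    return [word2id.get(w, pad_id) for w in review]


# Toy, already tokenized reviews
reviews = [['把', '电影', '好看'], ['把', '电影', '难看'], ['好看', '推荐']]
vocab, word2id, id2word = build_vocab(reviews)
pad_id = word2id['把']

print(sorted(vocab))
print(encode(['电影', '难看', '推荐'], word2id, pad_id))
```

Here "难看" and "推荐" each occur only once, so both are encoded as pad_id, exactly like the freq <= 1 words described above.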

    import torch
    import torch.utils.data as Data
    import numpy as np
    from gensim.models import KeyedVectors

    # Hyperparameters
    Batch_Size = 32
    Embedding_Size = 50  # word-vector dimension
    Filter_Num = 10      # number of convolution filters
    Dropout = 0.5
    Epochs = 60
    LR = 0.01

    # Load the pretrained Word2vec vectors
    w2v = KeyedVectors.load_word2vec_format('./Dataset/wiki_word2vec_50.bin', binary=True)


    def get_data(labels, reviews):
        words, word2id, id2word, pad_sentencesid = text_process(reviews)
        x = torch.from_numpy(np.array(pad_sentencesid))  # [369, 63]
        y = torch.from_numpy(np.array(labels))           # [369]
        dataset = Data.TensorDataset(x, y)
        data_loader = Data.DataLoader(dataset=dataset, batch_size=Batch_Size)

        # Walk through the vocabulary: words that already have a vector in w2v are
        # left alone, the others get a randomly generated vector
        for word in words:
            if word not in w2v:
                w2v[word] = np.random.randn(Embedding_Size).astype(np.float32)
        return data_loader, id2word

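Key point: some words in the reviews may be missing from w2v. They have to be given random vectors, otherwise looking them up raises a KeyError.

5. Converting words to word vectors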
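The idea of filling in missing vectors can be shown with a plain dict standing in for the loaded model. This is a sketch under that assumption; fill_missing_vectors is a made-up helper, and the real code works on the gensim object instead.

```python
import random


def fill_missing_vectors(vectors, vocab, dim, seed=0):
    """Add a random Gaussian vector for every vocab word missing from vectors."""
    rng = random.Random(seed)
    added = []
    for word in vocab:
        if word not in vectors:
            vectors[word] = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            added.append(word)
    return added


# A plain dict standing in for the pretrained model (assumption for this sketch)
pretrained = {'电影': [0.1, 0.2, 0.3]}
added = fill_missing_vectors(pretrained, ['电影', '烂片'], dim=3)

print(added)                    # ['烂片']
print(len(pretrained['烂片']))  # 3
```

Existing vectors are never overwritten, so the pretrained information is kept and only the gaps are filled.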

    def word2vec(x):  # [batch_size, 63]
        """
        Convert every word in the sentences to its word vector.
        :param x: batch_size sentences, given as word ids
        :return: the word vectors of the batch_size sentences
        """
        batch_size = x.shape[0]
        x_embedding = np.ones((batch_size, x.shape[1], Embedding_Size))  # [batch_size, 63, 50]
        for i in range(len(x)):
            # item() converts a one-element tensor to a Python number
            x_embedding[i] = w2v[[id2word[j.item()] for j in x[i]]]
        return torch.tensor(x_embedding).to(torch.float32)

This converts every word of every sentence in the batch into its word vector.

6. Model
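The shape change from [batch_size, seq_len] ids to [batch_size, seq_len, dim] vectors can be seen on a tiny example with nested lists. The two-dimensional vectors and the embed_batch helper are invented for illustration only.

```python
def embed_batch(batch_ids, id2word, vectors):
    """Map [batch, seq_len] word ids to [batch, seq_len, dim] vectors."""
    return [[vectors[id2word[i]] for i in sentence] for sentence in batch_ids]


# Toy lookup tables (assumed values)
id2word = {0: '把', 1: '电影'}
vectors = {'把': [0.0, 0.0], '电影': [0.5, -0.5]}

batch = [[1, 0, 0], [0, 1, 1]]  # 2 sentences, 3 word ids each
embedded = embed_batch(batch, id2word, vectors)
print(len(embedded), len(embedded[0]), len(embedded[0][0]))  # 2 3 2
```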

    import torch.nn as nn


    class TextCNN(nn.Module):
        def __init__(self):
            super(TextCNN, self).__init__()
            self.conv = nn.Sequential(
                # The input is 4-D ([batch, 1, 63, 50]) and the kernel is 2-D,
                # so the layer has to be Conv2d rather than Conv1d
                nn.Conv2d(1, Filter_Num, (2, Embedding_Size)),
                nn.ReLU(),
                nn.MaxPool2d((max_sent - 1, 1))
            )
            self.dropout = nn.Dropout(Dropout)
            self.fc = nn.Linear(Filter_Num, 2)

        def forward(self, X):  # [batch_size, 63]
            batch_size = X.shape[0]
            X = word2vec(X)             # [batch_size, 63, 50]
            X = X.unsqueeze(1)          # [batch_size, 1, 63, 50]
            X = self.conv(X)            # [batch_size, 10, 1, 1]
            X = X.view(batch_size, -1)  # [batch_size, 10]
            X = self.dropout(X)         # [batch_size, 10]
            X = self.fc(X)              # [batch_size, 2]
            # Return raw scores (logits): nn.CrossEntropyLoss applies log-softmax itself
            return X

This is the TextCNN network model.

7. Training
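The shape comments in the model follow from the usual output-size formula floor((n + 2p - k) / s) + 1 for a sliding window. The small check below applies it to the kernel and pooling sizes used here; window_out is a hypothetical helper, not a PyTorch function.

```python
def window_out(size, kernel, stride=1, padding=0):
    """Output length along one axis of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


max_sent, embedding_size = 63, 50

# Convolution with a (2, 50) kernel over a (63, 50) input, stride 1
conv_h = window_out(max_sent, 2)
conv_w = window_out(embedding_size, embedding_size)

# Max pooling with a (62, 1) window; MaxPool2d's stride defaults to the kernel size
pool_h = window_out(conv_h, max_sent - 1, stride=max_sent - 1)
pool_w = window_out(conv_w, 1, stride=1)

print((conv_h, conv_w), (pool_h, pool_w))  # (62, 1) (1, 1)
```

So each of the 10 filters yields a single number per review, which is why the flattened tensor has shape [batch_size, 10].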

    if __name__ == '__main__':
        data_loader, id2word = get_data(train_labels, train_reviews)
        text_cnn = TextCNN()
        optimizer = torch.optim.Adam(text_cnn.parameters(), lr=LR)
        loss_fuc = nn.CrossEntropyLoss()

        print("+++++++++++start train+++++++++++")
        for epoch in range(Epochs):
            for step, (batch_x, batch_y) in enumerate(data_loader):
                # Forward pass
                predicted = text_cnn(batch_x)
                loss = loss_fuc(predicted, batch_y)

                # Backward pass
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

                # Accuracy on the current training batch
                # dim=0 takes the maximum of each column, dim=1 the maximum of each row
                # torch.max()[0] holds the maximum values, torch.max()[1] their indices
                predicted = torch.max(predicted, dim=1)[1].numpy()
                label = batch_y.numpy()
                accuracy = sum(predicted == label) / label.size
                if step % 30 == 0:
                    print('epoch:', epoch, ' | train loss:%.4f' % loss.item(), ' | train accuracy:', accuracy)
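One detail worth keeping in mind with this loss: nn.CrossEntropyLoss expects raw scores (logits) and applies log-softmax internally. If the network output has already gone through a softmax, the softmax is effectively applied twice. The plain-Python sketch below mimics the per-sample computation to show the effect; the helper functions and the sample logits are assumptions made for the illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target):
    """Per-sample cross-entropy on raw scores: -log(softmax(scores)[target])."""
    return -math.log(softmax(scores)[target])


logits = [4.0, -4.0]  # a confident, correct prediction for class 0
loss_from_logits = cross_entropy(logits, 0)
loss_from_probs = cross_entropy(softmax(logits), 0)  # softmax applied twice

print(round(loss_from_logits, 4))
print(round(loss_from_probs, 4))
```

With two classes, probabilities lie in [0, 1], so the doubly-softmaxed loss can never drop below log(1 + e^-1), roughly 0.313, however confident the prediction is. That compresses the gradients and tends to slow training down.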

8. Full code

    # encoding: utf-8
    # Author: codewen
    import pandas as pd
    from collections import Counter
    import torch
    import torch.nn as nn
    import torch.utils.data as Data
    import numpy as np
    from gensim.models import KeyedVectors

    # Load the data
    train_data = pd.read_csv('./Dataset/test.txt', names=['label', 'review'], sep='\t')
    train_labels = train_data['label']
    train_reviews = train_data['review']

    # 95% of the reviews have at most 63 words
    comments_len = train_data.iloc[:, 1].apply(lambda x: len(x.split(' ')))
    print(comments_len)
    train_data['comments_len'] = comments_len
    print(train_data['comments_len'].describe(percentiles=[.5, .95]))

    # Sentences longer than max_sent are truncated, shorter ones are padded with '把'
    max_sent = 63


    def text_process(reviews):
        """
        Preprocess the data.
        :param reviews: the review data, train_reviews
        :return: vocabulary words, word-to-id dict word2id, id-to-word dict id2word, pad_sentencesid
        """
        words = []
        for i in range(len(reviews)):
            words += reviews[i].split(' ')

        # Write the more frequent words to word_freq.txt
        with open('./Dataset/word_freq.txt', 'w', encoding='utf-8') as f:
            # Without an argument, Counter(words).most_common() returns every word
            # with its count, most frequent first
            for word, freq in Counter(words).most_common():
                if freq > 1:
                    f.write(word + '\n')

        # Read the words back
        with open('./Dataset/word_freq.txt', encoding='utf-8') as f:
            words = [i.strip() for i in f]

        # Deduplicate (this is the vocabulary)
        words = list(set(words))
        # word-to-id dict
        word2id = {j: i for i, j in enumerate(words)}
        # id-to-word dict
        id2word = {i: j for i, j in enumerate(words)}

        # id of a neutral word, used for padding (assumes '把' occurs more than once in the data)
        pad_id = word2id['把']

        sentences = [i.split(' ') for i in reviews]
        # All sentences after padding, every word represented by its id
        pad_sentencesid = []
        for i in sentences:
            # Words missing from the vocabulary are replaced by pad_id
            temp = [word2id.get(j, pad_id) for j in i]
            if len(i) > max_sent:
                # Longer than max_sent: cut off the tail
                temp = temp[:max_sent]
            else:
                # Shorter than max_sent: pad with pad_id
                for j in range(max_sent - len(i)):
                    temp.append(pad_id)
            pad_sentencesid.append(temp)
        return words, word2id, id2word, pad_sentencesid


    # Hyperparameters
    Batch_Size = 32
    Embedding_Size = 50  # word-vector dimension
    Filter_Num = 10      # number of convolution filters
    Dropout = 0.5
    Epochs = 60
    LR = 0.01

    # Load the pretrained Word2vec vectors
    w2v = KeyedVectors.load_word2vec_format('./Dataset/wiki_word2vec_50.bin', binary=True)


    def get_data(labels, reviews):
        words, word2id, id2word, pad_sentencesid = text_process(reviews)
        x = torch.from_numpy(np.array(pad_sentencesid))  # [369, 63]
        y = torch.from_numpy(np.array(labels))           # [369]
        dataset = Data.TensorDataset(x, y)
        data_loader = Data.DataLoader(dataset=dataset, batch_size=Batch_Size)

        # Walk through the vocabulary: words that already have a vector in w2v are
        # left alone, the others get a randomly generated vector
        for word in words:
            if word not in w2v:
                w2v[word] = np.random.randn(Embedding_Size).astype(np.float32)
        return data_loader, id2word


    def word2vec(x):  # [batch_size, 63]
        """
        Convert every word in the sentences to its word vector.
        :param x: batch_size sentences, given as word ids
        :return: the word vectors of the batch_size sentences
        """
        batch_size = x.shape[0]
        x_embedding = np.ones((batch_size, x.shape[1], Embedding_Size))  # [batch_size, 63, 50]
        for i in range(len(x)):
            # item() converts a one-element tensor to a Python number
            x_embedding[i] = w2v[[id2word[j.item()] for j in x[i]]]
        return torch.tensor(x_embedding).to(torch.float32)


    class TextCNN(nn.Module):
        def __init__(self):
            super(TextCNN, self).__init__()
            self.conv = nn.Sequential(
                # The input is 4-D ([batch, 1, 63, 50]) and the kernel is 2-D,
                # so the layer has to be Conv2d rather than Conv1d
                nn.Conv2d(1, Filter_Num, (2, Embedding_Size)),
                nn.ReLU(),
                nn.MaxPool2d((max_sent - 1, 1))
            )
            self.dropout = nn.Dropout(Dropout)
            self.fc = nn.Linear(Filter_Num, 2)

        def forward(self, X):  # [batch_size, 63]
            batch_size = X.shape[0]
            X = word2vec(X)             # [batch_size, 63, 50]
            X = X.unsqueeze(1)          # [batch_size, 1, 63, 50]
            X = self.conv(X)            # [batch_size, 10, 1, 1]
            X = X.view(batch_size, -1)  # [batch_size, 10]
            X = self.dropout(X)         # [batch_size, 10]
            X = self.fc(X)              # [batch_size, 2]
            # Return raw scores (logits): nn.CrossEntropyLoss applies log-softmax itself
            return X


    if __name__ == '__main__':
        data_loader, id2word = get_data(train_labels, train_reviews)
        text_cnn = TextCNN()
        optimizer = torch.optim.Adam(text_cnn.parameters(), lr=LR)
        loss_fuc = nn.CrossEntropyLoss()

        print("+++++++++++start train+++++++++++")
        for epoch in range(Epochs):
            for step, (batch_x, batch_y) in enumerate(data_loader):
                # Forward pass
                predicted = text_cnn(batch_x)
                loss = loss_fuc(predicted, batch_y)

                # Backward pass
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

                # Accuracy on the current training batch
                # dim=0 takes the maximum of each column, dim=1 the maximum of each row
                # torch.max()[0] holds the maximum values, torch.max()[1] their indices
                predicted = torch.max(predicted, dim=1)[1].numpy()
                label = batch_y.numpy()
                accuracy = sum(predicted == label) / label.size
                if step % 30 == 0:
                    print('epoch:', epoch, ' | train loss:%.4f' % loss.item(), ' | train accuracy:', accuracy)

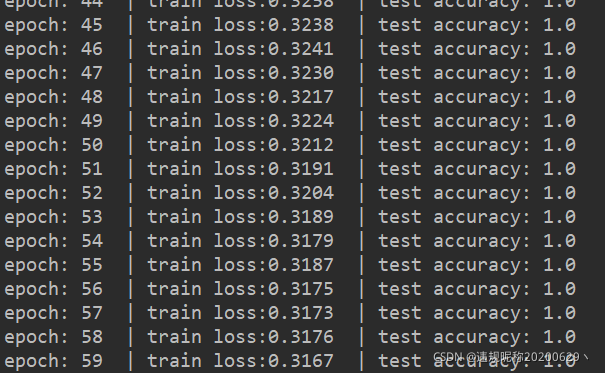

Training finishes after about one minute.
