
RoBERTa Source Code Reading


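```python
from transformers import RobertaForMaskedLM, RobertaTokenizer

tokenizer = RobertaTokenizer.from_pretrained('microsoft/codebert-base-mlm')
model = RobertaForMaskedLM.from_pretrained('microsoft/codebert-base-mlm')
# The tokenizer returns a dict-like BatchEncoding (input_ids, attention_mask);
# padding=True pads the shorter sentence to the length of the longer one.
input_ids = tokenizer(['Language model is what I need.', 'I love China'], padding=True, return_tensors='pt')
out = model(**input_ids)
```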

Starting point: `RobertaForMaskedLM` in `modeling_roberta.py`.

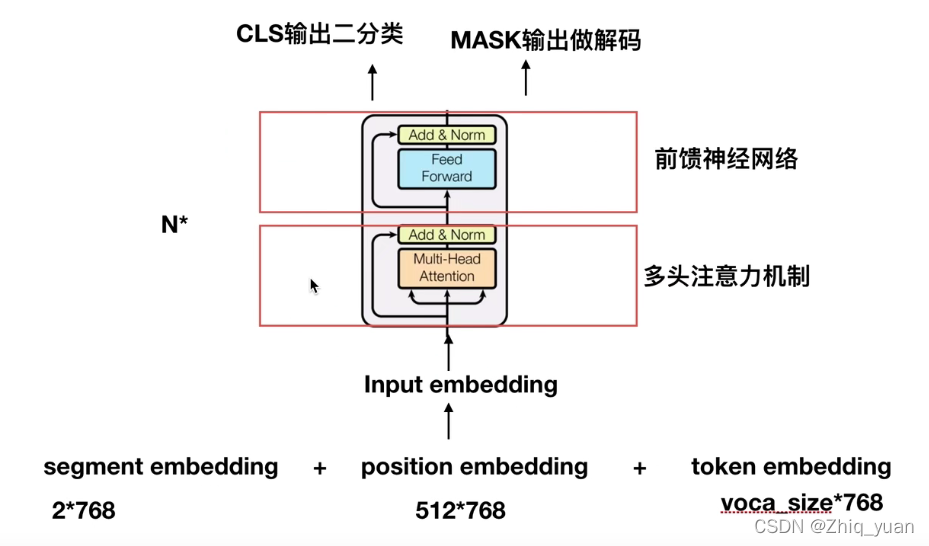

From there we step into `RobertaModel`. Its first module is `RobertaEmbeddings`, which turns the token ids into vectors.

A: In `RobertaEmbeddings`, embedding_out = inputs_embeds + position_embeddings + token_type_embeddings, followed by LayerNorm and dropout. The shape of embedding_out is (batch_size, sequence_length, hidden_size). For an explanation of the parameters, see "【NLP】Transformers 源码阅读和实践" (Transformers source code reading and practice) on fengdu78's CSDN blog. It is a very good post.
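The sum above can be sketched in plain PyTorch. This is a minimal, simplified sketch and not the library class. `make_position_ids` and `SketchEmbeddings` are hypothetical names, the sizes are toy values, and it assumes `padding_idx=1` (the `<pad>` id in the roberta-base vocabulary).

It does mirror one RoBERTa-specific detail. Position ids start at `padding_idx + 1`, and padded positions keep `padding_idx`. This is why roberta-base has 514 position slots for 512 tokens.

```python
import torch
from torch import nn


def make_position_ids(input_ids: torch.Tensor, padding_idx: int) -> torch.Tensor:
    # Real tokens get positions padding_idx+1, padding_idx+2, ...; pads keep padding_idx.
    mask = input_ids.ne(padding_idx).long()
    return torch.cumsum(mask, dim=1) * mask + padding_idx


class SketchEmbeddings(nn.Module):
    """Hypothetical, simplified stand-in for RobertaEmbeddings (toy sizes)."""

    def __init__(self, vocab_size=100, hidden_size=16, max_positions=32,
                 type_vocab_size=1, padding_idx=1, dropout=0.1, layer_norm_eps=1e-5):
        super().__init__()
        self.padding_idx = padding_idx
        self.word_embeddings = nn.Embedding(vocab_size, hidden_size, padding_idx=padding_idx)
        self.position_embeddings = nn.Embedding(max_positions, hidden_size, padding_idx=padding_idx)
        self.token_type_embeddings = nn.Embedding(type_vocab_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size, eps=layer_norm_eps)
        self.dropout = nn.Dropout(dropout)

    def forward(self, input_ids):
        position_ids = make_position_ids(input_ids, self.padding_idx)
        token_type_ids = torch.zeros_like(input_ids)  # RoBERTa uses a single segment type
        x = (self.word_embeddings(input_ids)
             + self.position_embeddings(position_ids)
             + self.token_type_embeddings(token_type_ids))
        return self.dropout(self.LayerNorm(x))  # (batch_size, sequence_length, hidden_size)


ids = torch.tensor([[0, 5, 6, 2, 1, 1]])  # <s> tok tok </s> <pad> <pad>, assuming <pad> id is 1
print(make_position_ids(ids, padding_idx=1))  # tensor([[2, 3, 4, 5, 1, 1]])
print(SketchEmbeddings()(ids).shape)          # torch.Size([1, 6, 16])
```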

B: Next comes `RobertaEncoder`.

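```python
# Inside RobertaEncoder.__init__: the encoder is a stack of config.num_hidden_layers
# RobertaLayer modules (12 for roberta-base / codebert-base).
self.layer = nn.ModuleList([RobertaLayer(config) for _ in range(config.num_hidden_layers)])
```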
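To see what this `ModuleList` does at run time, here is a hedged sketch of the loop in the encoder's `forward`. It swaps in PyTorch's built-in `nn.TransformerEncoderLayer` (post-LayerNorm, GELU) as a stand-in for `RobertaLayer`. `SketchEncoder` and the toy sizes are hypothetical. The point is that each layer consumes the previous layer's output, and the shape (batch_size, sequence_length, hidden_size) never changes.

```python
import torch
from torch import nn


class SketchEncoder(nn.Module):
    """Hypothetical stand-in for RobertaEncoder: a stack of identical layers."""

    def __init__(self, num_hidden_layers=12, hidden_size=64, num_heads=4, intermediate_size=256):
        super().__init__()
        # nn.TransformerEncoderLayer replaces RobertaLayer here (an assumption for illustration).
        self.layer = nn.ModuleList([
            nn.TransformerEncoderLayer(
                d_model=hidden_size, nhead=num_heads, dim_feedforward=intermediate_size,
                dropout=0.1, activation="gelu", batch_first=True,
            )
            for _ in range(num_hidden_layers)
        ])

    def forward(self, hidden_states, key_padding_mask=None):
        # key_padding_mask: (batch, seq) bool tensor, True marks padding to ignore.
        for layer_module in self.layer:
            hidden_states = layer_module(hidden_states, src_key_padding_mask=key_padding_mask)
        return hidden_states


encoder = SketchEncoder()
x = torch.randn(2, 7, 64)
out = encoder(x)
print(len(encoder.layer), out.shape)  # 12 torch.Size([2, 7, 64])
```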

B.1: Next we enter `RobertaLayer`, and from there `RobertaAttention`.

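B.2: Inside `RobertaAttention`, the call `self.self(...)` enters `RobertaSelfAttention`. `RobertaAttention` is the class behind the `attention` attribute of each layer and is the core of the Transformer's self-attention. It has two members: `RobertaSelfAttention` (`self.self`) and `RobertaSelfOutput` (`self.output`).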

```python
# Reproduced from an older transformers release (newer ones also pass past_key_value);
# the import paths below may differ across transformers versions.
from torch import nn
from transformers.models.roberta.modeling_roberta import RobertaSelfAttention, RobertaSelfOutput
from transformers.pytorch_utils import find_pruneable_heads_and_indices, prune_linear_layer


class RobertaAttention(nn.Module):
    def __init__(self, config):
        super().__init__()
        self.self = RobertaSelfAttention(config)  # computes Q, K, V and the attention context
        self.output = RobertaSelfOutput(config)   # dense + dropout + residual LayerNorm
        self.pruned_heads = set()

    def prune_heads(self, heads):
        if len(heads) == 0:
            return
        heads, index = find_pruneable_heads_and_indices(
            heads, self.self.num_attention_heads, self.self.attention_head_size, self.pruned_heads
        )

        # Prune linear layers
        self.self.query = prune_linear_layer(self.self.query, index)
        self.self.key = prune_linear_layer(self.self.key, index)
        self.self.value = prune_linear_layer(self.self.value, index)
        self.output.dense = prune_linear_layer(self.output.dense, index, dim=1)

        # Update hyper params and store pruned heads
        self.self.num_attention_heads = self.self.num_attention_heads - len(heads)
        self.self.all_head_size = self.self.attention_head_size * self.self.num_attention_heads
        self.pruned_heads = self.pruned_heads.union(heads)

    def forward(
        self,
        hidden_states,
        attention_mask=None,
        head_mask=None,
        encoder_hidden_states=None,
        encoder_attention_mask=None,
        output_attentions=False,
    ):
        # This is where we enter RobertaSelfAttention
        self_outputs = self.self(
            hidden_states,
            attention_mask,
            head_mask,
            encoder_hidden_states,
            encoder_attention_mask,
            output_attentions,
        )
        attention_output = self.output(self_outputs[0], hidden_states)
        outputs = (attention_output,) + self_outputs[1:]  # add attentions if we output them
        return outputs
```
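The line `attention_output = self.output(self_outputs[0], hidden_states)` is where the residual connection happens. Below is a minimal sketch of it. It assumes the standard BERT-style post-LN design that `RobertaSelfOutput` follows: a dense projection, dropout, then LayerNorm over the sum with the layer input. `SketchSelfOutput` is a hypothetical name and the sizes are toy values.

```python
import torch
from torch import nn


class SketchSelfOutput(nn.Module):
    """Hypothetical re-creation of what self.output(...) computes in RobertaAttention."""

    def __init__(self, hidden_size=64, layer_norm_eps=1e-5, dropout=0.1):
        super().__init__()
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size, eps=layer_norm_eps)
        self.dropout = nn.Dropout(dropout)

    def forward(self, hidden_states, input_tensor):
        # Project the concatenated head outputs, then residual add + LayerNorm (post-LN).
        hidden_states = self.dropout(self.dense(hidden_states))
        return self.LayerNorm(hidden_states + input_tensor)


attn_context = torch.randn(2, 7, 64)  # plays the role of self_outputs[0]
layer_input = torch.randn(2, 7, 64)   # plays the role of hidden_states
out = SketchSelfOutput().eval()(attn_context, layer_input)
print(out.shape)  # torch.Size([2, 7, 64])
```

Because the residual is added before normalization, every position comes out with zero mean across the hidden dimension (before LayerNorm's learned scale and shift move away from their initial values).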

B.3: `RobertaSelfAttention` computes Q, K and V, applies scaled dot-product attention per head, and returns the output.
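The Q/K/V step can be sketched as multi-head scaled dot-product attention, softmax(QKᵀ/√d_head + mask)·V. This is a simplified sketch, not the library code. `SketchSelfAttention` is a hypothetical name, the sizes are toy values, and head masking, cross-attention and caching are left out.

The additive mask follows the common approach of turning the tokenizer's 1/0 `attention_mask` into 0 / a very large negative number. Padded keys therefore get zero probability after the softmax.

```python
import math

import torch
from torch import nn


class SketchSelfAttention(nn.Module):
    """Hypothetical, simplified version of the computation in RobertaSelfAttention."""

    def __init__(self, hidden_size=64, num_attention_heads=4, dropout=0.1):
        super().__init__()
        assert hidden_size % num_attention_heads == 0
        self.num_attention_heads = num_attention_heads
        self.attention_head_size = hidden_size // num_attention_heads
        self.all_head_size = hidden_size
        self.query = nn.Linear(hidden_size, self.all_head_size)
        self.key = nn.Linear(hidden_size, self.all_head_size)
        self.value = nn.Linear(hidden_size, self.all_head_size)
        self.dropout = nn.Dropout(dropout)

    def split_heads(self, x):
        # (batch, seq, all_head_size) -> (batch, heads, seq, head_size)
        b, s, _ = x.shape
        return x.view(b, s, self.num_attention_heads, self.attention_head_size).permute(0, 2, 1, 3)

    def forward(self, hidden_states, attention_mask=None):
        q = self.split_heads(self.query(hidden_states))
        k = self.split_heads(self.key(hidden_states))
        v = self.split_heads(self.value(hidden_states))
        # Scaled dot-product scores: (batch, heads, seq, seq)
        scores = q @ k.transpose(-1, -2) / math.sqrt(self.attention_head_size)
        if attention_mask is not None:
            # (batch, seq) of 1/0 -> additive (batch, 1, 1, seq) mask, broadcast over heads and queries.
            keep = attention_mask[:, None, None, :].to(scores.dtype)
            scores = scores + (1.0 - keep) * torch.finfo(scores.dtype).min
        probs = self.dropout(scores.softmax(dim=-1))
        context = probs @ v  # (batch, heads, seq, head_size)
        b, _, s, _ = context.shape
        context = context.permute(0, 2, 1, 3).reshape(b, s, self.all_head_size)
        return context, probs


attn = SketchSelfAttention().eval()
x = torch.randn(2, 5, 64)
mask = torch.tensor([[1, 1, 1, 1, 1], [1, 1, 1, 0, 0]])  # second sequence is padded
context, probs = attn(x, mask)
print(context.shape, probs.shape)  # torch.Size([2, 5, 64]) torch.Size([2, 4, 5, 5])
```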

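C. The encoder's output is a tuple of length 1, holding only the last hidden state because neither all hidden states nor attentions were requested. So `sequence_output = encoder_outputs[0]` has shape (batch_size, sequence_length, hidden_size), with hidden_size = 768.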

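D. `RobertaPooler`: here `pooled_output` is `None`, so `RobertaPooler` is not used. This is because `RobertaForMaskedLM` builds its backbone as `RobertaModel(config, add_pooling_layer=False)`. It is not because the loaded model is CodeBERT, whose start token is `<s>` rather than BERT's `[CLS]`. If you load a standalone `RobertaModel` instead, which keeps the pooler by default, you get a `pooled_output` of shape (batch_size, hidden_size).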

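```python
from transformers import RobertaModel, RobertaTokenizer

model = RobertaModel.from_pretrained('roberta-base')  # add_pooling_layer defaults to True
tokenizer = RobertaTokenizer.from_pretrained('roberta-base')
# RoBERTa's mask token is <mask>, not BERT's [MASK]
input_ids = tokenizer("The <mask> on top allows for less material higher up the pyramid.", return_tensors='pt')['input_ids']
outputs = model(input_ids)  # a ModelOutput; tuple-unpacking it directly would yield its string keys
vector1, pooler1 = outputs.last_hidden_state, outputs.pooler_output

print('pooler1:', pooler1)  # shape: 1 * hidden_size (only one sentence here)
# vector1: batch_size * sequence_length * hidden_size; the slice keeps only the <s> position
print('vector1[:,0:1,:]:', vector1[:, 0:1, :])
```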
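The pooler itself is small. It takes the hidden state at the first position (`<s>`), applies a dense layer, then `tanh`. This is why `pooler1` and `vector1[:, 0:1, :]` in the snippet above print different numbers even though both come from the `<s>` position. The sketch below assumes that structure. `SketchPooler` is a hypothetical name and the sizes are toy values.

```python
import torch
from torch import nn


class SketchPooler(nn.Module):
    """Hypothetical re-creation of RobertaPooler: first-token vector -> dense -> tanh."""

    def __init__(self, hidden_size=64):
        super().__init__()
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.activation = nn.Tanh()

    def forward(self, hidden_states):
        first_token_tensor = hidden_states[:, 0]  # the <s> position, shape (batch, hidden)
        return self.activation(self.dense(first_token_tensor))


sequence_output = torch.randn(3, 9, 64)
pooled = SketchPooler()(sequence_output)
print(pooled.shape)  # torch.Size([3, 64])
```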

E. Back in `RobertaForMaskedLM` from the beginning, `sequence_output` still has shape (batch_size, sequence_length, hidden_size). It is then passed to `self.lm_head()`.

F. `self.lm_head` is a `RobertaLMHead`. At its final step, `x = self.decoder(x)` has shape (batch_size, sequence_length, vocab_size).
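The LM head maps each hidden vector back to vocabulary scores. Here is a minimal sketch assuming the usual sequence of dense, GELU, LayerNorm, then a decoder to `vocab_size`. It also assumes the default `tie_word_embeddings=True`, so the decoder shares its weight matrix with the word embeddings.

`SketchLMHead` is a hypothetical name. It uses the decoder's own bias instead of the library's separately registered bias parameter, and the sizes are toy values.

```python
import torch
import torch.nn.functional as F
from torch import nn


class SketchLMHead(nn.Module):
    """Hypothetical re-creation of RobertaLMHead, decoder weight tied to the word embeddings."""

    def __init__(self, word_embeddings: nn.Embedding, layer_norm_eps=1e-5):
        super().__init__()
        vocab_size, hidden_size = word_embeddings.weight.shape
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.layer_norm = nn.LayerNorm(hidden_size, eps=layer_norm_eps)
        self.decoder = nn.Linear(hidden_size, vocab_size)
        self.decoder.weight = word_embeddings.weight  # weight tying: same Parameter object

    def forward(self, features):
        x = F.gelu(self.dense(features))
        x = self.layer_norm(x)
        return self.decoder(x)  # (batch, seq, vocab_size)


emb = nn.Embedding(100, 64)
head = SketchLMHead(emb)
logits = head(torch.randn(2, 7, 64))
print(logits.shape)  # torch.Size([2, 7, 100])
```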

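G. Surprisingly, the output of the last step is `prediction_scores`. This is not because my input contains no `<mask>`. `RobertaForMaskedLM` always returns vocabulary scores for every position, masked or not, and it computes a loss only when `labels` are passed.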

```python
from transformers import RobertaForMaskedLM, RobertaTokenizer

tokenizer = RobertaTokenizer.from_pretrained('microsoft/codebert-base-mlm')
model = RobertaForMaskedLM.from_pretrained('microsoft/codebert-base-mlm')
input_ids = tokenizer(['Language model is what I need.', 'I love China'], padding=True, return_tensors='pt')
out = model(**input_ids)  # this is the prediction shown in the figure above
```
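The prediction scores (exposed as `out.logits` when `return_dict=True`) can be turned into predicted tokens or into a training loss. The sketch below runs offline because it uses toy random logits instead of a real model. The `-100` "ignore" convention matches how `RobertaForMaskedLM` calls `CrossEntropyLoss`, but the masked positions and token ids here are made up.

With the real model, `tokenizer.decode(predicted_ids[0])` would show the predicted tokens for the first sentence.

```python
import torch
import torch.nn.functional as F

# Toy logits standing in for out.logits: (batch=2, seq=4, vocab=10)
torch.manual_seed(0)
logits = torch.randn(2, 4, 10)

# Predicted token id at every position: argmax over the vocabulary axis.
predicted_ids = logits.argmax(dim=-1)  # (2, 4)

# MLM loss: only positions with a real label count; -100 marks "ignore".
labels = torch.full((2, 4), -100, dtype=torch.long)
labels[0, 1] = 3  # pretend position 1 of sentence 0 was masked and the true token is 3
labels[1, 2] = 7
loss = F.cross_entropy(logits.view(-1, 10), labels.view(-1), ignore_index=-100)

# Same value by hand: mean negative log-likelihood over the two labelled positions.
manual = -(logits[0, 1].log_softmax(-1)[3] + logits[1, 2].log_softmax(-1)[7]) / 2
print(predicted_ids.shape, torch.allclose(loss, manual))  # torch.Size([2, 4]) True
```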

Here is a screenshot of the output:

Finally, here is a diagram of the BERT network architecture: