
NLP-Task1: Text Classification Based on Machine Learning


Implement text classification with logistic/softmax regression

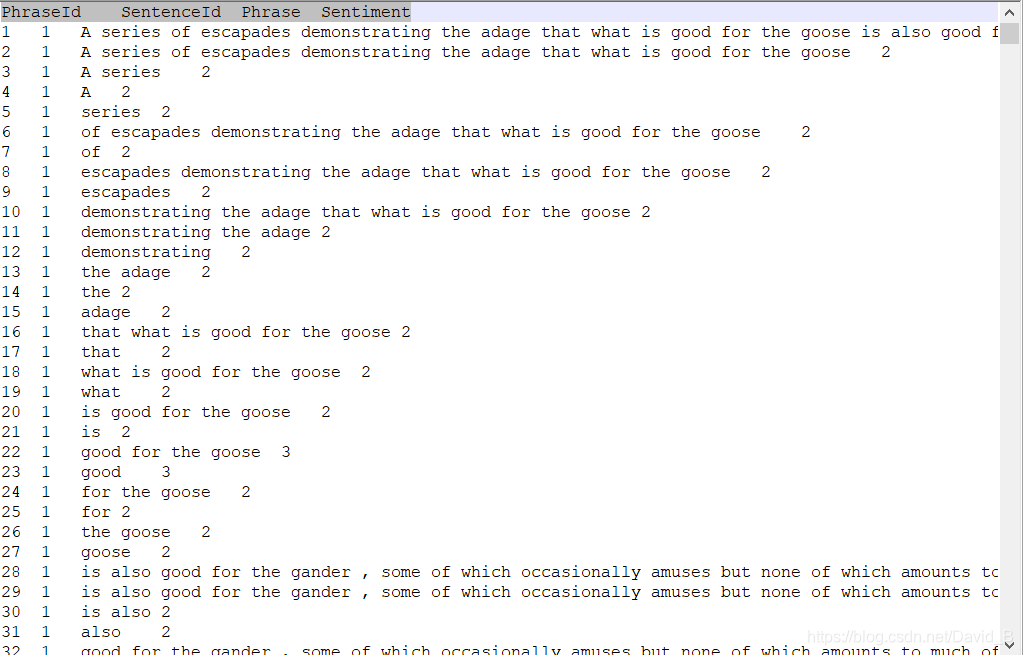

Dataset: Classify the sentiment of sentences from the Rotten Tomatoes dataset

The cloud-drive download link is given at the end of the article.

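- Key concepts:
  - Text feature representation: Bag-of-Words, N-gram
  - Classifier: logistic/softmax regression, loss function, (stochastic) gradient descent, feature selection
  - Dataset: splitting into training/validation/test sets
- Experiments:
  - Analyze how different features, loss functions, and learning rates affect the final classification performance
  - shuffle, batch, mini-batch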

Reference: NLP-Beginner Task 1: Text Classification Based on Machine Learning (very detailed!!)

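1. Overview

1.1 Main content

Classify the sentiment of text with softmax regression.

1.2 Dataset

The training set contains 156,060 English review phrases, each labeled with one of five sentiment classes (0-4).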
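The code in section 4.2 reads the training file as tab-separated rows and takes the phrase from column index 2 and the 0-4 label from column index 3. The sketch below shows that parsing step on a tiny in-memory sample. The header names `PhraseId`, `SentenceId`, `Phrase`, `Sentiment`, the two example rows, the helper `load_rows`, and the choice to disable quote handling are all assumptions for illustration, not part of the original code.

```python
import csv
import io

# Hypothetical excerpt; the column layout (PhraseId, SentenceId, Phrase, Sentiment)
# is assumed from how main.py/feature.py index each row (row[2], row[3]).
SAMPLE_TSV = (
    "PhraseId\tSentenceId\tPhrase\tSentiment\n"
    "1\t1\ta gorgeous , witty film\t4\n"
    "2\t1\ta dull , lifeless remake\t0\n"
)


def load_rows(f):
    """Return (phrase, label) pairs from a tab-separated file object, skipping the header."""
    # Assumption: phrases may contain double quotes, so quote handling is disabled as a precaution.
    reader = csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
    rows = list(reader)[1:]  # drop the header row, like data = temp[1:] in main.py
    return [(row[2], int(row[3])) for row in rows]


pairs = load_rows(io.StringIO(SAMPLE_TSV))
print(pairs)  # [('a gorgeous , witty film', 4), ('a dull , lifeless remake', 0)]
```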

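2. Feature extraction

2.1 Bag-of-words features

The bag-of-words model treats every word as independent of the others.

For a given sentence, each vocabulary word that appears in the sentence (case-insensitive) sets the corresponding position of the vector to 1, and every other position is 0. This turns a sentence into a 0-1 vector. The conversion is very simple, but it ignores word order.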

- Vocabulary: i, you, him, her, love, hate, but

- Sentence: I love you

- Vector: [1,1,0,0,1,0,0]
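A minimal sketch of the 0-1 encoding above, assuming lowercase whitespace tokenization. The helper `bag_of_words` is hypothetical and only for illustration; the article's own implementation is the `Bag` class in section 4.2.2.

```python
def bag_of_words(sentence, vocab):
    """Return a 0-1 vector: 1 if the vocabulary word occurs in the sentence (case-insensitive)."""
    words = set(sentence.lower().split())
    return [1 if w in words else 0 for w in vocab]


vocab = ["i", "you", "him", "her", "love", "hate", "but"]
print(bag_of_words("I love you", vocab))  # [1, 1, 0, 0, 1, 0, 0]
print(bag_of_words("you love I", vocab))  # [1, 1, 0, 0, 1, 0, 0]
```

Reordering the words gives the same vector, which is exactly the loss of word order mentioned above.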

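2.2 N-gram features

N-gram features take word order into account. For example, with N=2, "I love you" is no longer seen as the three words I, love, you, but as the two phrases "I love" and "love you".

In practice, N-gram features are used together with the 1-, 2-, …, (N-1)-gram features. Because the number of distinct features grows quickly with N, a large N makes training on large inputs extremely slow.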

- Features (N = 1 and 2): i, you, him, her, love, hate, but, i-love, i-hate, love-you, hate-you, you-but, but-love, but-hate, love-him, hate-him

- Sentence: I love you

- Vector: [1,1,0,0,1,0,0,1,0,1,0,0,0,0,0,0]
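The following sketch builds the combined 1-gram and 2-gram vector for the example above. It assumes lowercase whitespace tokenization and joins bigram words with `-` to match the feature names listed (the article's `Gram` class joins them with `_` instead); `ngram_features` and `ngram_vector` are hypothetical helpers.

```python
def ngram_features(sentence, n):
    """Collect all 1-gram ... n-gram features of a sentence, joined with '-'."""
    words = sentence.lower().split()
    feats = set()
    for d in range(1, n + 1):
        for i in range(len(words) - d + 1):
            feats.add("-".join(words[i:i + d]))
    return feats


def ngram_vector(sentence, features, n=2):
    """0-1 vector over a fixed feature list."""
    present = ngram_features(sentence, n)
    return [1 if f in present else 0 for f in features]


features = ["i", "you", "him", "her", "love", "hate", "but",
            "i-love", "i-hate", "love-you", "hate-you", "you-but",
            "but-love", "but-hate", "love-him", "hate-him"]
print(ngram_vector("I love you", features))
# [1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0]
```

Unlike bag-of-words, "you love I" now yields a different vector, because its bigrams (you-love, love-i) differ.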

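3. Softmax Regression

3.1 Introduction to softmax

Given an input x, softmax outputs a vector y whose length equals the number of classes. The entries of y sum to 1, and each entry is the probability of the corresponding class.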

- With five classes 0-4, $y=[0,0,0.7,0.25,0.05]^T$ means that class 2 has probability 0.7, class 3 has probability 0.25, and class 4 has probability 0.05.

Formula:

$$p(y=c\mid x)=\mathrm{softmax}(w_c^Tx)=\frac{\exp(w_c^Tx)}{\sum_{c'=1}^{C}\exp(w_{c'}^Tx)}$$

where $w_c$ is the weight vector of class $c$. Writing $W=[w_1,w_2,\dots,w_C]$ for the weight matrix and $\mathbf{1}_C$ for the all-ones vector of length $C$, the formula can be expressed as a column vector:

$$\hat{y}=f(x)=\mathrm{softmax}(W^Tx)=\frac{\exp(W^Tx)}{\mathbf{1}_C^T\exp(W^Tx)}$$

Each element of $\hat{y}$ is the probability of the corresponding class. What remains is to solve for the parameter matrix $W$.
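The vector form of the softmax can be computed directly with NumPy. This sketch assumes `W` is a d × C matrix and `x` a length-d vector. Subtracting the maximum score before exponentiating is the same overflow guard the article's `softmax_calculation` uses, and it does not change the result because the common factor $e^{-\max}$ cancels between numerator and denominator. The toy sizes and random `W` are arbitrary.

```python
import numpy as np


def softmax(W, x):
    """y_hat = exp(W^T x) / (1_C^T exp(W^T x)), with a max-shift for numerical stability."""
    z = W.T @ x        # one score w_c^T x per class
    z = z - z.max()    # shifting every score by the same constant leaves the softmax unchanged
    e = np.exp(z)
    return e / e.sum()


rng = np.random.default_rng(0)
W = rng.standard_normal((7, 5))                    # 7 features, C = 5 classes (toy sizes)
x = np.array([1, 1, 0, 0, 1, 0, 0], dtype=float)   # the "I love you" bag-of-words vector
y_hat = softmax(W, x)
print(y_hat, y_hat.sum())                          # five probabilities; the sum is 1 up to rounding
```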

3.2 Loss function

A loss function measures how good the model is.

Here we use the cross-entropy loss. For each sample $n$, the loss is:

$$L(f_W(x^{(n)}),y^{(n)})=-\sum_{c=1}^{C}y_c^{(n)}\log\hat{y}_c^{(n)}=-(y^{(n)})^T\log\hat{y}^{(n)}$$

$$=-(y^{(n)})^T\log\left(\frac{\exp(W^Tx^{(n)})}{\mathbf{1}_C^T\exp(W^Tx^{(n)})}\right)$$

where $y^{(n)}=\big(I(c^{(n)}=1),I(c^{(n)}=2),\dots,I(c^{(n)}=C)\big)^T$ is a one-hot vector ($c^{(n)}$ is the true class of sample $n$ and $I(\cdot)$ is the indicator function): exactly one element is 1 and all the others are 0.

For the whole sample set, the total loss is the average of the per-sample losses:

$$L(W)=L(f_W(x),y)=\frac{1}{N}\sum_{n=1}^{N}L(f_W(x^{(n)}),y^{(n)})$$

The optimal parameter matrix $W$ is the one that minimizes this loss.
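The average cross-entropy $L(W)$ can be evaluated for a whole data set at once by stacking the samples as rows. This is a sketch under the assumptions that `X` is an N × d feature matrix and `Y` an N × C one-hot label matrix; it computes $\log\hat{y}$ through a log-softmax rather than taking the log of the probabilities, which avoids `log(0)` when a probability underflows. The helper name `cross_entropy` and the toy data are illustrative.

```python
import numpy as np


def cross_entropy(W, X, Y):
    """Average cross-entropy L(W) = -(1/N) sum_n (y^(n))^T log y_hat^(n).

    X: N x d feature matrix (one sample per row); Y: N x C one-hot label matrix.
    """
    Z = X @ W                                                    # row n holds (W^T x^(n))^T
    Z = Z - Z.max(axis=1, keepdims=True)                         # stabilize each row
    log_y_hat = Z - np.log(np.exp(Z).sum(axis=1, keepdims=True))  # row-wise log-softmax
    return -np.mean(np.sum(Y * log_y_hat, axis=1))


X = np.array([[1.0, 0.0], [0.0, 1.0]])
Y = np.array([[1, 0, 0], [0, 0, 1]])            # true classes 0 and 2 (C = 3)
print(cross_entropy(np.zeros((2, 3)), X, Y))    # uniform prediction, so the loss is log(3)
```

With $W=0$ every class gets probability $1/C$, so the loss equals $\log C$; a $W$ that scores the true classes higher drives the loss toward 0.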

3.3 Gradient descent

The gradient of the loss with respect to $W$ is:

$$\frac{\partial L(W)}{\partial W}=-\frac{1}{N}\sum_{n=1}^{N}x^{(n)}\left(y^{(n)}-\hat{y}^{(n)}_W\right)^T$$
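One way to check the gradient formula is to compare it with a finite-difference estimate of the loss. The sketch below writes the sum over samples as the matrix product $-\frac{1}{N}X^T(Y-\hat{Y})$, where row $n$ of $X$ is $x^{(n)T}$ and row $n$ of $\hat{Y}$ is $\hat{y}^{(n)T}$; the random toy data and the helper names are assumptions for illustration.

```python
import numpy as np


def softmax_rows(Z):
    """Row-wise softmax with a max-shift for stability."""
    Z = Z - Z.max(axis=1, keepdims=True)
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)


def loss(W, X, Y):
    return -np.mean(np.sum(Y * np.log(softmax_rows(X @ W)), axis=1))


def grad(W, X, Y):
    """dL/dW = -(1/N) sum_n x^(n) (y^(n) - y_hat^(n))^T, written as a matrix product."""
    N = X.shape[0]
    return -X.T @ (Y - softmax_rows(X @ W)) / N


rng = np.random.default_rng(1)
X = rng.integers(0, 2, size=(6, 4)).astype(float)   # toy 0-1 features
Y = np.eye(3)[rng.integers(0, 3, size=6)]           # toy one-hot labels
W = rng.standard_normal((4, 3))

# Central finite differences, one entry of W at a time
eps = 1e-6
numeric = np.zeros_like(W)
for i in range(W.shape[0]):
    for j in range(W.shape[1]):
        E = np.zeros_like(W)
        E[i, j] = eps
        numeric[i, j] = (loss(W + E, X, Y) - loss(W - E, X, Y)) / (2 * eps)

print(np.max(np.abs(numeric - grad(W, X, Y))))      # should be close to 0
```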

1. Batch gradient descent update, using all samples at every step ($\alpha$ is the learning rate):

$$W_{t+1}\gets W_t-\alpha\frac{\partial L(W_t)}{\partial W_t}$$

2. Stochastic gradient descent (Shuffle) update, using one randomly chosen sample at each step:

$$\Delta W=-x^{(n)}\left(y^{(n)}-\hat{y}^{(n)}_W\right)^T$$

$$W_{t+1}\gets W_t-\alpha\,\Delta W_t$$

3. Mini-batch gradient descent update, using a random set $S$ of $k$ samples at each step:

$$\Delta W=-\frac{1}{k}\sum_{n\in S}x^{(n)}\left(y^{(n)}-\hat{y}^{(n)}_W\right)^T$$

$$W_{t+1}\gets W_t-\alpha\,\Delta W_t$$
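The three update rules differ only in which samples enter $\Delta W$, so they can share one loop. This vectorized sketch is not the article's `Softmax.regression` (which processes samples one at a time); it assumes one-hot labels `Y`, a zero-initialized `W`, and samples drawn with replacement for SGD and mini-batch, mirroring the `random.randint` draws in the article's code. `train` and the toy data are hypothetical.

```python
import numpy as np


def softmax_rows(Z):
    """Row-wise softmax with a max-shift for stability."""
    Z = Z - Z.max(axis=1, keepdims=True)
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)


def train(X, Y, alpha, steps, batch_size, seed=0):
    """Gradient descent on the softmax cross-entropy loss.

    batch_size = N gives batch GD, batch_size = 1 gives SGD ("shuffle"),
    anything in between gives mini-batch GD.
    """
    rng = np.random.default_rng(seed)
    N, d = X.shape
    W = np.zeros((d, Y.shape[1]))
    for _ in range(steps):
        if batch_size == N:
            S = np.arange(N)                           # every sample
        else:
            S = rng.integers(0, N, size=batch_size)    # k random samples (with replacement)
        Xs, Ys = X[S], Y[S]
        delta_W = -Xs.T @ (Ys - softmax_rows(Xs @ W)) / len(S)   # ΔW as defined above
        W = W - alpha * delta_W                                   # W_{t+1} <- W_t - α ΔW_t
    return W


X = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])  # toy 0-1 features
Y = np.eye(2)[[0, 0, 1, 1]]                                      # toy one-hot labels
for k in (4, 1, 2):   # batch (k = N), SGD (k = 1), mini-batch (k = 2)
    W = train(X, Y, alpha=1.0, steps=200, batch_size=k)
    print(k, np.argmax(X @ W, axis=1))
```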

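4. Code implementation

4.1 Experimental setup

- Number of samples: 1000

- Training set : test set = 7:3

- Learning rate: $10^{-3}$ to $10^{4}$

- Mini-batch size: 1% of the samples

(When comparing the three gradient-descent methods, the number of gradient computations must be kept the same for each method.)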
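The equal-budget rule above is simple arithmetic: SGD uses 1 per-sample gradient per update, mini-batch uses `mini_size`, and batch gradient descent uses one per training sample. A sketch with the hypothetical helper `iteration_budget` and an assumed training-set size of 700 (about 70% of the 1,000 samples under the 7:3 split; the exact size varies with the random split):

```python
def iteration_budget(total_grads, n_train, mini_size):
    """Updates per strategy so that each computes total_grads per-sample gradients."""
    return {
        "shuffle": total_grads,             # 1 sample per update
        "mini": total_grads // mini_size,   # mini_size samples per update
        "batch": total_grads // n_train,    # every training sample per update
    }


# 10,000 gradient computations, mini-batch of 10 (1% of 1,000), ~700 training samples
print(iteration_budget(10000, 700, 10))  # {'shuffle': 10000, 'mini': 1000, 'batch': 14}
```

Note that `comparison_plot.py` in section 4.2.4 divides by `bag.max_item` (1,000) rather than by the training-set size.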

4.2 Code

4.2.1 main.py

```python
import numpy
import csv
import random
from feature import Bag, Gram
from comparison_plot import alpha_gradient_plot

# Read the data
with open('train.tsv') as f:
    tsvreader = csv.reader(f, delimiter='\t')
    temp = list(tsvreader)

# Initialization
data = temp[1:]  # drop the header row
max_item = 1000
random.seed(2021)
numpy.random.seed(2021)

# Feature extraction
bag = Bag(data, max_item)
bag.get_words()
bag.get_matrix()

gram = Gram(data, dimension=2, max_item=max_item)
gram.get_words()
gram.get_matrix()

# Plotting
alpha_gradient_plot(bag, gram, 10000, 10)   # 10,000 gradient computations
alpha_gradient_plot(bag, gram, 100000, 10)  # 100,000 gradient computations
```


4.2.2 Feature extraction: feature.py

```python
import numpy
import random


def data_split(data, test_rate=0.3, max_item=1000):
    """Randomly split the first max_item samples into a training set and a test set."""
    train = list()
    test = list()
    # Only the first max_item samples are used, so every sentence here is also
    # covered by the vocabulary built from my_data[:max_item]
    for datum in data[:max_item]:
        if random.random() > test_rate:
            train.append(datum)
        else:
            test.append(datum)
    return train, test


class Bag:
    """Bag of words"""

    def __init__(self, my_data, max_item=1000):
        self.data = my_data[:max_item]
        self.max_item = max_item
        self.dict_words = dict()  # maps each word to its index
        self.len = 0  # number of distinct words
        self.train, self.test = data_split(my_data, test_rate=0.3, max_item=max_item)
        self.train_y = [int(term[3]) for term in self.train]  # training-set labels
        self.test_y = [int(term[3]) for term in self.test]  # test-set labels
        self.train_matrix = None  # 0-1 matrix of the training set (one sentence per row)
        self.test_matrix = None  # 0-1 matrix of the test set (one sentence per row)

    def get_words(self):
        for term in self.data:
            s = term[2]
            s = s.upper()  # convert everything to upper (or lower) case, otherwise "i" and "I" become two different words
            words = s.split()
            for word in words:  # look at the words one by one
                if word not in self.dict_words:
                    self.dict_words[word] = len(self.dict_words)
        self.len = len(self.dict_words)
        self.test_matrix = numpy.zeros((len(self.test), self.len))  # initialize the 0-1 matrix
        self.train_matrix = numpy.zeros((len(self.train), self.len))  # initialize the 0-1 matrix

    def get_matrix(self):
        for i in range(len(self.train)):  # training-set matrix
            s = self.train[i][2]
            words = s.split()
            for word in words:
                word = word.upper()
                self.train_matrix[i][self.dict_words[word]] = 1
        for i in range(len(self.test)):  # test-set matrix
            s = self.test[i][2]
            words = s.split()
            for word in words:
                word = word.upper()
                self.test_matrix[i][self.dict_words[word]] = 1


class Gram:
    """N-gram"""

    def __init__(self, my_data, dimension=2, max_item=1000):
        self.data = my_data[:max_item]
        self.max_item = max_item
        self.dict_words = dict()  # maps each feature to its index
        self.len = 0  # number of distinct features
        self.dimension = dimension  # use 1-gram up to dimension-gram features
        self.train, self.test = data_split(my_data, test_rate=0.3, max_item=max_item)
        self.train_y = [int(term[3]) for term in self.train]  # training-set labels
        self.test_y = [int(term[3]) for term in self.test]  # test-set labels
        self.train_matrix = None  # 0-1 matrix of the training set (one sentence per row)
        self.test_matrix = None  # 0-1 matrix of the test set (one sentence per row)

    def get_words(self):
        for d in range(1, self.dimension + 1):  # extract 1-gram, 2-gram, ..., N-gram features
            for term in self.data:
                s = term[2]
                s = s.upper()  # convert everything to upper (or lower) case, otherwise "i" and "I" become two different words
                words = s.split()
                for i in range(len(words) - d + 1):  # slide over the sentence one feature at a time
                    temp = words[i:i + d]
                    temp = "_".join(temp)  # form a d-gram feature
                    if temp not in self.dict_words:
                        self.dict_words[temp] = len(self.dict_words)
        self.len = len(self.dict_words)
        self.test_matrix = numpy.zeros((len(self.test), self.len))  # initialize the test-set matrix
        self.train_matrix = numpy.zeros((len(self.train), self.len))  # initialize the training-set matrix

    def get_matrix(self):
        for d in range(1, self.dimension + 1):
            for i in range(len(self.train)):  # training-set matrix
                s = self.train[i][2]
                s = s.upper()
                words = s.split()
                for j in range(len(words) - d + 1):
                    temp = words[j:j + d]
                    temp = "_".join(temp)
                    self.train_matrix[i][self.dict_words[temp]] = 1
            for i in range(len(self.test)):  # test-set matrix
                s = self.test[i][2]
                s = s.upper()
                words = s.split()
                for j in range(len(words) - d + 1):
                    temp = words[j:j + d]
                    temp = "_".join(temp)
                    self.test_matrix[i][self.dict_words[temp]] = 1
```


4.2.3 Softmax regression: mysoftmax_regression.py

```python
import numpy
import random


class Softmax:
    """Softmax regression"""

    def __init__(self, sample, typenum, feature):
        self.sample = sample  # number of training samples
        self.typenum = typenum  # number of (sentiment) classes
        self.feature = feature  # length of the 0-1 feature vector
        self.W = numpy.random.randn(feature, typenum)  # initialize the parameter matrix W

    def softmax_calculation(self, x):
        """x is a vector; compute its softmax."""
        exp = numpy.exp(x - numpy.max(x))  # subtract the maximum first to avoid overflow in exp
        return exp / exp.sum()

    def softmax_all(self, wtx):
        """wtx is a matrix (many vectors stacked as rows); compute the softmax of each row."""
        wtx -= numpy.max(wtx, axis=1, keepdims=True)  # subtract each row's maximum to avoid overflow
        wtx = numpy.exp(wtx)
        wtx /= numpy.sum(wtx, axis=1, keepdims=True)
        return wtx

    def change_y(self, y):
        """Convert a (sentiment) class into a one-hot column vector."""
        ans = numpy.array([0] * self.typenum)
        ans[y] = 1
        return ans.reshape(-1, 1)

    def prediction(self, X):
        """Given the 0-1 matrix X, compute y_hat (probabilities) for each sentence and return the most probable class."""
        prob = self.softmax_all(X.dot(self.W))
        return prob.argmax(axis=1)

    def correct_rate(self, train, train_y, test, test_y):
        """Compute the accuracy on the training set and the test set."""
        # training set
        n_train = len(train)
        pred_train = self.prediction(train)
        train_correct = sum([train_y[i] == pred_train[i] for i in range(n_train)]) / n_train
        # test set
        n_test = len(test)
        pred_test = self.prediction(test)
        test_correct = sum([test_y[i] == pred_test[i] for i in range(n_test)]) / n_test
        print(train_correct, test_correct)
        return train_correct, test_correct

    def regression(self, X, y, alpha, times, strategy="mini", mini_size=100):
        """Softmax regression.

        increment accumulates x (y - y_hat)^T = -ΔW, so W += alpha * increment is W <- W - alpha * ΔW.
        """
        if self.sample != len(X) or self.sample != len(y):
            raise Exception("Sample size does not match!")  # the number of samples does not match
        if strategy == "mini":  # mini-batch
            for i in range(times):
                increment = numpy.zeros((self.feature, self.typenum))  # gradient starts as a zero matrix
                for j in range(mini_size):  # draw mini_size samples at random
                    k = random.randint(0, self.sample - 1)
                    yhat = self.softmax_calculation(self.W.T.dot(X[k].reshape(-1, 1)))
                    increment += X[k].reshape(-1, 1).dot((self.change_y(y[k]) - yhat).T)  # accumulate the gradient
                # print(i * mini_size)
                self.W += alpha / mini_size * increment  # update the parameters
        elif strategy == "shuffle":  # stochastic gradient descent
            for i in range(times):
                k = random.randint(0, self.sample - 1)  # draw one sample each time
                yhat = self.softmax_calculation(self.W.T.dot(X[k].reshape(-1, 1)))
                increment = X[k].reshape(-1, 1).dot((self.change_y(y[k]) - yhat).T)  # compute the gradient
                self.W += alpha * increment  # update the parameters
                # if not (i % 10000):
                #     print(i)
        elif strategy == "batch":  # full-batch gradient descent
            for i in range(times):
                increment = numpy.zeros((self.feature, self.typenum))  # gradient starts as a zero matrix
                for j in range(self.sample):  # every sample contributes
                    yhat = self.softmax_calculation(self.W.T.dot(X[j].reshape(-1, 1)))
                    increment += X[j].reshape(-1, 1).dot((self.change_y(y[j]) - yhat).T)  # accumulate the gradient
                # print(i)
                self.W += alpha / self.sample * increment  # update the parameters
        else:
            raise Exception("Unknown strategy")
```


4.2.4 Result analysis and visualization: comparison_plot.py

```python
import matplotlib.pyplot
from mysoftmax_regression import Softmax


def alpha_gradient_plot(bag, gram, total_times, mini_size):
    """Plot classification accuracy versus learning rate for the three gradient-descent strategies."""
    alphas = [0.001, 0.01, 0.1, 1, 10, 100, 1000, 10000]

    # Bag of words
    # Shuffle
    shuffle_train = list()
    shuffle_test = list()
    for alpha in alphas:
        soft = Softmax(len(bag.train), 5, bag.len)
        soft.regression(bag.train_matrix, bag.train_y, alpha, total_times, "shuffle")
        r_train, r_test = soft.correct_rate(bag.train_matrix, bag.train_y, bag.test_matrix, bag.test_y)
        shuffle_train.append(r_train)
        shuffle_test.append(r_test)

    # Batch
    batch_train = list()
    batch_test = list()
    for alpha in alphas:
        soft = Softmax(len(bag.train), 5, bag.len)
        soft.regression(bag.train_matrix, bag.train_y, alpha, int(total_times / bag.max_item), "batch")
        r_train, r_test = soft.correct_rate(bag.train_matrix, bag.train_y, bag.test_matrix, bag.test_y)
        batch_train.append(r_train)
        batch_test.append(r_test)

    # Mini-batch
    mini_train = list()
    mini_test = list()
    for alpha in alphas:
        soft = Softmax(len(bag.train), 5, bag.len)
        soft.regression(bag.train_matrix, bag.train_y, alpha, int(total_times / mini_size), "mini", mini_size)
        r_train, r_test = soft.correct_rate(bag.train_matrix, bag.train_y, bag.test_matrix, bag.test_y)
        mini_train.append(r_train)
        mini_test.append(r_test)

    matplotlib.pyplot.subplot(2, 2, 1)
    matplotlib.pyplot.semilogx(alphas, shuffle_train, 'r--', label='shuffle')
    matplotlib.pyplot.semilogx(alphas, batch_train, 'g--', label='batch')
    matplotlib.pyplot.semilogx(alphas, mini_train, 'b--', label='mini-batch')
    matplotlib.pyplot.semilogx(alphas, shuffle_train, 'ro-', alphas, batch_train, 'g+-', alphas, mini_train, 'b^-')
    matplotlib.pyplot.legend()
    matplotlib.pyplot.title("Bag of words -- Training Set")
    matplotlib.pyplot.xlabel("Learning Rate")
    matplotlib.pyplot.ylabel("Accuracy")
    matplotlib.pyplot.ylim(0, 1)

    matplotlib.pyplot.subplot(2, 2, 2)
    matplotlib.pyplot.semilogx(alphas, shuffle_test, 'r--', label='shuffle')
    matplotlib.pyplot.semilogx(alphas, batch_test, 'g--', label='batch')
    matplotlib.pyplot.semilogx(alphas, mini_test, 'b--', label='mini-batch')
    matplotlib.pyplot.semilogx(alphas, shuffle_test, 'ro-', alphas, batch_test, 'g+-', alphas, mini_test, 'b^-')
    matplotlib.pyplot.legend()
    matplotlib.pyplot.title("Bag of words -- Test Set")
    matplotlib.pyplot.xlabel("Learning Rate")
    matplotlib.pyplot.ylabel("Accuracy")
    matplotlib.pyplot.ylim(0, 1)

    # N-gram
    # Shuffle
    shuffle_train = list()
    shuffle_test = list()
    for alpha in alphas:
        soft = Softmax(len(gram.train), 5, gram.len)
        soft.regression(gram.train_matrix, gram.train_y, alpha, total_times, "shuffle")
        r_train, r_test = soft.correct_rate(gram.train_matrix, gram.train_y, gram.test_matrix, gram.test_y)
        shuffle_train.append(r_train)
        shuffle_test.append(r_test)

    # Batch
    batch_train = list()
    batch_test = list()
    for alpha in alphas:
        soft = Softmax(len(gram.train), 5, gram.len)
        soft.regression(gram.train_matrix, gram.train_y, alpha, int(total_times / gram.max_item), "batch")
        r_train, r_test = soft.correct_rate(gram.train_matrix, gram.train_y, gram.test_matrix, gram.test_y)
        batch_train.append(r_train)
        batch_test.append(r_test)

    # Mini-batch
    mini_train = list()
    mini_test = list()
    for alpha in alphas:
        soft = Softmax(len(gram.train), 5, gram.len)
        soft.regression(gram.train_matrix, gram.train_y, alpha, int(total_times / mini_size), "mini", mini_size)
        r_train, r_test = soft.correct_rate(gram.train_matrix, gram.train_y, gram.test_matrix, gram.test_y)
        mini_train.append(r_train)
        mini_test.append(r_test)

    matplotlib.pyplot.subplot(2, 2, 3)
    matplotlib.pyplot.semilogx(alphas, shuffle_train, 'r--', label='shuffle')
    matplotlib.pyplot.semilogx(alphas, batch_train, 'g--', label='batch')
    matplotlib.pyplot.semilogx(alphas, mini_train, 'b--', label='mini-batch')
    matplotlib.pyplot.semilogx(alphas, shuffle_train, 'ro-', alphas, batch_train, 'g+-', alphas, mini_train, 'b^-')
    matplotlib.pyplot.legend()
    matplotlib.pyplot.title("N-gram -- Training Set")
    matplotlib.pyplot.xlabel("Learning Rate")
    matplotlib.pyplot.ylabel("Accuracy")
    matplotlib.pyplot.ylim(0, 1)

    matplotlib.pyplot.subplot(2, 2, 4)
    matplotlib.pyplot.semilogx(alphas, shuffle_test, 'r--', label='shuffle')
    matplotlib.pyplot.semilogx(alphas, batch_test, 'g--', label='batch')
    matplotlib.pyplot.semilogx(alphas, mini_test, 'b--', label='mini-batch')
    matplotlib.pyplot.semilogx(alphas, shuffle_test, 'ro-', alphas, batch_test, 'g+-', alphas, mini_test, 'b^-')
    matplotlib.pyplot.legend()
    matplotlib.pyplot.title("N-gram -- Test Set")
    matplotlib.pyplot.xlabel("Learning Rate")
    matplotlib.pyplot.ylabel("Accuracy")
    matplotlib.pyplot.ylim(0, 1)

    matplotlib.pyplot.tight_layout()
    matplotlib.pyplot.show()
```


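4.3 Results

4.3.1 Experiment 1

4.3.2 Experiment 2

The training-set accuracy reaches 100%, which indicates that the model overfits, while the best test-set accuracy stays around 55% in every setting.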

Dataset download

Since downloading directly from Kaggle requires a VPN, here is a cloud-drive link:

Dataset download (extraction code: cnex)