Full and Incremental Capture of SQL Server Data with Flink CDC


| TABLE_CATALOG | TABLE_SCHEMA | TABLE_NAME | TABLE_TYPE |
| --- | --- | --- | --- |
| test | dbo | user_info | BASE TABLE |
| test | dbo | systranschemas | BASE TABLE |
| test | cdc | change_tables | BASE TABLE |
| test | cdc | ddl_history | BASE TABLE |
| test | cdc | lsn_time_mapping | BASE TABLE |
| test | cdc | captured_columns | BASE TABLE |
| test | cdc | index_columns | BASE TABLE |
| test | dbo | orders | BASE TABLE |
| test | cdc | dbo_orders_CT | BASE TABLE |

#### 2. Implementation

##### 2.1 Main program for capturing SQL Server data with Flink-CDC

Add the dependency:

```xml
<dependency>
    <groupId>com.ververica</groupId>
    <artifactId>flink-connector-sqlserver-cdc</artifactId>
    <version>3.0.0</version>
</dependency>
```

Write the main function:

```java
// CDC connector imports; package names can differ between connector versions
import com.ververica.cdc.connectors.base.options.StartupOptions;
import com.ververica.cdc.connectors.sqlserver.SqlServerSource;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.Properties;

public class SqlServerCdcJob { // class name is illustrative

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Global parallelism
        env.setParallelism(1);
        // Processing-time semantics: disable periodic watermark generation
        env.getConfig().setAutoWatermarkInterval(0);
        // Trigger a checkpoint every 60 s
        env.enableCheckpointing(60000, CheckpointingMode.EXACTLY_ONCE);
        // Minimum pause between checkpoints
        env.getCheckpointConfig().setMinPauseBetweenCheckpoints(1000);
        // Checkpoint timeout
        env.getCheckpointConfig().setCheckpointTimeout(60000);
        // Allow only one checkpoint at a time
        // env.getCheckpointConfig().setMaxConcurrentCheckpoints(1);
        // Retain checkpoint data after the Flink job is cancelled
        // env.getCheckpointConfig().setExternalizedCheckpointCleanup(
        //         CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

        SourceFunction<String> sqlServerSource = SqlServerSource.<String>builder()
                .hostname("localhost")
                .port(1433)
                .username("SA")
                .password("")
                .database("test")
                .tableList("dbo.t_info")
                // initial(): take a full snapshot first, then read incremental changes
                .startupOptions(StartupOptions.initial())
                .debeziumProperties(getDebeziumProperties())
                .deserializer(new CustomerDeserializationSchemaSqlserver())
                .build();

        DataStreamSource<String> dataStreamSource =
                env.addSource(sqlServerSource, "_transaction_log_source");
        dataStreamSource.print().setParallelism(1);
        env.execute("sqlserver-cdc-test");
    }

    public static Properties getDebeziumProperties() {
        Properties properties = new Properties();
        // Register the custom converter under the name "sqlserverDebeziumConverter"
        properties.put("converters", "sqlserverDebeziumConverter");
        // Must be the fully qualified class name of the converter
        properties.put("sqlserverDebeziumConverter.type", "SqlserverDebeziumConverter");
        properties.put("sqlserverDebeziumConverter.database.type", "sqlserver");
        // Custom output formats (optional)
        properties.put("sqlserverDebeziumConverter.format.datetime", "yyyy-MM-dd HH:mm:ss");
        properties.put("sqlserverDebeziumConverter.format.date", "yyyy-MM-dd");
        properties.put("sqlserverDebeziumConverter.format.time", "HH:mm:ss");
        return properties;
    }
}
```
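Every converter property set in `getDebeziumProperties()` carries the converter name registered under `converters` as a prefix. Debezium removes that prefix before passing the remaining keys to the converter's `configure` method, which is why the converter reads `database.type` rather than `sqlserverDebeziumConverter.database.type`. The sketch below imitates that prefix stripping with plain `java.util.Properties`. It is an illustration only, not Debezium's actual code, and the names `ConverterPropsDemo` and `stripPrefix` are hypothetical.

```java
import java.util.Properties;

public class ConverterPropsDemo {

    // Keep only the keys that start with "<converterName>." and drop that prefix.
    public static Properties stripPrefix(Properties all, String converterName) {
        String prefix = converterName + ".";
        Properties scoped = new Properties();
        for (String key : all.stringPropertyNames()) {
            if (key.startsWith(prefix)) {
                scoped.setProperty(key.substring(prefix.length()), all.getProperty(key));
            }
        }
        return scoped;
    }

    public static void main(String[] args) {
        Properties all = new Properties();
        all.setProperty("converters", "sqlserverDebeziumConverter");
        all.setProperty("sqlserverDebeziumConverter.type", "SqlserverDebeziumConverter");
        all.setProperty("sqlserverDebeziumConverter.database.type", "sqlserver");
        all.setProperty("sqlserverDebeziumConverter.format.date", "yyyy-MM-dd");

        // What the converter would see inside configure(...)
        Properties scoped = stripPrefix(all, all.getProperty("converters"));
        System.out.println(scoped.getProperty("database.type")); // sqlserver
        System.out.println(scoped.getProperty("format.date"));   // yyyy-MM-dd
    }
}
```

A key without the prefix, such as `converters` itself, never reaches the converter.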

##### 2.2 Custom `Sqlserver` deserialization format

`Flink-CDC` is built on `debezium`. The change events it captures from `Sqlserver` (snapshot reads, inserts, updates, and deletes) have the following format:

```text
# Snapshot (initial read)
Struct{after=Struct{id=1,order_date=2024-01-30,purchaser=1,quantity=100,product_id=1},source=Struct{version=1.9.7.Final,connector=sqlserver,name=sqlserver_transaction_log_source,ts_ms=1706574924473,snapshot=true,db=zeus,schema=dbo,table=orders,commit_lsn=0000002b:00002280:0003},op=r,ts_ms=1706603724432}

# Insert
Struct{after=Struct{id=12,order_date=2024-01-11,purchaser=6,quantity=233,product_id=63},source=Struct{version=1.9.7.Final,connector=sqlserver,name=sqlserver_transaction_log_source,ts_ms=1706603786187,db=zeus,schema=dbo,table=orders,change_lsn=0000002b:00002480:0002,commit_lsn=0000002b:00002480:0003,event_serial_no=1},op=c,ts_ms=1706603788461}

# Update
Struct{before=Struct{id=12,order_date=2024-01-11,purchaser=6,quantity=233,product_id=63},after=Struct{id=12,order_date=2024-01-11,purchaser=8,quantity=233,product_id=63},source=Struct{version=1.9.7.Final,connector=sqlserver,name=sqlserver_transaction_log_source,ts_ms=1706603845603,db=zeus,schema=dbo,table=orders,change_lsn=0000002b:00002500:0002,commit_lsn=0000002b:00002500:0003,event_serial_no=2},op=u,ts_ms=1706603850134}

# Delete
Struct{before=Struct{id=11,order_date=2024-01-11,purchaser=6,quantity=233,product_id=63},source=Struct{version=1.9.7.Final,connector=sqlserver,name=sqlserver_transaction_log_source,ts_ms=1706603973023,db=zeus,schema=dbo,table=orders,change_lsn=0000002b:000025e8:0002,commit_lsn=0000002b:000025e8:0005,event_serial_no=1},op=d,ts_ms=1706603973859}
```
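In the four sample events, `op` takes the values `r` (snapshot read), `c` (insert), `u` (update), and `d` (delete), and only the update carries both `before` and `after`. A minimal sketch of that mapping in plain Java with no Debezium dependency follows. The `OpCodeDemo` class and `ChangeOp` enum are hypothetical names used for illustration; the constant names mirror those of Debezium's `Envelope.Operation`.

```java
public class OpCodeDemo {

    // The four operation codes that appear in the sample events.
    public enum ChangeOp {
        READ("r", false, true),    // snapshot row: only "after"
        CREATE("c", false, true),  // insert: only "after"
        UPDATE("u", true, true),   // update: "before" and "after"
        DELETE("d", true, false);  // delete: only "before"

        public final String code;
        public final boolean hasBefore;
        public final boolean hasAfter;

        ChangeOp(String code, boolean hasBefore, boolean hasAfter) {
            this.code = code;
            this.hasBefore = hasBefore;
            this.hasAfter = hasAfter;
        }

        // Look up the operation for a one-letter code, failing loudly on anything else.
        public static ChangeOp fromCode(String code) {
            for (ChangeOp op : values()) {
                if (op.code.equals(code)) {
                    return op;
                }
            }
            throw new IllegalArgumentException("Unknown op code: " + code);
        }
    }

    public static void main(String[] args) {
        for (String code : new String[] {"r", "c", "u", "d"}) {
            ChangeOp op = ChangeOp.fromCode(code);
            System.out.println(code + " -> " + op
                    + " before=" + op.hasBefore + " after=" + op.hasAfter);
        }
    }
}
```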

You can therefore define your own deserialization format so that every event is emitted in one uniform shape. The format I use is shown below for reference:

```java
import com.alibaba.fastjson2.JSON;
import com.alibaba.fastjson2.JSONObject;
import com.alibaba.fastjson2.JSONWriter;
import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
import io.debezium.data.Envelope;
import org.apache.flink.api.common.typeinfo.BasicTypeInfo;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Schema;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;

import java.util.HashMap;
import java.util.Map;

public class CustomerDeserializationSchemaSqlserver implements DebeziumDeserializationSchema<String> {

    private static final long serialVersionUID = -1L;

    @Override
    public void deserialize(SourceRecord sourceRecord, Collector<String> collector) {
        Map<String, Object> resultMap = new HashMap<>();
        // Debezium 1.9.x names SQL Server topics <server>.<schema>.<table>,
        // so split[1] is the schema name (e.g. dbo)
        String topic = sourceRecord.topic();
        String[] split = topic.split("[.]");
        String database = split[1];
        String table = split[2];
        resultMap.put("db", database);
        resultMap.put("tableName", table);
        // Operation type
        Envelope.Operation operation = Envelope.operationFor(sourceRecord);
        // Row data
        Struct struct = (Struct) sourceRecord.value();
        Struct after = struct.getStruct("after");
        Struct before = struct.getStruct("before");
        String op = operation.name();
        resultMap.put("op", op);

        // Insert, update, or snapshot read: emit the "after" image
        if (op.equals(Envelope.Operation.CREATE.name())
                || op.equals(Envelope.Operation.READ.name())
                || op.equals(Envelope.Operation.UPDATE.name())) {
            JSONObject afterJson = new JSONObject();
            if (after != null) {
                Schema schema = after.schema();
                for (Field field : schema.fields()) {
                    afterJson.put(field.name(), after.get(field.name()));
                }
                resultMap.put("after", afterJson);
            }
        }

        // Delete: emit the "before" image
        if (op.equals(Envelope.Operation.DELETE.name())) {
            JSONObject beforeJson = new JSONObject();
            if (before != null) {
                Schema schema = before.schema();
                for (Field field : schema.fields()) {
                    beforeJson.put(field.name(), before.get(field.name()));
                }
                resultMap.put("before", beforeJson);
            }
        }

        collector.collect(JSON.toJSONString(resultMap,
                JSONWriter.Feature.FieldBased, JSONWriter.Feature.LargeObject));
    }

    @Override
    public TypeInformation<String> getProducedType() {
        return BasicTypeInfo.STRING_TYPE_INFO;
    }
}
```
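The deserializer derives `db` and `tableName` by splitting the record topic on dots, so it is worth checking which part of the topic lands in which field. The sample events were produced by Debezium 1.9.7, whose SQL Server connector, to my understanding, names topics `<server name>.<schema>.<table>`. The stand-alone sketch below applies the same split to a topic string assembled, under that assumption, from the `name`, `schema`, and `table` values in the sample events. `TopicSplitDemo` and `splitTopic` are hypothetical names.

```java
public class TopicSplitDemo {

    // Same split as in the deserializer: "[.]" is a character class matching a literal dot.
    public static String[] splitTopic(String topic) {
        return topic.split("[.]");
    }

    public static void main(String[] args) {
        String[] parts = splitTopic("sqlserver_transaction_log_source.dbo.orders");
        System.out.println(parts[0]); // sqlserver_transaction_log_source
        System.out.println(parts[1]); // dbo
        System.out.println(parts[2]); // orders
    }
}
```

Under that assumption the value stored under `db` is the schema (`dbo`). The database name (`zeus` in the samples) is available in the `db` field of the event's `source` struct if it is needed downstream.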

##### 2.3 Custom date format converter

By default, `debezium` emits dates as 5-digit numbers (days since 1970-01-01) and datetimes as 13-digit numbers (milliseconds since the epoch), so each `Sqlserver` date/time type has to be converted into a standard date or time string. The main `Sqlserver` date/time types are:

| Column type | Snapshot type (JDBC type) | CDC type (JDBC type) |
| --- | --- | --- |
| DATE | java.sql.Date(91) | java.sql.Date(91) |
| TIME | java.sql.Timestamp(92) | java.sql.Time(92) |
| DATETIME | java.sql.Timestamp(93) | java.sql.Timestamp(93) |
| DATETIME2 | java.sql.Timestamp(93) | java.sql.Timestamp(93) |
| DATETIMEOFFSET | microsoft.sql.DateTimeOffset(-155) | microsoft.sql.DateTimeOffset(-155) |
| SMALLDATETIME | java.sql.Timestamp(93) | java.sql.Timestamp(93) |
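To make the 5-digit and 13-digit numbers concrete, the sketch below decodes them with `java.time`, assuming the 5-digit value counts days since 1970-01-01 and the 13-digit value counts milliseconds since the epoch, rendered here in UTC. The inputs are illustrative: `19752` is the epoch day of the `2024-01-30` order date in the sample events, and `1706603724432` is the `ts_ms` of the snapshot event. `EpochDecodeDemo` and its method names are hypothetical.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class EpochDecodeDemo {

    private static final DateTimeFormatter DATE =
            DateTimeFormatter.ofPattern("yyyy-MM-dd");
    private static final DateTimeFormatter DATETIME =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Days since 1970-01-01 -> "yyyy-MM-dd"
    public static String formatEpochDay(long epochDay) {
        return DATE.format(LocalDate.ofEpochDay(epochDay));
    }

    // Milliseconds since the epoch -> "yyyy-MM-dd HH:mm:ss" in UTC (assumed zone)
    public static String formatEpochMillis(long epochMillis) {
        LocalDateTime utc = LocalDateTime.ofInstant(Instant.ofEpochMilli(epochMillis), ZoneOffset.UTC);
        return DATETIME.format(utc);
    }

    public static void main(String[] args) {
        System.out.println(formatEpochDay(19752));              // 2024-01-30
        System.out.println(formatEpochMillis(1706603724432L));  // 2024-01-30 08:35:24
    }
}
```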

```java
import io.debezium.spi.converter.CustomConverter;
import io.debezium.spi.converter.RelationalColumn;
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.connect.data.SchemaBuilder;

import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Properties;

@Slf4j
public class SqlserverDebeziumConverter implements CustomConverter<SchemaBuilder, RelationalColumn> {

    private static final String DATE_FORMAT = "yyyy-MM-dd";
    private static final String TIME_FORMAT = "HH:mm:ss";
    private static final String DATETIME_FORMAT = "yyyy-MM-dd HH:mm:ss";

    private DateTimeFormatter dateFormatter;
    private DateTimeFormatter timeFormatter;
    private DateTimeFormatter datetimeFormatter;
    private SchemaBuilder schemaBuilder;
    private String databaseType;
    private String schemaNamePrefix;

    @Override
    public void configure(Properties properties) {
        // Required: database.type; only "sqlserver" is supported
        this.databaseType = properties.getProperty("database.type");
        // Fail fast if it is missing or set to anything else
        if (this.databaseType == null || !this.databaseType.equals("sqlserver")) {
            throw new IllegalArgumentException("database.type must be set to 'sqlserver'");
        }
        // Optional: format.date, format.time, format.datetime (output patterns)
        String dateFormat = properties.getProperty("format.date", DATE_FORMAT);
        String timeFormat = properties.getProperty("format.time", TIME_FORMAT);
        String datetimeFormat = properties.getProperty("format.datetime", DATETIME_FORMAT);
        // Default schema name prefix: this class's fully qualified name + database type
        String className = this.getClass().getName();
        // Use schema.name.prefix if it was provided
        this.schemaNamePrefix = properties.getProperty("schema.name.prefix", className + "." + this.databaseType);
        // Initialise the formatters
        dateFormatter = DateTimeFormatter.ofPattern(dateFormat);
        timeFormatter = DateTimeFormatter.ofPattern(timeFormat);
        datetimeFormatter = DateTimeFormatter.ofPattern(datetimeFormat);
    }

    // Converters for the sqlserver column types
    public void registerSqlserverConverter(String columnType, ConverterRegistration<SchemaBuilder> converterRegistration) {
        String schemaName = this.schemaNamePrefix + "." + columnType.toLowerCase();
        schemaBuilder = SchemaBuilder.string().name(schemaName);
        switch (columnType) {
            case "DATE":
                converterRegistration.register(schemaBuilder, value -> {
                    if (value == null) {
                        return null;
                    } else if (value instanceof java.sql.Date) {
                        return dateFormatter.format(((java.sql.Date) value).toLocalDate());
                    } else {
                        return this.failConvert(value, schemaName);
                    }
                });
                break;
            case "TIME":
                converterRegistration.register(schemaBuilder, value -> {
                    if (value == null) {
                        return null;
                    } else if (value instanceof java.sql.Time) {
                        return timeFormatter.format(((java.sql.Time) value).toLocalTime());
                    } else if (value instanceof java.sql.Timestamp) {
                        return timeFormatter.format(((java.sql.Timestamp) value).toLocalDateTime().toLocalTime());
                    } else {
                        // (the source listing is cut off at this point)
```
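The conversion lambdas in the converter boil down to a `java.sql` to `java.time` conversion followed by a formatter call. The stand-alone sketch below reproduces the `DATE` and `TIME` branches without Debezium so they can be tried in isolation. It covers the case from the type table where a `TIME` column arrives as `java.sql.Timestamp` during the snapshot and as `java.sql.Time` from CDC. `TemporalFormatDemo` and its method names are hypothetical, and unsupported values simply raise an exception here rather than going through the original's `failConvert`, whose body is not shown in the listing.

```java
import java.time.format.DateTimeFormatter;

public class TemporalFormatDemo {

    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");
    private static final DateTimeFormatter TIME = DateTimeFormatter.ofPattern("HH:mm:ss");

    // DATE columns arrive as java.sql.Date in both snapshot and CDC phases.
    public static String formatDate(Object value) {
        if (value == null) {
            return null;
        }
        if (value instanceof java.sql.Date) {
            return DATE.format(((java.sql.Date) value).toLocalDate());
        }
        throw new IllegalArgumentException("Unsupported DATE value: " + value.getClass());
    }

    // TIME columns arrive as java.sql.Timestamp (snapshot) or java.sql.Time (CDC).
    public static String formatTime(Object value) {
        if (value == null) {
            return null;
        }
        if (value instanceof java.sql.Time) {
            return TIME.format(((java.sql.Time) value).toLocalTime());
        }
        if (value instanceof java.sql.Timestamp) {
            return TIME.format(((java.sql.Timestamp) value).toLocalDateTime().toLocalTime());
        }
        throw new IllegalArgumentException("Unsupported TIME value: " + value.getClass());
    }

    public static void main(String[] args) {
        System.out.println(formatDate(java.sql.Date.valueOf("2024-01-30")));               // 2024-01-30
        System.out.println(formatTime(java.sql.Time.valueOf("08:35:24")));                 // 08:35:24
        System.out.println(formatTime(java.sql.Timestamp.valueOf("2024-01-30 08:35:24"))); // 08:35:24
    }
}
```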

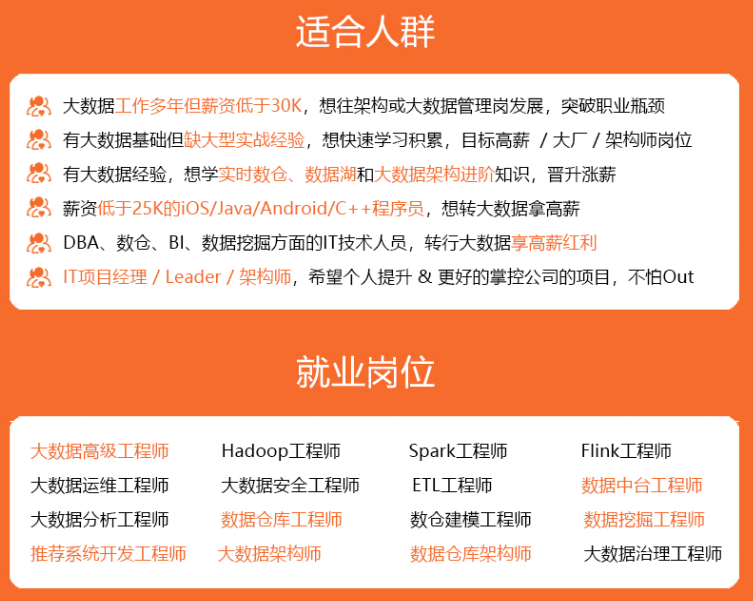

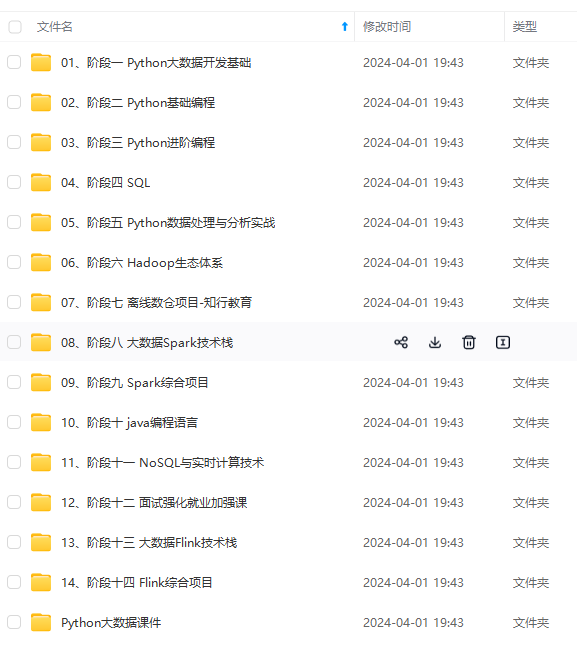

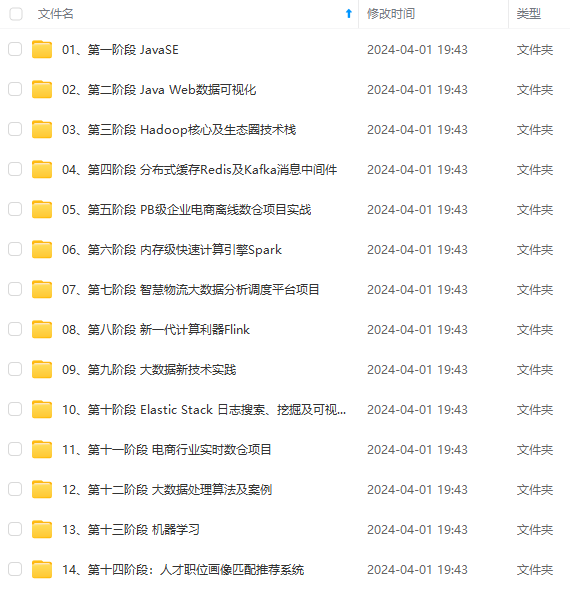

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

)]

[外链图片转存中…(img-ocepcTM9-1714781582203)]

[外链图片转存中…(img-lgVPjo5G-1714781582204)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新