
How to Use Multi-Head Attention (MultiheadAttention): A Detailed Explanation of What to Do with the Attention Outputs

Author: 人工智能uu | 2024-07-31 04:40:04


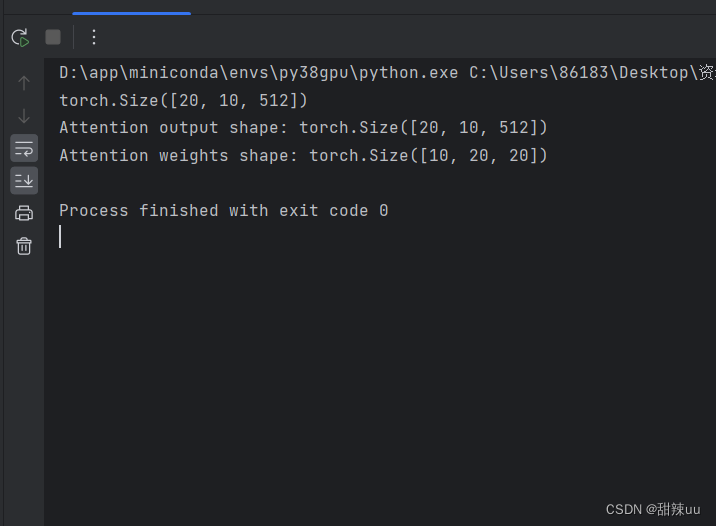

```python
import torch
import torch.nn as nn

# Define the multi-head attention layer
embed_dim = 512  # input embedding dimension
num_heads = 8    # number of attention heads (must divide embed_dim evenly)
multihead_attn = nn.MultiheadAttention(embed_dim, num_heads)

# Create some example data (default layout: [seq_len, batch_size, embed_dim])
batch_size = 10  # batch size
seq_len = 20     # sequence length
query = torch.rand(seq_len, batch_size, embed_dim)  # query tensor
key = torch.rand(seq_len, batch_size, embed_dim)    # key tensor
value = torch.rand(seq_len, batch_size, embed_dim)  # value tensor
print(query.shape)

# Compute multi-head attention
attn_output, attn_output_weights = multihead_attn(query, key, value)
print("Attention output shape:", attn_output.shape)  # [seq_len, batch_size, embed_dim]
# The weights are averaged over heads by default, so there is no num_heads dimension
print("Attention weights shape:", attn_output_weights.shape)  # [batch_size, seq_len, seq_len]
```
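The example above returns one weight matrix per batch element because `nn.MultiheadAttention` averages the weights across heads by default. The sketch below shows how per-head weights can be requested and how the attention output is typically consumed afterwards. It assumes PyTorch 1.11 or later (where the `average_attn_weights` argument is available), and the residual connection followed by `nn.LayerNorm` is the common Transformer-style pattern rather than something the original post prescribes. The names `mha`, `x`, and `norm` are illustrative only.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

embed_dim, num_heads = 512, 8
batch_size, seq_len = 10, 20

mha = nn.MultiheadAttention(embed_dim, num_heads)

# Self-attention: the same tensor serves as query, key, and value.
x = torch.rand(seq_len, batch_size, embed_dim)

# Default call: weights are averaged over the heads.
out, avg_weights = mha(x, x, x)
print(avg_weights.shape)  # torch.Size([10, 20, 20])

# Ask for one weight matrix per head (assumes PyTorch >= 1.11).
_, per_head_weights = mha(x, x, x, average_attn_weights=False)
print(per_head_weights.shape)  # torch.Size([10, 8, 20, 20])

# One common way to use the output: residual connection plus layer norm,
# as in a Transformer encoder block (an assumption, not from the post).
norm = nn.LayerNorm(embed_dim)
y = norm(x + out)
print(y.shape)  # torch.Size([20, 10, 512])
```

The output `out` already has the heads concatenated and projected back to `embed_dim`, so it can be passed to the next layer directly. The per-head weights are mainly useful for inspection or visualization; averaging them over the head dimension reproduces the default weights.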
