热门标签

热门文章

- 1【接口】常见接口集合(返回JSON)

- 2Java开发中的23种设计模式详解(转)_java23种设计模式 百度网盘

- 3基于Hive数仓的陌陌聊天数据需求开发_hive momo实验

- 4抖音seo短视频矩阵系统源码开发部署分享--技术自研_抖音矩阵云混剪系统

- 5论文笔记《3D Gaussian Splatting for Real-Time Radiance Field Rendering》_3d gaussian splatting论文

- 6MySQL笔记——数据库当中的事务以及Java实现对数据库进行增删改查操作_删除start transaction里面插入的数据

- 7pandas导出Excel表格,银行卡号、身份证号无法正常显示的问题,该怎么解决?...

- 8计算机视觉:从OpenCV到物体识别_计算机视觉 物体识别

- 9服务器搭建图床 + PicGo 配置 + Typora 总结_京东云服务器 搭建图床

- 10BCD码计数器Verilog代码vivado仿真_编写veriloghdl代码实现模为60的bcd码加减法计数器,并编写测试代码进行测试。

当前位置: article > 正文

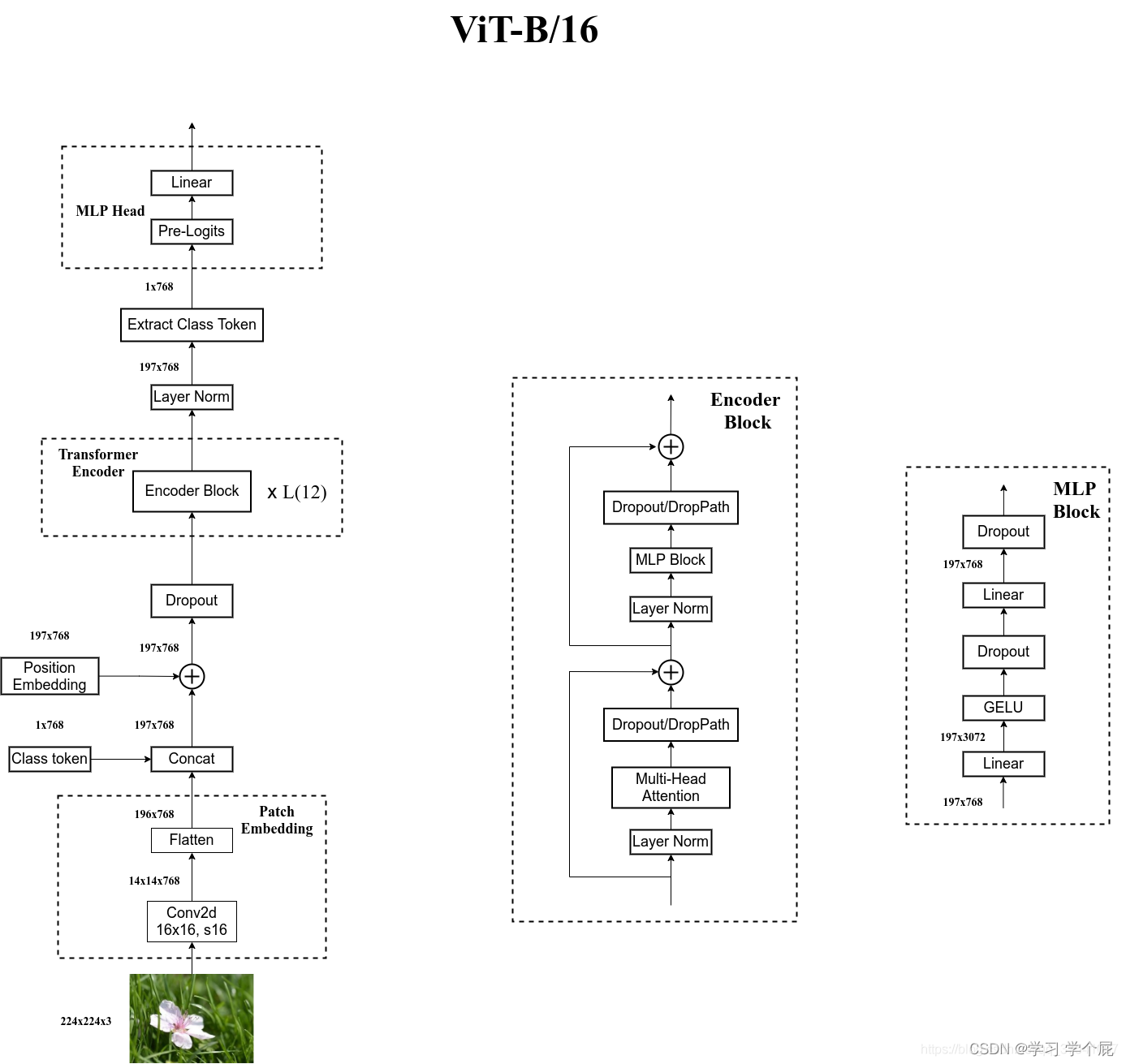

VIT(Vision Transformer)笔记_viv transformer矩阵计算

作者:人工智能uu | 2024-06-28 00:16:02

赞

踩

viv transformer矩阵计算

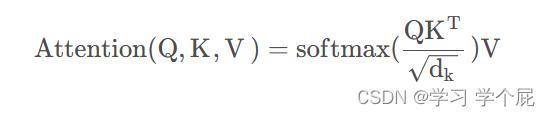

1. Self Attention公式

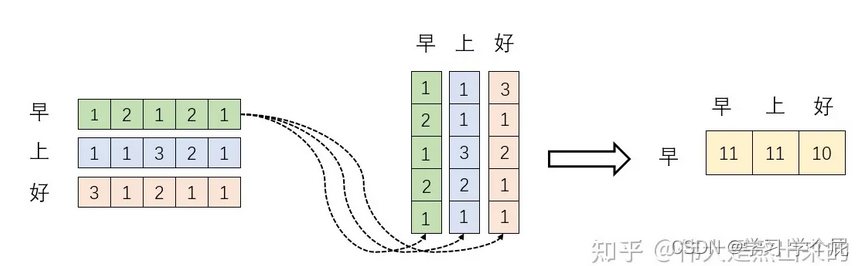

先理解,一个矩阵乘以它自己的转置,是在计算第一个行向量与自己的内积,表征两个向量的夹角,表征一个向量在另一个向量上的投影

投影的值大,说明两个向量相关度高。

矩阵是一个方阵,我们以行向量的角度理解,里面保存了每个向量与自己和其他向量进行内积运算的结果。

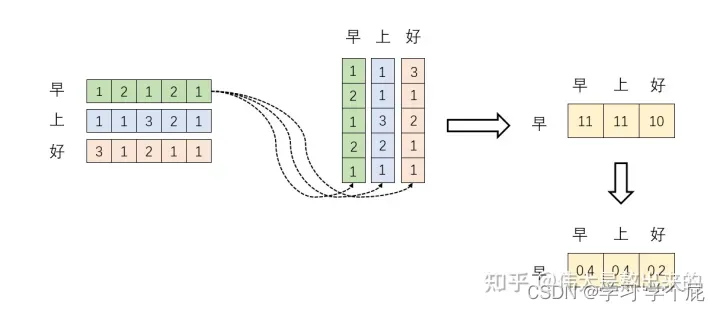

Softmax之后,这些数字的和为1了

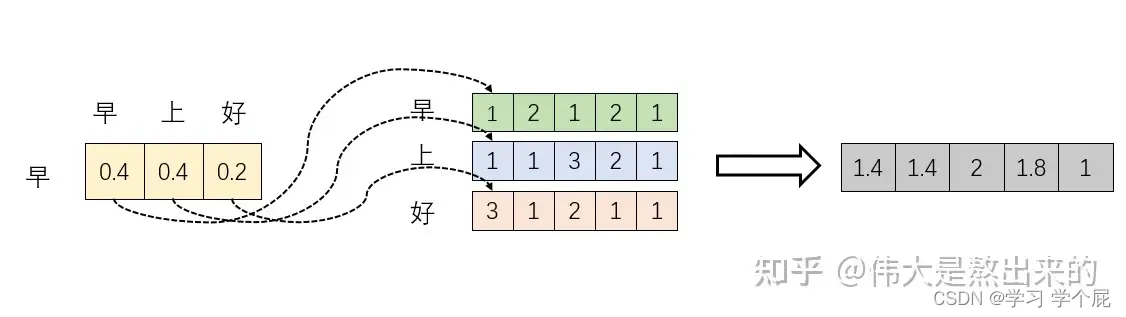

之后再乘X矩阵

这个新的行向量就是"早"字词向量经过注意力机制加权求和之后的表示。

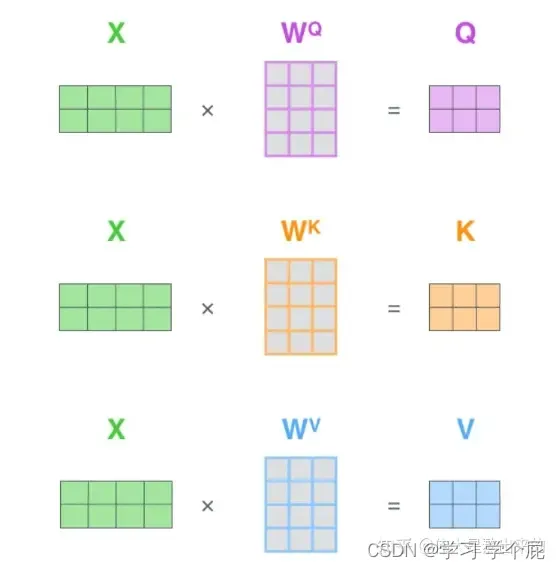

对于QKV矩阵,其实都是X矩阵的线性表示,采用W矩阵,是为了提高矩阵的拟合能力。

对于,是为了使方差变为1,使得模型稳定。

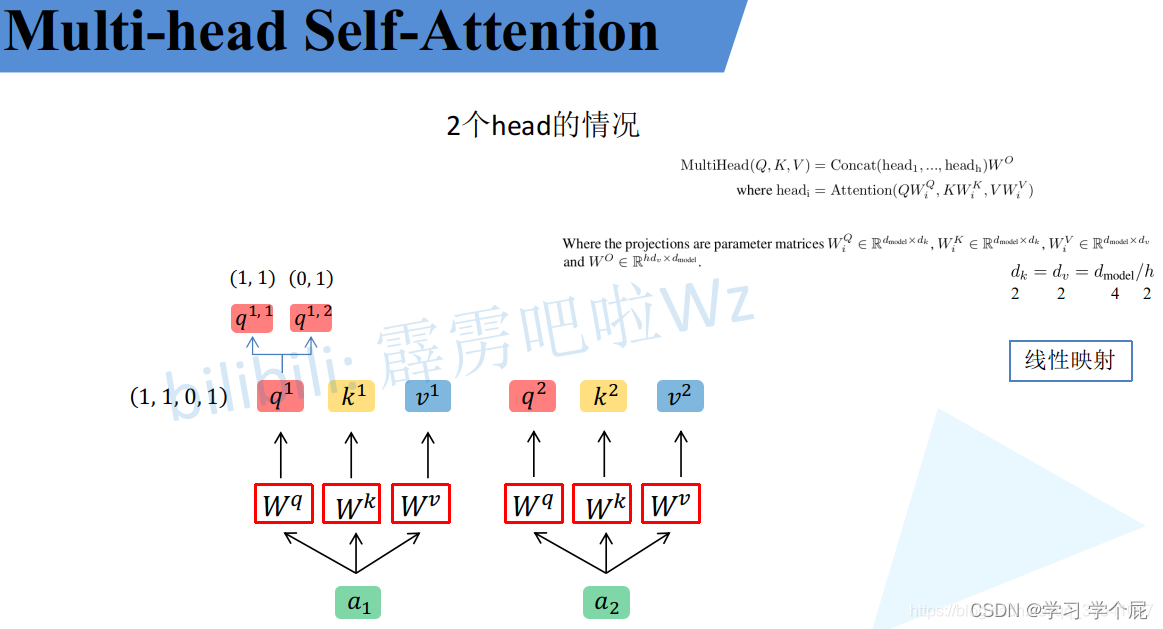

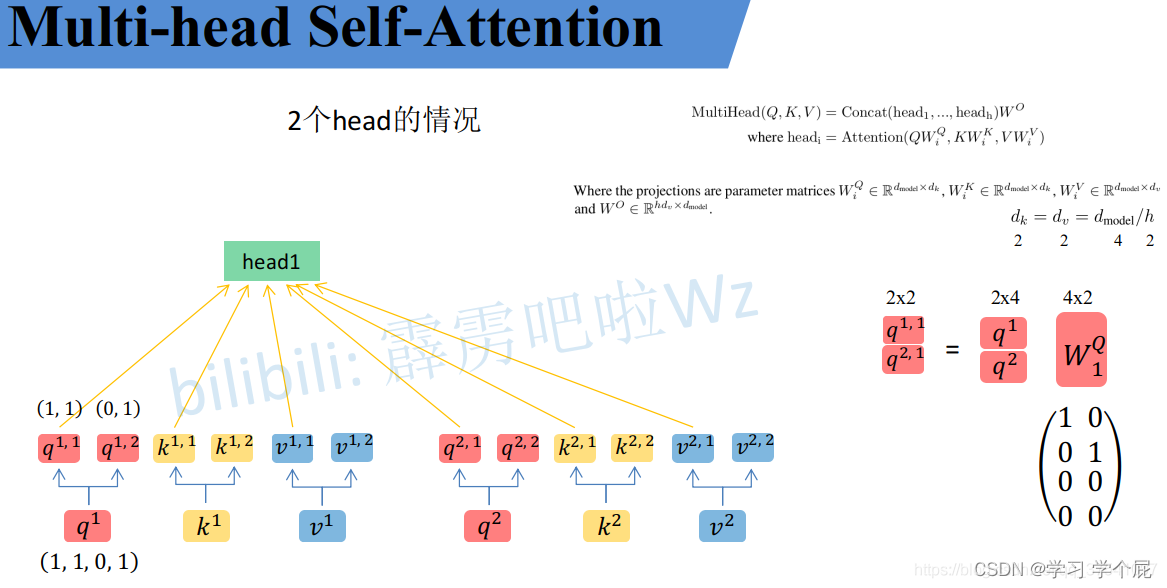

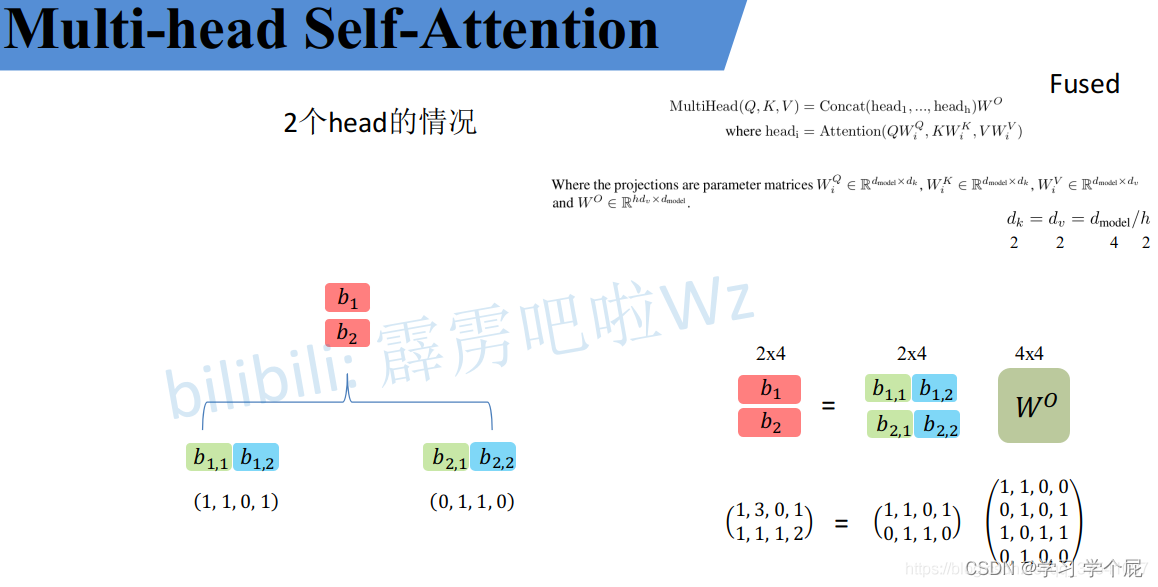

2. Multi-Head Attention

首先和self-attention一样,将a分成QKV,之后根据头数,将QKV均分

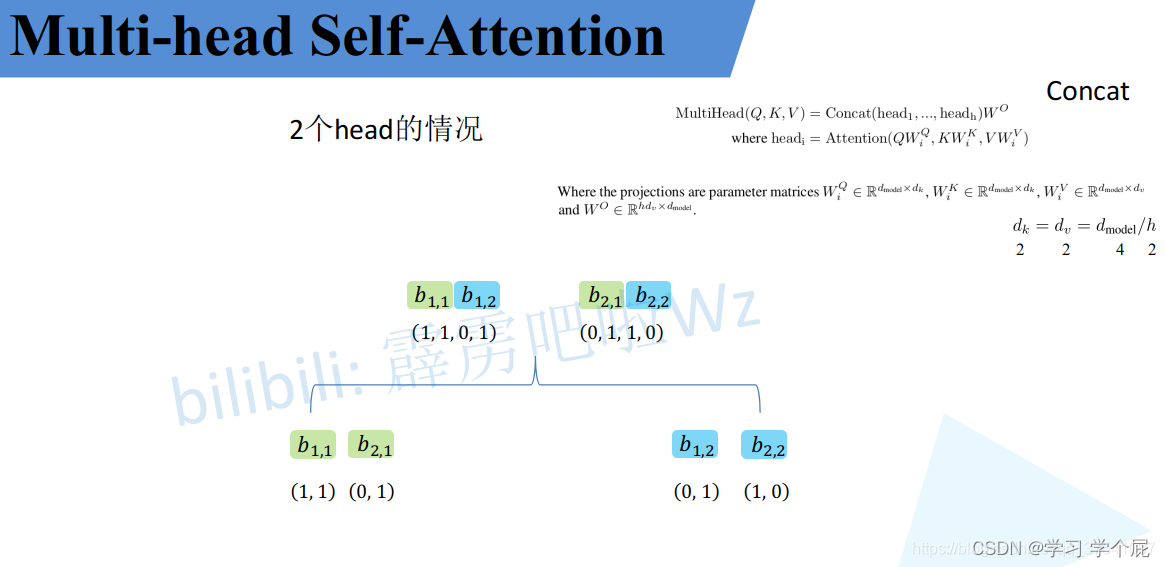

接着将每个head得到的结果进行concat拼接

接着将拼接后的结果与W融合

代码实现如下

- class Attention(nn.Module):

- def __init__(self,

- dim, # 输入token的dim

- num_heads=8,

- qkv_bias=False,

- qk_scale=None,

- attn_drop_ratio=0.,

- proj_drop_ratio=0.):

- super(Attention, self).__init__()

- self.num_heads = num_heads

- head_dim = dim // num_heads

- self.scale = qk_scale or head_dim ** -0.5

- self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)

- self.attn_drop = nn.Dropout(attn_drop_ratio)

- self.proj = nn.Linear(dim, dim)

- self.proj_drop = nn.Dropout(proj_drop_ratio)

-

- def forward(self, x):

- # [batch_size, num_patches + 1, total_embed_dim]

- B, N, C = x.shape

-

- # qkv(): -> [batch_size, num_patches + 1, 3 * total_embed_dim]

- # reshape: -> [batch_size, num_patches + 1, 3, num_heads, embed_dim_per_head]

- # permute: -> [3, batch_size, num_heads, num_patches + 1, embed_dim_per_head]

- qkv = self.qkv(x).reshape(B, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)

- # [batch_size, num_heads, num_patches + 1, embed_dim_per_head]

- q, k, v = qkv[0], qkv[1], qkv[2] # make torchscript happy (cannot use tensor as tuple)

-

- # transpose: -> [batch_size, num_heads, embed_dim_per_head, num_patches + 1]

- # @: multiply -> [batch_size, num_heads, num_patches + 1, num_patches + 1]

- attn = (q @ k.transpose(-2, -1)) * self.scale

- attn = attn.softmax(dim=-1)

- attn = self.attn_drop(attn)

-

- # @: multiply -> [batch_size, num_heads, num_patches + 1, embed_dim_per_head]

- # transpose: -> [batch_size, num_patches + 1, num_heads, embed_dim_per_head]

- # reshape: -> [batch_size, num_patches + 1, total_embed_dim]

- x = (attn @ v).transpose(1, 2).reshape(B, N, C)

- x = self.proj(x)

- x = self.proj_drop(x)

- return x

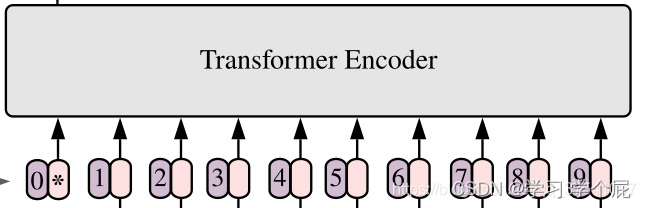

3. Embedding层

将图片划分成一堆Patches

将输入图片(224x224)按照16x16大小的Patch进行划分,划分后会得到(224/16)^2 = 196个patch,每个patch的shape的大小则为【16,16,3】,之后将其拉成16*16*3=768的向量(token)

代码实现如下

- class PatchEmbed(nn.Module):

- """

- 2D Image to Patch Embedding

- """

- def __init__(self, img_size=224, patch_size=16, in_c=3, embed_dim=768):

- super().__init__()

- img_size = (img_size, img_size)

- patch_size = (patch_size, patch_size)

- self.img_size = img_size

- self.patch_size = patch_size

- #224/16=14,224/16=14

- self.grid_size = (img_size[0] // patch_size[0], img_size[1] // patch_size[1])

- #14*14=196

- self.num_patches = self.grid_size[0] * self.grid_size[1]

-

- self.proj = nn.Conv2d(in_c, embed_dim, kernel_size=patch_size, stride=patch_size)

-

- def forward(self, x):

- B, C, H, W = x.shap

- # flatten: [B, C, H, W] -> [B, C, HW] B*768*196

- # transpose: [B, C, HW] -> [B, HW, C] B*196*768

- x = self.proj(x).flatten(2).transpose(1, 2)

- x = self.norm(x)

- return x

在Embedding层后,需增加一个Positional Encoding【196,768】->【197,768】

- # 定义一个可学习的Class token

- self.cls_token = nn.Parameter(torch.zeros(1, 1, embed_dim)) # 第一个1为batch_size embed_dim=768

- cls_token = self.cls_token.expand(x.shape[0], -1, -1) # 保证cls_token的batch维度和x一致

- if self.dist_token is None:

- x = torch.cat((cls_token, x), dim=1) # [B, 197, 768] self.dist_token为None,会执行这句

- else:

- x = torch.cat((cls_token, self.dist_token.expand(x.shape[0], -1, -1), x), dim=1)

-

之后再加上一个位置编码

- # 定义一个可学习的位置编码

- self.pos_embed = nn.Parameter(torch.zeros(1, num_patches + self.num_tokens, embed_dim)) #这个维度为(1,197,768)

- x = x + self.pos_embed

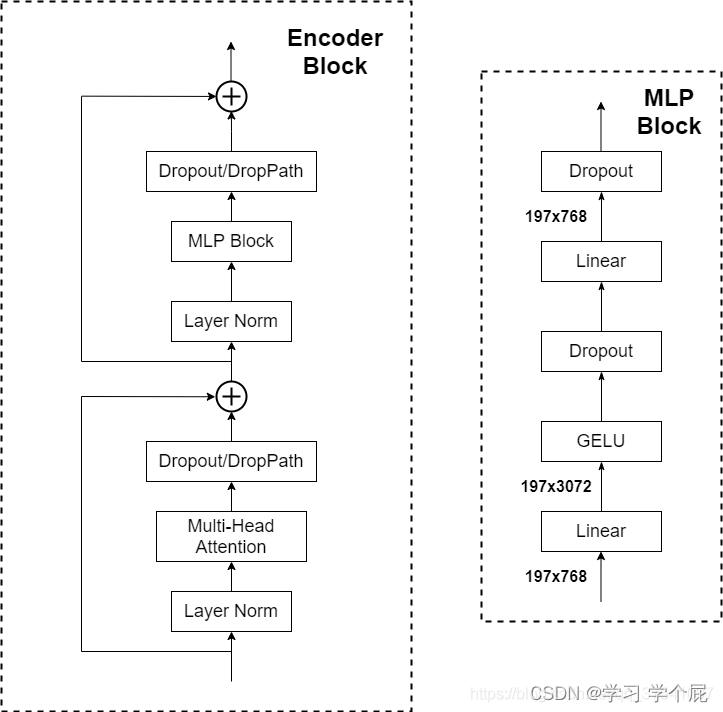

4. Transformer Encoder

其实就是重复堆叠Encoder Block L次

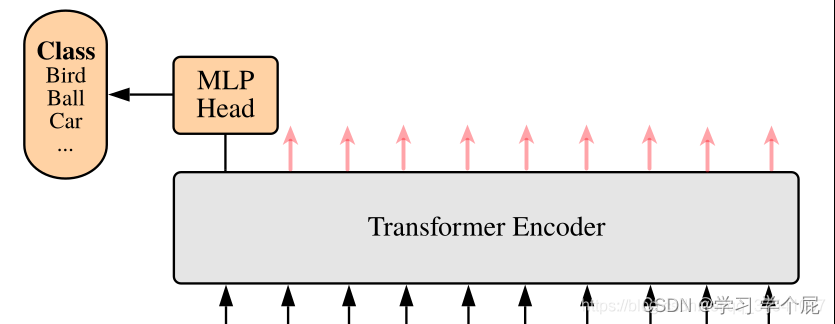

5. MLP Head

从【197,768】中取出【1,768】,然后进行分类

6. 网络结构

推荐阅读

相关标签