- 1RespeakPro对口型数字人使用教程_在线数字人对口型

- 2图像处理与视觉感知复习--概述_灰度级数通常取l=

- 3c#中的类数组数据集合_c# 类数组

- 4Python生成excel文件的三种方式_python新建excel表格

- 5windows10配置sqlserver2014允许远程连接

- 6Apache IoTDB 2021年度总结:在持续开源的路上勇往直前

- 7Git-将某次commit从一个分支转移到另一个分支_git将某个分支的代码某次提交,再提交到另外一个分支

- 8Linux常见面试题,一网打尽!

- 9医疗AI新突破!多模态对齐网络精准预测X光生存,自动生成医疗报告!

- 10anywhere无法获取服务器响应,使用Chat Anywhere可能出现的问题与解决方法

docker 搭建 redis 集群_docker搭建redis集群

赞

踩

Redis主从集群结构

下图就是一个简单的Redis主从集群结构:

如图所示,集群中有一个master节点、两个slave节点(现在叫replica)。当我们通过Redis的Java客户端访问主从集群时,应该做好路由:

- 如果是写操作,应该访问master节点,master会自动将数据同步给两个slave节点

- 如果是读操作,建议访问各个slave节点,从而分担并发压力

Redis主从集群搭建

我们会在同一个虚拟机中利用3个Docker容器来搭建主从集群,容器信息如下:

| ##### 容器名

| ##### 角色

| ##### IP

| ##### 映射端口

|

| — | — | — | — |

| r1 | master | 192.168.70.145 | 7001 |

| r2 | slave | 192.168.70.145 | 7002 |

| r3 | slave | 192.168.70.145 | 7003 |

- 通过docker-compose.yaml文件搭建

version: "3.2" services: r1: image: redis container_name: r1 network_mode: "host" #直接在宿主机上,没有通过网桥 entrypoint: ["redis-server", "--port", "7001"] r2: image: redis container_name: r2 network_mode: "host" entrypoint: ["redis-server", "--port", "7002"] r3: image: redis container_name: r3 network_mode: "host" entrypoint: ["redis-server", "--port", "7003"]

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 挂载镜像 镜像

[root@Docker redis]# docker load -i redis.tar

2edcec3590a4: Loading layer [==================================================>] 83.86MB/83.86MB

9b24afeb7c2f: Loading layer [==================================================>] 338.4kB/338.4kB

4b8e2801e0f9: Loading layer [==================================================>] 4.274MB/4.274MB

529cdb636f61: Loading layer [==================================================>] 27.8MB/27.8MB

9975392591f2: Loading layer [==================================================>] 2.048kB/2.048kB

8e5669d83291: Loading layer [==================================================>] 3.584kB/3.584kB

Loaded image: redis:latest

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 使用docker-compose运行容器

[root@Docker redis]# docker compose up -d

WARN[0000] /root/redis/docker-compose.yaml: `version` is obsolete

[+] Running 3/3

✔ Container r1 Started 0.5s

✔ Container r2 Started 0.5s

✔ Container r3 Started

- 1

- 2

- 3

- 4

- 5

- 6

- 查看是否运行成功

[root@Docker redis]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ebcb81cf22a7 redis "redis-server --port…" 3 minutes ago Up 3 minutes r3

6f9fdad55162 redis "redis-server --port…" 3 minutes ago Up 3 minutes r1

d4b87d243843 redis "redis-server --port…" 3 minutes ago Up 3 minutes r2

[root@Docker ~]# ps -ef|grep redis

root 37485 37423 0 19:23 ? 00:00:00 redis-server *:7003

root 37489 37417 0 19:23 ? 00:00:00 redis-server *:7002

root 37493 37428 0 19:23 ? 00:00:00 redis-server *:7001

root 37805 36419 0 19:28 pts/0 00:00:00 grep --color=auto redi

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 建立主从关系有临时和永久两种模式:

- 永久生效:在redis.conf文件中利用slaveof命令指定master节点

- 临时生效:直接利用redis-cli控制台输入slaveof命令,指定master节点

# Redis5.0以前

slaveof <masterip> <masterport>

# Redis5.0以后

replicaof <masterip> <masterport>

- 1

- 2

- 3

- 4

- redis默认每一台机器都是master

r1 127.0.0.1:7001> info replication # Replication role:master connected_slaves:0 master_failover_state:no-failover master_replid:60e71a4245287790354b761da5293f277fc06bbd master_replid2:0000000000000000000000000000000000000000 master_repl_offset:0 second_repl_offset:-1 repl_backlog_active:0 repl_backlog_size:1048576 repl_backlog_first_byte_offset:0 repl_backlog_histlen:0 127.0.0.1:7001> ---------------------------------------------------------------- r2 127.0.0.1:7002> info replication # Replication role:master connected_slaves:0 master_failover_state:no-failover master_replid:3748fe320f7ade3e218ecc02e465ed0e37fa2906 master_replid2:0000000000000000000000000000000000000000 master_repl_offset:0 second_repl_offset:-1 repl_backlog_active:0 repl_backlog_size:1048576 repl_backlog_first_byte_offset:0 repl_backlog_histlen:0 ------------------------------------------------------------------- r3 127.0.0.1:7003> info replication # Replication role:master connected_slaves:0 master_failover_state:no-failover master_replid:24a8e2f9c93757046a37e65c2424655f082fbb64 master_replid2:0000000000000000000000000000000000000000 master_repl_offset:0 second_repl_offset:-1 repl_backlog_active:0 repl_backlog_size:1048576 repl_backlog_first_byte_offset:0 repl_backlog_histlen:0

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 连接r2,让其以r1为master

127.0.0.1:7002> slaveof 192.168.70.145 7001 OK # 查看是否成功 127.0.0.1:7002> info replication # Replication role:slave master_host:192.168.70.145 master_port:7001 master_link_status:up master_last_io_seconds_ago:3 master_sync_in_progress:0 slave_read_repl_offset:0 slave_repl_offset:0 slave_priority:100 slave_read_only:1 replica_announced:1 connected_slaves:0 master_failover_state:no-failover master_replid:1b21b42e9e00ab7972832625056ea34cbf210541 master_replid2:0000000000000000000000000000000000000000 master_repl_offset:0 second_repl_offset:-1 repl_backlog_active:1 repl_backlog_size:1048576 repl_backlog_first_byte_offset:1 repl_backlog_histlen:0 127.0.0.1:7002>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 连接r3,让其以r1为master

127.0.0.1:7003> slaveof 192.168.70.145 7001 OK 127.0.0.1:7003> info replication # Replication role:slave master_host:192.168.70.145 master_port:7001 master_link_status:up master_last_io_seconds_ago:2 master_sync_in_progress:0 slave_read_repl_offset:42 slave_repl_offset:42 slave_priority:100 slave_read_only:1 replica_announced:1 connected_slaves:0 master_failover_state:no-failover master_replid:1b21b42e9e00ab7972832625056ea34cbf210541 master_replid2:0000000000000000000000000000000000000000 master_repl_offset:42 second_repl_offset:-1 repl_backlog_active:1 repl_backlog_size:1048576 repl_backlog_first_byte_offset:43 repl_backlog_histlen:0 127.0.0.1:7003>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 查看r1

127.0.0.1:7001> info replication # Replication role:master connected_slaves:2 slave0:ip=192.168.70.145,port=7002,state=online,offset=42,lag=0 slave1:ip=192.168.70.145,port=7003,state=online,offset=42,lag=0 master_failover_state:no-failover master_replid:1b21b42e9e00ab7972832625056ea34cbf210541 master_replid2:0000000000000000000000000000000000000000 master_repl_offset:42 second_repl_offset:-1 repl_backlog_active:1 repl_backlog_size:1048576 repl_backlog_first_byte_offset:1 repl_backlog_histlen:42 127.0.0.1:7001>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 测试 redis 主从结构

- master 主要负责写

127.0.0.1:7001> set k1 v1

OK

127.0.0.1:7001> get k1

"v1"

127.0.0.1:7001>

- 1

- 2

- 3

- 4

- 5

- slaver 主要负责读

127.0.0.1:7003> get k1

"v1"

127.0.0.1:7003> set k2 v2

(error) READONLY You can't write against a read only replica.

127.0.0.1:7003>

127.0.0.1:7002> get k1

"v1"

127.0.0.1:7002> set k2 v2

(error) READONLY You can't write against a read only replica.

127.0.0.1:7002>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

主从同步原理

- 全量同步

- 主从第一次建立连接时,会执行全量同步,将master节点的所有数据都拷贝给slave节点,流程:

- 流程:

这里有一个问题,master如何得知salve是否是第一次来同步呢??

有几个概念,可以作为判断依据:

- Replication Id:简称replid,是数据集的标记,replid一致则是同一数据集。每个master都有唯一的replid,slave则会继承master节点的replid

- offset:偏移量,随着记录在repl_baklog中的数据增多而逐渐增大。slave完成同步时也会记录当前同步的offset。如果slave的offset小于master的offset,说明slave数据落后于master,需要更新。

- 因此slave做数据同步,必须向master声明自己的replication id 和offset,master才可以判断到底需要同步哪些数据。

由于我们在执行slaveof命令之前,所有redis节点都是master,有自己的replid和offset。

当我们第一次执行slaveof命令,与master建立主从关系时,发送的replid和offset是自己的,与master肯定不一致。

master判断发现slave发送来的replid与自己的不一致,说明这是一个全新的slave,就知道要做全量同步了。

master会将自己的replid和offset都发送给这个slave,slave保存这些信息到本地。自此以后slave的replid就与master一致了

因此,master判断一个节点是否是第一次同步的依据,就是看replid是否一致。流程如图:

完整流程描述:

- slave节点请求增量同步

- master节点判断replid,发现不一致,拒绝增量同步

- master将完整内存数据生成RDB,发送RDB到slave

- slave清空本地数据,加载master的RDB

- master将RDB期间的命令记录在repl_baklog,并持续将log中的命令发送给slave

- slave执行接收到的命令,保持与master之间的同步

来看下r1节点的运行日志:

再看下r2节点执行replicaof命令时的日志:

与我们描述的完全一致。

- 增量同步

- 全量同步需要先做RDB,然后将RDB文件通过网络传输个slave,成本太高了。因此除了第一次做全量同步,其它大多数时候slave与master都是做增量同步。

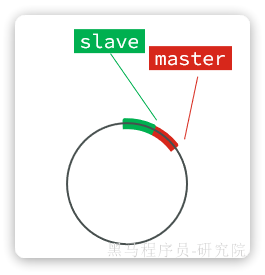

- 什么是增量同步?就是只更新slave与master存在差异的部分数据。如图:

- 那么master怎么知道slave与自己的数据差异在哪里呢?

- 这就要说到全量同步时的repl_baklog文件了。这个文件是一个固定大小的数组,只不过数组是环形,也就是说角标到达数组末尾后,会再次从0开始读写,这样数组头部的数据就会被覆盖。

repl_baklog中会记录Redis处理过的命令及offset,包括master当前的offset,和slave已经拷贝到的offset:

slave与master的offset之间的差异,就是salve需要增量拷贝的数据了。

随着不断有数据写入,master的offset逐渐变大,slave也不断的拷贝,追赶master的offset:

直到数组被填满:

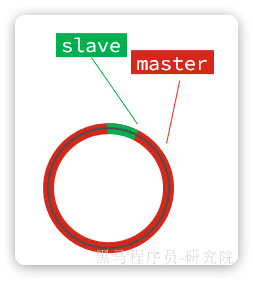

此时,如果有新的数据写入,就会覆盖数组中的旧数据。不过,旧的数据只要是绿色的,说明是已经被同步到slave的数据,即便被覆盖了也没什么影响。因为未同步的仅仅是红色部分:

但是,如果slave出现网络阻塞,导致master的offset远远超过了slave的offset:

如果master继续写入新数据,master的offset就会覆盖repl_baklog中旧的数据,直到将slave现在的offset也覆盖:

棕色框中的红色部分,就是尚未同步,但是却已经被覆盖的数据。此时如果slave恢复,需要同步,却发现自己的offset都没有了,无法完成增量同步了。只能做全量同步。

repl_baklog大小有上限,写满后会覆盖最早的数据。如果slave断开时间过久,导致尚未备份的数据被覆盖,则无法基于repl_baklog做增量同步,只能再次全量同步。

- 主从同步优化

- 主从同步可以保证主从数据的一致性,非常重要。

- 可以从以下几个方面来优化Redis主从就集群:

- 在master中配置repl-diskless-sync yes启用无磁盘复制,避免全量同步时的磁盘IO。

- Redis单节点上的内存占用不要太大,减少RDB导致的过多磁盘IO

- 适当提高repl_baklog的大小,发现slave宕机时尽快实现故障恢复,尽可能避免全量同步

- 限制一个master上的slave节点数量,如果实在是太多slave,则可以采用主-从-从链式结构,减少master压力

- 主-从-从架构图:

简述全量同步和增量同步区别?

- 全量同步:master将完整内存数据生成RDB,发送RDB到slave。后续命令则记录在repl_baklog,逐个发送给slave。

- 增量同步:slave提交自己的offset到master,master获取repl_baklog中从offset之后的命令给slave

- 1

- 2

什么时候执行全量同步?

- slave节点第一次连接master节点时

- slave节点断开时间太久,repl_baklog中的offset已经被覆盖时

- 1

- 2

什么时候执行增量同步?

- slave节点断开又恢复,并且在repl_baklog中能找到offset时

- 1

Redis哨兵集群结构

Redis提供了哨兵(Sentinel)机制来监控主从集群监控状态,确保集群的高可用性

- 哨兵的作用如下:

- 状态监控:Sentinel 会不断检查您的master和slave是否按预期工作

- 故障恢复(failover):如果master故障,Sentinel会将一个slave提升为master。当故障实例恢复后会成为slave

- 状态通知:Sentinel充当Redis客户端的服务发现来源,当集群发生failover时,会将最新集群信息推送给Redis的客户端

那么问题来了,Sentinel怎么知道一个Redis节点是否宕机呢?

- 状态监控

Sentinel基于心跳机制监测服务状态,每隔1秒向集群的每个节点发送ping命令,并通过实例的响应结果来做出判断

- 主观下线(sdown):如果某sentinel节点发现某Redis节点未在规定时间响应,则认为该节点主观下线。

- 客观下线(odown):若超过指定数量(通过quorum设置)的sentinel都认为该节点主观下线,则该节点客观下线。quorum值最好超过Sentinel节点数量的一半,Sentinel节点数量至少3台。

- 如图:

一旦发现master故障,sentinel需要在salve中选择一个作为新的master,选择依据是这样的:

- 首先会判断slave节点与master节点断开时间长短,如果超过down-after-milliseconds * 10则会排除该slave节点

- 然后判断slave节点的slave-priority值,越小优先级越高,如果是0则永不参与选举(默认都是1)。

- 如果slave-prority一样,则判断slave节点的offset值,越大说明数据越新,优先级越高

- 最后是判断slave节点的run_id大小,越小优先级越高(通过info server可以查看run_id)。

问题来了,当选出一个新的master后,该如何实现身份切换呢?

大概分为两步:

- 在多个sentinel中选举一个leader

- 由leader执行failover

- 选举leader

首先,Sentinel集群要选出一个执行failover的Sentinel节点,可以成为leader。要成为leader要满足两个条件:

- 最先获得超过半数的投票

- 获得的投票数不小于quorum值

而sentinel投票的原则有两条: - 优先投票给目前得票最多的

- 如果目前没有任何节点的票,就投给自己

比如有3个sentinel节点,s1、s2、s3,假如s2先投票: - 此时发现没有任何人在投票,那就投给自己。s2得1票

- 接着s1和s3开始投票,发现目前s2票最多,于是也投给s2,s2得3票

- s2称为leader,开始故障转移

不难看出,谁先投票,谁就会称为leader,那什么时候会触发投票呢?

答案是第一个确认master客观下线的人会立刻发起投票,一定会成为leader。

OK,sentinel找到leader以后,该如何完成failover呢?

- failover

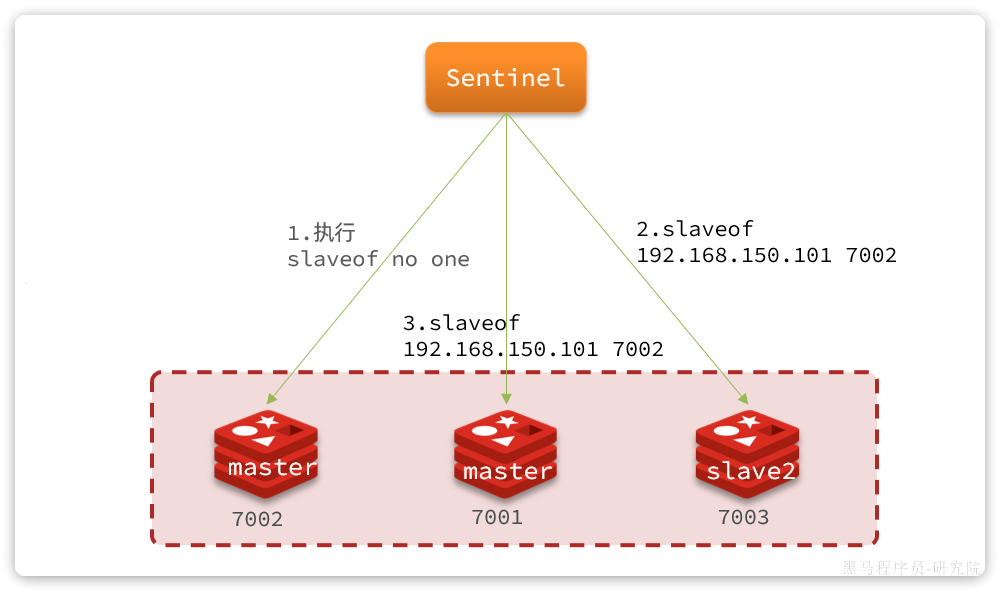

我们举个例子,有一个集群,初始状态下7001为master,7002和7003为slave:

假如master发生故障,slave1当选。则故障转移的流程如下:

1)sentinel给备选的slave1节点发送slaveof no one命令,让该节点成为master

2)sentinel给所有其它slave发送slaveof 192.168.150.101 7002 命令,让这些节点成为新master,也就是7002的slave节点,开始从新的master上同步数据。

3)最后,当故障节点恢复后会接收到哨兵信号,执行slaveof 192.168.150.101 7002命令,成为slave:

Redis哨兵集群搭建

- 编写sentinel.conf文件

sentinel announce-ip "192.168.150.101" #--改成自己的ip

sentinel monitor hmaster 192.168.150.101 7001 2 # 2 认定master下线时的quorum值

sentinel down-after-milliseconds hmaster 5000 #- hmaster:主节点名称,自定义,任意写

sentinel failover-timeout hmaster 60000

# - sentinel down-after-milliseconds hmaster 5000:声明master节点超时多久后被标记下线

# - sentinel failover-timeout hmaster 60000:在第一次故障转移失败后多久再次重试

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 创建文件夹哨兵 s1、s2、s3文件夹 并拷贝sentinel.conf

[root@Docker redis]# pwd /root/redis [root@Docker redis]# mkdir s1 s2 s3 [root@Docker redis]# ll 总用量 113588 -rw-r--r--. 1 root root 408 6月 19 19:18 docker-compose.yaml -rw-r--r--. 1 root root 116304384 6月 19 19:18 redis.tar drwxr-xr-x. 2 root root 6 6月 19 20:50 s1 drwxr-xr-x. 2 root root 6 6月 19 20:50 s2 drwxr-xr-x. 2 root root 6 6月 19 20:50 s3 -rw-r--r--. 1 root root 171 6月 19 20:50 sentinel.conf [root@Docker redis]# cp sentinel.conf s1 [root@Docker redis]# cp sentinel.conf s2 [root@Docker redis]# cp sentinel.conf s3 [root@Docker redis]# ll

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 编写docker-compose.yaml

version: "3.2" services: r1: image: redis container_name: r1 network_mode: "host" entrypoint: ["redis-server", "--port", "7001"] r2: image: redis container_name: r2 network_mode: "host" entrypoint: ["redis-server", "--port", "7002", "--slaveof", "192.168.150.101", "7001"] r3: image: redis container_name: r3 network_mode: "host" entrypoint: ["redis-server", "--port", "7003", "--slaveof", "192.168.150.101", "7001"] s1: image: redis container_name: s1 volumes: - /root/redis/s1:/etc/redis network_mode: "host" entrypoint: ["redis-sentinel", "/etc/redis/sentinel.conf", "--port", "27001"] s2: image: redis container_name: s2 volumes: - /root/redis/s2:/etc/redis network_mode: "host" entrypoint: ["redis-sentinel", "/etc/redis/sentinel.conf", "--port", "27002"] s3: image: redis container_name: s3 volumes: - /root/redis/s3:/etc/redis network_mode: "host" entrypoint: ["redis-sentinel", "/etc/redis/sentinel.conf", "--port", "27003"]

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 启动

docker compose up -d

查看日志

docker logs s1

# Sentinel ID is 8e91bd24ea8e5eb2aee38f1cf796dcb26bb88acf

# +monitor master hmaster 192.168.150.101 7001 quorum 2

* +slave slave 192.168.150.101:7003 192.168.150.101 7003 @ hmaster 192.168.150.101 7001

* +sentinel sentinel 5bafeb97fc16a82b431c339f67b015a51dad5e4f 192.168.150.101 27002 @ hmaster 192.168.150.101 7001

* +sentinel sentinel 56546568a2f7977da36abd3d2d7324c6c3f06b8d 192.168.150.101 27003 @ hmaster 192.168.150.101 7001

* +slave slave 192.168.150.101:7002 192.168.150.101 7002 @ hmaster 192.168.150.101 7001

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

Sentinel的三个作用是什么?

- 集群监控

- 故障恢复

- 状态通知

Sentinel如何判断一个redis实例是否健康?

- 每隔1秒发送一次ping命令,如果超过一定时间没有相向则认为是主观下线(sdown)

- 如果大多数sentinel都认为实例主观下线,则判定服务客观下线(odown)

故障转移步骤有哪些?

- 首先要在sentinel中选出一个leader,由leader执行failover

- 选定一个slave作为新的master,执行slaveof noone,切换到master模式

- 然后让所有节点都执行slaveof 新master

- 修改故障节点配置,添加slaveof 新master

sentinel选举leader的依据是什么?

- 票数超过sentinel节点数量1半

- 票数超过quorum数量

- 一般情况下最先发起failover的节点会当选

sentinel从slave中选取master的依据是什么?

- 首先会判断slave节点与master节点断开时间长短,如果超过down-after-milliseconds * 10则会排除该slave节点

- 然后判断slave节点的slave-priority值,越小优先级越高,如果是0则永不参与选举(默认都是1)。

- 如果slave-prority一样,则判断slave节点的offset值,越大说明数据越新,优先级越高

- 最后是判断slave节点的run_id大小,越小优先级越高(通过info server可以查看run_id)。

RedisTemplate连接哨兵集群

- 1)引入依赖

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

- 1

- 2

- 3

- 4

- 2)配置哨兵地址

spring:

redis:

sentinel:

master: hmaster # 集群名

nodes: # 哨兵地址列表

- 192.168.70.145:27001

- 192.168.70.145:27002

- 192.168.70.145:27003

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 3)配置读写分离

- 配置读写分离,让java客户端将写请求发送到master节点,读请求发送到slave节点。定义一个bean即可:

@Bean

public LettuceClientConfigurationBuilderCustomizer clientConfigurationBuilderCustomizer(){

return clientConfigurationBuilder -> clientConfigurationBuilder.readFrom(ReadFrom.REPLICA_PREFERRED);

}

- 1

- 2

- 3

- 4

这个bean中配置的就是读写策略,包括四种:

- MASTER:从主节点读取

- MASTER_PREFERRED:优先从master节点读取,master不可用才读取slave

- REPLICA:从slave节点读取

- REPLICA_PREFERRED:优先从slave节点读取,所有的slave都不可用才读取master