
NLP: SentenceTransformer usage example (loading a local sentence-transformers model)

Author: Guff_9hys | 2024-08-11 19:08:18


Download a sentence-transformers model (this example uses all-MiniLM-L6-v2) from the Hugging Face website into a local directory.
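Before pointing `from_pretrained` at a local path, it can help to confirm the download is complete. The sketch below is a hypothetical helper (`check_local_model_dir` is not part of any library). It assumes a Hugging Face-style model folder with a `config.json` plus a weights file named `model.safetensors` or `pytorch_model.bin`; these are common names, but other layouts (for example sharded weights) exist.

```python
import os

# Common single-file weight names (assumption: sharded or other layouts are not covered)
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")


def check_local_model_dir(path):
    """Hypothetical helper: return a list of problems found in a local model directory."""
    if not os.path.isdir(path):
        return [f"not a directory: {path}"]
    problems = []
    # The model config is needed by AutoModel / AutoTokenizer to pick the architecture
    if not os.path.isfile(os.path.join(path, "config.json")):
        problems.append("missing config.json")
    # At least one weights file should be present
    if not any(os.path.isfile(os.path.join(path, name)) for name in WEIGHT_FILES):
        problems.append("missing weights (model.safetensors or pytorch_model.bin)")
    return problems
```

For example, calling `check_local_model_dir('D:/Model/sentence-transformers/all-MiniLM-L6-v2')` should return an empty list when both kinds of file are present.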

1. Import the required libraries

```python
from transformers import AutoTokenizer, AutoModel
import numpy as np
import torch
import torch.nn.functional as F
```

2. Load the pretrained model

```python
# Local directory where the downloaded model files were saved
path = 'D:/Model/sentence-transformers/all-MiniLM-L6-v2'
tokenizer = AutoTokenizer.from_pretrained(path)
model = AutoModel.from_pretrained(path)
```

3. Define mean pooling

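```python
def mean_pooling(model_output, attention_mask):
    # The first element of model_output contains all token embeddings: (batch, seq_len, hidden)
    token_embeddings = model_output[0]
    # Expand the mask to the embedding shape so padding tokens contribute zero
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    # Sum real-token embeddings and divide by the real-token count (clamped to avoid division by zero)
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(
        input_mask_expanded.sum(1), min=1e-9
    )
```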
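To see why the attention mask matters, here is a NumPy-only sketch of the same masked mean pooling on a toy batch. The numbers are made up for illustration and are not real model outputs; `mean_pooling_np` is a hypothetical name. Padding positions have mask 0, so they are excluded from both the sum and the token count.

```python
import numpy as np


def mean_pooling_np(token_embeddings, attention_mask):
    """Masked mean over the token axis.

    token_embeddings: (batch, seq_len, hidden); attention_mask: (batch, seq_len) of 0/1.
    """
    # Add a trailing axis so the mask broadcasts over the hidden dimension
    mask = attention_mask[:, :, None].astype(token_embeddings.dtype)
    summed = (token_embeddings * mask).sum(axis=1)
    # Number of real tokens per sentence, clamped to avoid division by zero
    counts = np.clip(mask.sum(axis=1), 1e-9, None)
    return summed / counts


# Toy batch: 2 sentences, 3 token positions, hidden size 2
emb = np.array([
    [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]],  # last position is padding
    [[2.0, 0.0], [4.0, 2.0], [6.0, 4.0]],      # no padding
])
mask = np.array([[1, 1, 0], [1, 1, 1]])
print(mean_pooling_np(emb, mask))
```

The padded value 100 does not leak into the first sentence's average, which comes out as [2, 3]; the second sentence averages all three positions to [4, 2].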

4. Embed the sentences

```python
sentences = ['loved thisand know really bought wanted see pictures myselfIm lucky enough someone could justify buying present',
             'issue pages stickers restuck really used configurations made regular pages rather taking pieces robot back',
             'stickers dont stick well first time placing',
             'Great fun grandson loves robots',
             'would suggest younger kids son 3']

# Tokenize: pad to the longest sentence, truncate over-long ones, return PyTorch tensors
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Forward pass without gradient tracking (inference only)
with torch.no_grad():
    model_output = model(**encoded_input)

# Mean-pool the token embeddings into one vector per sentence
sentence_embeddings1 = mean_pooling(model_output, encoded_input['attention_mask'])
print("Sentence embeddings:")
print(sentence_embeddings1)

# Normalize embeddings to unit L2 length
sentence_embeddings2 = F.normalize(sentence_embeddings1, p=2, dim=1)
print("Normalized sentence embeddings:")
print(sentence_embeddings2)
```
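`F.normalize(x, p=2, dim=1)` divides each row by its L2 norm, using a small epsilon (1e-12 by default) as a lower bound on the norm. The NumPy sketch below only makes that arithmetic explicit; `l2_normalize` is a hypothetical name, not a library function.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Row-wise L2 normalization, analogous to F.normalize(x, p=2, dim=1)."""
    norms = np.linalg.norm(x, ord=2, axis=1, keepdims=True)
    # Use eps as a lower bound so all-zero rows do not cause division by zero
    return x / np.maximum(norms, eps)


x = np.array([[3.0, 4.0], [1.0, 0.0], [0.0, 0.0]])
print(l2_normalize(x))
```

After normalization every non-zero row has length 1 (the row [3, 4] becomes [0.6, 0.8]), which is why cosine similarity on `sentence_embeddings2` reduces to a plain dot product.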

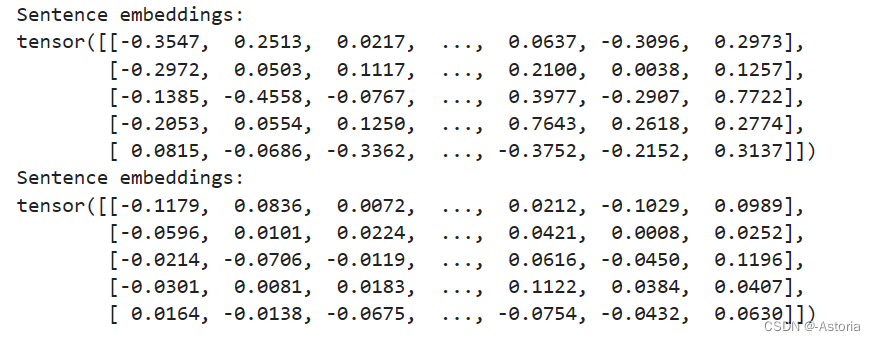

5、运行结果

6. Define a similarity function between sentences (cosine similarity)

```python
def compute_sim_score(v1, v2):
    # Cosine similarity: dot product divided by the product of the two L2 norms
    return v1.dot(v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
```
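The function computes cos(v1, v2) = v1·v2 / (‖v1‖‖v2‖), which ranges from -1 to 1 and ignores vector length. A quick NumPy check on hand-picked vectors (illustrative values only; `cosine` is a hypothetical stand-in for `compute_sim_score` on plain arrays) confirms the edge cases:

```python
import numpy as np


def cosine(v1, v2):
    # Same formula as compute_sim_score, applied to plain NumPy arrays
    return v1.dot(v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))


a = np.array([1.0, 2.0])
print(cosine(a, 2 * a))                  # parallel: approximately 1
print(cosine(a, np.array([-2.0, 1.0])))  # orthogonal: 0
print(cosine(a, -a))                     # opposite: approximately -1

# For unit-length vectors, cosine similarity equals the plain dot product
u, w = a / np.linalg.norm(a), np.array([0.6, 0.8])
print(np.isclose(cosine(u, w), u.dot(w)))  # True
```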

7. Compute sentence similarity

```python
# Compare sentences 1 and 2 (0-based indices):
# 'issue pages stickers restuck really used configurations made regular pages rather taking pieces robot back'
# 'stickers dont stick well first time placing'
compute_sim_score(sentence_embeddings1[1], sentence_embeddings1[2])
# result: tensor(0.5126)
```
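Instead of comparing one pair at a time, all pairwise cosine similarities can be computed at once by normalizing the rows and taking a matrix product. The sketch below uses a random stand-in matrix of shape (5, 384) rather than the real embeddings (assumption: with the article's tensors you would pass `sentence_embeddings1.numpy()`); `cosine_matrix` and `most_similar_pair` are hypothetical helpers.

```python
import numpy as np


def cosine_matrix(emb):
    """Pairwise cosine similarity for the rows of emb: (n, d) -> (n, n)."""
    unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    return unit @ unit.T


def most_similar_pair(emb):
    """Hypothetical helper: (i, j, score) for the most similar distinct rows, with i < j."""
    sim = cosine_matrix(emb)
    # Only look above the diagonal so a sentence is never matched with itself
    i, j = np.triu_indices(sim.shape[0], k=1)
    best = np.argmax(sim[i, j])
    return int(i[best]), int(j[best]), float(sim[i[best], j[best]])


rng = np.random.default_rng(0)
emb = rng.normal(size=(5, 384))  # stand-in for sentence_embeddings1
emb[3] = emb[1] * 2.0            # make rows 1 and 3 point in the same direction
print(most_similar_pair(emb))
```

Entry `[1, 2]` of `cosine_matrix` corresponds to the single `compute_sim_score(sentence_embeddings1[1], sentence_embeddings1[2])` call above.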

8. Check the shape of the embeddings

```python
sentence_embeddings1.shape
# torch.Size([5, 384])
```
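In `torch.Size([5, 384])`, 5 is the number of input sentences and 384 is the embedding dimension of all-MiniLM-L6-v2; mean pooling has removed the token axis, so the padded sequence length no longer appears. Assuming the default float32 dtype (4 bytes per value), the arithmetic below estimates how much memory such an embedding matrix needs. `embedding_storage_bytes` is a hypothetical helper.

```python
import numpy as np


def embedding_storage_bytes(num_sentences, dim=384, dtype=np.float32):
    """Bytes needed to hold a (num_sentences, dim) embedding matrix."""
    return num_sentences * dim * np.dtype(dtype).itemsize


print(embedding_storage_bytes(5))          # 5 * 384 * 4 = 7680
print(embedding_storage_bytes(1_000_000))  # 1536000000 bytes, about 1.5 GB
```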

Summary and next steps:

Next, I plan to try embedding sentences from real user reviews of the project.
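With real review data, encoding everything in one call can exhaust memory, so a common approach is to process the sentences in fixed-size batches. This sketch is illustrative only: `embed_in_batches` and `fake_embed` are hypothetical names, and in practice `embed_fn` would wrap the tokenizer, model forward pass, and `mean_pooling` steps from this article.

```python
import numpy as np


def embed_in_batches(texts, embed_fn, batch_size=32):
    """Hypothetical helper: call embed_fn on fixed-size chunks and stack the results.

    embed_fn takes a list of strings and returns an array of shape (len(chunk), dim).
    """
    if not texts:
        raise ValueError("texts must be non-empty")
    chunks = []
    for start in range(0, len(texts), batch_size):
        chunks.append(np.asarray(embed_fn(texts[start:start + batch_size])))
    # Stack per-batch results back into one (len(texts), dim) matrix
    return np.vstack(chunks)


# Stand-in embedder for demonstration only: 2-d "embedding" = (characters, words)
def fake_embed(batch):
    return np.array([[len(t), len(t.split())] for t in batch], dtype=np.float32)


reviews = ["Great fun grandson loves robots", "stickers dont stick well first time placing"] * 5
emb = embed_in_batches(reviews, fake_embed, batch_size=4)
print(emb.shape)  # (10, 2)
```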
