Text Preprocessing Methods

Outline

Estimates state that 70%–85% of the world’s data is text (unstructured data) [1]. New deep learning language models (transformers) have caused explosive growth in industry applications [5, 6, 11].

This blog is not an introduction to Natural Language Processing. Instead, it assumes you are familiar with noise reduction and normalization of text. It covers text preprocessing up to producing tokens and lemmas from the text.

We stop just before feeding the sequence of tokens into a natural language model. Using that sequence of tokens to accomplish a specific model task is not covered here.

In production-grade Natural Language Processing (NLP), fast text pre-processing (noise cleaning and normalization) is critical. In this blog:

I discuss packages we use for production-level NLP;

I detail production-level NLP text preprocessing tasks with Python code and packages;

Finally, I report benchmarks for NLP text pre-processing tasks.

Dividing NLP Processing into Two Steps

We segment NLP into two major steps (for the convenience of this article):

1. Text pre-processing into tokens. We clean the text (noise removal) and then normalize it. The goal is to transform the text into a corpus that any NLP model can use, a goal that was rarely achieved before the introduction of the transformer [2].

2. The corpus (text preprocessed into a sequence of tokens) is then the input into NLP models for training or prediction.

The rest of this article is devoted to noise removal and normalization of text into tokens/lemmas (Step 1: text pre-processing).

Noise removal deletes or transforms things in the text that degrade the NLP task model.

Noise removal is usually NLP task-dependent. For example, e-mail addresses may or may not be removed depending on whether the task is text classification or text redaction.

I show both replacement and removal of the noise.
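
As a minimal sketch of the difference between removing and replacing noise, the snippet below handles e-mail addresses with a regular expression. The pattern, the function names `remove_emails` and `replace_emails`, and the `EMAIL` placeholder are illustrative assumptions, not code from the original article.

```python
import re

# Deliberately simple e-mail pattern (an assumption for illustration);
# it is not a full RFC 5322 matcher.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def remove_emails(text: str) -> str:
    """Delete e-mail addresses outright, e.g. for a redaction task."""
    return EMAIL_RE.sub("", text)


def replace_emails(text: str, placeholder: str = "EMAIL") -> str:
    """Swap e-mail addresses for a placeholder token, e.g. for classification."""
    return EMAIL_RE.sub(placeholder, text)


sample = "Contact jane.doe@example.com for details."
print(remove_emails(sample))   # Contact  for details.
print(replace_emails(sample))  # Contact EMAIL for details.
```

Removal leaves a double space behind, which is one reason whitespace normalization usually runs after noise removal.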

Normalizing the corpus means transforming the text into a common form. The most frequent example is transforming all characters to lowercase.
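
A small sketch of normalization, assuming lowercasing is combined with two other common steps that the text does not prescribe: Unicode NFKC folding and whitespace collapsing. The function name `normalize` is hypothetical.

```python
import re
import unicodedata

WHITESPACE_RE = re.compile(r"\s+")


def normalize(text: str) -> str:
    """Bring text into one common form."""
    # Assumption: fold compatibility characters (e.g. full-width letters).
    text = unicodedata.normalize("NFKC", text)
    # The lowercase step named in the text.
    text = text.lower()
    # Assumption: collapse runs of whitespace and trim the ends.
    return WHITESPACE_RE.sub(" ", text).strip()


print(normalize("  The  QUICK\tBrown Fox  "))  # the quick brown fox
```

Whether lowercasing helps depends on the downstream task; case can carry signal for tasks such as named-entity recognition.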

In follow-on blogs, we will cover different deep learning language models and Transformers (Steps 2-n) fed by the corpus token/lemma stream.

NLP Text Pre-Processing Package Factoids

There are many NLP packages available. We use spaCy [2], textacy [4], Hugging Face transformers [5], and regex [7] in most of our NLP production applications. The following are some of the “factoids” we used in our decision process.

Note: The following “factoids” may be biased. That is why we refer to them as “factoids.”

NLTK [3]

NLTK is a string processing library. All the tools take strings as input and return strings or lists of strings as output [3].

NLTK is a good choice if you want to explore different NLP approaches with a corpus of fewer than a million words.

NLTK is a bad choice if you want to go into production with your NLP application [3].

Regex

The use of regex is pervasive throughout our text-preprocessing code. Regex is a fast string processor. Regex, in various forms, has been around for over 50 years. Regex support is part of the standard library of Java and Python, and is built into the syntax of other languages, including Perl and ECMAScript (JavaScript).
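
A short sketch of the kind of regex-based cleaning described here, using Python's standard `re` module. The patterns and the function name `strip_html_and_whitespace` are assumptions chosen for illustration; a regex like this is not a real HTML parser and only suits simple tag stripping.

```python
import re

# Compile once at import time and reuse; this avoids repeated pattern
# lookups when the same cleaning runs over many documents.
HTML_TAG_RE = re.compile(r"<[^>]+>")
MULTI_SPACE_RE = re.compile(r"\s+")


def strip_html_and_whitespace(text: str) -> str:
    """Drop HTML-like tags, then collapse whitespace runs to one space."""
    text = HTML_TAG_RE.sub("", text)
    return MULTI_SPACE_RE.sub(" ", text).strip()


print(strip_html_and_whitespace("<p>Hello,   <b>world</b>!</p>"))  # Hello, world!
```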

spaCy [2]

spaCy is a moderate choice if you want to research different NLP models with a corpus whose length is greater than a million words.

If you use a selection from spaCy [3], Hugging Face [5], fast.ai [13], and GPT-3 [6], then you are performing SOTA (state-of-the-art) research of different NLP models (my opinion at the time of writing this blog).

spaCy is a good choice if you want to go into production with your NLP application.

spaCy is an NLP library implemented in both Python and Cython. Because of Cython, parts of spaCy run faster than they would if implemented in pure Python [3];

spaCy is the fastest package we know of for NLP operations;

spaCy is available for the MS Windows, macOS, and Ubuntu operating systems [3];

spaCy runs natively on Nvidia GPUs [3];

explosion/spaCy has 16,900 stars on GitHub (7/22/2020);

spaCy has 138 public repository implementations on GitHub;

spaCy comes with pre-trained statistical models and word vectors;

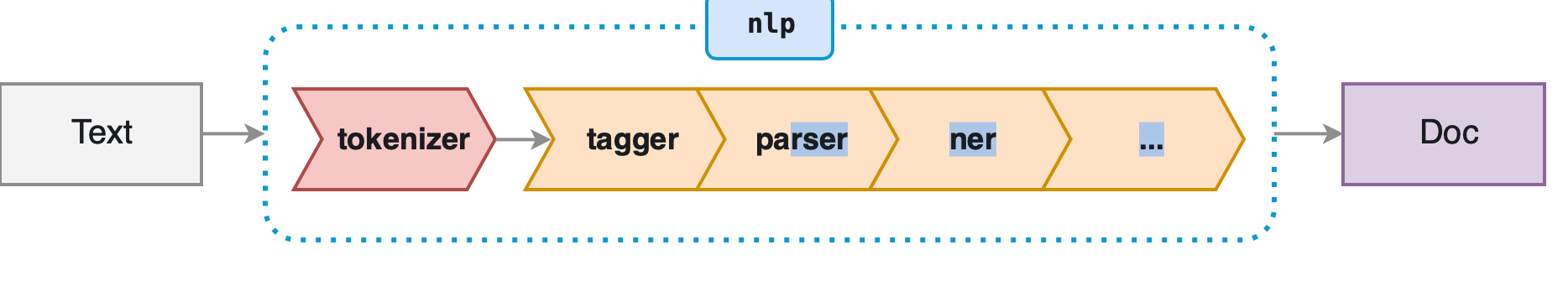

spaCy transforms text into document objects, vocabulary objects, word-token objects, and other useful objects resulting from parsing the text;

The Doc class has several useful attributes and methods. Significantly, you can create new operations on these objects as well as extend a class with new attributes (adding to the spaCy pipeline);

spaCy features tokenization for 50+ languages.
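
The Doc and token objects, and the ability to extend Doc with new attributes, can be sketched as follows. This is a minimal sketch under stated assumptions: it uses a blank English pipeline (`spacy.blank("en")`, tokenizer only, no pre-trained model download), and the extension name `n_emails` and the helpers `count_emails` and `tokenize_and_count` are hypothetical. The import is guarded so the snippet degrades to a no-op where spaCy is not installed.

```python
try:
    import spacy
    from spacy.tokens import Doc
except ImportError:  # spaCy not installed; the sketch then returns None
    spacy = None


def count_emails(doc):
    """Getter for a custom Doc attribute: number of e-mail-like tokens."""
    return sum(1 for token in doc if token.like_email)


def tokenize_and_count(text):
    """Return (token texts, e-mail count), or None without spaCy."""
    if spacy is None:
        return None
    nlp = spacy.blank("en")  # tokenizer-only English pipeline
    # Register the hypothetical extension; force=True allows re-running.
    Doc.set_extension("n_emails", getter=count_emails, force=True)
    doc = nlp(text)
    tokens = [token.text for token in doc if not token.is_space]
    return tokens, doc._.n_emails


print(tokenize_and_count("Mail bob@example.com now"))
```

A blank pipeline does not produce lemmas; those require a pipeline with a lemmatizer component, such as one of spaCy's pre-trained models.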

Creating long_s Practice Text String

We create long_s, a long string that has extra whitespace, emoji, email addresses, $ symbols, HTML tags, punctuation, and other text that may or may not be noise for the downstream NLP task and/or model.

MULPIPIER = int(3.8e3)
text_l = 300
%time long_s = ':(
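
The original snippet is cut off above, so its actual string content is not recoverable. As a hypothetical stand-in only, the sketch below builds a comparable practice string in plain Python; the names `MULTIPLIER` and `base_s`, the sample text, and the multiplier value are all assumptions, and `time.perf_counter` replaces the IPython `%time` magic.

```python
import time

# Hypothetical values; the original multiplier and text are not reproduced.
MULTIPLIER = 1_000
base_s = (
    ":( 😻  Email me at jane.doe@example.com!   "
    "<b>It costs $19.99</b>\n\n"
)

start = time.perf_counter()
long_s = base_s * MULTIPLIER  # repeat the noisy sample many times
elapsed = time.perf_counter() - start

print(f"{len(long_s):,} characters built in {elapsed:.6f} s")
```

The sample deliberately mixes extra whitespace, an emoji, an e-mail address, a $ amount, HTML tags, and punctuation, so each cleaning step has something to act on.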