- 1抖音视频无水印爬虫下载工具|视频关键词批量采集软件_批量提取抖音视频名称

- 2Bert 自然语言处理模型基础知识——使用 Transformer架构和自注意力机制的神经网络结构_transformer生成神经网络模型

- 3NLP--事件抽取模型对比_ddparser 依存句法

- 4CUMCM历年赛题汇总_全国大学生数学建模竞赛(cumcm)近年题目

- 5如何搭建一个 tts 语言合成模型_tts模型训练

- 6基于知识的推荐系统(案例学习)

- 7【一】Java快速入门_学习java

- 8awesome系列网址_awesome cnblog

- 9【AIGC】单图换脸离线版软件包及使用方法_ai换脸替换工具离线版怎么用

- 10多模态推荐系统综述

Actor-Critic_actor-critic可以在每一步之后都进行更新吗

赞

踩

算法思想

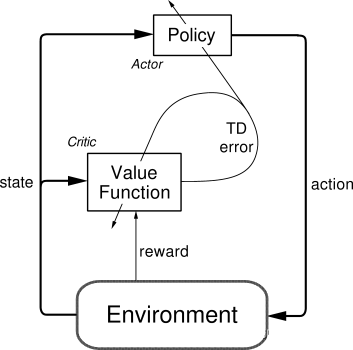

Actor-Critic是一种基于策略和价值的强化学习算法,原来 Actor-Critic 的 Actor 的前生是 Policy Gradients ,这能让它毫不费力地在连续动作中选取合适的动作,,而 Q-learning 做这件事会瘫痪。原来 Actor Critic 中的 Critic 的前生是 Q-learning 或者其他的以值(value)为基础的学习法 , 能进行单步更新,而传统的 Policy Gradients 则是回合更新,这降低了学习效率。

Actor 和 Critic 他们都能用不同的神经网络来代替。其中Actor基于策略函数,负责生成动作(Action)并和环境交互,Critic基于价值函数,负责评估Actor的表现,Critic通过学习环境和奖励之间的关系, 能看到现在所处状态的潜在奖励, 所以用它来指点 Actor 便能使 Actor 每一步都在更新, 如果使用单纯的 Policy Gradients,Actor 只能等到回合结束才能开始更新。即在Actor-Critic算法中,我们要做两组近似,一组是策略函数的近似,另一组是价值函数的近似。

总的来说,就是Critic通过Q网络计算状态的最优价值

v

t

v_t

vt, 而Actor利用

v

t

v_t

vt这个最优价值迭代更新策略函数的参数

θ

\theta

θ,进而选择动作,并得到反馈和新的状态,Critic使用反馈和新的状态更新Q网络参数

w

w

w, 在后面Critic会使用新的网络参数

w

w

w来帮Actor计算状态的最优价值

v

t

v_t

vt。

缺点:

Actor-Critic 涉及到了两个神经网络,而且每次都是在连续状态中更新参数,每次参数更新前后都存在相关性,导致神经网络只能片面的看待问题,甚至导致神经网络学不到东西。

算法实现

- Actor 网络

class Actor(): def __init__(self, env): # init some parameters self.state_dim = env.observation_space.shape[0] # observation特征数量 self.action_dim = env.action_space.n # action特征数量 self.model = tf.keras.Sequential([ tf.keras.layers.Dense(128, activation='relu', use_bias=True), tf.keras.layers.Dense(self.action_dim, use_bias=True) ]) actor_optimizer = tf.keras.optimizers.Adam(1e-3) self.model.compile( loss='categorical_crossentropy', optimizer=actor_optimizer ) # 选择动作 def choose_action(self, observation): prob_weights = self.model.predict(observation[np.newaxis, :]) prob_weights = tf.nn.softmax(prob_weights) action = np.random.choice(range(prob_weights.shape[1]), p=prob_weights.numpy().ravel()) return action # 学习 def learn(self, state, action, td_error): s = state[np.newaxis, :] one_hot_action = np.zeros(self.action_dim) one_hot_action[action] = 1 a = one_hot_action[np.newaxis, :] self.model.fit(s, td_error * a, verbose=0) def saveModel(self): path = os.path.join('model', '_'.join([File, ALG_NAME, ENV_NAME])) if not os.path.exists(path): os.makedirs(path) self.model.save_weights(os.path.join(path, 'actor.tf'), save_format='tf') print('Saved weights.') def loadModel(self): path = os.path.join('model', '_'.join([File, ALG_NAME, ENV_NAME])) if os.path.exists(path): self.model.load_weights(os.path.join(path, 'actor.tf')) print('Load weights!') else: print("No model file find, please train model first...")

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- Critic 网络

class Critic(): def __init__(self, env): self.state_dim = env.observation_space.shape[0] # observation特征数量 self.action_dim = env.action_space.n # action特征数量 self.model = tf.keras.Sequential([ tf.keras.layers.Dense(128, activation='relu', use_bias=True), tf.keras.layers.Dense(1, use_bias=True) ]) critic_optimizer = tf.keras.optimizers.Adam(1e-3) self.model.compile(loss='mse', optimizer=critic_optimizer) def train_Q_network(self, state, reward, next_state, done): s, s_ = state[np.newaxis, :], next_state[np.newaxis, :] V = self.model(s) V_ = self.model(s_) td_error = reward + GAMMA * V_ - V value_target = reward if done else reward + GAMMA * np.array(self.model(s_))[0][0] value_target = [[value_target]] self.model.fit(s, np.array(value_target), verbose=0) return td_error def saveModel(self): path = os.path.join('model', '_'.join([File, ALG_NAME, ENV_NAME])) if not os.path.exists(path): os.makedirs(path) self.model.save_weights(os.path.join(path, 'critic.tf'), save_format='tf') print('Saved weights.') def loadModel(self): path = os.path.join('model', '_'.join([File, ALG_NAME, ENV_NAME])) if os.path.exists(path): self.model.load_weights(os.path.join(path, 'critic.tf')) print('Load weights!') else: print("No model file find, please train model first...")

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- main

# Hyper Parameters GAMMA = 0.95 # discount factor LEARNING_RATE = 0.01 # learning rate File = 'ActorCritic-1.1' ALG_NAME = 'AC' ENV_NAME = 'CartPole-v0' def main(): EPISODE = 200 # Episode limitation STEP = 1000 # Step limitation in an episode env = gym.make(ENV_NAME) actor = Actor(env) critic = Critic(env) all_rewards = [] for episode in range(EPISODE): # initialize task state = env.reset() total_reward = 0 # Train for step in range(STEP): action = actor.choose_action(state) # e-greedy action for train next_state, reward, done, _ = env.step(action) td_error = critic.train_Q_network(state, reward, next_state, done) # gradient = grad[r + gamma * V(s_) - V(s)] actor.learn(state, action, td_error) # true_gradient = grad[logPi(s,a) * td_error] total_reward += reward state = next_state if done: all_rewards.append(total_reward) break print("total_reward---------------", total_reward) plt.plot(all_rewards) plt.show() actor.saveModel() critic.saveModel()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

参考文章

https://www.cnblogs.com/pinard/p/10272023.html

https://mofanpy.com/tutorials/machine-learning/reinforcement-learning/intro-AC/