
SRGAN Super-Resolution Reconstruction

Author: 小丑西瓜9 | 2024-03-26 10:52:23


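Introduction

SRGAN (Super-Resolution Generative Adversarial Network) is a deep learning network that uses a generative adversarial network (GAN) to perform image super-resolution reconstruction.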

Results

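Network Architecture

The network consists of a generator network and a discriminator network.

Generator: input + Conv(channels = 64, stride = 1) + ReLU + B residual blocks (B = 16 in the parameter listing below) + 2 upsampling ("Deconv") steps, each 2×, for 4× upscaling in total

Discriminator: input + Conv(channels = 64, stride = 1) + LeakyReLU + repeated conv blocks (Conv + LeakyReLU + BN) that downsample the feature maps + Dense + Sigmoid (the output is compared against 1 for real or 0 for fake)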
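A pure-Python sketch that traces spatial sizes through both networks, assuming 'same' padding (stride 1 keeps the size, stride 2 halves it) and the stride/channel layout shown in the parameter listings further down (strides alternating 1, 2 and channels doubling from 64 to 512). The function names are illustrative only; the sketch reproduces the 512 → 32 reduction in the discriminator and the 128 → 512 upscaling in the generator:

```python
import math

def conv_out(size, stride):
    """Spatial size after a 'same'-padded convolution: ceil(size / stride)."""
    return math.ceil(size / stride)

def discriminator_shapes(hr_size=512, base=64):
    """Trace (height, channels) after each of the 8 discriminator conv blocks.

    Assumed layout: strides 1, 2, 1, 2, ... and channels 64, 64, 128, 128,
    256, 256, 512, 512 (base * 2 ** (i // 2)).
    """
    strides = [1, 2] * 4
    channels = [base * 2 ** (i // 2) for i in range(8)]
    size, trace = hr_size, []
    for stride, c in zip(strides, channels):
        size = conv_out(size, stride)
        trace.append((size, c))
    return trace

def generator_out_size(lr_size=128, n_upsample=2):
    """Each upsampling step doubles height and width (2 steps -> 4x)."""
    return lr_size * 2 ** n_upsample

if __name__ == "__main__":
    for size, c in discriminator_shapes():
        print(f"{size}x{size}x{c}")
    print("generator output:", generator_out_size())
```

Because the discriminator keeps a 32×32 spatial map instead of flattening, its final Dense + Sigmoid layers produce one real/fake score per patch, which is why the labels in the training code are arrays rather than single numbers.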

Loss Functions

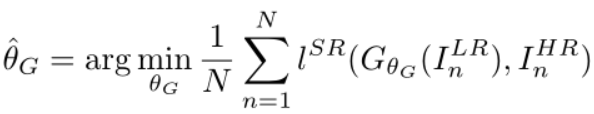

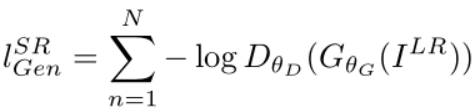

1. Optimization objective
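For reference, the original SRGAN paper (Ledig et al., 2017) writes the training objective as the standard GAN min-max problem, where the generator $G_{\theta_G}$ maps a low-resolution image $I^{LR}$ to a super-resolved image and the discriminator $D_{\theta_D}$ outputs the probability that its input is a real high-resolution image $I^{HR}$:

$$
\min_{\theta_G}\max_{\theta_D}\;
\mathbb{E}_{I^{HR}\sim p_{\mathrm{train}}(I^{HR})}\left[\log D_{\theta_D}(I^{HR})\right]
+\mathbb{E}_{I^{LR}\sim p_{G}(I^{LR})}\left[\log\left(1-D_{\theta_D}\left(G_{\theta_G}(I^{LR})\right)\right)\right]
$$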

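2. Loss function

Total loss = content loss + adversarial loss + regularization term

The regularization term is based on the total variation (TV) loss; it suppresses noise and makes the image smoother.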

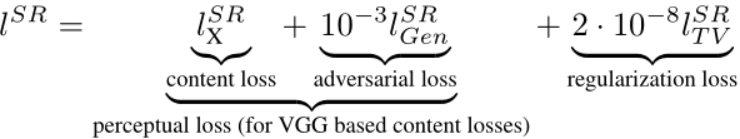
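A minimal pure-Python sketch of a total variation penalty, assuming the common anisotropic L1 form (sum of absolute differences between vertically and horizontally adjacent pixels) on a single-channel image stored as a list of rows. The article does not spell out its exact variant; some implementations square the differences instead:

```python
def tv_loss(img):
    """Anisotropic total variation of a 2-D image given as a list of rows.

    Sums |difference| between each pixel and its lower and right neighbours:
    noisy images score high, smooth images score low.
    """
    h, w = len(img), len(img[0])
    vertical = sum(abs(img[i + 1][j] - img[i][j]) for i in range(h - 1) for j in range(w))
    horizontal = sum(abs(img[i][j + 1] - img[i][j]) for i in range(h) for j in range(w - 1))
    return vertical + horizontal

flat = [[0.5, 0.5], [0.5, 0.5]]
checker = [[0.0, 1.0], [1.0, 0.0]]
print(tv_loss(flat), tv_loss(checker))  # 0.0 4.0
```

A constant image has zero TV while a checkerboard is heavily penalized, which is why adding this term pushes the generator toward smoother outputs.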

3. Perceptual loss

Perceptual loss = content loss + adversarial loss
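For reference, the SRGAN paper does not add these two terms with equal weight: it scales the adversarial term by $10^{-3}$, with $l^{SR}_{X}$ the content loss and $l^{SR}_{Gen}$ the adversarial loss:

$$
l^{SR} = l^{SR}_{X} + 10^{-3}\, l^{SR}_{Gen}
$$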

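Content loss:

Features are extracted with VGG, and the Euclidean distance between the features of the generated image (fake) and the ground-truth image (gt) is computed.

Although the traditional MSE loss achieves a high peak signal-to-noise ratio (PSNR), the results lack high-frequency content, which makes the generated images overly smooth. The VGG loss is therefore used instead.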

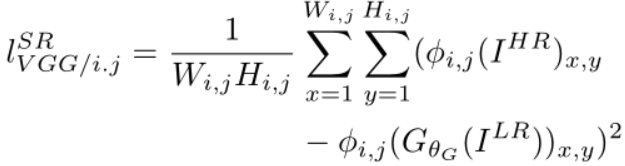
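The difference between a pixel-space MSE loss and a feature-space (VGG-style) loss can be sketched in pure Python. `toy_features` below is a hypothetical stand-in for a VGG feature extractor (it only takes differences of neighbouring pixels, i.e. crude edges); a real implementation would compare feature maps from a pretrained VGG19. PSNR is computed as $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$:

```python
import math

def mse(a, b):
    """Mean squared error between two equally long lists of values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def psnr(a, b, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    return 10 * math.log10(max_val ** 2 / mse(a, b))

def toy_features(pixels):
    """Hypothetical stand-in for VGG features: differences of neighbouring pixels."""
    return [pixels[i + 1] - pixels[i] for i in range(len(pixels) - 1)]

def content_loss(fake, gt, features=toy_features):
    """Content loss as a mean squared Euclidean distance in feature space."""
    return mse(features(fake), features(gt))

gt = [0.0, 1.0, 0.0, 1.0]      # a 1-D row with sharp, high-frequency detail
blurry = [0.5, 0.5, 0.5, 0.5]  # an over-smoothed reconstruction

print(round(mse(blurry, gt), 4))           # 0.25
print(round(psnr(blurry, gt), 2))          # 6.02
print(round(content_loss(blurry, gt), 4))  # 1.0
```

The blurry row is the pixel-wise average of the detail, which is exactly the kind of output an MSE-trained generator drifts toward; the feature-space loss here is driven entirely by the missing edges.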

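Adversarial loss:

The discriminator scores the samples produced by the generator; the generator is penalized when the discriminator recognizes its outputs as fake.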
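A minimal sketch of the generator's adversarial loss as defined in the SRGAN paper, $\sum_n -\log D_{\theta_D}(G_{\theta_G}(I^{LR}_n))$, assuming `d_outputs` holds the discriminator's sigmoid probabilities that each generated image is real:

```python
import math

def generator_adv_loss(d_outputs, eps=1e-12):
    """Sum over generated samples of -log D(G(I_LR)).

    d_outputs: discriminator probabilities that each generated image is real.
    eps guards against log(0) when the discriminator outputs exactly 0.
    """
    return sum(-math.log(max(p, eps)) for p in d_outputs)

print(generator_adv_loss([0.9, 0.9]))  # small: the discriminator is fooled
print(generator_adv_loss([0.1, 0.1]))  # large: the discriminator spots the fakes
```

The paper minimizes $-\log D(G(I^{LR}))$ rather than $\log(1 - D(G(I^{LR})))$ because it gives the generator stronger gradients when the discriminator confidently rejects its outputs.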

Training Process

The generator and the discriminator are trained alternately.

Discriminator:

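```python
import numpy as np

# Excerpt from the training loop of an SRGAN class (self.* are its members).
# Real images imgs_hr are labeled valid = 1; generated images fake_hr are labeled fake = 0.
imgs_hr, imgs_lr = self.data_loader.load_data(batch_size)
fake_hr = self.generator.predict(imgs_lr)

# Label arrays must match the discriminator output: (32, 32, 1) per 512x512 image
disc_patch = (32, 32, 1)
valid = np.ones((batch_size,) + disc_patch)
fake = np.zeros((batch_size,) + disc_patch)

d_loss_real = self.discriminator.train_on_batch(imgs_hr, valid)
d_loss_fake = self.discriminator.train_on_batch(fake_hr, fake)
d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)  # mean of the real and fake losses
```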

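What `d_loss` averages can be sketched in pure Python, assuming the discriminator is compiled with binary cross-entropy (the classic GAN choice; some implementations use MSE instead) and that its (32, 32, 1) output map is flattened into a list of per-patch probabilities. The sample values are made up for illustration:

```python
import math

def bce(preds, label, eps=1e-12):
    """Mean binary cross-entropy of probabilities `preds` against a constant label (1 or 0)."""
    total = 0.0
    for p in preds:
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += -(label * math.log(p) + (1 - label) * math.log(1 - p))
    return total / len(preds)

def discriminator_loss(d_real, d_fake):
    """0.5 * (loss on real images labeled 1 + loss on generated images labeled 0)."""
    return 0.5 * (bce(d_real, 1) + bce(d_fake, 0))

# Hypothetical per-patch outputs for one real and one generated image
d_real = [0.9, 0.8, 0.95, 0.85]
d_fake = [0.1, 0.3, 0.05, 0.2]
print(discriminator_loss(d_real, d_fake))
```

In the Keras code, `train_on_batch` returns a list such as `[loss, accuracy]` when metrics are configured, which is why `np.add` is used there to average the two results element-wise.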

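Generator:

```python
import numpy as np
from keras.layers import Input
from keras.models import Model

# Combined model, built once: D's verdict `validity` on fake_hr is compared with valid = 1,
# and the VGG features of fake_hr are compared with image_features of the real HR image
img_lr = Input(shape=(128, 128, 3))
fake_hr = self.generator(img_lr)
fake_features = self.vgg(fake_hr)
self.discriminator.trainable = False  # freeze D so that only the generator is updated here
validity = self.discriminator(fake_hr)
self.combined = Model(img_lr, [validity, fake_features])
# (self.combined must be compiled with one loss per output before training)

# Training step, inside the loop
imgs_hr, imgs_lr = self.data_loader.load_data(batch_size)
valid = np.ones((batch_size, 32, 32, 1))  # "real" labels shaped like the discriminator output
image_features = self.vgg.predict(imgs_hr)
g_loss = self.combined.train_on_batch(imgs_lr, [valid, image_features])
```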

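Keras reduces the combined model's two output losses to one scalar using the `loss_weights` argument of `Model.compile`. A minimal pure-Python sketch of that weighted sum, assuming binary cross-entropy for `validity`, MSE for the VGG features, and the SRGAN paper's $10^{-3}$ weight on the adversarial term (the article does not show how `self.combined` is compiled, so these choices are assumptions):

```python
import math

def bce_to_real(preds, eps=1e-12):
    """Mean binary cross-entropy against the 'real' label 1, i.e. the mean of -log(p)."""
    return sum(-math.log(max(p, eps)) for p in preds) / len(preds)

def mse(a, b):
    """Mean squared error between two equally long feature lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def combined_loss(validity, fake_features, image_features, loss_weights=(1e-3, 1.0)):
    """Weighted sum of both output losses, like compile(loss_weights=[w_adv, w_content])."""
    w_adv, w_content = loss_weights
    return w_adv * bce_to_real(validity) + w_content * mse(fake_features, image_features)

# Hypothetical values: flattened D outputs for fake_hr and flattened VGG features
validity = [0.2, 0.4]
fake_features = [0.1, 0.5, 0.9]
image_features = [0.0, 0.5, 1.0]
print(combined_loss(validity, fake_features, image_features))
```

With such a small adversarial weight, the content (VGG) term dominates early training and the adversarial term mainly nudges the output toward realistic textures.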

Network Parameters

Discriminator parameters

input = generated image (512, 512, 3) --> output = (32, 32, 1)

```
Layer (type)                                Output Shape            Param #
===========================================================================
input_3 (InputLayer)                        (None, 512, 512, 3)     0
conv2d_1 (Conv2D)                           (None, 512, 512, 64)    1792
leaky_re_lu_1 (LeakyReLU)                   (None, 512, 512, 64)    0
conv2d_2 (Conv2D)                           (None, 256, 256, 64)    36928
leaky_re_lu_2 (LeakyReLU)                   (None, 256, 256, 64)    0
batch_normalization_1 (BatchNormalization)  (None, 256, 256, 64)    256
conv2d_3 (Conv2D)                           (None, 256, 256, 128)   73856
leaky_re_lu_3 (LeakyReLU)                   (None, 256, 256, 128)   0
batch_normalization_2 (BatchNormalization)  (None, 256, 256, 128)   512
conv2d_4 (Conv2D)                           (None, 128, 128, 128)   147584
leaky_re_lu_4 (LeakyReLU)                   (None, 128, 128, 128)   0
batch_normalization_3 (BatchNormalization)  (None, 128, 128, 128)   512
conv2d_5 (Conv2D)                           (None, 128, 128, 256)   295168
leaky_re_lu_5 (LeakyReLU)                   (None, 128, 128, 256)   0
batch_normalization_4 (BatchNormalization)  (None, 128, 128, 256)   1024
conv2d_6 (Conv2D)                           (None, 64, 64, 256)     590080
leaky_re_lu_6 (LeakyReLU)                   (None, 64, 64, 256)     0
batch_normalization_5 (BatchNormalization)  (None, 64, 64, 256)     1024
conv2d_7 (Conv2D)                           (None, 64, 64, 512)     1180160
leaky_re_lu_7 (LeakyReLU)                   (None, 64, 64, 512)     0
batch_normalization_6 (BatchNormalization)  (None, 64, 64, 512)     2048
conv2d_8 (Conv2D)                           (None, 32, 32, 512)     2359808
leaky_re_lu_8 (LeakyReLU)                   (None, 32, 32, 512)     0
batch_normalization_7 (BatchNormalization)  (None, 32, 32, 512)     2048
dense_1 (Dense)                             (None, 32, 32, 1024)    525312
leaky_re_lu_9 (LeakyReLU)                   (None, 32, 32, 1024)    0
dense_2 (Dense)                             (None, 32, 32, 1)       1025
===========================================================================
Total params: 5,219,137
Trainable params: 5,215,425
Non-trainable params: 3,712
```

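The discriminator counts in the listing above can be checked by hand: a Conv2D layer with a k×k kernel has k·k·C_in·C_out weights plus C_out biases, a Dense layer has n_in·n_out + n_out, and BatchNormalization stores 4 values per channel (gamma and beta are trainable; the moving mean and moving variance are not, which is where the non-trainable parameters come from). A pure-Python sketch, assuming 3×3 kernels throughout (consistent with the listed counts):

```python
def conv2d_params(k, c_in, c_out):
    """Conv2D with bias: k*k*c_in*c_out weights + c_out biases."""
    return k * k * c_in * c_out + c_out

def bn_params(c):
    """BatchNormalization: gamma, beta, moving mean, moving variance per channel."""
    return 4 * c

def dense_params(n_in, n_out):
    """Dense layer applied to the last axis: n_in*n_out weights + n_out biases."""
    return n_in * n_out + n_out

def discriminator_params():
    """Return (total, trainable, non_trainable) for the discriminator listed above."""
    channels = [64, 64, 128, 128, 256, 256, 512, 512]
    total, non_trainable, c_in = 0, 0, 3
    for i, c in enumerate(channels):
        total += conv2d_params(3, c_in, c)
        if i > 0:  # the first conv block has no BatchNormalization
            total += bn_params(c)
            non_trainable += 2 * c  # moving mean + moving variance
        c_in = c
    total += dense_params(512, 1024) + dense_params(1024, 1)
    return total, total - non_trainable, non_trainable

print(discriminator_params())  # (5219137, 5215425, 3712)
```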

Generator parameters

input = original image (128, 128, 3) --> output = (512, 512, 3)

```
Layer (type)                                 Output Shape            Param #  Connected to
====================================================================================================
input_4 (InputLayer)                         (None, 128, 128, 3)     0
conv2d_9 (Conv2D)                            (None, 128, 128, 64)    15616    input_4[0][0]
activation_1 (Activation)                    (None, 128, 128, 64)    0        conv2d_9[0][0]
conv2d_10 (Conv2D)                           (None, 128, 128, 64)    36928    activation_1[0][0]
batch_normalization_8 (BatchNormalization)   (None, 128, 128, 64)    256      conv2d_10[0][0]
activation_2 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_8[0][0]
conv2d_11 (Conv2D)                           (None, 128, 128, 64)    36928    activation_2[0][0]
batch_normalization_9 (BatchNormalization)   (None, 128, 128, 64)    256      conv2d_11[0][0]
add_1 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_9[0][0], activation_1[0][0]
conv2d_12 (Conv2D)                           (None, 128, 128, 64)    36928    add_1[0][0]
batch_normalization_10 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_12[0][0]
activation_3 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_10[0][0]
conv2d_13 (Conv2D)                           (None, 128, 128, 64)    36928    activation_3[0][0]
batch_normalization_11 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_13[0][0]
add_2 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_11[0][0], add_1[0][0]
conv2d_14 (Conv2D)                           (None, 128, 128, 64)    36928    add_2[0][0]
batch_normalization_12 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_14[0][0]
activation_4 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_12[0][0]
conv2d_15 (Conv2D)                           (None, 128, 128, 64)    36928    activation_4[0][0]
batch_normalization_13 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_15[0][0]
add_3 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_13[0][0], add_2[0][0]
conv2d_16 (Conv2D)                           (None, 128, 128, 64)    36928    add_3[0][0]
batch_normalization_14 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_16[0][0]
activation_5 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_14[0][0]
conv2d_17 (Conv2D)                           (None, 128, 128, 64)    36928    activation_5[0][0]
batch_normalization_15 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_17[0][0]
add_4 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_15[0][0], add_3[0][0]
conv2d_18 (Conv2D)                           (None, 128, 128, 64)    36928    add_4[0][0]
batch_normalization_16 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_18[0][0]
activation_6 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_16[0][0]
conv2d_19 (Conv2D)                           (None, 128, 128, 64)    36928    activation_6[0][0]
batch_normalization_17 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_19[0][0]
add_5 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_17[0][0], add_4[0][0]
conv2d_20 (Conv2D)                           (None, 128, 128, 64)    36928    add_5[0][0]
batch_normalization_18 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_20[0][0]
activation_7 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_18[0][0]
conv2d_21 (Conv2D)                           (None, 128, 128, 64)    36928    activation_7[0][0]
batch_normalization_19 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_21[0][0]
add_6 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_19[0][0], add_5[0][0]
conv2d_22 (Conv2D)                           (None, 128, 128, 64)    36928    add_6[0][0]
batch_normalization_20 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_22[0][0]
activation_8 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_20[0][0]
conv2d_23 (Conv2D)                           (None, 128, 128, 64)    36928    activation_8[0][0]
batch_normalization_21 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_23[0][0]
add_7 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_21[0][0], add_6[0][0]
conv2d_24 (Conv2D)                           (None, 128, 128, 64)    36928    add_7[0][0]
batch_normalization_22 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_24[0][0]
activation_9 (Activation)                    (None, 128, 128, 64)    0        batch_normalization_22[0][0]
conv2d_25 (Conv2D)                           (None, 128, 128, 64)    36928    activation_9[0][0]
batch_normalization_23 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_25[0][0]
add_8 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_23[0][0], add_7[0][0]
conv2d_26 (Conv2D)                           (None, 128, 128, 64)    36928    add_8[0][0]
batch_normalization_24 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_26[0][0]
activation_10 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_24[0][0]
conv2d_27 (Conv2D)                           (None, 128, 128, 64)    36928    activation_10[0][0]
batch_normalization_25 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_27[0][0]
add_9 (Add)                                  (None, 128, 128, 64)    0        batch_normalization_25[0][0], add_8[0][0]
conv2d_28 (Conv2D)                           (None, 128, 128, 64)    36928    add_9[0][0]
batch_normalization_26 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_28[0][0]
activation_11 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_26[0][0]
conv2d_29 (Conv2D)                           (None, 128, 128, 64)    36928    activation_11[0][0]
batch_normalization_27 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_29[0][0]
add_10 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_27[0][0], add_9[0][0]
conv2d_30 (Conv2D)                           (None, 128, 128, 64)    36928    add_10[0][0]
batch_normalization_28 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_30[0][0]
activation_12 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_28[0][0]
conv2d_31 (Conv2D)                           (None, 128, 128, 64)    36928    activation_12[0][0]
batch_normalization_29 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_31[0][0]
add_11 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_29[0][0], add_10[0][0]
conv2d_32 (Conv2D)                           (None, 128, 128, 64)    36928    add_11[0][0]
batch_normalization_30 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_32[0][0]
activation_13 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_30[0][0]
conv2d_33 (Conv2D)                           (None, 128, 128, 64)    36928    activation_13[0][0]
batch_normalization_31 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_33[0][0]
add_12 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_31[0][0], add_11[0][0]
conv2d_34 (Conv2D)                           (None, 128, 128, 64)    36928    add_12[0][0]
batch_normalization_32 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_34[0][0]
activation_14 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_32[0][0]
conv2d_35 (Conv2D)                           (None, 128, 128, 64)    36928    activation_14[0][0]
batch_normalization_33 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_35[0][0]
add_13 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_33[0][0], add_12[0][0]
conv2d_36 (Conv2D)                           (None, 128, 128, 64)    36928    add_13[0][0]
batch_normalization_34 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_36[0][0]
activation_15 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_34[0][0]
conv2d_37 (Conv2D)                           (None, 128, 128, 64)    36928    activation_15[0][0]
batch_normalization_35 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_37[0][0]
add_14 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_35[0][0], add_13[0][0]
conv2d_38 (Conv2D)                           (None, 128, 128, 64)    36928    add_14[0][0]
batch_normalization_36 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_38[0][0]
activation_16 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_36[0][0]
conv2d_39 (Conv2D)                           (None, 128, 128, 64)    36928    activation_16[0][0]
batch_normalization_37 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_39[0][0]
add_15 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_37[0][0], add_14[0][0]
conv2d_40 (Conv2D)                           (None, 128, 128, 64)    36928    add_15[0][0]
batch_normalization_38 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_40[0][0]
activation_17 (Activation)                   (None, 128, 128, 64)    0        batch_normalization_38[0][0]
conv2d_41 (Conv2D)                           (None, 128, 128, 64)    36928    activation_17[0][0]
batch_normalization_39 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_41[0][0]
add_16 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_39[0][0], add_15[0][0]
conv2d_42 (Conv2D)                           (None, 128, 128, 64)    36928    add_16[0][0]
batch_normalization_40 (BatchNormalization)  (None, 128, 128, 64)    256      conv2d_42[0][0]
add_17 (Add)                                 (None, 128, 128, 64)    0        batch_normalization_40[0][0], activation_1[0][0]
up_sampling2d_1 (UpSampling2D)               (None, 256, 256, 64)    0        add_17[0][0]
conv2d_43 (Conv2D)                           (None, 256, 256, 256)   147712   up_sampling2d_1[0][0]
activation_18 (Activation)                   (None, 256, 256, 256)   0        conv2d_43[0][0]
up_sampling2d_2 (UpSampling2D)               (None, 512, 512, 256)   0        activation_18[0][0]
conv2d_44 (Conv2D)                           (None, 512, 512, 256)   590080   up_sampling2d_2[0][0]
activation_19 (Activation)                   (None, 512, 512, 256)   0        conv2d_44[0][0]
conv2d_45 (Conv2D)                           (None, 512, 512, 3)     62211    activation_19[0][0]
====================================================================================================
Total params: 2,042,691
Trainable params: 2,038,467
Non-trainable params: 4,224
```

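The generator totals can be reproduced the same way. The kernel sizes here are inferred from the listed counts (15,616 = 9·9·3·64 + 64 for the first conv and 62,211 = 9·9·256·3 + 3 for the last one, 3×3 elsewhere); UpSampling2D, Activation, and Add layers have no parameters. A pure-Python sketch under those assumptions:

```python
def conv2d_params(k, c_in, c_out):
    """Conv2D with bias: k*k*c_in*c_out weights + c_out biases."""
    return k * k * c_in * c_out + c_out

def bn_params(c):
    """BatchNormalization: gamma, beta, moving mean, moving variance per channel."""
    return 4 * c

def generator_params(n_res_blocks=16, f=64):
    """Return (total, trainable, non_trainable) for the generator listed above."""
    total = conv2d_params(9, 3, f)                 # 9x9 input conv
    n_conv_bn = 2 * n_res_blocks + 1               # 2 per residual block + 1 after them
    total += n_conv_bn * (conv2d_params(3, f, f) + bn_params(f))
    non_trainable = n_conv_bn * 2 * f              # BN moving mean + moving variance
    total += conv2d_params(3, f, 256)              # conv after the 1st upsampling
    total += conv2d_params(3, 256, 256)            # conv after the 2nd upsampling
    total += conv2d_params(9, 256, 3)              # 9x9 output conv
    return total, total - non_trainable, non_trainable

print(generator_params())  # (2042691, 2038467, 4224)
```

Changing `n_res_blocks` shows how the size scales with the number of residual blocks B: each block adds 2 × (36,928 + 256) = 74,368 parameters.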

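Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog (wpsshop博客); copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/小丑西瓜9/article/detail/316658?site=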
