
Kafka producer: sending object messages (integrating Kafka with Spring Boot)

Author: 我家小花儿 | 2024-06-12 16:16:29


1. Sending object messages

1.1 Create the JavaBean

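```java
package com.atguigu.kafka.bean;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.Date;

@Data               // getters, setters, equals/hashCode and toString
@NoArgsConstructor  // no-arg constructor (JSON deserializers such as Jackson typically need it)
@AllArgsConstructor // constructor taking all fields in declaration order
public class UserDTO {

    private Long userId;

    private String userName;

    private String phone;

    private Date joinDate;
}
```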


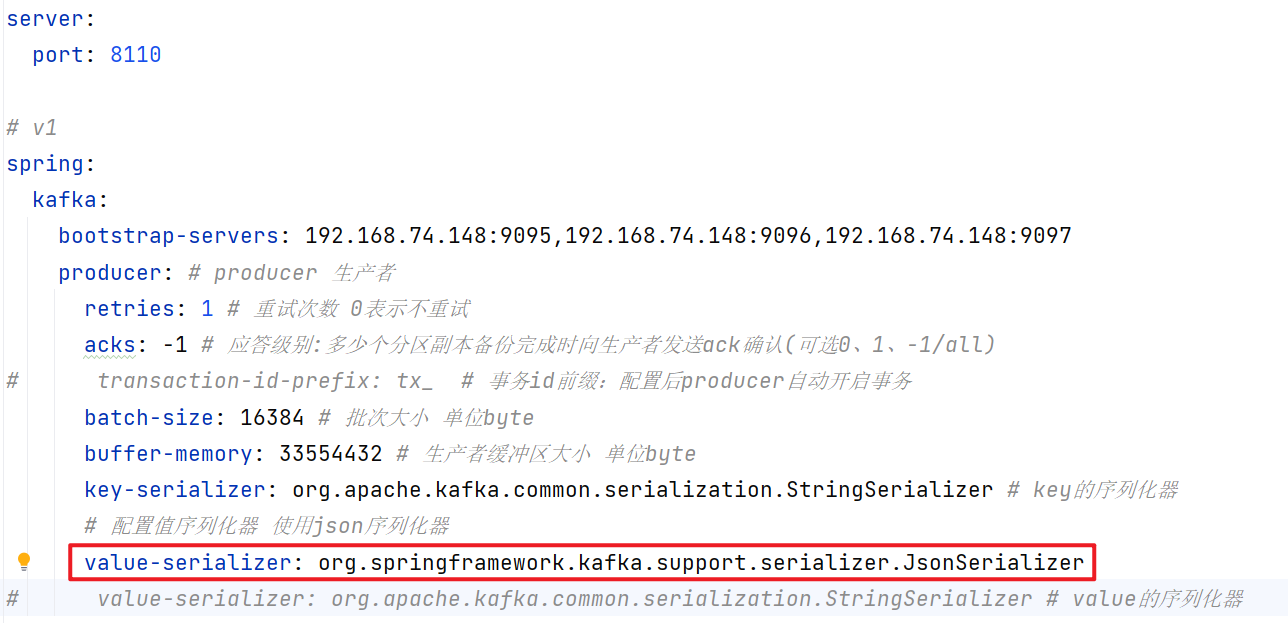
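To make the Lombok annotations less opaque, here is a rough plain-Java equivalent of what `@Data`, `@NoArgsConstructor` and `@AllArgsConstructor` generate for `UserDTO`. It is a hand-written approximation, not Lombok's actual output (Lombok also generates `canEqual()` and uses its own `hashCode` formula), but its `toString()` format matches the `UserDTO(userId=1, ...)` text that appears in the console log in 1.8.

```java
import java.util.Date;
import java.util.Objects;

// Approximation of the Lombok-generated UserDTO (no Lombok needed to compile).
class UserDTO {
    private Long userId;
    private String userName;
    private String phone;
    private Date joinDate;

    // @NoArgsConstructor: JSON deserializers typically create the object this way, then call setters
    public UserDTO() {
    }

    // @AllArgsConstructor: parameters in field declaration order
    public UserDTO(Long userId, String userName, String phone, Date joinDate) {
        this.userId = userId;
        this.userName = userName;
        this.phone = phone;
        this.joinDate = joinDate;
    }

    // @Data: getters (read by JSON serializers) and setters
    public Long getUserId() { return userId; }
    public void setUserId(Long userId) { this.userId = userId; }
    public String getUserName() { return userName; }
    public void setUserName(String userName) { this.userName = userName; }
    public String getPhone() { return phone; }
    public void setPhone(String phone) { this.phone = phone; }
    public Date getJoinDate() { return joinDate; }
    public void setJoinDate(Date joinDate) { this.joinDate = joinDate; }

    // @Data: value-based equality over all fields
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof UserDTO)) return false;
        UserDTO other = (UserDTO) o;
        return Objects.equals(userId, other.userId)
                && Objects.equals(userName, other.userName)
                && Objects.equals(phone, other.phone)
                && Objects.equals(joinDate, other.joinDate);
    }

    @Override
    public int hashCode() {
        return Objects.hash(userId, userName, phone, joinDate);
    }

    // @Data: Lombok's "ClassName(field=value, ...)" toString format
    @Override
    public String toString() {
        return "UserDTO(userId=" + userId + ", userName=" + userName
                + ", phone=" + phone + ", joinDate=" + joinDate + ")";
    }
}
```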

1.2 Change the producer's value serializer in application.yml

```yaml
server:
  port: 8110

# v1
spring:
  kafka:
    bootstrap-servers: 192.168.74.148:9095,192.168.74.148:9096,192.168.74.148:9097
    producer: # producer settings
      retries: 1 # number of retries; 0 disables retries
      acks: -1 # ack level: how many partition replicas must have the record before the broker acks the producer (0, 1 or -1/all)
      # transaction-id-prefix: tx_ # transaction id prefix: once set, the producer becomes transactional
      batch-size: 16384 # batch size in bytes
      buffer-memory: 33554432 # producer buffer size in bytes
      key-serializer: org.apache.kafka.common.serialization.StringSerializer # key serializer
      # value serializer: use the JSON serializer so that objects can be sent
      value-serializer: org.springframework.kafka.support.serializer.JsonSerializer
      # value-serializer: org.apache.kafka.common.serialization.StringSerializer # String value serializer (previous setting)
```


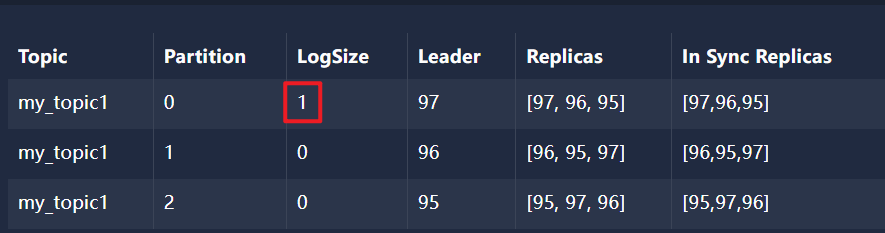
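Why the serializer switch matters: Kafka only transports bytes, so the producer's value serializer must turn a `UserDTO` into a `byte[]`. With `StringSerializer` the value must already be a `String` (sending a `UserDTO` typically fails with a `SerializationException`), whereas `JsonSerializer` writes the object as JSON. The sketch below imitates that with a hand-rolled encoder. `ValueSerializer`, `User`, `UserJsonSerializer` and `Utf8StringSerializer` are hypothetical names; the interface only mirrors the shape of Kafka's `Serializer#serialize(String topic, T data)`, and the JSON layout (declaration order, `Date` as epoch milliseconds) is assumed to match Jackson's defaults, which is what the stored message in 1.8 shows.

```java
import java.nio.charset.StandardCharsets;
import java.util.Date;

// Hypothetical interface with the same shape as Kafka's Serializer#serialize(String, T).
interface ValueSerializer<T> {
    byte[] serialize(String topic, T data);
}

// Minimal stand-in for the object being sent (no Lombok, no Jackson).
record User(Long userId, String userName, String phone, Date joinDate) {}

// Hand-rolled JSON encoder approximating Jackson's default output for User.
class UserJsonSerializer implements ValueSerializer<User> {
    @Override
    public byte[] serialize(String topic, User u) {
        if (u == null) {
            return null; // serializers conventionally map a null value to a null payload
        }
        String joinDate = u.joinDate() == null ? "null" : Long.toString(u.joinDate().getTime());
        String json = "{"
                + "\"userId\":" + u.userId() + ","
                + "\"userName\":" + quote(u.userName()) + ","
                + "\"phone\":" + quote(u.phone()) + ","
                + "\"joinDate\":" + joinDate      // java.util.Date as epoch milliseconds
                + "}";
        return json.getBytes(StandardCharsets.UTF_8); // what actually goes over the wire
    }

    // Escapes backslashes and double quotes only; a real JSON library also escapes control characters.
    private static String quote(String s) {
        if (s == null) {
            return "null";
        }
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }
}

// Counterpart of StringSerializer: it can only handle values that already are Strings.
class Utf8StringSerializer implements ValueSerializer<String> {
    @Override
    public byte[] serialize(String topic, String data) {
        return data == null ? null : data.getBytes(StandardCharsets.UTF_8);
    }
}
```

The type parameter is the whole point: `Utf8StringSerializer` simply cannot accept a `User`, which is the compile-time version of the runtime failure you get when `value-serializer` is left at `StringSerializer`.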

1.3 Create topic my_topic1 in Java code, with 3 partitions and 3 replicas per partition

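```java
package com.atguigu.kafka.config;

import org.apache.kafka.clients.admin.NewTopic;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.config.TopicBuilder;

// Spring Boot's auto-configured KafkaAdmin creates the NewTopic beans defined here on startup
@Configuration
public class KafkaTopicConfig {

    @Bean
    public NewTopic myTopic1() {
        // A topic with a given name is only created once. If it already exists, a larger
        // partition count here is applied as an increase; partitions cannot be reduced,
        // and the replica count of an existing topic is not changed.
        return TopicBuilder.name("my_topic1") // topic name
                .partitions(3)                // number of partitions
                .replicas(3)                  // replicas per partition (needs at least 3 brokers)
                .build();
    }
}
```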
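The rule in the comment above about repeated topic definitions can be modelled in a few lines. This is a simplified, hypothetical sketch of the decision taken for each `NewTopic` bean on startup, based on my understanding of how Spring's `KafkaAdmin` behaves; it is not its source code. It also encodes the constraint that `replicas(3)` needs at least three brokers (the configuration in 1.2 lists three bootstrap addresses).

```java
import java.util.Map;

// Hypothetical model of reconciling a NewTopic definition with the cluster.
class TopicReconciler {

    enum Action { CREATE, INCREASE_PARTITIONS, NO_CHANGE }

    // existingPartitions: current partition count per topic name in the cluster
    static Action plan(Map<String, Integer> existingPartitions, String topic, int desiredPartitions) {
        Integer current = existingPartitions.get(topic);
        if (current == null) {
            return Action.CREATE;              // topic does not exist yet
        }
        if (desiredPartitions > current) {
            return Action.INCREASE_PARTITIONS; // partitions can only grow
        }
        return Action.NO_CHANGE;               // same or smaller count: existing topic left as-is
    }

    // A replication factor larger than the number of brokers cannot be satisfied.
    static boolean replicasFeasible(int replicas, int brokerCount) {
        return replicas >= 1 && replicas <= brokerCount;
    }
}
```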


1.4 Create a producer interceptor

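```java
package com.atguigu.kafka.interceptor;

import org.apache.kafka.clients.producer.ProducerInterceptor;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.springframework.stereotype.Component;

import java.util.Map;

// This interceptor is not picked up automatically: it is registered with the
// producer (KafkaTemplate) manually in the test in 1.6
@Component
public class MyKafkaInterceptor implements ProducerInterceptor<String, Object> {

    // Runs before the producer sends a message: lets you inspect or pre-process the record.
    // partition() is null here unless the sender chose one; the partitioner assigns it later.
    @Override
    public ProducerRecord<String, Object> onSend(ProducerRecord<String, Object> producerRecord) {
        System.out.println("Producer about to send message: topic = " + producerRecord.topic()
                + ",partition:" + producerRecord.partition()
                + ",key = " + producerRecord.key()
                + ",value = " + producerRecord.value());
        // Return the record to send (possibly modified); returning null breaks the interceptor contract
        return producerRecord;
    }

    // Runs after the broker has acknowledged the record, or after the send has failed
    @Override
    public void onAcknowledgement(RecordMetadata recordMetadata, Exception e) {
        // A null exception means the message was sent successfully
        if (e == null) {
            System.out.println("Message sent successfully: topic = " + recordMetadata.topic()
                    + ",partition:" + recordMetadata.partition()
                    + ",offset=" + recordMetadata.offset()
                    + ",timestamp=" + recordMetadata.timestamp());
        } else {
            System.out.println("Message send failed: " + e.getMessage());
        }
    }

    @Override
    public void close() {
    }

    @Override
    public void configure(Map<String, ?> map) {
    }
}
```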
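`onSend` is part of a chain: when several interceptors are configured, each one receives the record returned by the previous one, and the last result is what gets sent. That is why `onSend` should return the (possibly modified) record rather than `null`. The sketch below is a simplified, hypothetical model of that chaining; `Rec` and `InterceptorChain` are made-up names standing in for `ProducerRecord` and the Kafka client's internal interceptor list. Unlike the real client, which logs exceptions thrown by an interceptor and carries on, this model simply fails fast on a `null` result.

```java
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical stand-in for ProducerRecord (partition stays null until the partitioner runs).
record Rec(String topic, Integer partition, String key, Object value) {}

class InterceptorChain {

    // Applies each interceptor in order; the output of one is the input of the next.
    static Rec applyOnSend(List<UnaryOperator<Rec>> interceptors, Rec record) {
        Rec current = record;
        for (UnaryOperator<Rec> interceptor : interceptors) {
            Rec next = interceptor.apply(current);
            if (next == null) {
                throw new IllegalStateException("interceptor returned null");
            }
            current = next;
        }
        return current; // the record that would actually be sent
    }

    // Well-behaved interceptor in the style of MyKafkaInterceptor: log, then pass the record on.
    static UnaryOperator<Rec> logging() {
        return rec -> {
            System.out.println("about to send: topic = " + rec.topic() + ", value = " + rec.value());
            return rec;
        };
    }
}
```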


1.5 Create a producer listener

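```java
package com.atguigu.kafka.listener;

import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.springframework.kafka.support.ProducerListener;
import org.springframework.stereotype.Component;

// Spring Boot's auto-configuration injects this bean into the auto-configured KafkaTemplate,
// in place of its default LoggingProducerListener
@Component
public class MyKafkaProducerListener implements ProducerListener<Object, Object> {

    // When a send counts as successful depends on the producer's acks setting:
    // acks=0: as soon as the message has been sent; the producer does not wait for the broker
    // acks=1: after the partition leader has written it to its log and the broker has acked
    // acks=-1/all: after all in-sync replicas of the partition have it and the broker has acked

    @Override
    public void onSuccess(ProducerRecord<Object, Object> producerRecord, RecordMetadata recordMetadata) {
        // producerRecord.partition() is null unless the sender chose a partition;
        // the partition actually used is available as recordMetadata.partition()
        System.out.println("MyKafkaProducerListener message sent successfully: " + "topic=" + producerRecord.topic()
                + ",partition = " + producerRecord.partition()
                + ",key = " + producerRecord.key()
                + ",value = " + producerRecord.value()
                + ",offset = " + recordMetadata.offset());
    }

    // Failure callback: the listener receives the failed record, so it can be logged or stored
    @Override
    public void onError(ProducerRecord<Object, Object> producerRecord, RecordMetadata recordMetadata, Exception exception) {
        // recordMetadata may be null when the send fails, so check it before reading the offset
        System.out.println("MyKafkaProducerListener message send failed: " + "topic=" + producerRecord.topic()
                + ",partition = " + producerRecord.partition()
                + ",key = " + producerRecord.key()
                + ",value = " + producerRecord.value()
                + ",offset = " + (recordMetadata != null ? recordMetadata.offset() : "n/a"));
        System.out.println("Exception: " + exception.getMessage());
    }
}
```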
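The acks rules in the listener's comments can be written as a small predicate. This is a simplified, hypothetical model (`AcksModel` is not a Kafka class) that ignores timeouts, retries and leader changes. It adds `min.insync.replicas`, a real broker/topic setting the article does not configure, because with `acks=-1`/`all` the broker rejects writes once the in-sync replica set has shrunk below that value.

```java
// Hypothetical model of when a send is treated as acknowledged for each acks setting.
class AcksModel {

    // acks: "0", "1", "-1" or "all"
    // leaderAppended: the partition leader has appended the record to its log
    // isrWithRecord: in-sync replicas (leader included) that have the record
    // isrSize: current size of the in-sync replica set
    // minInsyncReplicas: the min.insync.replicas setting
    static boolean acknowledged(String acks, boolean leaderAppended, int isrWithRecord,
                                int isrSize, int minInsyncReplicas) {
        switch (acks) {
            case "0":
                return true;           // the producer does not wait for the broker at all
            case "1":
                return leaderAppended; // leader only; followers may not have the record yet
            case "-1":
            case "all":
                // every current in-sync replica must have the record, and the ISR
                // must not be smaller than min.insync.replicas
                return isrSize >= minInsyncReplicas && isrWithRecord >= isrSize;
            default:
                throw new IllegalArgumentException("unknown acks value: " + acks);
        }
    }
}
```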


1.6 Send a test message

```java
package com.atguigu.kafka.producer;

import com.atguigu.kafka.bean.UserDTO;
import com.atguigu.kafka.interceptor.MyKafkaInterceptor;
import jakarta.annotation.PostConstruct;
import jakarta.annotation.Resource;
import org.junit.jupiter.api.Test;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.kafka.core.KafkaTemplate;

import java.util.Date;
import java.util.concurrent.TimeUnit;

@SpringBootTest
class KafkaProducerApplicationTests {

    // Inject KafkaTemplate: on startup Spring Boot builds it from the configuration file
    // and registers it in the application context
    @Resource
    KafkaTemplate<String, Object> kafkaTemplate;

    @Resource
    MyKafkaInterceptor myKafkaInterceptor;

    // Register the interceptor with the template once the dependencies have been injected
    @PostConstruct
    public void init() {
        kafkaTemplate.setProducerInterceptor(myKafkaInterceptor);
    }

    @Test
    void contextLoads() throws Exception {
        UserDTO userDTO = new UserDTO(1L, "张三", "13800000000", new Date());
        // send() is asynchronous; wait for the result so the test does not finish before the send does
        kafkaTemplate.send("my_topic1", userDTO).get(10, TimeUnit.SECONDS);
    }
}
```
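`KafkaTemplate#send` is asynchronous: in spring-kafka 3.x it returns a `CompletableFuture`, and the outcome arrives later on a producer thread. The stdlib-only sketch below imitates that with a hypothetical `FakeAsyncSender` whose "result" is just an offset, and shows the blocking pattern a test can use: wait with a timeout and surface failures as exceptions.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical asynchronous sender; not a Kafka or Spring class.
class FakeAsyncSender implements AutoCloseable {
    // daemon thread standing in for the producer's background I/O thread
    private final ExecutorService io = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "fake-producer-io");
        t.setDaemon(true);
        return t;
    });
    private final AtomicLong nextOffset = new AtomicLong();

    // Returns at once; the future completes later with the offset "assigned" to the record
    CompletableFuture<Long> send(String topic, Object value) {
        return CompletableFuture.supplyAsync(() -> {
            if (value == null) {
                throw new IllegalArgumentException("null value for topic " + topic);
            }
            return nextOffset.getAndIncrement();
        }, io);
    }

    @Override
    public void close() {
        io.shutdown();
    }
}

class SendAndWait {
    // orTimeout (Java 9+) fails the future after 10 s; join() rethrows any failure
    // as an unchecked CompletionException whose cause is the original exception
    static long sendBlocking(FakeAsyncSender sender, String topic, Object value) {
        return sender.send(topic, value)
                .orTimeout(10, TimeUnit.SECONDS)
                .join();
    }
}
```

Blocking like this suits a test; in application code, attaching a callback with `whenComplete` is usually preferable to waiting on every send.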


1.7 Add the spring-kafka dependency

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.0.5</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <!-- Generated by https://start.springboot.io -->
    <!-- Chinese documentation for the spring/boot/data/security/cloud frameworks => https://springdoc.cn -->
    <groupId>com.atguigu.kafka</groupId>
    <artifactId>kafka-producer</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>kafka-producer</name>
    <description>kafka-producer</description>
    <properties>
        <java.version>17</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- version managed by the Spring Boot parent -->
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```


1.8 Console output

```
  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::                (v3.0.5)

Producer about to send message: topic = my_topic1,partition:null,key = null,value = UserDTO(userId=1, userName=张三, phone=13800000000, joinDate=Thu Jun 06 20:00:57 CST 2024)
Message sent successfully: topic = my_topic1,partition:0,offset=0,timestamp=1717675257112
MyKafkaProducerListener message sent successfully: topic=my_topic1,partition = null,key = null,value = UserDTO(userId=1, userName=张三, phone=13800000000, joinDate=Thu Jun 06 20:00:57 CST 2024),offset = 0
```


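Viewed in a Kafka client tool, the stored record looks like this; its value is the JSON string written by JsonSerializer:

```json
[
  [
    {
      "partition": 0,
      "offset": 0,
      "msg": "{\"userId\":1,\"userName\":\"张三\",\"phone\":\"13800000000\",\"joinDate\":1717675257046}",
      "timespan": 1717675257112,
      "date": "2024-06-06 12:00:57"
    }
  ]
]
```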
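The timestamps above are epoch milliseconds. Converting the record timestamp shows why the `date` field (`12:00:57`) and the console's `joinDate` (`20:00:57 CST`) differ by eight hours: the former appears to be rendered in UTC and the latter in China Standard Time (UTC+8). `TimestampCheck` is a hypothetical helper that uses only `java.time`.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Renders an epoch-millisecond timestamp as wall-clock time in a given zone.
class TimestampCheck {
    private static final DateTimeFormatter FMT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    static String format(long epochMillis, ZoneId zone) {
        return FMT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }
}
```

For example, `TimestampCheck.format(1717675257112L, ZoneId.of("Asia/Shanghai"))` gives `2024-06-06 20:00:57`, matching the console, while `ZoneOffset.UTC` gives `2024-06-06 12:00:57`, matching the `date` field.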


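Disclaimer: this article was contributed by a user and does not represent the position of the [wpsshop blog]; copyright belongs to the original author, and the site accepts no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/我家小花儿/article/detail/708715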
